Introduction to Lucene Analyzers

1. Overview of the built-in analyzers

(1) Helper class for printing tokens

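    import java.io.IOException;
    import java.io.StringReader;
    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
    import org.apache.lucene.analysis.tokenattributes.PositionIncrementAttribute;
    import org.apache.lucene.analysis.tokenattributes.TypeAttribute;

    public class AnalyzerUtils {

        // Print only the term text of every token the analyzer produces.
        public static void display(String str, Analyzer a) {
            try {
                TokenStream stream = a.tokenStream("content", new StringReader(str));
                CharTermAttribute cta = stream.addAttribute(CharTermAttribute.class);
                while (stream.incrementToken()) {
                    System.out.print(" cta:" + cta);
                }
                System.out.println();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Print position increment, term text, character offsets and type of every token.
        public static void displayAllTokenInfo(String str, Analyzer a) {
            try {
                TokenStream stream = a.tokenStream("content", new StringReader(str));
                PositionIncrementAttribute pia = stream.addAttribute(PositionIncrementAttribute.class);
                OffsetAttribute oa = stream.addAttribute(OffsetAttribute.class);
                CharTermAttribute cta = stream.addAttribute(CharTermAttribute.class);
                TypeAttribute ta = stream.addAttribute(TypeAttribute.class);
                while (stream.incrementToken()) {
                    System.out.println(pia.getPositionIncrement() + ":");
                    System.out.println(cta + "[" + oa.startOffset() + "-" + oa.endOffset() + "]" + ta.type());
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }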

(2) Test

    @Test
    public void test01() {
        // Grammar-based tokenizer, lowercases, removes English stop words
        Analyzer a1 = new StandardAnalyzer(Version.LUCENE_35);
        // Splits on non-letters, lowercases, removes English stop words
        Analyzer a2 = new StopAnalyzer(Version.LUCENE_35);
        // Splits on non-letters and lowercases
        Analyzer a3 = new SimpleAnalyzer(Version.LUCENE_35);
        // Splits on whitespace only
        Analyzer a4 = new WhitespaceAnalyzer(Version.LUCENE_35);
        String txt = "this is my house,I am come from China";
        AnalyzerUtils.display(txt, a1);
        AnalyzerUtils.display(txt, a2);
        AnalyzerUtils.display(txt, a3);
        AnalyzerUtils.display(txt, a4);
    }
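
To make the differences among these analyzers easier to see without running Lucene, the sketch below approximates three of the four in plain Java. It is only an illustration: the class name `AnalyzerComparisonSketch`, its methods, and the shortened stop-word list are assumptions made for this example, not Lucene code. StandardAnalyzer is left out because its grammar-based tokenizer cannot be reduced to a simple split.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical helper, not part of Lucene: imitates the analyzers on simple English input.
final class AnalyzerComparisonSketch {

    // Shortened stand-in for an English stop-word list (assumption for this example).
    static final Set<String> STOP_WORDS =
            new HashSet<>(Arrays.asList("a", "an", "and", "the", "this", "is", "of", "to"));

    // WhitespaceAnalyzer-like: split on whitespace only, keep case and punctuation.
    static List<String> whitespace(String text) {
        List<String> out = new ArrayList<>();
        for (String part : text.trim().split("\\s+")) {
            if (!part.isEmpty()) {
                out.add(part);
            }
        }
        return out;
    }

    // SimpleAnalyzer-like: split on non-letters, then lowercase.
    static List<String> simple(String text) {
        List<String> out = new ArrayList<>();
        for (String part : text.split("[^\\p{L}]+")) {
            if (!part.isEmpty()) {
                out.add(part.toLowerCase(Locale.ROOT));
            }
        }
        return out;
    }

    // StopAnalyzer-like: the simple pipeline followed by stop-word removal.
    static List<String> stop(String text) {
        List<String> out = new ArrayList<>(simple(text));
        out.removeAll(STOP_WORDS);
        return out;
    }

    public static void main(String[] args) {
        String txt = "this is my house,I am come from China";
        System.out.println(whitespace(txt));
        System.out.println(simple(txt));
        System.out.println(stop(txt));
    }
}
```

Note how only the whitespace variant keeps `house,I` as a single token and preserves the capital letters, while the stop variant additionally drops `this` and `is`.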

2. Custom analyzer

(1) Analyzer with a custom stop-word list

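    import java.io.Reader;
    import java.util.Set;
    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.LetterTokenizer;
    import org.apache.lucene.analysis.LowerCaseFilter;
    import org.apache.lucene.analysis.StopFilter;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.util.Version;

    // Declared final because Lucene 3.x expects Analyzer subclasses (or their tokenStream methods) to be final.
    public final class MyStopAnalyzer extends Analyzer {
        private final Set<?> stops;

        public MyStopAnalyzer(String[] sws) {
            // makeStopSet converts the string array into a set; true means ignore case
            stops = StopFilter.makeStopSet(Version.LUCENE_35, sws, true);
        }

        @Override
        public TokenStream tokenStream(String fieldName, Reader reader) {
            // Chain: split on non-letters, then lowercase, then drop the stop words
            return new StopFilter(Version.LUCENE_35,
                    new LowerCaseFilter(Version.LUCENE_35,
                            new LetterTokenizer(Version.LUCENE_35, reader)),
                    stops);
        }
    }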

(2) Test

    @Test
    public void test04() {
        Analyzer a1 = new MyStopAnalyzer(new String[]{"house", "come"});
        String txt = "this is my house,I am come from China";
        AnalyzerUtils.displayAllTokenInfo(txt, a1);
    }
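
What `displayAllTokenInfo` reports for this analyzer can be worked through by hand. The sketch below models the LetterTokenizer, LowerCaseFilter, StopFilter chain in plain Java and records the position increment and character offsets of every surviving token. It is a simplified model under stated assumptions: `StopPipelineSketch` and `Tok` are names invented for this example, and the model assumes that a removed stop word raises the position increment of the next token, which is how StopFilter behaves when position increments are enabled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical model of a letter-tokenize, lowercase, stop-filter chain; not Lucene code.
final class StopPipelineSketch {

    // One emitted token: term text, position increment, start and end offsets.
    static final class Tok {
        final String term;
        final int posInc;
        final int start;
        final int end;

        Tok(String term, int posInc, int start, int end) {
            this.term = term;
            this.posInc = posInc;
            this.start = start;
            this.end = end;
        }

        @Override
        public String toString() {
            return posInc + ":" + term + "[" + start + "-" + end + "]";
        }
    }

    static List<Tok> analyze(String text, Set<String> stopWords) {
        List<Tok> out = new ArrayList<>();
        int skipped = 0; // stop words dropped since the last emitted token
        int i = 0;
        while (i < text.length()) {
            // Anything that is not a letter acts as a separator.
            if (!Character.isLetter(text.charAt(i))) {
                i++;
                continue;
            }
            int start = i;
            while (i < text.length() && Character.isLetter(text.charAt(i))) {
                i++;
            }
            String term = text.substring(start, i).toLowerCase(Locale.ROOT);
            if (stopWords.contains(term)) {
                skipped++; // leave a gap instead of emitting the token
            } else {
                out.add(new Tok(term, 1 + skipped, start, i));
                skipped = 0;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        Set<String> stops = new HashSet<>(Arrays.asList("house", "come"));
        for (Tok t : analyze("this is my house,I am come from China", stops)) {
            System.out.println(t);
        }
    }
}
```

In this model the tokens `i` and `from` get a position increment of 2, because `house` and `come` were removed just before them; the offsets still point into the original string.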

3. Chinese analyzers

(1) paoding (庖丁解牛): no longer in use

(2) mmseg4j: ships with a dictionary based on Sogou's word list; usable

About mmseg4j

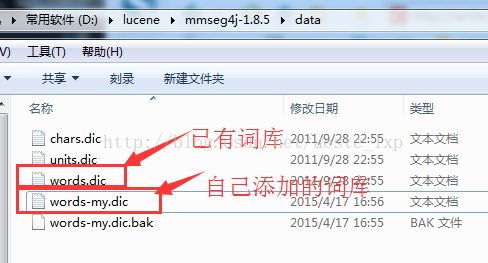

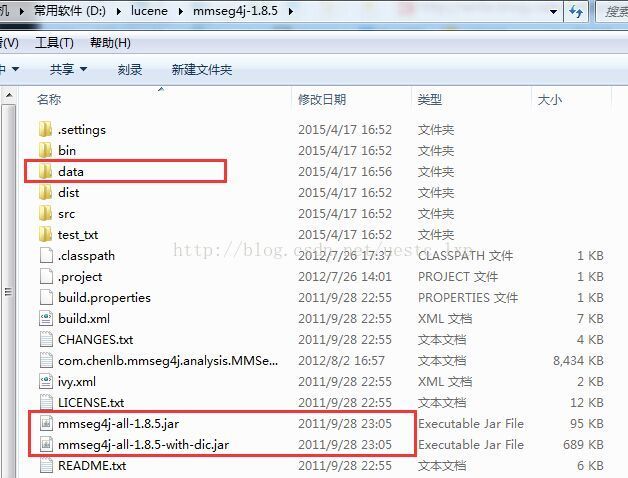

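(1) Download mmseg4j-1.8.5.jar. It comes in two builds, one with the dictionary (dic) bundled and one without. If you use the build without the dictionary, you must specify the dictionary location yourself.

(2) Use MMSegAnalyzer when creating the analyzer; the rest of the code stays unchanged.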

    @Test
    public void test02() {
        String txt = "我来自中国,我爱我的祖国,快乐的小天使";
        // Point MMSegAnalyzer at the directory that holds the dictionary files
        Analyzer a5 = new MMSegAnalyzer("D:\\lucene\\mmseg4j-1.8.5\\data");
        AnalyzerUtils.display(txt, a5);
    }

(3) If a word you expect does not appear in the segmentation result, add it manually to data/words-my.dic under the mmseg4j root directory.
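
mmseg4j segments text by looking words up in its dictionary, so a word that is missing from the dictionary cannot come out as a single token. The sketch below illustrates this with a plain forward maximum matching segmenter in Java. It is a deliberately simplified stand-in, not mmseg4j's actual MMSeg implementation, which applies further disambiguation rules; `MaxMatchSketch` and the tiny in-memory dictionary are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical illustration of dictionary-driven segmentation; not mmseg4j code.
final class MaxMatchSketch {

    // Greedy forward maximum matching: at each position take the longest dictionary
    // word of at most maxWordLen characters, falling back to a single character.
    static List<String> segment(String text, Set<String> dict, int maxWordLen) {
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int end = Math.min(text.length(), i + maxWordLen);
            while (end > i + 1 && !dict.contains(text.substring(i, end))) {
                end--;
            }
            out.add(text.substring(i, end));
            i = end;
        }
        return out;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>(Arrays.asList("快乐", "天使"));
        String txt = "快乐的小天使";
        System.out.println(segment(txt, dict, 4)); // before the word is known
        dict.add("小天使"); // plays the role of adding the word to words-my.dic
        System.out.println(segment(txt, dict, 4)); // after the word is known
    }
}
```

Before the addition the sketch splits 小天使 into 小 and 天使; once the word is in the dictionary it comes out whole. Adding an entry to words-my.dic serves the same purpose for mmseg4j.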