Machine Learning -- Some Parameter Tuning Demos for SVM

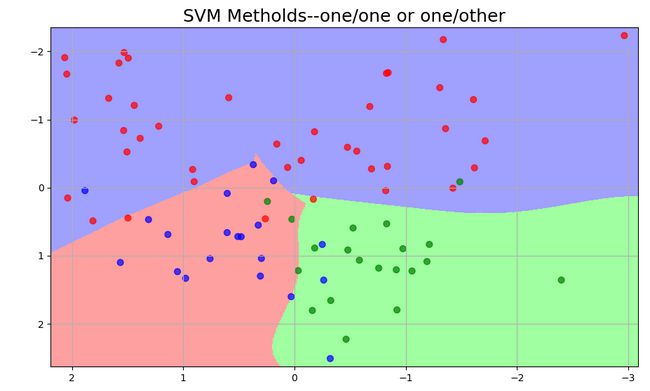

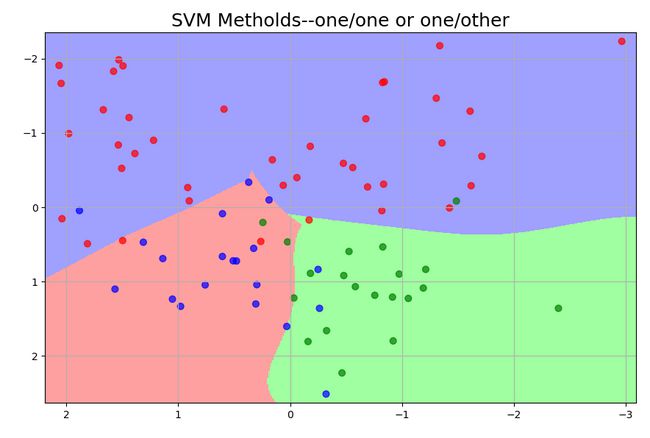

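1. First we generate some sample points. Then we use the SVM's radial basis function (RBF) kernel and run the experiment with both the ovo (one-vs-one) and the ovr (one-vs-rest) setting: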

```python
import numpy as np
from sklearn import svm
from scipy import stats
from sklearn.metrics import accuracy_score
import matplotlib as mpl
import matplotlib.colors
import matplotlib.pyplot as plt


def extend(a, b, r):
    # Widen the interval [a, b] by a factor r around its midpoint
    # (x = b - a; the original a - b swapped the bounds and mirrored the axes)
    x = b - a
    m = (a + b) / 2
    return m - r*x/2, m + r*x/2


if __name__ == '__main__':
    np.random.seed(0)
    N = 20
    x = np.empty((4*N, 2))
    # Four Gaussian clusters, one per class
    means = [(-1, 1), (1, 1), (1, -1), (-1, -1)]
    sigmas = [np.eye(2), 2*np.eye(2), np.diag((1, 2)), np.array(((2, 1), (1, 2)))]
    for i in range(4):
        mn = stats.multivariate_normal(means[i], sigmas[i]*0.3)
        x[i*N:(i+1)*N, :] = mn.rvs(N)
    a = np.array((0, 1, 2, 3)).reshape((-1, 1))
    y = np.tile(a, N).flatten()  # N zeros, then N ones, ... matching the rows of x
    clf = svm.SVC(C=1, kernel='rbf', gamma=1, decision_function_shape='ovr')
    # clf = svm.SVC(C=1, kernel='rbf', gamma=1, decision_function_shape='ovo')
    clf.fit(x, y)
    y_hat = clf.predict(x)
    acc = accuracy_score(y, y_hat)
    np.set_printoptions(suppress=True)
    print('Correct predictions: %d, accuracy: %.3f%%' % (round(acc*4*N), 100*acc))
    print('decision_function:\n', clf.decision_function(x))
    print('y_hat:\n', y_hat)

    # Plotting
    x1_min, x2_min = np.min(x, axis=0)
    x1_max, x2_max = np.max(x, axis=0)
    x1_min, x1_max = extend(x1_min, x1_max, 1.05)
    x2_min, x2_max = extend(x2_min, x2_max, 1.05)
    # Build a 500x500 grid of sample points
    x1, x2 = np.mgrid[x1_min:x1_max:500j, x2_min:x2_max:500j]
    x_test = np.stack((x1.flat, x2.flat), axis=1)
    y_test = clf.predict(x_test)
    y_test = y_test.reshape(x1.shape)
    # One color per class (there are 4 classes, so 4 colors are needed)
    cm_light = mpl.colors.ListedColormap(['#A0FFA0', '#FFA0A0', '#A0A0FF', '#FFFFA0'])
    cm_dark = mpl.colors.ListedColormap(['g', 'r', 'b', 'y'])
    plt.figure(facecolor='w')
    plt.pcolormesh(x1, x2, y_test, cmap=cm_light)
    plt.scatter(x[:, 0], x[:, 1], s=40, c=y, cmap=cm_dark, alpha=0.7)
    plt.xlim((x1_min, x1_max))
    plt.ylim((x2_min, x2_max))
    plt.grid(True)
    plt.tight_layout(pad=2.5)
    plt.title('SVM Methods: one-vs-one or one-vs-rest', fontsize=18)
    plt.show()
```

ovo:

ovr:

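No difference is visible between the two results, and none should be expected. `svm.SVC` always trains one-vs-one classifiers internally. `decision_function_shape` only controls the output of `decision_function`:

- `'ovo'` returns the raw pairwise scores, of shape (n_samples, n_classes*(n_classes-1)/2).
- `'ovr'` returns the scores aggregated into one column per class.

The predictions are identical either way. In general, which multi-class strategy works better depends on the dataset.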
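The sketch below checks this. It assumes the same four-cluster data as the first script. First it fits `SVC` with both `decision_function_shape` settings. Then it wraps the same SVC in scikit-learn's `OneVsRestClassifier` and `OneVsOneClassifier` meta-estimators, which really do train different sets of binary classifiers. The variable names are illustrative.

```python
import numpy as np
from scipy import stats
from sklearn import svm
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

# Same four Gaussian clusters as the first script (4 classes x 20 points)
np.random.seed(0)
N = 20
means = [(-1, 1), (1, 1), (1, -1), (-1, -1)]
sigmas = [np.eye(2), 2*np.eye(2), np.diag((1, 2)), np.array(((2, 1), (1, 2)))]
x = np.vstack([stats.multivariate_normal(means[i], sigmas[i]*0.3).rvs(N) for i in range(4)])
y = np.repeat(np.arange(4), N)

# decision_function_shape only reshapes the output; both models are trained one-vs-one
svc_ovo = svm.SVC(C=1, kernel='rbf', gamma=1, decision_function_shape='ovo').fit(x, y)
svc_ovr = svm.SVC(C=1, kernel='rbf', gamma=1, decision_function_shape='ovr').fit(x, y)
print('SVC ovo decision_function shape:', svc_ovo.decision_function(x).shape)  # one column per class pair
print('SVC ovr decision_function shape:', svc_ovr.decision_function(x).shape)  # one column per class
print('identical predictions:', np.array_equal(svc_ovo.predict(x), svc_ovr.predict(x)))

# Genuinely different strategies: the wrappers clone the base SVC and fit separate binary problems
base = svm.SVC(C=1, kernel='rbf', gamma=1)
ovr = OneVsRestClassifier(base).fit(x, y)  # one "class k vs. the rest" SVM per class
ovo = OneVsOneClassifier(base).fit(x, y)   # one SVM per pair of classes
print('OneVsRest binary SVMs:', len(ovr.estimators_))
print('OneVsOne binary SVMs:', len(ovo.estimators_))
print('OvR train acc: %.3f, OvO train acc: %.3f' % (ovr.score(x, y), ovo.score(x, y)))
```

Of the two wrappers, only `OneVsRestClassifier` fits a model that differs from plain `SVC`. To compare the two strategies, compare the wrapped estimators rather than toggling the `decision_function_shape` flag.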

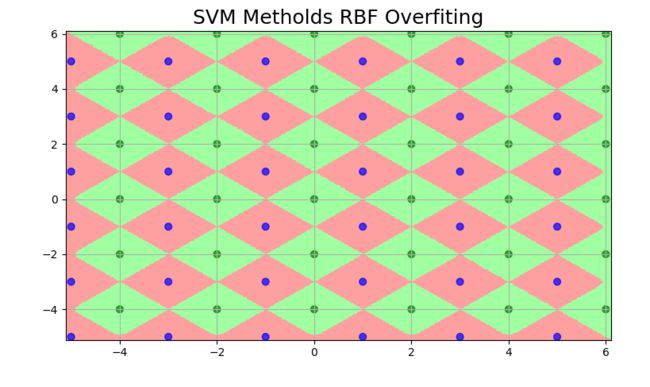

code2:

```python
import numpy as np
from sklearn import svm
import matplotlib as mpl
import matplotlib.colors
import matplotlib.pyplot as plt


def extend(a, b):
    # Pad the interval [a, b] by 1% of its width on each side
    return (1.01*a - 0.01*b), (1.01*b - 0.01*a)


if __name__ == '__main__':
    # Two interleaved 6x6 lattices: class 1, and the same lattice shifted by (1, 1) as class -1
    t = np.linspace(-5, 5, 6)
    t1, t2 = np.meshgrid(t, t)
    x1 = np.stack((t1.ravel(), t2.ravel()), axis=1)
    N = len(x1)
    x2 = x1 + (1, 1)
    x = np.concatenate((x1, x2))
    y = np.array([1]*N + [-1]*N)
    # A large gamma makes each point's kernel very narrow, which leads to overfitting
    clf = svm.SVC(C=0.1, kernel='rbf', gamma=5)
    clf.fit(x, y)
    y_hat = clf.predict(x)
    print('Accuracy: %.3f%%' % (np.mean(y_hat == y) * 100))

    # Plotting
    cm_light = mpl.colors.ListedColormap(['#A0FFA0', '#FFA0A0'])
    cm_dark = mpl.colors.ListedColormap(['g', 'b'])
    x1_min, x1_max = extend(x[:, 0].min(), x[:, 0].max())
    x2_min, x2_max = extend(x[:, 1].min(), x[:, 1].max())
    # Build a 500x500 grid of sample points
    x1, x2 = np.mgrid[x1_min:x1_max:500j, x2_min:x2_max:500j]
    x_test = np.stack((x1.flat, x2.flat), axis=1)
    y_test = clf.predict(x_test)
    y_test = y_test.reshape(x1.shape)
    plt.figure(facecolor='w')
    plt.pcolormesh(x1, x2, y_test, cmap=cm_light)
    plt.scatter(x[:, 0], x[:, 1], s=40, c=y, cmap=cm_dark, alpha=0.7)
    plt.xlim((x1_min, x1_max))
    plt.ylim((x2_min, x2_max))
    plt.grid(True)
    plt.tight_layout(pad=2.5)
    plt.title('SVM Methods: RBF Overfitting', fontsize=18)
    plt.show()
```
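To see the overfitting in numbers rather than only in the plot, one option is to sweep gamma. For each value, compare training accuracy with accuracy on slightly perturbed copies of the training points. The jittered points (Gaussian noise, std 0.3) are a hypothetical held-out set introduced for this sketch and are not part of the original demo. C stays at 0.1 as in code2.

```python
import numpy as np
from sklearn import svm

# Training data: the same two interleaved 6x6 lattices as code2
t = np.linspace(-5, 5, 6)
t1, t2 = np.meshgrid(t, t)
grid = np.stack((t1.ravel(), t2.ravel()), axis=1)
x = np.concatenate((grid, grid + (1, 1)))
y = np.array([1]*len(grid) + [-1]*len(grid))

# Hypothetical held-out set: every training point jittered by small Gaussian noise, label kept.
# A model that has learned the two lattices should still classify most of these correctly.
rng = np.random.default_rng(0)
x_new = x + rng.normal(scale=0.3, size=x.shape)

results = {}
for gamma in (0.1, 0.5, 1, 5, 50):
    clf = svm.SVC(C=0.1, kernel='rbf', gamma=gamma).fit(x, y)
    train_acc = np.mean(clf.predict(x) == y)
    new_acc = np.mean(clf.predict(x_new) == y)
    results[gamma] = (train_acc, new_acc)
    print('gamma=%-4g train acc=%.3f  jittered acc=%.3f  support vectors=%d'
          % (gamma, train_acc, new_acc, clf.n_support_.sum()))
```

Watch the gap between the two accuracies:

- A very small gamma may underfit this interleaved pattern.
- A very large gamma can fit the lattice points themselves while leaving the space between them close to the decision threshold.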

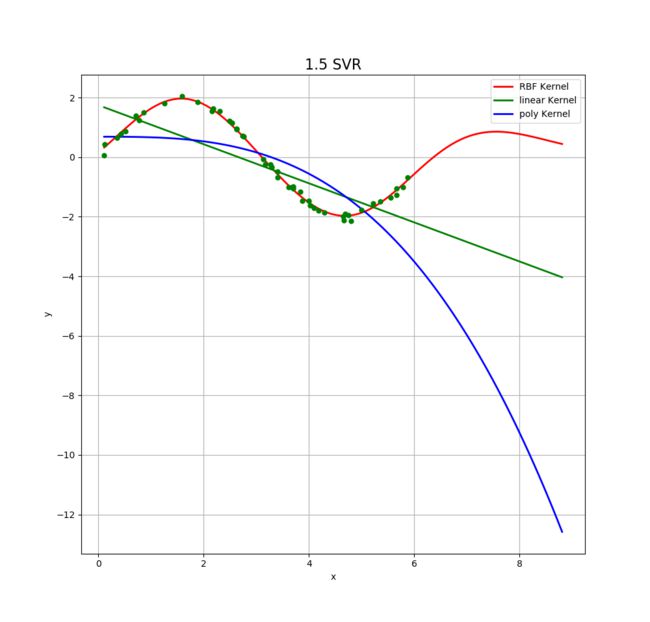

code3:

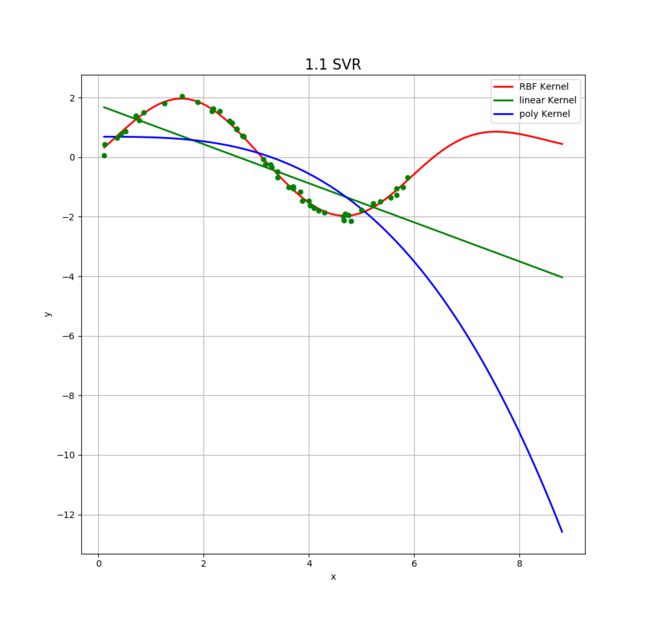

```python
import numpy as np
from sklearn import svm
import matplotlib.pyplot as plt

if __name__ == '__main__':
    N = 50
    np.random.seed(0)
    # Noisy samples of y = 2*sin(x) on [0, 6]
    x = np.sort(np.random.uniform(0, 6, N), axis=0)
    y = 2*np.sin(x) + 0.1*np.random.randn(N)
    x = x.reshape(-1, 1)
    print('x:\n', x)
    print('y:\n', y)
    print('--------SVR RBF---------')
    svr_rbf_model = svm.SVR(kernel='rbf', gamma=0.2, C=100)
    svr_rbf_model.fit(x, y)
    print('--------SVR Linear---------')
    svr_linear_model = svm.SVR(kernel='linear', C=100)
    svr_linear_model.fit(x, y)
    print('--------SVR Polynomial---------')
    svr_poly_model = svm.SVR(kernel='poly', degree=3, C=100)
    svr_poly_model.fit(x, y)
    # Predict on a range extending 50% beyond the training data to show extrapolation
    x_test = np.linspace(x.min(), 1.5*x.max(), 100).reshape(-1, 1)
    y_hat_rbf = svr_rbf_model.predict(x_test)
    y_hat_linear = svr_linear_model.predict(x_test)
    y_hat_poly = svr_poly_model.predict(x_test)
    plt.figure(figsize=(10, 10), facecolor='w')
    # To mark the RBF model's support vectors, uncomment:
    # sp = svr_rbf_model.support_
    # plt.scatter(x[sp], y[sp], s=120, c='r', marker='*', label='Support Vectors', zorder=3)
    plt.plot(x_test, y_hat_rbf, 'r-', lw=2, label='RBF Kernel')
    plt.plot(x_test, y_hat_linear, 'g-', lw=2, label='Linear Kernel')
    plt.plot(x_test, y_hat_poly, 'b-', lw=2, label='Poly Kernel')
    plt.plot(x, y, 'go', markersize=5)
    plt.legend(loc='upper right')
    plt.title('1.1 SVR', fontsize=16)
    plt.xlabel('x')
    plt.ylabel('y')
    plt.grid(True)
    plt.show()
```

Next we use a dataset, bipartition.txt. It is just generated data with three tab-separated columns: two features and a 0/1 class label.

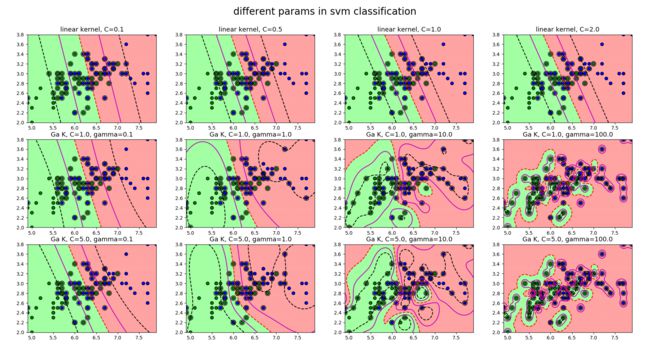

```python
import numpy as np
from sklearn import svm
import matplotlib as mpl
import matplotlib.colors
import matplotlib.pyplot as plt


def show_accuracy(a, b):
    acc = a.ravel() == b.ravel()
    print('accuracy: %.3f%%' % (100*float(acc.sum()) / a.size))


if __name__ == '__main__':
    # Columns: feature 1, feature 2, label (0/1). np.float was removed from NumPy; use float
    data = np.loadtxt('bipartition.txt', dtype=float, delimiter='\t')
    x, y = np.split(data, (2,), axis=1)
    y[y == 0] = -1
    y = y.ravel()
    print(x)
    print(y)
    # Parameters to try: (kernel, C) for linear, (kernel, C, gamma) for RBF
    clf_param = (('linear', 0.1), ('linear', 0.5), ('linear', 1), ('linear', 2),
                 ('rbf', 1, 0.1), ('rbf', 1, 1), ('rbf', 1, 10), ('rbf', 1, 100),
                 ('rbf', 5, 0.1), ('rbf', 5, 1), ('rbf', 5, 10), ('rbf', 5, 100))
    # Plotting range
    x1_min, x1_max = x[:, 0].min(), x[:, 0].max()
    x2_min, x2_max = x[:, 1].min(), x[:, 1].max()
    # Build a 200x200 grid of test points
    x1, x2 = np.mgrid[x1_min:x1_max:200j, x2_min:x2_max:200j]
    grid_test = np.stack((x1.flat, x2.flat), axis=1)
    cm_light = mpl.colors.ListedColormap(['#A0FFA0', '#FFA0A0'])
    cm_dark = mpl.colors.ListedColormap(['g', 'b'])
    plt.figure(figsize=(14, 14), facecolor='w')
    for i, param in enumerate(clf_param):
        if param[0] == 'rbf':
            clf = svm.SVC(C=param[1], kernel='rbf', gamma=param[2])
            title = 'Gaussian kernel, C=%.1f, gamma=%.1f' % (param[1], param[2])
        else:
            clf = svm.SVC(C=param[1], kernel='linear')
            title = 'Linear kernel, C=%.1f' % param[1]
        clf.fit(x, y)
        y_hat = clf.predict(x)
        show_accuracy(y_hat, y)
        print(title)
        print('number of support vectors per class:\n', clf.n_support_)
        print('dual coefficients (y_i * alpha_i) of the support vectors:\n', clf.dual_coef_)
        print('indices of the support vectors:\n', clf.support_)
        # Decision regions, samples, and circled support vectors
        plt.subplot(3, 4, i+1)
        grid_hat = clf.predict(grid_test)
        grid_hat = grid_hat.reshape(x1.shape)
        plt.pcolormesh(x1, x2, grid_hat, cmap=cm_light, alpha=0.8)
        plt.scatter(x[:, 0], x[:, 1], c=y, edgecolor='k', s=40, cmap=cm_dark)
        plt.scatter(x[clf.support_, 0], x[clf.support_, 1], edgecolor='k', facecolor='none', s=100, marker='o')
        # Contours of the decision function: 0 is the boundary, -1 and 1 are the margins
        z = clf.decision_function(grid_test)
        z = z.reshape(x1.shape)
        plt.contour(x1, x2, z, colors=list('kmrmk'), linestyles=['--', '-', '--', '-', '--'],
                    linewidths=[1, 0.5, 1.5, 0.5, 1], levels=[-1, -0.5, 0, 0.5, 1])
        plt.xlim(x1_min, x1_max)
        plt.ylim(x2_min, x2_max)
        plt.title(title, fontsize=13)
    plt.suptitle('Different parameters in SVM classification', fontsize=20)
    plt.tight_layout(pad=2.0)
    plt.subplots_adjust(top=0.90)
    plt.show()
```
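The printed `dual_coef_` holds y_i * alpha_i for each support vector. Together with `support_vectors_` and `intercept_`, it fully determines the decision function:

f(x) = sum_i dual_coef_[0, i] * K(sv_i, x) + intercept_

For the linear kernel this reduces to w = sum_i y_i * alpha_i * sv_i, with a margin of width 2/||w||. The sketch below checks both identities. It uses synthetic `make_blobs` data as a hypothetical stand-in, since bipartition.txt is not reproduced here.

```python
import numpy as np
from sklearn import svm
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel

# Hypothetical stand-in for bipartition.txt: two 2-D Gaussian blobs, labels mapped to -1/+1
x, y = make_blobs(n_samples=100, centers=2, cluster_std=1.5, random_state=0)
y = np.where(y == 0, -1, 1)

# RBF kernel: rebuild f(x) from the support vectors and their dual coefficients
gamma = 0.5
clf = svm.SVC(C=1, kernel='rbf', gamma=gamma).fit(x, y)
K = rbf_kernel(clf.support_vectors_, x, gamma=gamma)  # shape (n_SV, n_samples)
f_manual = clf.dual_coef_ @ K + clf.intercept_        # shape (1, n_samples)
print('manual f(x) matches decision_function:',
      np.allclose(f_manual.ravel(), clf.decision_function(x)))

# Linear kernel: w = sum_i y_i * alpha_i * sv_i, margin width = 2 / ||w||
for C in (0.01, 0.1, 1, 10):
    lin = svm.SVC(C=C, kernel='linear').fit(x, y)
    w = (lin.dual_coef_ @ lin.support_vectors_).ravel()
    print('C=%-5g margin width=%.3f  support vectors=%d'
          % (C, 2 / np.linalg.norm(w), lin.n_support_.sum()))
```

For the soft-margin SVM, ||w|| is non-decreasing in C. The printed width should therefore shrink, or stay flat, as C grows, up to solver tolerance. This is a way to check the "smaller C, wider transition band" rule of thumb on your own data.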

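Tuning summary:

- **Linear kernel, C.** A smaller C gives a wider transition band (margin) and usually better generalization. A larger C penalizes misclassified training points more heavily, so training accuracy rises, but the model may overfit the training set.
- **Gaussian (RBF) kernel, C.** C behaves the same way as with the linear kernel.
- **Gaussian (RBF) kernel, gamma.** gamma controls the kernel width. A smaller gamma gives a smoother boundary, with lower training accuracy but usually better generalization. A larger gamma lets the boundary bend around individual points and risks overfitting, as in code2.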
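In practice, C and gamma are usually chosen by cross-validation rather than by eye. Below is a minimal sketch using scikit-learn's `GridSearchCV`. It searches the same 12 (kernel, C, gamma) combinations as the plotting script above. The `make_blobs` data is a hypothetical stand-in for bipartition.txt.

```python
import numpy as np
from sklearn import svm
from sklearn.datasets import make_blobs
from sklearn.model_selection import GridSearchCV

# Hypothetical stand-in for bipartition.txt: two overlapping 2-D blobs
x, y = make_blobs(n_samples=200, centers=2, cluster_std=2.0, random_state=1)

# Same candidates as clf_param: 4 linear settings plus 2 x 4 RBF settings
param_grid = [
    {'kernel': ['linear'], 'C': [0.1, 0.5, 1, 2]},
    {'kernel': ['rbf'], 'C': [1, 5], 'gamma': [0.1, 1, 10, 100]},
]
search = GridSearchCV(svm.SVC(), param_grid, cv=5)  # 5-fold cross-validated accuracy
search.fit(x, y)
print('best params:', search.best_params_)
print('best CV accuracy: %.3f' % search.best_score_)
for params, score in zip(search.cv_results_['params'], search.cv_results_['mean_test_score']):
    print(params, '%.3f' % score)
```

The score is held-out accuracy averaged over folds, not training accuracy. As a result, large-C or large-gamma settings that fit the training set perfectly are not automatically favored.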