Tensorflow Mask-RCNN(二)——非实时 检测视频

代码参考:https://github.com/Tony607/colab-mask-rcnn

具体安装请见上一篇博客

分两步走:

①把下载好的视频变成一帧一帧的,对每一帧进行detection,把框,label,scores都标在图上,保存成图片

② 把保存好的一帧一帧的图片,合成视频

有些同学可能不太懂怎么获取摄像头

下面的代码是一个小demo——如何获取摄像头或者获取本地视频,并显示在当前窗口上

import cv2

import numpy as np#添加模块和矩阵模块

cap=cv2.VideoCapture(0)

#打开摄像头,若打开本地视频,同opencv一样,只需将0换成("×××.avi")

while(1): # get a frame

ret, frame = cap.read() # show a frame

cv2.imshow("capture", frame)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

cv2.destroyAllWindows()import cv2

import numpy as np

# 定义随机颜色函数

def random_colors(N):

np.random.seed(1)

colors=[tuple(255 * np.random.rand(3)) for _ in range(N)]

return colors

def apply_mask(image,mask,color,alpha=0.5):

for n, c in enumerate(color):

image[:, :, n] = np.where(

mask == 1,

image[:, :, n] * (1 - alpha) + alpha * c,

image[:, :, n]

)

return image

def display_instances(image, boxes, masks, ids, names, scores):

"""

take the image and results and apply the mask, box, and Label

"""

n_instances = boxes.shape[0]

colors = random_colors(n_instances)

if not n_instances:

print('NO INSTANCES TO DISPLAY')

else:

assert boxes.shape[0] == masks.shape[-1] == ids.shape[0]

for i, color in enumerate(colors):

if not np.any(boxes[i]):

continue

y1, x1, y2, x2 = boxes[i]

label = names[ids[i]]

score = scores[i] if scores is not None else None

caption = '{} {:.2f}'.format(label, score) if score else label

mask = masks[:, :, i]

image = apply_mask(image, mask, color)

image = cv2.rectangle(image, (x1, y1), (x2, y2), color, 2)

image = cv2.putText(

image, caption, (x1, y1), cv2.FONT_HERSHEY_COMPLEX, 0.7, color, 2

)

return image

if __name__ == '__main__':

"""

test everything

"""

import os

import sys

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

import coco

# We use a K80 GPU with 24GB memory, which can fit 3 images.

batch_size = 3

#ROOT_DIR = os.getcwd()

ROOT_DIR =os.path.abspath("../") # 根目录的地址

MODEL_DIR = os.path.join(ROOT_DIR, "logs")

VIDEO_DIR = os.path.join(ROOT_DIR, "videos") #原始视频存放的文件夹

VIDEO_SAVE_DIR = os.path.join(VIDEO_DIR, "save2") #每一帧图片所存放的文件夹

COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")

if not os.path.exists(COCO_MODEL_PATH):

utils.download_trained_weights(COCO_MODEL_PATH)

class InferenceConfig(coco.CocoConfig):

GPU_COUNT = 1

IMAGES_PER_GPU = batch_size

config = InferenceConfig()

config.display()

model = modellib.MaskRCNN(

mode="inference", model_dir=MODEL_DIR, config=config

)

model.load_weights(COCO_MODEL_PATH, by_name=True)

class_names = [

'BG', 'person', 'bicycle', 'car', 'motorcycle', 'airplane',

'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird',

'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear',

'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie',

'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',

'kite', 'baseball bat', 'baseball glove', 'skateboard',

'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup',

'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',

'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed',

'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote',

'keyboard', 'cell phone', 'microwave', 'oven', 'toaster',

'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors',

'teddy bear', 'hair drier', 'toothbrush'

]

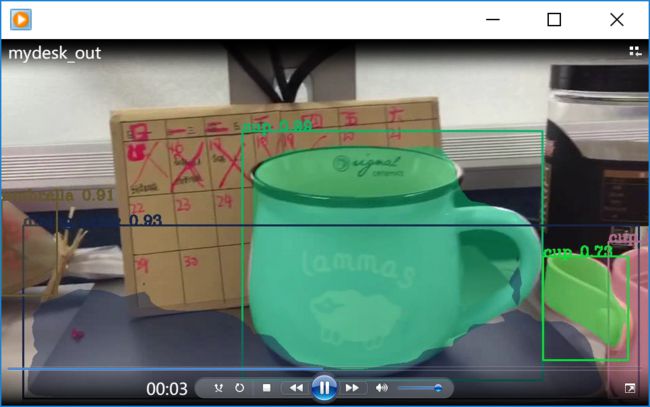

capture = cv2.VideoCapture(os.path.join(VIDEO_DIR, 'mydesk.mp4')) #这里是输入视频的文件名

try:

if not os.path.exists(VIDEO_SAVE_DIR):

os.makedirs(VIDEO_SAVE_DIR)

except OSError:

print ('Error: Creating directory of data')

frames = []

frame_count = 0

# these 2 lines can be removed if you dont have a 1080p camera.

capture.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)

capture.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)

while True:

ret, frame = capture.read()

# Bail out when the video file ends

if not ret:

break

# Save each frame of the video to a list

frame_count += 1

frames.append(frame)

print('frame_count :{0}'.format(frame_count))

if len(frames) == batch_size:

results = model.detect(frames, verbose=0)

print('Predicted')

for i, item in enumerate(zip(frames, results)):

frame = item[0]

r = item[1]

frame = display_instances(

frame, r['rois'], r['masks'], r['class_ids'], class_names, r['scores']

)

name = '{0}.jpg'.format(frame_count + i - batch_size)

name = os.path.join(VIDEO_SAVE_DIR, name)

cv2.imwrite(name, frame)

print('writing to file:{0}'.format(name))

# Clear the frames array to start the next batch

frames = []

capture.release()

会在save2中生成每一帧图片对应的检测结果:

第二步:将文件夹中的每一帧图片合为一个视频

def make_video(outvid, images=None, fps=30, size=None,

is_color=True, format="FMP4"):

"""

Create a video from a list of images.

@param outvid output video

@param images list of images to use in the video

@param fps frame per second

@param size size of each frame

@param is_color color

@param format see http://www.fourcc.org/codecs.php

@return see http://opencv-python-tutroals.readthedocs.org/en/latest/py_tutorials/py_gui/py_video_display/py_video_display.html

The function relies on http://opencv-python-tutroals.readthedocs.org/en/latest/.

By default, the video will have the size of the first image.

It will resize every image to this size before adding them to the video.

"""

from cv2 import VideoWriter, VideoWriter_fourcc, imread, resize

fourcc = VideoWriter_fourcc(*format)

vid = None

for image in images:

if not os.path.exists(image):

raise FileNotFoundError(image)

img = imread(image)

if vid is None:

if size is None:

size = img.shape[1], img.shape[0]

vid = VideoWriter(outvid, fourcc, float(fps), size, is_color)

if size[0] != img.shape[1] and size[1] != img.shape[0]:

img = resize(img, size)

vid.write(img)

#vid.release()

return vid

import glob

import os

# Directory of images to run detection on

ROOT_DIR =os.path.abspath("../")

VIDEO_DIR = os.path.join(ROOT_DIR, "videos")

VIDEO_SAVE_DIR = os.path.join(VIDEO_DIR, "save2")

images = list(glob.iglob(os.path.join(VIDEO_SAVE_DIR, '*.*')))

# Sort the images by integer index

images = sorted(images, key=lambda x: float(os.path.split(x)[1][:-3]))

outvid = os.path.join(VIDEO_DIR, "mydesk_out.mp4")

make_video(outvid, images, fps=30)

print('make video success')

生成一个mydesk_out.mp4的文件夹