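Machine Learning in Action: KNN in Practice

KNN in Practice

- 1. General workflow of the KNN algorithm
  - 1. Collect data: any method can be used
  - 2. Prepare data: numeric values are needed for the distance calculation, preferably in a structured data format
  - 3. Analyze data: any method can be used
  - 4. Train the algorithm: this step does not apply to KNN
  - 5. Test the algorithm: compute the error rate
  - 6. Use the algorithm: first obtain input sample data and structured output, then run KNN to decide which class each input belongs to, and finally apply follow-up processing to the computed classes
- 2. The dating problem
- 3. Creating scatter plots with Matplotlib
- 4. Normalizing values
- 5. Classifying handwritten digit data

Notes from studying *Machine Learning in Action*.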

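1. General workflow of the KNN algorithm

1. Collect data: any method can be used

2. Prepare data: numeric values are needed for the distance calculation, preferably in a structured data format

Create a file named KNN.py that holds the data and the labels.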

    # _*_ encoding=utf8 _*_
    from numpy import *
    import operator

    def createDataSet():
        # Four 2-D sample points; the outer brackets make this a single 4x2 array
        group = array([[1.1, 1.0], [1.0, 1.0], [0, 0], [0, 0.1]])
        labels = ['A', 'A', 'B', 'B']
        return group, labels

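3. Analyze data: any method can be used

The four points clearly fall into two classes: two points labeled A and two points labeled B.

4. Train the algorithm: this step does not apply to KNN

The KNN idea in pseudocode:

For each point in the data set whose class is unknown, perform the following steps in turn:

(1) Compute the distance between the current point and every point in the data set of known classes;

(2) Sort the points by increasing distance;

(3) Select the k points closest to the current point;

(4) Determine how frequently each class occurs among these k points;

(5) Return the most frequent class among these k points as the predicted class of the current point.

KNN in Python: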

    def classify(inX, dataSet, labels, k):
        dataSetSize = dataSet.shape[0]
        print(dataSetSize)  # size of the first dimension; for a 4x2 data set this is 4
        # tile repeats inX dataSetSize times along the rows and once along the columns,
        # so inX = [0, 0] becomes a (4, 2) matrix that lines up with dataSet.
        # The next few lines compute the Euclidean distance from inX to every sample.
        diffMat = tile(inX, (dataSetSize, 1)) - dataSet
        sqDiffMat = diffMat ** 2
        # axis=1 sums across each row (one total per sample); axis=0 would sum down each column
        sqDistances = sqDiffMat.sum(axis=1)
        print(sqDistances)
        distances = sqDistances ** 0.5
        print(distances)
        # argsort returns the indices that sort the distances in ascending order
        sortedDistIndicies = distances.argsort()
        print(sortedDistIndicies)
        classCount = {}
        for i in range(k):
            voteIlabel = labels[sortedDistIndicies[i]]
            # count how often each label occurs among the k nearest samples
            classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
        sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
        print(sortedClassCount[0][0])  # print the predicted class
        return sortedClassCount[0][0]
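The same five steps can also be written without `tile`, because NumPy broadcasting subtracts a single point from every row of the data set. The following is only a sketch of that alternative, not the book's code: the name `classify_broadcast` and the use of `collections.Counter` for the vote are choices made here for illustration.

```python
import numpy as np
from collections import Counter

def classify_broadcast(in_x, data_set, labels, k):
    """Hypothetical variant of classify() that relies on broadcasting."""
    # (n, d) - (d,) broadcasts the query point across all n rows
    diff = np.asarray(data_set) - np.asarray(in_x)
    distances = np.sqrt((diff ** 2).sum(axis=1))
    # indices of the k smallest distances
    nearest = distances.argsort()[:k]
    # majority vote among the k nearest labels
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

group = np.array([[1.1, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
labels = ['A', 'A', 'B', 'B']
prediction_origin = classify_broadcast([0, 0], group, labels, 3)
prediction_corner = classify_broadcast([1, 1], group, labels, 3)
```

With k=3, the point `[0, 0]` has two B neighbors and one A neighbor, while `[1, 1]` has two A neighbors and one B neighbor, so the two predictions differ.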

5. Test the algorithm: compute the error rate

Check the classification result:

    if __name__ == '__main__':
        group, labels = createDataSet()
        classify([0, 0], group, labels, 3)
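To check the printed intermediate values by hand, the distance arithmetic for the query point `[0, 0]` can be reproduced step by step. This is a small sketch using the four sample points from above; the variable names are chosen here for illustration only.

```python
import numpy as np

points = np.array([[1.1, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
labels = ['A', 'A', 'B', 'B']
query = np.array([0.0, 0.0])

# squared distances: 1.1^2 + 1.0^2, 1.0^2 + 1.0^2, 0, 0.1^2
sq_distances = ((points - query) ** 2).sum(axis=1)
distances = np.sqrt(sq_distances)

# indices ordered from nearest to farthest
order = distances.argsort()

# labels of the 3 nearest points
nearest_labels = [labels[i] for i in order[:3]]
```

The two B points lie at distances 0 and 0.1, far closer than either A point (both farther than 1.4), so the 3 nearest labels contain two B votes and one A vote.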

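6. Use the algorithm: first obtain input sample data and structured output, then run the KNN algorithm to decide which class each input belongs to, and finally apply follow-up processing to the computed classes.

2. The dating problem

Someone using an online dating site wants to find a suitable match. She sorts the people she has dated into three classes:

- People she did not like

- People of average charm

- Very charming people

Which features place a person in one of these classes? She decides based on the values of three characteristics:

- Frequent flyer miles earned per year

- Percentage of time spent playing video games

- Liters of ice cream consumed per week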

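A sample of the data (columns: frequent flyer miles, percentage of time playing video games, liters of ice cream, class label):

    40920 8.326976 0.953952 3
    14488 7.153469 1.673904 2
    26052 1.441871 0.805124 1
    75136 13.147394 0.428964 1
    38344 1.669788 0.134296 1
    72993 10.141740 1.032955 1
    35948 6.830792 1.213192 3
    42666 13.276369 0.543880 3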

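First read the file into memory, storing the training features and the labels separately:

    import codecs

    def file2matrix(filename):
        # Read the whole file once (UTF-8, tab-separated) and close it when done
        with codecs.open(filename, 'r', 'utf-8') as fr:
            lines = fr.readlines()
        numberOfLines = len(lines)
        returnMat = zeros((numberOfLines, 3))  # one row of 3 features per record
        classLabelVector = []
        index = 0
        for line in lines:
            line = line.strip()
            listFromLine = line.split('\t')
            returnMat[index, :] = [float(v) for v in listFromLine[0:3]]
            classLabelVector.append(int(listFromLine[-1]))  # last column is the class label
            index += 1
        return returnMat, classLabelVector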
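For a purely numeric, tab-separated file like this one, `numpy.loadtxt` can do the parsing in a single call. The sketch below is an alternative to the loop above, not the book's method; `load_dating_file` is a hypothetical name, and the two-row temporary file stands in for the real data file.

```python
import os
import tempfile
import numpy as np

def load_dating_file(path):
    """Hypothetical alternative to file2matrix built on numpy.loadtxt."""
    # ndmin=2 keeps the result two-dimensional even for a one-line file
    data = np.loadtxt(path, delimiter='\t', ndmin=2)
    features = data[:, :3]                           # first three columns
    class_labels = data[:, -1].astype(int).tolist()  # last column as int labels
    return features, class_labels

# Write a tiny stand-in file with the first two sample rows
sample = "40920\t8.326976\t0.953952\t3\n14488\t7.153469\t1.673904\t2\n"
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write(sample)

features, class_labels = load_dating_file(tmp.name)
os.remove(tmp.name)
```

This assumes every field in the file is numeric; a file with text labels in the last column would still need the manual loop.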

Take a look at the training data and the labels now held in memory.

Code:

    if __name__ == '__main__':
        # group,labels = createDataSet()
        # classify([0,0],group,labels,3)
        datingDataMat, datingLabels = file2matrix('../data/datingTestSet2.txt')
        print(datingDataMat[:3])
        print(datingLabels[0:20])

Result:

    [[4.092000e+04 8.326976e+00 9.539520e-01]
     [1.448800e+04 7.153469e+00 1.673904e+00]
     [2.605200e+04 1.441871e+00 8.051240e-01]]
    [3, 2, 1, 1, 1, 1, 3, 3, 1, 3, 1, 1, 2, 1, 1, 1, 1, 1, 2, 3]

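With the data, the code, and the labels in place, we can pull one record out of the training data and check whether it is predicted correctly.

Take the last record, shown below, and delete it from the training data file:

    43757 7.882601 1.332446 3

Vote among the 3 nearest points. The test code is as follows:

    if __name__ == '__main__':
        # group,labels = createDataSet()
        # classify([0,0],group,labels,3)
        datingDataMat, datingLabels = file2matrix('../data/datingTestSet2.txt')
        print(datingDataMat[:3])
        print(datingLabels[0:20])
        classify([43757, 7.882601, 1.332446], datingDataMat, datingLabels, 3)

Hmm, the prediction is 1. It seems the information gathered is not enough, so change one line and vote among the 100 nearest points instead:

    classify([43757, 7.882601, 1.332446], datingDataMat, datingLabels, 100)

Nice, the prediction is now 3.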

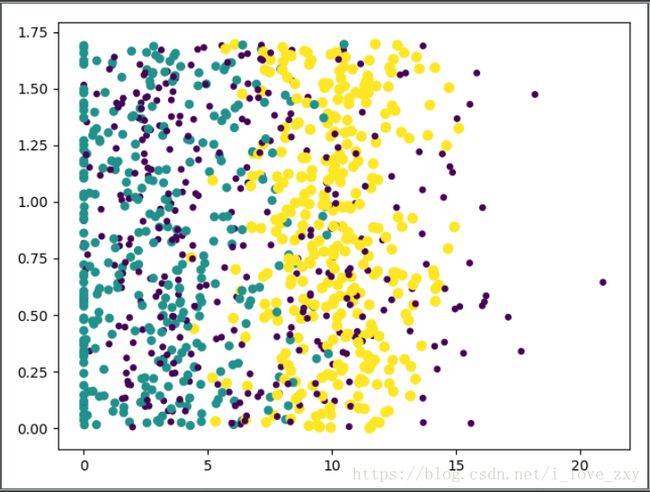

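3. Creating scatter plots with Matplotlib

Using the data just read from the file, look at two values: the percentage of time spent playing video games and the liters of ice cream consumed per week. (The values in the first column are so large that, without rescaling, the other features would be invisible, so the first column is not plotted.)

Code:

    import matplotlib.pyplot as plt

    if __name__ == '__main__':
        datingDataMat, datingLabels = file2matrix('../data/datingTestSet2.txt')
        fig = plt.figure()
        ax = fig.add_subplot(111)
        ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2])
        plt.show()

In the resulting plot, the horizontal axis is the percentage of time spent playing video games and the vertical axis is the liters of ice cream consumed per week.

Because every point has the same color, this plot is not very informative. Now add the class labels and use color to show which class each point belongs to. The plot of the second and third columns then shows the class of each point (disliked, average charm, very charming).

Modified code:

    # the third and fourth arguments set the marker size and color from the class label
    ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2],
               15.0*array(datingLabels), 15.0*array(datingLabels))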

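4. Normalizing values

As noted above, the value range of the first column is far larger than that of the second and third columns, so the first column influences the distance far more than the other two.

Normalization rescales the data into the range 0 to 1. The method used here is:

newValue = (oldValue-min)/(max-min)

That is, (current value - column minimum) / (column maximum - column minimum).

Code:

    def autoNorm(dataSet):
        minVals = dataSet.min(0)  # per-column minimum
        maxVals = dataSet.max(0)  # per-column maximum
        ranges = maxVals - minVals
        normDataSet = zeros(shape(dataSet))
        m = dataSet.shape[0]
        # subtract the column minimum from every row, then divide by the column range
        normDataSet = dataSet - tile(minVals, (m, 1))
        normDataSet = normDataSet / tile(ranges, (m, 1))
        return normDataSet, ranges, minVals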
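To see how much the first column dominates before normalization, one can compare its share of the squared distance between two records before and after rescaling. The sketch below uses the first three sample rows; `min_max_normalize` is a hypothetical broadcasting-based equivalent of `autoNorm`, and it assumes no column is constant (a zero range would divide by zero).

```python
import numpy as np

def min_max_normalize(data):
    """Rescale every column of data to [0, 1]; assumes no column is constant."""
    min_vals = data.min(axis=0)
    ranges = data.max(axis=0) - min_vals
    # broadcasting applies the per-column vectors to every row
    return (data - min_vals) / ranges, ranges, min_vals

sample = np.array([[40920.0, 8.326976, 0.953952],
                   [14488.0, 7.153469, 1.673904],
                   [26052.0, 1.441871, 0.805124]])

# share of the squared distance between rows 0 and 1 that comes from column 0
raw_sq = (sample[0] - sample[1]) ** 2
raw_share = raw_sq[0] / raw_sq.sum()

norm, ranges, min_vals = min_max_normalize(sample)
norm_sq = (norm[0] - norm[1]) ** 2
norm_share = norm_sq[0] / norm_sq.sum()
```

On the raw values the mileage column accounts for practically the entire squared distance; after normalization the other two columns contribute a substantial part. A new query point would need the same treatment, `(query - min_vals) / ranges`, before being compared with normalized training data.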

The data set can also be used for testing. The test function is as follows:

    def datingClassTest():
        hoRatio = 0.50  # hold out 50% of the data for testing
        datingDataMat, datingLabels = file2matrix('../data/datingTestSet2.txt')
        normMat, ranges, minVals = autoNorm(datingDataMat)
        m = normMat.shape[0]
        numTestVecs = int(m*hoRatio)
        errorCount = 0.0
        for i in range(numTestVecs):
            # the first numTestVecs rows are test samples; the remaining rows are the training set
            classifierResult = classify(normMat[i, :], normMat[numTestVecs:m, :], datingLabels[numTestVecs:m], 3)
            print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, datingLabels[i]))
            if (classifierResult != datingLabels[i]): errorCount += 1.0
        print("the total error rate is: %f" % (errorCount/float(numTestVecs)))
        print(errorCount)
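The hold-out scheme itself does not depend on KNN: the first share of the rows is used for testing and the rest for training. As a rough sketch, it can be separated from the classifier by passing the predictor in as a function. The names `holdout_error_rate` and `nearest_label` and the eight synthetic points are assumptions made for this example, and the split assumes the rows are in no particular order.

```python
import numpy as np

def nearest_label(query, train_x, train_y):
    """Hypothetical 1-nearest-neighbor predictor used only for this demo."""
    distances = ((train_x - query) ** 2).sum(axis=1)
    return train_y[int(distances.argmin())]

def holdout_error_rate(x, y, ho_ratio, predict):
    """Test on the first ho_ratio share of the rows, train on the rest."""
    num_test = int(len(x) * ho_ratio)
    errors = 0
    for i in range(num_test):
        if predict(x[i], x[num_test:], y[num_test:]) != y[i]:
            errors += 1
    return errors / num_test

# synthetic data: class 1 clusters near (0, 0), class 2 near (1, 1)
x = np.array([[0.0, 0.0], [1.0, 1.0], [0.1, 0.0], [0.9, 1.0],
              [0.0, 0.1], [1.0, 0.9], [0.1, 0.1], [0.9, 0.9]])
y = [1, 2, 1, 2, 1, 2, 1, 2]

error_rate = holdout_error_rate(x, y, 0.25, nearest_label)
# a predictor that always answers 1 gets one of the two test rows wrong
baseline_rate = holdout_error_rate(x, y, 0.25, lambda q, tx, ty: 1)
```

Comparing against a trivial baseline like the constant predictor is a quick sanity check that a low error rate comes from the classifier and not from the data split.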

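Run the test code:

    if __name__ == '__main__':
        datingClassTest()

Output (the `500` lines appear to be the training-set size printed by `print(dataSetSize)` inside `classify`):

    ...
    the classifier came back with: 2, the real answer is: 2
    500
    the classifier came back with: 1, the real answer is: 1
    500
    the classifier came back with: 1, the real answer is: 1
    the total error rate is: 0.064128

The classification error rate on the dating problem is 6.4128%.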

Full code

    # _*_ encoding=utf8 _*_
    from numpy import *
    import operator
    import codecs
    import matplotlib
    import matplotlib.pyplot as plt

    def createDataSet():
        group = array([[1.1, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
        labels = ['A', 'A', 'B', 'B']
        return group, labels

    def classify(inX, dataSet, labels, k):
        dataSetSize = dataSet.shape[0]
        print(dataSetSize)  # size of the first dimension; for a 4x2 data set this is 4
        # tile repeats inX dataSetSize times along the rows and once along the columns,
        # so inX lines up with dataSet row by row.
        # The next few lines compute the Euclidean distance from inX to every sample.
        diffMat = tile(inX, (dataSetSize, 1)) - dataSet
        sqDiffMat = diffMat ** 2
        # axis=1 sums across each row (one total per sample); axis=0 would sum down each column
        sqDistances = sqDiffMat.sum(axis=1)
        # print(sqDistances)
        distances = sqDistances ** 0.5
        # print(distances)
        # argsort returns the indices that sort the distances in ascending order
        sortedDistIndicies = distances.argsort()
        # print(sortedDistIndicies)
        classCount = {}
        for i in range(k):
            voteIlabel = labels[sortedDistIndicies[i]]
            # count how often each label occurs among the k nearest samples
            classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
        sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
        # print(sortedClassCount[0][0])  # print the predicted class
        return sortedClassCount[0][0]

    def file2matrix(filename):
        # Read the whole file once (UTF-8, tab-separated) and close it when done
        with codecs.open(filename, 'r', 'utf-8') as fr:
            lines = fr.readlines()
        numberOfLines = len(lines)
        returnMat = zeros((numberOfLines, 3))
        classLabelVector = []
        index = 0
        for line in lines:
            line = line.strip()
            listFromLine = line.split('\t')
            returnMat[index, :] = [float(v) for v in listFromLine[0:3]]
            classLabelVector.append(int(listFromLine[-1]))
            index += 1
        return returnMat, classLabelVector

    def autoNorm(dataSet):
        minVals = dataSet.min(0)
        maxVals = dataSet.max(0)
        ranges = maxVals - minVals
        normDataSet = zeros(shape(dataSet))
        m = dataSet.shape[0]
        normDataSet = dataSet - tile(minVals, (m, 1))
        normDataSet = normDataSet / tile(ranges, (m, 1))
        return normDataSet, ranges, minVals

    def datingClassTest():
        hoRatio = 0.50  # hold out 50% of the data for testing
        datingDataMat, datingLabels = file2matrix('../data/datingTestSet2.txt')  # load data set from file
        normMat, ranges, minVals = autoNorm(datingDataMat)
        m = normMat.shape[0]
        numTestVecs = int(m*hoRatio)
        errorCount = 0.0
        for i in range(numTestVecs):
            classifierResult = classify(normMat[i, :], normMat[numTestVecs:m, :], datingLabels[numTestVecs:m], 3)
            print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, datingLabels[i]))
            if (classifierResult != datingLabels[i]): errorCount += 1.0
        print("the total error rate is: %f" % (errorCount/float(numTestVecs)))
        print(errorCount)

    if __name__ == '__main__':
        # group,labels = createDataSet()
        # classify([0,0],group,labels,3)
        # datingDataMat,datingLabels = file2matrix('../data/datingTestSet2.txt')
        # print(datingDataMat[:3])
        # print(datingLabels[0:20])
        # classify([43757,7.882601,1.332446], datingDataMat, datingLabels, 100)
        # fig = plt.figure()
        # ax = fig.add_subplot(111)
        # ax.scatter(datingDataMat[:,1],datingDataMat[:,2],
        #            15.0*array(datingLabels),15.0*array(datingLabels))
        # plt.show()
        datingClassTest()

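5. Classifying handwritten digit data

As with the dating problem, it is enough to build the inputs and the labels (10 classes).

Building the input amounts to reading the files.

Each handwriting file contains a matrix like the one below, which traces out an Arabic numeral.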

    00000000000001111000000000000000
    00000000000011111110000000000000
    00000000001111111111000000000000
    00000001111111111111100000000000
    00000001111111011111100000000000
    00000011111110000011110000000000
    00000011111110000000111000000000
    00000011111110000000111100000000
    00000011111110000000011100000000
    00000011111110000000011100000000
    00000011111100000000011110000000
    00000011111100000000001110000000
    00000011111100000000001110000000
    00000001111110000000000111000000
    00000001111110000000000111000000
    00000001111110000000000111000000
    00000001111110000000000111000000
    00000011111110000000001111000000
    00000011110110000000001111000000
    00000011110000000000011110000000
    00000001111000000000001111000000
    00000001111000000000011111000000
    00000001111000000000111110000000
    00000001111000000001111100000000
    00000000111000000111111000000000
    00000000111100011111110000000000
    00000000111111111111110000000000
    00000000011111111111110000000000
    00000000011111111111100000000000
    00000000001111111110000000000000
    00000000000111110000000000000000
    00000000000011000000000000000000

    # each handwritten digit is a 32*32 grid
    def img2vector(filename):
        returnVect = zeros((1, 1024))
        with open(filename) as fr:
            for i in range(32):
                lineStr = fr.readline()
                for j in range(32):
                    # flatten row i, column j into position 32*i + j
                    returnVect[0, 32*i+j] = int(lineStr[j])
        return returnVect
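The index arithmetic `32*i+j` is easier to check on a small grid. The following sketch applies the same row-major flattening to a 4x4 grid held in a string; `grid_to_vector` and its `size` parameter are hypothetical names introduced here, not part of the original code.

```python
import numpy as np

def grid_to_vector(text, size=32):
    """Flatten a size x size grid of '0'/'1' characters into a (1, size*size) vector."""
    rows = text.split()
    vector = np.zeros((1, size * size))
    for i in range(size):
        for j in range(size):
            # row i, column j lands at position size*i + j
            vector[0, size * i + j] = int(rows[i][j])
    return vector

tiny = "0110\n1001\n1001\n0110"
vec = grid_to_vector(tiny, size=4)
```

Row 1, column 0 of the tiny grid is a `1`, so it ends up at index `4*1 + 0 = 4` of the flattened vector.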

Test code:

    import os

    def handwritingClassTest():
        hwLabels = []
        trainingFileList = os.listdir('../data/trainingDigits')
        m = len(trainingFileList)
        trainingMat = zeros((m, 1024))
        for i in range(m):
            fileNameStr = trainingFileList[i]
            fileStr = fileNameStr.split('.')[0]
            # the digit before the underscore in the file name is the class label
            classNumStr = int(fileStr.split('_')[0])
            hwLabels.append(classNumStr)
            trainingMat[i, :] = img2vector('../data/trainingDigits/%s' % fileNameStr)
        testFileList = os.listdir('../data/testDigits')
        errorCount = 0.0
        mTest = len(testFileList)
        for i in range(mTest):
            fileNameStr = testFileList[i]
            fileStr = fileNameStr.split('.')[0]
            classNumStr = int(fileStr.split('_')[0])
            vectorUnderTest = img2vector('../data/testDigits/%s' % fileNameStr)
            classifierResult = classify(vectorUnderTest, trainingMat, hwLabels, 3)
            print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, classNumStr))
            if (classifierResult != classNumStr):
                errorCount += 1.0
        print("\nthe total number of errors is: %d" % errorCount)
        print("\nthe total error rate is: %f" % (errorCount/float(mTest)))
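The label of each sample comes from its file name: the code keeps the part before the first `.` and then the part before the first `_`. A minimal sketch of that parsing step follows; the helper name `label_from_filename` and the example file names are assumptions for illustration, chosen to match the `digit_index.txt` pattern that the two `split` calls imply.

```python
def label_from_filename(file_name):
    """Return the integer class label encoded before the underscore."""
    stem = file_name.split('.')[0]   # drop the extension
    return int(stem.split('_')[0])   # keep the digit before the underscore

# hypothetical file names following the assumed pattern
example_labels = [label_from_filename(n) for n in ['0_13.txt', '9_45.txt', '3_7.txt']]
```

A file name that does not start with a digit would raise `ValueError` here, which is a quick way to catch stray files in the data directory.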

Result:

    the total number of errors is: 11

    the total error rate is: 0.011628

The error rate is very low, which is a very good result.