```python
# Relative import: this module lives inside scikit-learn's linear_model package.
from .stochastic_gradient import BaseSGDClassifier


class Perceptron(BaseSGDClassifier):

    def __init__(self, penalty=None, alpha=0.0001, fit_intercept=True,
                 max_iter=None, tol=None, shuffle=True, verbose=0, eta0=1.0,
                 n_jobs=None, random_state=0, early_stopping=False,
                 validation_fraction=0.1, n_iter_no_change=5,
                 class_weight=None, warm_start=False, n_iter=None):
        # Perceptron = SGD training with the perceptron loss, a constant
        # learning rate (eta0) and no L1 mixing (l1_ratio=0).
        super(Perceptron, self).__init__(
            loss="perceptron", penalty=penalty, alpha=alpha, l1_ratio=0,
            fit_intercept=fit_intercept, max_iter=max_iter, tol=tol,
            shuffle=shuffle, verbose=verbose, random_state=random_state,
            learning_rate="constant", eta0=eta0, early_stopping=early_stopping,
            validation_fraction=validation_fraction,
            n_iter_no_change=n_iter_no_change, power_t=0.5,
            warm_start=warm_start, class_weight=class_weight, n_jobs=n_jobs,
            n_iter=n_iter)
```

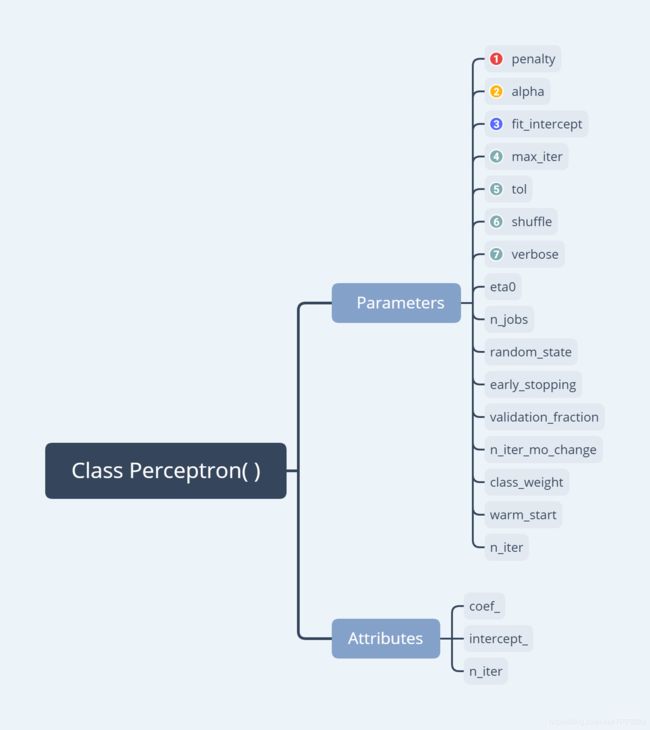

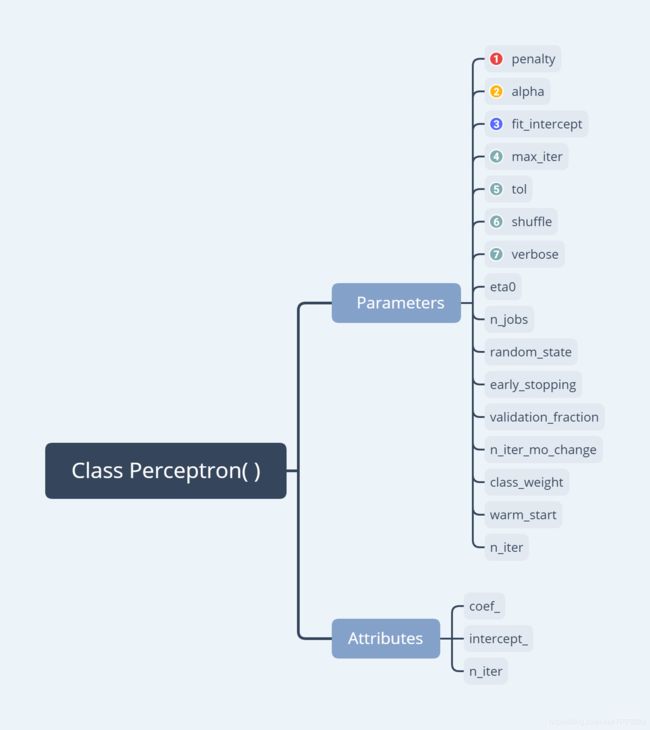

Overview of the parameters

1. penalty

penalty : None, 'l2' or 'l1' or 'elasticnet'

The penalty (aka regularization term) to be used. Defaults to None.

2. alpha

alpha : float

Constant that multiplies the regularization term if regularization is used. Defaults to 0.0001.
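To make `penalty` and `alpha` concrete, here is a minimal pure-Python sketch of the regularization term scaled by `alpha`. It assumes the conventions documented for scikit-learn's SGD models (L2 as half the squared norm, L1 as the sum of absolute values, elasticnet as an `l1_ratio` mix of the two); the function name `regularization` is hypothetical and not part of scikit-learn. Since `Perceptron` passes `l1_ratio=0` to its base class, under these conventions 'elasticnet' reduces to 'l2'.

```python
def regularization(w, penalty=None, alpha=0.0001, l1_ratio=0.0):
    """alpha * R(w) for weights w (hypothetical helper, assumed SGD-style conventions)."""
    l2 = 0.5 * sum(wi * wi for wi in w)  # half the squared L2 norm
    l1 = sum(abs(wi) for wi in w)        # L1 norm
    if penalty is None:
        return 0.0                       # no regularization at all
    if penalty == "l2":
        r = l2
    elif penalty == "l1":
        r = l1
    elif penalty == "elasticnet":
        # convex mix; with l1_ratio=0 (as Perceptron sets it) this equals l2
        r = l1_ratio * l1 + (1.0 - l1_ratio) * l2
    else:
        raise ValueError("penalty must be None, 'l2', 'l1' or 'elasticnet'")
    return alpha * r


w = [3.0, -4.0]
print(regularization(w, "l2", alpha=0.1))  # alpha * 12.5
print(regularization(w, "l1", alpha=0.1))  # alpha * 7
```

A larger `alpha` makes this term weigh more against the perceptron loss, pulling the weights toward zero.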

3. fit_intercept

fit_intercept : bool

Whether the intercept should be estimated or not. If False, the data is assumed to be already centered. Defaults to True.
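A minimal sketch of what `fit_intercept` controls, assuming the usual linear decision function f(x) = w·x + b: with `fit_intercept=False` the bias b stays at 0, so the separating hyperplane must pass through the origin, which is why the data should be centered beforehand. `decision_function` and `center_columns` here are hypothetical helpers, not scikit-learn calls.

```python
def decision_function(w, x, b=0.0):
    """Linear score w.x + b; b stays 0 when no intercept is fitted."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def center_columns(X):
    """Subtract each feature's mean so every column has mean 0."""
    n = len(X)
    means = [sum(col) / n for col in zip(*X)]
    return [[v - m for v, m in zip(row, means)] for row in X], means


X = [[1.0, 10.0], [3.0, 14.0], [5.0, 12.0]]
Xc, means = center_columns(X)
print(means)  # [3.0, 12.0]
print(Xc)     # [[-2.0, -2.0], [0.0, 2.0], [2.0, 0.0]]
```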

4. max_iter

max_iter : int, optional

The maximum number of passes over the training data (aka epochs). It only impacts the behavior in the ``fit`` method, and not the ``partial_fit`` method.

Defaults to 5, or to 1000 if tol is not None. From 0.21 on, the default is 1000.

.. versionadded:: 0.19
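The difference between `fit` and `partial_fit` described above can be sketched with a toy model. This is not scikit-learn's code: the class `ToyEpochModel` is hypothetical and only counts passes, assuming that `fit` starts over and runs up to `max_iter` epochs (ignoring any earlier stop triggered by `tol`), while each `partial_fit` call performs exactly one pass and ignores `max_iter`.

```python
class ToyEpochModel:
    """Hypothetical stand-in that only records how many epochs have run."""

    def __init__(self, max_iter=5):
        self.max_iter = max_iter
        self.epochs_seen = 0

    def _one_epoch(self, X, y):
        # A real model would update its weights on every sample here.
        self.epochs_seen += 1

    def fit(self, X, y):
        self.epochs_seen = 0            # fit starts over ...
        for _ in range(self.max_iter):  # ... and runs up to max_iter passes
            self._one_epoch(X, y)
        return self

    def partial_fit(self, X, y):
        self._one_epoch(X, y)           # exactly one pass; max_iter is ignored
        return self


m = ToyEpochModel(max_iter=5).fit([[0.0]], [1])
print(m.epochs_seen)  # 5
m.partial_fit([[0.0]], [1])
print(m.epochs_seen)  # 6
```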

5. tol

tol : float or None, optional

The stopping criterion. If it is not None, the iterations will stop when (loss > previous_loss - tol). Defaults to None; from 0.21 on, the default is 1e-3.

.. versionadded:: 0.19
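The stopping rule quoted above can be checked on a made-up sequence of per-epoch losses. The sketch applies the documented condition `loss > previous_loss - tol` literally, comparing each epoch with the one before it; the helper name `stopping_epoch` and the loss values are illustrative assumptions, and scikit-learn's actual bookkeeping may differ in detail.

```python
def stopping_epoch(losses, tol=1e-3):
    """Return the 1-based epoch at which training stops, or None if it never does.

    Training stops as soon as loss > previous_loss - tol, i.e. the loss
    failed to drop by more than tol compared with the previous epoch.
    """
    if tol is None:
        return None  # tol=None disables this criterion; max_iter alone decides
    for epoch in range(1, len(losses)):
        if losses[epoch] > losses[epoch - 1] - tol:
            return epoch + 1
    return None


losses = [0.90, 0.50, 0.30, 0.2995, 0.10]
print(stopping_epoch(losses, tol=1e-3))  # 4: 0.2995 is not below 0.30 - 0.001
```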

6. shuffle

shuffle : bool, optional, default True

Whether or not the training data should be shuffled after each epoch.
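A minimal sketch of per-epoch shuffling, assuming a fresh permutation of the sample order is drawn for every epoch (equivalently, after the previous one) from one seeded generator. It uses Python's `random.Random` as a stand-in for NumPy's `RandomState`; `epoch_orders` is a hypothetical helper.

```python
import random


def epoch_orders(n_samples, n_epochs, shuffle=True, seed=0):
    """Return the order in which samples are visited in each epoch."""
    rng = random.Random(seed)
    orders = []
    for _ in range(n_epochs):
        order = list(range(n_samples))
        if shuffle:
            rng.shuffle(order)  # a new permutation every epoch
        orders.append(order)
    return orders


print(epoch_orders(5, 2, shuffle=False))  # [[0, 1, 2, 3, 4], [0, 1, 2, 3, 4]]
print(epoch_orders(5, 2, shuffle=True))   # two permutations of 0..4
```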

7. verbose

verbose : integer, optional

The verbosity level.

8. eta0

eta0 : double

Constant by which the updates are multiplied. Defaults to 1.
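Because the constructor shown at the top passes `loss="perceptron"` and `learning_rate="constant"`, every update is scaled by the same `eta0`. The sketch below shows the classic perceptron update with labels in {-1, +1} and no penalty, assuming a sample triggers an update when its margin y(w·x + b) is not positive; `perceptron_epoch` is a hypothetical helper, not scikit-learn's implementation.

```python
def perceptron_epoch(X, y, w, b, eta0=1.0, fit_intercept=True):
    """One pass of the classic perceptron rule; labels y must be -1 or +1.

    Returns the updated weights, bias and the number of mistakes.
    """
    mistakes = 0
    for xi, yi in zip(X, y):
        margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
        if margin <= 0:  # misclassified or on the boundary: update
            w = [wj + eta0 * yi * xj for wj, xj in zip(w, xi)]
            if fit_intercept:
                b += eta0 * yi
            mistakes += 1
    return w, b, mistakes


X = [[2.0, 1.0], [-1.0, -2.0]]
y = [1, -1]
print(perceptron_epoch(X, y, [0.0, 0.0], 0.0, eta0=1.0))  # ([2.0, 1.0], 1.0, 1)
print(perceptron_epoch(X, y, [0.0, 0.0], 0.0, eta0=0.5))  # ([1.0, 0.5], 0.5, 1)
```

In this sketch, starting from zero weights with no penalty, changing `eta0` only rescales w and b, so the predicted signs stay the same.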

9. n_jobs

n_jobs : int or None, optional (default=None)

The number of CPUs to use to do the OVA (One Versus All, for multi-class problems) computation. ``None`` means 1 unless in a :obj:`joblib.parallel_backend` context. ``-1`` means using all processors. See the scikit-learn Glossary for more details.
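For more than two classes, one-versus-all (OVA) trains one binary perceptron per class; these independent problems are what `n_jobs` spreads across CPUs. The sketch below shows only the bookkeeping under that assumption: each class gets its own ±1 target vector, and prediction picks the class whose binary model scores highest. `ova_targets` and `ova_predict` are hypothetical helpers.

```python
def ova_targets(y):
    """Map each class label to a +1/-1 target vector (one binary task per class)."""
    classes = sorted(set(y))
    return {c: [1 if label == c else -1 for label in y] for c in classes}


def ova_predict(scores):
    """Pick the class whose binary model gives the highest score.

    scores: dict mapping class label -> decision value for one sample.
    """
    return max(scores, key=scores.get)


print(ova_targets(["a", "b", "c", "a"]))
# {'a': [1, -1, -1, 1], 'b': [-1, 1, -1, -1], 'c': [-1, -1, 1, -1]}
print(ova_predict({"a": -0.3, "b": 1.2, "c": 0.4}))  # b
```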

10. random_state

random_state : int, RandomState instance or None, optional, default None

The seed of the pseudo random number generator to use when shuffling the data. If int, random_state is the seed used by the random number generator; if RandomState instance, random_state is the random number generator; if None, the random number generator is the RandomState instance used by `np.random`.
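The three cases for `random_state` (int, generator instance, None) can be mirrored with Python's `random` module. This is a hypothetical helper modeled on the documented behavior, with `random.Random` standing in for NumPy's `RandomState` and a module-level generator standing in for the global `np.random` state; scikit-learn's own utility for this is `sklearn.utils.check_random_state`, which works with NumPy generators.

```python
import random

# Stand-in for NumPy's global np.random state (assumption for this sketch).
_GLOBAL_RNG = random.Random()


def resolve_random_state(random_state):
    """Turn a random_state argument into a generator (hypothetical helper)."""
    if random_state is None:
        return _GLOBAL_RNG                  # None: the shared global generator
    if isinstance(random_state, int):
        return random.Random(random_state)  # int: a fresh generator seeded with it
    if isinstance(random_state, random.Random):
        return random_state                 # instance: used as-is
    raise ValueError(f"{random_state!r} cannot be used as a random_state")


a = resolve_random_state(0).random()
b = resolve_random_state(0).random()
print(a == b)  # True: the same int seed reproduces the same draws
```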

11. early_stopping

early_stopping : bool, default=False

Whether to use early stopping to terminate training when the validation score is not improving. If set to True, it will automatically set aside a fraction of training data as validation and terminate training when the validation score is not improving by at least tol for n_iter_no_change consecutive epochs.

.. versionadded:: 0.20

12. validation_fraction

validation_fraction : float, default=0.1

The proportion of training data to set aside as validation set for early stopping. Must be between 0 and 1. Only used if early_stopping is True.

.. versionadded:: 0.20
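When `early_stopping=True`, a `validation_fraction` share of the training data is held out. A minimal sketch of such a split, assuming a simple random hold-out with the validation size rounded up; `split_validation` is hypothetical, and scikit-learn's own split may differ (for example by stratifying on the class labels).

```python
import math
import random


def split_validation(n_samples, validation_fraction=0.1, seed=0):
    """Return (train_indices, validation_indices) for a random hold-out."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    n_val = math.ceil(n_samples * validation_fraction)
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return sorted(indices[n_val:]), sorted(indices[:n_val])


train, val = split_validation(20, validation_fraction=0.1)
print(len(train), len(val))  # 18 2
```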

13. n_iter_no_change

n_iter_no_change : int, default=5

Number of iterations with no improvement to wait before early stopping.

.. versionadded:: 0.20
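Putting `early_stopping`, `tol` and `n_iter_no_change` together gives a patience loop. This minimal sketch assumes training stops once the validation score has failed to beat the best score so far by at least `tol` for `n_iter_no_change` consecutive epochs; the scores are made up and `early_stop_epoch` is a hypothetical helper.

```python
def early_stop_epoch(val_scores, tol=1e-3, n_iter_no_change=5):
    """Return the 1-based epoch after which training stops, or None."""
    best = float("-inf")
    no_improvement = 0
    for epoch, score in enumerate(val_scores, start=1):
        if score < best + tol:
            no_improvement += 1  # did not beat the best score by at least tol
        else:
            no_improvement = 0   # real improvement: reset the patience counter
        best = max(best, score)
        if no_improvement >= n_iter_no_change:
            return epoch
    return None


scores = [0.70, 0.80, 0.8005, 0.79, 0.80, 0.8008, 0.81]
print(early_stop_epoch(scores, tol=1e-3, n_iter_no_change=3))  # 5
print(early_stop_epoch(scores, tol=1e-3, n_iter_no_change=5))  # None
```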

14. class_weight

class_weight : dict, {class_label: weight} or "balanced" or None, optional

Preset for the class_weight fit parameter.

Weights associated with classes. If not given, all classes are supposed to have weight one.

The "balanced" mode uses the values of y to automatically adjust weights inversely proportional to class frequencies in the input data as ``n_samples / (n_classes * np.bincount(y))``.
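The "balanced" formula above can be reproduced in a few lines of pure Python, with a `Counter` standing in for `np.bincount` so that non-integer labels also work. `balanced_class_weight` is a hypothetical helper; scikit-learn exposes this computation as `sklearn.utils.class_weight.compute_class_weight`.

```python
from collections import Counter


def balanced_class_weight(y):
    """n_samples / (n_classes * count of each class), per the "balanced" mode."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {c: n_samples / (n_classes * counts[c]) for c in sorted(counts)}


print(balanced_class_weight([0, 0, 0, 1]))  # {0: 0.6666666666666666, 1: 2.0}
```

The rare class 1 gets three times the weight of class 0, matching its three-times-lower frequency.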

15. warm_start

warm_start : bool, optional

When set to True, reuse the solution of the previous call to fit as initialization; otherwise, just erase the previous solution. See the scikit-learn Glossary for more details.
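A toy illustration of `warm_start`, assuming the model simply keeps its weight vector between `fit` calls when the flag is set and re-initializes it to zeros otherwise. `ToyWarmStartModel` is hypothetical, and its "training" step (adding 1.0 to every weight, without looking at any data) is a placeholder, not a perceptron update.

```python
class ToyWarmStartModel:
    """Hypothetical model whose fit() just adds 1.0 to every weight."""

    def __init__(self, n_features, warm_start=False):
        self.n_features = n_features
        self.warm_start = warm_start
        self.coef_ = None

    def fit(self):
        if not self.warm_start or self.coef_ is None:
            self.coef_ = [0.0] * self.n_features  # erase the previous solution
        # placeholder "training": continue from whatever coef_ holds now
        self.coef_ = [c + 1.0 for c in self.coef_]
        return self


cold = ToyWarmStartModel(2, warm_start=False)
cold.fit()
cold.fit()
print(cold.coef_)  # [1.0, 1.0]: the second fit started from zeros again

warm = ToyWarmStartModel(2, warm_start=True)
warm.fit()
warm.fit()
print(warm.coef_)  # [2.0, 2.0]: the second fit continued from the first
```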

16. n_iter

n_iter : int, optional

The number of passes over the training data (aka epochs).

Defaults to None. Deprecated, will be removed in 0.21.

.. versionchanged:: 0.19

Deprecated