Implementing Classic Deep Learning Networks in TensorFlow (6): An LSTM-Based Language Model in TensorFlow

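Recurrent neural networks (RNNs) first appeared in the 1980s. Thanks to advances in network architectures and breakthroughs in the efficiency of deep learning training on GPUs, RNNs have achieved great success and wide adoption in natural language processing (NLP). RNNs are very effective on time-series data: each neuron can retain information about earlier inputs through its internal components. In practice RNNs have proven very successful for NLP tasks such as word embeddings, checking whether sentences are well-formed, and part-of-speech tagging. Among RNNs, the most widely used and most successful model today is the LSTM. It usually captures long- and short-term dependencies better than a vanilla RNN, and compared with an ordinary RNN it changes only the hidden layer. LSTMs are described in more detail later. The following is a brief introduction to the use of RNNs in NLP.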

A brief introduction to deep language models:

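Recursive/recurrent neural networks (RNNs)

• Two kinds
  • Recurrent Neural Network: recursion over time
    • For time-series data
  • Recursive Neural Network: recursion over structure
    • For tree-structured data
• Optimization method
  • Back propagation through time (BPTT)
• Long-term memory / recursion depth problems
  • Gradient exploding: handled by gradient clipping
  • Gradient vanishing: handled by specially designed architectures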
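Gradient clipping, the remedy listed above for exploding gradients, is what the PTB code further down does with tf.clip_by_global_norm. The NumPy sketch below is our own re-creation of the global-norm rule as that function documents it: every gradient is multiplied by clip_norm / max(global_norm, clip_norm), so the update direction is kept and only the overall length is capped. The function name mirrors TensorFlow's, but this is not TensorFlow code.

```python
import numpy as np


def clip_by_global_norm(grads, clip_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most clip_norm.

    Returns the clipped list and the global norm measured before clipping.
    """
    global_norm = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    # The scale factor is 1 when the joint norm is already within the limit
    scale = clip_norm / max(global_norm, clip_norm)
    return [g * scale for g in grads], global_norm


grads = [np.array([3.0, 0.0]), np.array([[0.0, 4.0]])]  # joint norm is 5
clipped, norm = clip_by_global_norm(grads, clip_norm=1.0)
print(norm)  # 5.0
```

Scaling all gradients by one shared factor, rather than clipping each tensor separately, preserves the relative sizes of the updates to different parameters.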

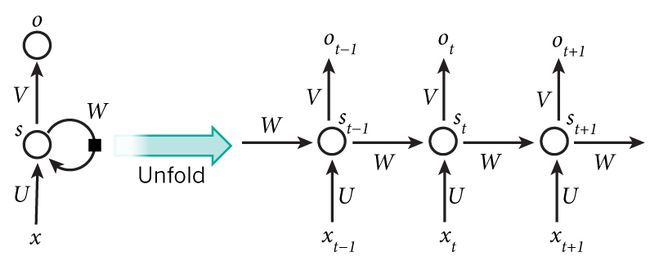

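A recursive network resembles a tree in data structures, and its layers share parameters. In the more commonly used recurrent neural network, every layer (time step) has the same structure and the parameters are fully shared across layers. The folded and unrolled views of an RNN are shown below: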

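Back propagation through time (BPTT)

• An extension of traditional backpropagation (BP) along the time sequence
• The gradient at time t accumulates all gradients from the previous t-1 time steps
• The longer the time span, the more severe the vanishing gradient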
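The last two points can be made concrete with a toy example. For a one-unit RNN h_t = tanh(w·h_{t-1} + x_t), the chain rule gives ∂h_T/∂h_0 as a product of T factors w·(1 - h_t²). The sketch below (a scalar toy model of our own, not part of the PTB code) multiplies those factors. With 0 < w < 1 every factor lies between 0 and w, so the gradient that reaches the first step shrinks geometrically as T grows.

```python
import numpy as np


def grad_through_time(w, xs, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = np.tanh(w * h + x)
        # Chain rule: dh_t/dh_{t-1} = w * (1 - h_t**2)
        grad *= w * (1.0 - h ** 2)
    return grad


for T in (5, 20, 50):
    print(T, grad_through_time(0.9, np.full(T, 0.5)))
```

With w = 0.9 and a constant input of 0.5, each factor settles at roughly 0.25 once the hidden state stabilizes, so the gradient falls by many orders of magnitude between T = 5 and T = 50.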

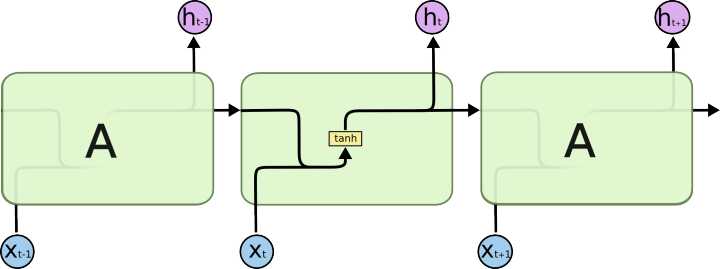

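Problems with the plain (vanilla) RNN

• A single-layer neural network extended over time
• The hidden state at time t-1 takes part in computing the output at time t
• A severe vanishing-gradient problem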
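A vanilla RNN step can be sketched in a few lines of NumPy. The weight names (W_xh, W_hh, W_hy) and the tanh activation are our own illustrative choices. The point is that h_{t-1} enters the computation of h_t, and therefore of the output y_t, so an early input can influence every later output.

```python
import numpy as np


def rnn_forward(xs, h0, W_xh, W_hh, b_h, W_hy, b_y):
    """Run a vanilla RNN over a sequence xs of shape [T, input_dim]."""
    h = h0
    hs, ys = [], []
    for x in xs:
        # The previous hidden state h feeds into the new hidden state
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        ys.append(W_hy @ h + b_y)
        hs.append(h)
    return np.stack(hs), np.stack(ys)


rng = np.random.default_rng(0)
input_dim, hidden_dim, output_dim, T = 3, 4, 2, 5
params = dict(
    W_xh=rng.normal(size=(hidden_dim, input_dim)),
    W_hh=rng.normal(size=(hidden_dim, hidden_dim)),
    b_h=np.zeros(hidden_dim),
    W_hy=rng.normal(size=(output_dim, hidden_dim)),
    b_y=np.zeros(output_dim),
)
xs = rng.normal(size=(T, input_dim))
hs, ys = rnn_forward(xs, np.zeros(hidden_dim), **params)
print(hs.shape, ys.shape)  # (5, 4) (5, 2)
```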

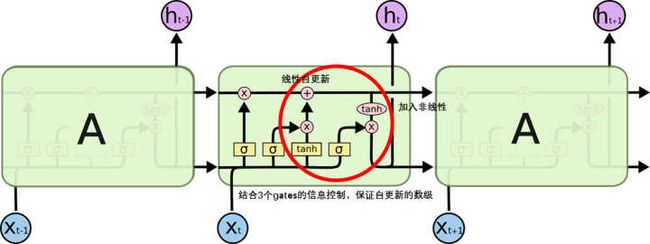

This article mainly uses the LSTM (Long Short-Term Memory) model:

• Proposed by Hochreiter & Schmidhuber in 1997
• Effectively captures long-term dependencies
• Contains 4 groups of neurons
  • 1 memory cell
  • 3 gate neurons
    • Input gate
    • Forget gate
    • Output gate

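The LSTM architecture was designed to solve the vanishing-gradient problem of the original RNN. In essence, though, an LSTM is a special kind of recurrent neural network: it differs from a plain RNN only in how the hidden-layer output s_t at time t is computed. Training an LSTM therefore follows the same approach as training an RNN; only the forward-propagation equations differ. In the implementation below, the initial cell state c[0] and hidden state s[0] are set to zeros with cell.zero_state, and within an epoch each batch starts from the final state of the previous batch. An LSTM (like other gated RNNs) equips a standard RNN with several gates that control magnitude. You can think of this as placing smaller neural networks (the gates) inside the overall RNN; the gates only scale values, regulating how much information flows through.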
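To make the gate description concrete, here is a NumPy sketch of one LSTM time step. It follows the standard formulation: the input gate i, candidate j, forget gate f and output gate o are all computed from [x_t, h_{t-1}]. Adding forget_bias to the forget gate's pre-activation follows our reading of TensorFlow's BasicLSTMCell, which the code below uses with forget_bias=0.0. The single fused weight matrix W and the i, j, f, o ordering are assumptions for illustration, not taken from this article's code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, b, forget_bias=0.0):
    """One LSTM step. W has shape [input_dim + hidden, 4 * hidden]."""
    z = np.concatenate([x, h_prev]) @ W + b
    i, j, f, o = np.split(z, 4)  # pre-activations of the four neuron groups
    # Memory cell: keep part of the old state, add part of the new candidate
    c = c_prev * sigmoid(f + forget_bias) + sigmoid(i) * np.tanh(j)
    # Output gate decides how much of the cell state becomes the hidden state
    h = np.tanh(c) * sigmoid(o)
    return h, c


input_dim, hidden = 3, 2
W = np.zeros((input_dim + hidden, 4 * hidden))
b = np.zeros(4 * hidden)
h, c = lstm_step(np.ones(input_dim), np.zeros(hidden), np.ones(hidden), W, b)
# With all-zero weights every gate is sigmoid(0) = 0.5 and the candidate is
# tanh(0) = 0, so the forget gate simply halves the old cell state.
print(c)  # [0.5 0.5]
```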

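With a basic understanding of LSTM, we can build the network. First download the PTB dataset and extract it into your project directory (the code below reads it from simple-examples/data/). Next download the TensorFlow Models repository, go to models/tutorials/rnn/ptb, and copy reader.py into your project directory. The code below was put together from my own understanding of LSTM networks and existing resources (the book 《TensorFlow实战》, TensorFlow's open-source implementation, and others), with comments added based on that understanding. Please point out any errors in the comments.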
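Before any batching, reader.ptb_raw_data turns each text file into a list of integer word IDs. As far as we can tell from reader.py, it replaces line breaks with an <eos> token, counts word frequencies in the training file, and numbers the words from most to least frequent, breaking ties alphabetically. The two helpers below are a hypothetical re-implementation of that idea for illustration only; the real reader.py functions have different names and read from files.

```python
import collections


def build_vocab(text):
    """Hypothetical helper: map each word to an ID, most frequent words first."""
    words = text.replace("\n", " <eos> ").split()
    counter = collections.Counter(words)
    # Sort by descending count, then alphabetically to break ties
    count_pairs = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    return {word: i for i, (word, _) in enumerate(count_pairs)}


def text_to_ids(text, word_to_id):
    """Hypothetical helper: convert text to word IDs, skipping unknown words."""
    words = text.replace("\n", " <eos> ").split()
    return [word_to_id[w] for w in words if w in word_to_id]


sample = "the cat\nthe dog\n"
vocab = build_vocab(sample)
print(vocab)                       # {'<eos>': 0, 'the': 1, 'cat': 2, 'dog': 3}
print(text_to_ids(sample, vocab))  # [1, 2, 0, 1, 3, 0]
```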

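```python
# -*- coding: utf-8 -*-
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow INFO and WARNING logs

# Import common libraries and load TensorFlow (1.x; this code relies on tf.contrib)
import time

import numpy as np
import tensorflow as tf

import reader  # reader.py copied from models/tutorials/rnn/ptb


# Class that prepares the language model's input data: PTBInput
class PTBInput(object):

    def __init__(self, config, data, name=None):
        self.batch_size = batch_size = config.batch_size
        self.num_steps = num_steps = config.num_steps
        # Number of batches (training iterations) in one epoch
        self.epoch_size = ((len(data) // batch_size) - 1) // num_steps
        # Both tensors have shape [batch_size, num_steps]; the targets are
        # the inputs shifted one word ahead
        self.input_data, self.targets = reader.ptb_producer(
            data, batch_size, num_steps, name=name)
```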
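reader.ptb_producer builds TensorFlow queue ops, which makes its output hard to inspect directly. The NumPy sketch below reproduces the batching as we understand it: the word-ID stream is cut into batch_size parallel rows, each batch takes the next num_steps columns, and the targets are the same window shifted one word to the right. It also shows where the epoch_size formula in PTBInput comes from. The function name ptb_batches is hypothetical.

```python
import numpy as np


def ptb_batches(raw_data, batch_size, num_steps):
    """Yield (inputs, targets) pairs, each of shape [batch_size, num_steps]."""
    data = np.asarray(raw_data)
    batch_len = len(data) // batch_size
    # Lay the stream out as batch_size rows; leftover words are dropped
    data = data[: batch_size * batch_len].reshape(batch_size, batch_len)
    # Same formula as PTBInput.epoch_size: one word is reserved for the last target
    epoch_size = (batch_len - 1) // num_steps
    for i in range(epoch_size):
        x = data[:, i * num_steps:(i + 1) * num_steps]
        y = data[:, i * num_steps + 1:(i + 1) * num_steps + 1]
        yield x, y


batches = list(ptb_batches(np.arange(100), batch_size=4, num_steps=5))
print(len(batches))   # 4, i.e. ((100 // 4) - 1) // 5
print(batches[0][0])  # rows start at words 0, 25, 50 and 75
```

Because each row is a contiguous slice of the text, row b of one batch continues exactly where row b of the previous batch stopped, which is why run_epoch can carry the LSTM state from batch to batch.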

```python
# Class that defines the language model: PTBModel
class PTBModel(object):

    def __init__(self, is_training, config, input_):
        self._input = input_

        batch_size = input_.batch_size
        num_steps = input_.num_steps
        size = config.hidden_size
        vocab_size = config.vocab_size

        # Basic LSTM unit; forget_bias=0.0 adds no extra bias to the forget gate
        def lstm_cell():
            return tf.contrib.rnn.BasicLSTMCell(
                size, forget_bias=0.0, state_is_tuple=True)

        attn_cell = lstm_cell
        # During training, wrap each LSTM unit with dropout on its output
        if is_training and config.keep_prob < 1:
            def attn_cell():
                return tf.contrib.rnn.DropoutWrapper(
                    lstm_cell(), output_keep_prob=config.keep_prob)

        # Stack num_layers LSTM layers (a separate cell object per layer)
        cell = tf.contrib.rnn.MultiRNNCell(
            [attn_cell() for _ in range(config.num_layers)], state_is_tuple=True)

        # Initial LSTM state: all zeros
        self._initial_state = cell.zero_state(batch_size, tf.float32)

        # Word embedding part of the network (placed on the CPU)
        with tf.device("/cpu:0"):
            embedding = tf.get_variable(
                "embedding", [vocab_size, size], dtype=tf.float32)
            # [batch_size, num_steps] word IDs -> [batch_size, num_steps, size]
            inputs = tf.nn.embedding_lookup(embedding, input_.input_data)

        # Dropout on the embedded inputs during training
        if is_training and config.keep_prob < 1:
            inputs = tf.nn.dropout(inputs, config.keep_prob)

        # Unroll the LSTM over num_steps time steps and collect the outputs
        outputs = []
        state = self._initial_state
        with tf.variable_scope("RNN"):
            for time_step in range(num_steps):
                # From the second step on, reuse the LSTM variables
                if time_step > 0:
                    tf.get_variable_scope().reuse_variables()
                (cell_output, state) = cell(inputs[:, time_step, :], state)
                outputs.append(cell_output)

        # Concatenate the outputs with tf.concat and reshape them to
        # [batch_size * num_steps, size]
        output = tf.reshape(tf.concat(outputs, 1), [-1, size])

        # Softmax layer
        softmax_w = tf.get_variable(
            "softmax_w", [size, vocab_size], dtype=tf.float32)
        softmax_b = tf.get_variable("softmax_b", [vocab_size], dtype=tf.float32)
        logits = tf.matmul(output, softmax_w) + softmax_b

        # Per-word cross-entropy loss, every word weighted 1
        loss = tf.contrib.legacy_seq2seq.sequence_loss_by_example(
            [logits],
            [tf.reshape(input_.targets, [-1])],
            [tf.ones([batch_size * num_steps], dtype=tf.float32)])
        # Summed loss per sequence, averaged over the batch
        self._cost = cost = tf.reduce_sum(loss) / batch_size
        self._final_state = state

        # Validation and test models stop here
        if not is_training:
            return

        # Learning-rate variable lr, marked as not trainable
        self._lr = tf.Variable(0.0, trainable=False)
        # Collect all trainable parameters and clip their gradients by global norm
        tvars = tf.trainable_variables()
        grads, _ = tf.clip_by_global_norm(tf.gradients(cost, tvars),
                                          config.max_grad_norm)
        optimizer = tf.train.GradientDescentOptimizer(self._lr)
        self._train_op = optimizer.apply_gradients(
            zip(grads, tvars),
            global_step=tf.contrib.framework.get_or_create_global_step())

        # Placeholder and assign op used to control the learning rate
        self._new_lr = tf.placeholder(
            tf.float32, shape=[], name="new_learning_rate")
        self._lr_update = tf.assign(self._lr, self._new_lr)

    def assign_lr(self, session, lr_value):
        session.run(self._lr_update, feed_dict={self._new_lr: lr_value})

    # Read-only properties, so callers cannot accidentally overwrite these attributes
    @property
    def input(self):
        return self._input

    @property
    def initial_state(self):
        return self._initial_state

    @property
    def cost(self):
        return self._cost

    @property
    def final_state(self):
        return self._final_state

    @property
    def lr(self):
        return self._lr

    @property
    def train_op(self):
        return self._train_op
```
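One detail of PTBModel that is easy to get wrong is that the concatenated and reshaped outputs must line up row for row with tf.reshape(input_.targets, [-1]). The NumPy check below mimics those two reshapes, with np.concatenate standing in for tf.concat, using outputs that record their own (batch index, time step). Row k turns out to hold sequence b = k // num_steps at step t = k % num_steps, which is exactly where the flattened targets put targets[b, t]. The helper name is our own.

```python
import numpy as np


def outputs_align_with_targets(batch_size, num_steps):
    """Check that reshape(concat(outputs, 1), [-1, size]) matches reshape(targets, [-1])."""
    size = 2  # each output row stores (b, t) so it can be traced
    # outputs[t] has shape [batch_size, size], like the unrolled LSTM loop's outputs
    outputs = [np.array([[b, t] for b in range(batch_size)]) for t in range(num_steps)]
    output = np.concatenate(outputs, axis=1).reshape(-1, size)
    targets = np.arange(batch_size * num_steps).reshape(batch_size, num_steps)
    flat_targets = targets.reshape(-1)
    return all(flat_targets[k] == targets[b, t] for k, (b, t) in enumerate(output))


print(outputs_align_with_targets(batch_size=3, num_steps=4))  # True
```

Stacking along axis 0 instead (time-major rows) would break this correspondence, which is why the code concatenates along axis 1 before reshaping.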

```python
# Hyperparameters for models of different sizes
class SmallConfig(object):
    init_scale = 0.1      # weights start uniform in [-init_scale, init_scale]
    learning_rate = 1.0   # initial learning rate
    max_grad_norm = 5     # global-norm threshold for gradient clipping
    num_layers = 2        # number of stacked LSTM layers
    num_steps = 20        # number of unrolled time steps
    hidden_size = 200     # LSTM units per layer, also the embedding size
    max_epoch = 4         # epochs trained at the initial learning rate
    max_max_epoch = 13    # total number of training epochs
    keep_prob = 1.0       # dropout keep probability (1.0 means no dropout)
    lr_decay = 0.5        # learning-rate decay factor per epoch after max_epoch
    batch_size = 20
    vocab_size = 10000


class MediumConfig(object):
    init_scale = 0.05
    learning_rate = 1.0
    max_grad_norm = 5
    num_layers = 2
    num_steps = 35
    hidden_size = 650
    max_epoch = 6
    max_max_epoch = 39
    keep_prob = 0.5
    lr_decay = 0.8
    batch_size = 20
    vocab_size = 10000


class LargeConfig(object):
    init_scale = 0.04
    learning_rate = 1.0
    max_grad_norm = 10
    num_layers = 2
    num_steps = 35
    hidden_size = 1500
    max_epoch = 14
    max_max_epoch = 55
    keep_prob = 0.35
    lr_decay = 1 / 1.15
    batch_size = 20
    vocab_size = 10000


class TestConfig(object):
    init_scale = 0.1
    learning_rate = 1.0
    max_grad_norm = 1
    num_layers = 1
    num_steps = 2
    hidden_size = 2
    max_epoch = 1
    max_max_epoch = 1
    keep_prob = 1.0
    lr_decay = 0.5
    batch_size = 20
    vocab_size = 10000
```
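To get a feel for how much the configurations differ, the sketch below counts trainable parameters. It assumes each BasicLSTMCell layer holds one kernel of shape [input_size + hidden_size, 4 * hidden_size] plus a bias of length 4 * hidden_size, and that the embedding width equals hidden_size, as in PTBModel. The count_params function is our own.

```python
def count_params(hidden_size, num_layers=2, vocab_size=10000):
    """Trainable parameter count of PTBModel under the assumptions stated above."""
    embedding = vocab_size * hidden_size
    # Every layer's input is hidden_size wide (the embedding or the layer below)
    lstm_layer = (2 * hidden_size) * (4 * hidden_size) + 4 * hidden_size
    softmax = hidden_size * vocab_size + vocab_size
    return embedding + num_layers * lstm_layer + softmax


for name, hidden in [("Small", 200), ("Medium", 650), ("Large", 1500)]:
    print(name, count_params(hidden))
# Small 4651600
# Medium 19775200
# Large 66022000
```

In the small model the embedding and softmax matrices account for most of the parameters; only in the larger models do the LSTM layers dominate.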

```python
# Train (or evaluate) on one epoch of data: record the start time and
# initialize the accumulated loss costs and the step counter iters
def run_epoch(session, model, eval_op=None, verbose=False):
    start_time = time.time()
    costs = 0.0
    iters = 0
    state = session.run(model.initial_state)

    # Dictionary of tensors and ops to fetch on each step
    fetches = {
        "cost": model.cost,
        "final_state": model.final_state,
    }
    if eval_op is not None:
        fetches["eval_op"] = eval_op

    for step in range(model.input.epoch_size):
        # Feed the previous batch's final LSTM state in as this batch's initial state
        feed_dict = {}
        for i, (c, h) in enumerate(model.initial_state):
            feed_dict[c] = state[i].c
            feed_dict[h] = state[i].h

        vals = session.run(fetches, feed_dict)
        cost = vals["cost"]
        state = vals["final_state"]

        costs += cost
        iters += model.input.num_steps

        # Roughly every 10% of the epoch, print progress, running perplexity
        # and speed in words per second
        if verbose and step % (model.input.epoch_size // 10) == 10:
            print("%.3f perplexity: %.3f speed: %.0f wps" %
                  (step * 1.0 / model.input.epoch_size, np.exp(costs / iters),
                   iters * model.input.batch_size / (time.time() - start_time)))

    # Perplexity = exp(average loss per word)
    return np.exp(costs / iters)
```
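run_epoch adds up cost, which is the summed word loss divided by batch_size, and divides by the number of time steps seen. costs / iters is therefore the average cross-entropy per word, and its exponential is the perplexity. The NumPy sketch below reproduces that bookkeeping with made-up predictions. A model that spreads probability evenly over V words should score a perplexity of exactly V, and a very confident, correct model should score close to 1. The helper names are hypothetical.

```python
import numpy as np


def word_losses(logits, targets):
    """Per-word cross-entropy, like sequence_loss_by_example with unit weights."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerically stable log-softmax
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets]


def epoch_perplexity(batches, batch_size, num_steps):
    """Mirror run_epoch: costs += sum(loss) / batch_size, iters += num_steps."""
    costs, iters = 0.0, 0
    for logits, targets in batches:
        costs += word_losses(logits, targets).sum() / batch_size
        iters += num_steps
    return np.exp(costs / iters)


rng = np.random.default_rng(0)
batch_size, num_steps, vocab = 4, 5, 50
batches = [(np.zeros((batch_size * num_steps, vocab)),  # uniform predictions
            rng.integers(0, vocab, size=batch_size * num_steps))
           for _ in range(3)]
print(epoch_perplexity(batches, batch_size, num_steps))  # close to 50
```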

```python
# Read the extracted data with reader.ptb_raw_data to get the training,
# validation and test data
raw_data = reader.ptb_raw_data('simple-examples/data/')
train_data, valid_data, test_data, _ = raw_data

config = SmallConfig()
# The test model reads one word at a time
eval_config = SmallConfig()
eval_config.batch_size = 1
eval_config.num_steps = 1

# Create a default Graph and set the parameter initializer
with tf.Graph().as_default():
    initializer = tf.random_uniform_initializer(-config.init_scale,
                                                config.init_scale)

    # Training model m
    with tf.name_scope("Train"):
        train_input = PTBInput(config=config, data=train_data, name="TrainInput")
        with tf.variable_scope("Model", reuse=None, initializer=initializer):
            m = PTBModel(is_training=True, config=config, input_=train_input)

    # Validation model (reuse=True shares the variables of m)
    with tf.name_scope("Valid"):
        valid_input = PTBInput(config=config, data=valid_data, name="ValidInput")
        with tf.variable_scope("Model", reuse=True, initializer=initializer):
            mvalid = PTBModel(is_training=False, config=config, input_=valid_input)

    # Test model
    with tf.name_scope("Test"):
        test_input = PTBInput(config=eval_config, data=test_data, name="TestInput")
        with tf.variable_scope("Model", reuse=True, initializer=initializer):
            mtest = PTBModel(is_training=False, config=eval_config,
                             input_=test_input)

    # Create the training supervisor sv with tf.train.Supervisor();
    # sv.managed_session() creates the default session (and starts the queue
    # runners that reader.ptb_producer relies on).
    # Run the loop over multiple epochs; after training, compute and print
    # the model's perplexity on the test set.
    sv = tf.train.Supervisor()
    with sv.managed_session() as session:
        for i in range(config.max_max_epoch):
            # Keep the initial learning rate for the first max_epoch epochs,
            # then multiply it by lr_decay once per epoch
            lr_decay = config.lr_decay ** max(i + 1 - config.max_epoch, 0.0)
            m.assign_lr(session, config.learning_rate * lr_decay)

            print("Epoch: %d Learning rate: %.3f" % (i + 1, session.run(m.lr)))
            train_perplexity = run_epoch(session, m, eval_op=m.train_op,
                                         verbose=True)
            print("Epoch: %d Train Perplexity: %.3f" % (i + 1, train_perplexity))
            valid_perplexity = run_epoch(session, mvalid)
            print("Epoch: %d Valid Perplexity: %.3f" % (i + 1, valid_perplexity))

        test_perplexity = run_epoch(session, mtest)
        print("Test Perplexity: %.3f" % test_perplexity)
```

Running the program prints output like the following; these are the last epochs and the final result for the small model (SmallConfig):

```
Epoch: 12 Learning rate: 0.004
0.004 perplexity: 61.806 speed: 3252 wps
0.104 perplexity: 45.104 speed: 3253 wps
0.204 perplexity: 49.345 speed: 3254 wps
0.304 perplexity: 47.315 speed: 3253 wps
0.404 perplexity: 46.501 speed: 3252 wps
0.504 perplexity: 45.829 speed: 3253 wps
0.604 perplexity: 44.332 speed: 3253 wps
0.703 perplexity: 43.706 speed: 3253 wps
0.803 perplexity: 42.985 speed: 3253 wps
0.903 perplexity: 41.538 speed: 3253 wps
Epoch: 12 Train Perplexity: 40.693
Epoch: 12 Valid Perplexity: 119.818
Epoch: 13 Learning rate: 0.002
0.004 perplexity: 61.564 speed: 3245 wps
0.104 perplexity: 44.918 speed: 3253 wps
0.204 perplexity: 49.157 speed: 3252 wps
0.304 perplexity: 47.141 speed: 3252 wps
0.404 perplexity: 46.333 speed: 3252 wps
0.504 perplexity: 45.665 speed: 3252 wps
0.604 perplexity: 44.176 speed: 3252 wps
0.703 perplexity: 43.552 speed: 3252 wps
0.803 perplexity: 42.834 speed: 3252 wps
0.903 perplexity: 41.390 speed: 3252 wps
Epoch: 13 Train Perplexity: 40.547
Epoch: 13 Valid Perplexity: 119.663
Test Perplexity: 113.717
```
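The learning rates in the log follow from the decay rule in the training loop. The rate stays at learning_rate for the first max_epoch epochs and is then multiplied by lr_decay once per epoch. A quick check with SmallConfig's values (learning_rate = 1.0, max_epoch = 4, lr_decay = 0.5) reproduces the 0.004 and 0.002 printed for epochs 12 and 13. The learning_rate helper below is our own restatement of that rule, using 1-based epoch numbers.

```python
def learning_rate(epoch, base_lr=1.0, max_epoch=4, lr_decay=0.5):
    """Learning rate for a 1-based epoch number, as set in the training loop."""
    return base_lr * lr_decay ** max(epoch - max_epoch, 0)


for epoch in (1, 4, 5, 12, 13):
    print("Epoch: %d Learning rate: %.3f" % (epoch, learning_rate(epoch)))
# Epoch: 1 Learning rate: 1.000
# Epoch: 4 Learning rate: 1.000
# Epoch: 5 Learning rate: 0.500
# Epoch: 12 Learning rate: 0.004
# Epoch: 13 Learning rate: 0.002
```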