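OpenStack instance creation fails: bug (1) and how to fix it

First, here is where I found the errors. They were small mistakes, but they are worth watching out for.

1. I once accidentally changed the hostname of the controller node. That caused keystone to stop working properly and the messaging system to report errors. Changing the controller node's hostname back to its original value fixed it.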

2. Creating an instance failed with an error:

Checking nova-compute.log showed:

Starting instance…

2017-05-16 15:10:55.461 3677 WARNING nova.compute.resource_tracker [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Host field should not be set on the instance until resources have been claimed.

2017-05-16 15:10:55.461 3677 WARNING nova.compute.resource_tracker [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Node field should not be set on the instance until resources have been claimed.

2017-05-16 15:10:55.464 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Attempting claim: memory 512 MB, disk 1 GB

2017-05-16 15:10:55.464 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Total memory: 9891 MB, used: 8192.00 MB

2017-05-16 15:10:55.465 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] memory limit not specified, defaulting to unlimited

2017-05-16 15:10:55.465 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Total disk: 196 GB, used: 93.00 GB

2017-05-16 15:10:55.466 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] disk limit not specified, defaulting to unlimited

2017-05-16 15:10:55.482 3677 AUDIT nova.compute.claims [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Claim successful

2017-05-16 15:10:55.818 3677 INFO nova.scheduler.client.report [-] Compute_service record updated for ('sles11sp3x64-hsm1-vm3', 'sles11sp3x64-hsm1-vm3.istl.chd.edu.cn')

2017-05-16 15:10:55.871 3677 INFO nova.network.neutronv2.api [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Unable to reset device ID for port None

2017-05-16 15:10:56.350 3677 INFO nova.scheduler.client.report [-] Compute_service record updated for ('sles11sp3x64-hsm1-vm3', 'sles11sp3x64-hsm1-vm3.istl.chd.edu.cn')

2017-05-16 15:10:56.657 3677 INFO nova.virt.libvirt.driver [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Creating image

2017-05-16 15:10:56.752 3677 INFO nova.virt.disk.vfs.api [-] Unable to import guestfsfalling back to VFSLocalFS

2017-05-16 15:10:56.754 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:10:56.754 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:10:56.943 3677 INFO nova.scheduler.client.report [-] Compute_service record updated for ('sles11sp3x64-hsm1-vm3', 'sles11sp3x64-hsm1-vm3.istl.chd.edu.cn')

2017-05-16 15:10:58.755 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:10:58.756 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:00.758 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:00.759 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:02.762 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:02.763 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:04.766 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:04.767 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:06.769 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:06.770 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:08.771 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:08.772 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:10.774 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:10.775 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:10.775 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:12.778 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:12.779 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:14.781 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:14.782 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:16.784 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:16.785 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:18.786 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:18.788 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:20.790 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:20.791 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:22.793 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:22.794 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:24.795 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded

2017-05-16 15:11:24.796 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.

2017-05-16 15:11:26.797 3677 WARNING nova.virt.disk.mount.api [-] Device allocation failed after repeated retries.

2017-05-16 15:11:26.798 3677 INFO nova.virt.libvirt.driver [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Using config drive

2017-05-16 15:11:26.892 3677 INFO nova.virt.libvirt.driver [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Creating config drive at /var/lib/nova/instances/9cd465f8-76ec-40c2-8222-04796818f552/disk.config

2017-05-16 15:11:26.987 3677 ERROR nova.compute.manager [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Instance failed to spawn

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Traceback (most recent call last):

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/compute/manager.py", line 2317, in _build_resources

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     yield resources

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/compute/manager.py", line 2187, in _build_and_run_instance

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     block_device_info=block_device_info)

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/virt/libvirt/driver.py", line 2682, in spawn

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     write_to_disk=True)

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/virt/libvirt/driver.py", line 4212, in _get_guest_xml

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     context)

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/virt/libvirt/driver.py", line 3990, in _get_guest_config

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     flavor, CONF.libvirt.virt_type)

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]   File "/usr/lib64/python2.6/site-packages/nova/virt/libvirt/vif.py", line 358, in get_config

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]     _("Unexpected vif_type=%s") % vif_type)

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552] NovaException: Unexpected vif_type=binding_failed

2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552]

2017-05-16 15:11:26.989 3677 AUDIT nova.compute.manager [req-50e1bc3a-ebb8-4ec5-979d-c3db0f0e2fe0 None] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Terminating instance
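A log this long is easier to read once the noise is filtered out. The following is a minimal sketch, not part of any OpenStack tool: it assumes the "date time pid LEVEL logger message" line layout seen above, and the names `LOG_LINE`, `summarize` and `SAMPLE_LOG` are made up for illustration. It collects the distinct ERROR messages and the exception line that ends the TRACE block.

```python
import re

# Assumed layout of a nova log line: "date time pid LEVEL logger message".
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+) \d+ "
    r"(?P<level>[A-Z]+) (?P<logger>\S+) (?P<msg>.*)$"
)


def summarize(log_text):
    """Return (distinct ERROR messages, last exception line found in TRACE)."""
    errors = []
    last_exception = None
    for line in log_text.splitlines():
        m = LOG_LINE.match(line.strip())
        if not m:
            continue  # blank or wrapped line
        # Drop leading "[-]", "[req-...]" and "[instance: ...]" tags.
        msg = re.sub(r"^(\[[^\]]*\]\s*)+", "", m.group("msg"))
        level = m.group("level")
        if level == "ERROR" and msg not in errors:
            errors.append(msg)
        elif level == "TRACE" and re.match(r"\w+(Exception|Error)\b", msg):
            last_exception = msg
    return errors, last_exception


# A few lines copied from the log above.
SAMPLE_LOG = """
2017-05-16 15:10:56.754 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded
2017-05-16 15:10:56.754 3677 INFO nova.virt.disk.mount.api [-] Device allocation failed. Will retry in 2 seconds.
2017-05-16 15:10:58.755 3677 ERROR nova.virt.disk.mount.nbd [-] nbd module not loaded
2017-05-16 15:11:26.987 3677 ERROR nova.compute.manager [-] [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Instance failed to spawn
2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552] Traceback (most recent call last):
2017-05-16 15:11:26.987 3677 TRACE nova.compute.manager [instance: 9cd465f8-76ec-40c2-8222-04796818f552] NovaException: Unexpected vif_type=binding_failed
"""

if __name__ == "__main__":
    errors, exception = summarize(SAMPLE_LOG)
    print(errors)
    print(exception)
```

On this log, the line that ends the traceback is `NovaException: Unexpected vif_type=binding_failed`, which points at networking. The repeated `nbd module not loaded` messages are followed only by a WARNING, after which the build continues to the config drive step.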

The first things to do:

1. Check whether each OpenStack component is working properly

Check the nova components:

Command: nova service-list

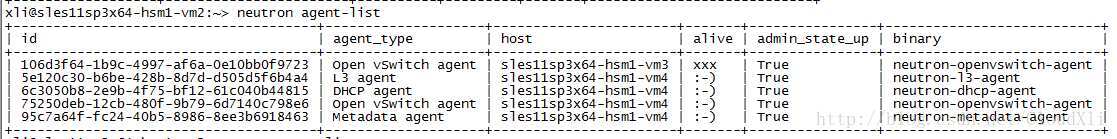

Check whether the neutron components are working properly:

Command: neutron agent-list

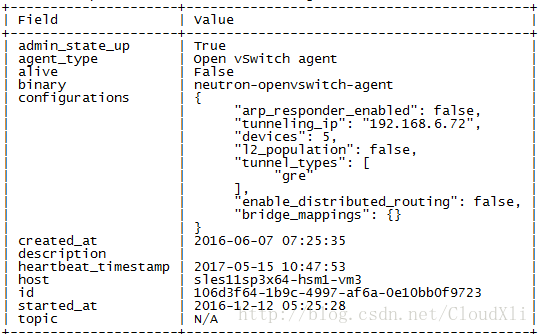

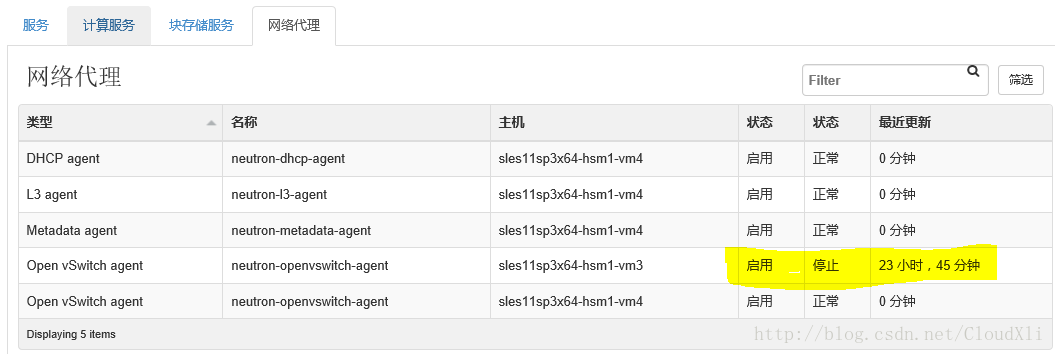

It looks like the neutron-openvswitch-agent is the one with the problem.
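In the `neutron agent-list` table, the `alive` column shows `:-)` for a healthy agent and `xxx` for one that has stopped reporting. Spotting the dead agent can be scripted. The sketch below is only an illustration: the function name `dead_agents` is made up, and `SAMPLE_TABLE` is invented data shaped like the CLI output (the ids and the `controller` host are placeholders, not values from the screenshots).

```python
def dead_agents(table_text):
    """Return (agent_type, host) for every row whose 'alive' cell is not ':-)'."""
    rows = []
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip +----+ border lines and blank lines
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    col = {name: i for i, name in enumerate(header)}
    return [
        (row[col["agent_type"]], row[col["host"]])
        for row in body
        if row[col["alive"]] != ":-)"
    ]


# Invented sample in the shape of `neutron agent-list` output.
SAMPLE_TABLE = """
+----+--------------------+-----------------------+-------+----------------+
| id | agent_type         | host                  | alive | admin_state_up |
+----+--------------------+-----------------------+-------+----------------+
| a1 | DHCP agent         | controller            | :-)   | True           |
| a2 | Open vSwitch agent | controller            | :-)   | True           |
| a3 | Open vSwitch agent | sles11sp3x64-hsm1-vm3 | xxx   | True           |
+----+--------------------+-----------------------+-------+----------------+
"""

if __name__ == "__main__":
    for agent_type, host in dead_agents(SAMPLE_TABLE):
        print("not alive:", agent_type, "on", host)
```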

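neutron-openvswitch-agent is only one of Neutron's networking agents. So what is openvswitch? A little background helps here:

Open vSwitch (OVS) is virtual switching software, used mainly in virtual machine (VM) environments. As a virtual switch, it supports several virtualization technologies, including Xen/XenServer, KVM, and VirtualBox.

In a virtualized environment on a single machine, a virtual switch (vswitch) has two main jobs: carrying traffic between VMs, and connecting VMs to the outside network.

In OpenStack, the openvswitch agent is probably the most widely used L2 agent at the moment. What follows is a rough look at what the openvswitch agent does.

Here is how the openvswitch agent starts up: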

    neutron/plugins/openvswitch/agent/ovs_neutron_agent.py:main()
        plugin = OVSNeutronAgent(**agent_config)
            self.setup_rpc()
                self.plugin_rpc = OVSPluginApi(topics.PLUGIN)
                self.state_rpc = agent_rpc.PluginReportStateAPI(topics.PLUGIN)
                self.connection = agent_rpc.create_consumers(…)
                heartbeat = loopingcall.FixedIntervalLoopingCall(self._report_state)
            self.setup_integration_br()
            self.setup_physical_bridges(bridge_mappings)
            self.sg_agent = OVSSecurityGroupAgent(…)
        plugin.daemon_loop()
            self.rpc_loop()
                port_info = self.update_ports(ports)
                sync = self.process_network_ports(port_info)


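At startup the agent does the following:

1. Sets up plugin_rpc, which is used to communicate with neutron-server.

2. Sets up state_rpc, which is used to report the agent's state.

3. Sets up connection, which is used to receive messages from neutron-server.

4. Starts periodic state reporting.

5. Sets up br-int.

6. Sets up the bridges listed in bridge_mappings.

7. Initializes sg_agent, which handles security groups.

8. Periodically checks br-int for port changes and calls process_network_ports to handle added and removed ports.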

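When a VM boots, nova-compute first sends a message to neutron-server asking it to create a port. The driver then creates the port on br-int. neutron-openvswitch-agent, which polls br-int in a loop, notices the new port, sets up the appropriate OpenFlow rules and local VLAN for it, and finally sets the port status to ACTIVE.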
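The polling step can be pictured as a set difference between the ports seen on br-int now and the ports seen in the previous pass. The sketch below is a simplified toy model of that idea, not the Neutron source: `update_ports` borrows its name from the call chain above but has a made-up signature, and `poll_once` and its callbacks are hypothetical.

```python
def update_ports(current_ports, registered_ports):
    """Compare the ports now on the bridge with those seen in the last pass."""
    if current_ports == registered_ports:
        return None  # nothing changed since the last pass
    return {
        "current": current_ports,
        "added": current_ports - registered_ports,
        "removed": registered_ports - current_ports,
    }


def poll_once(current_ports, registered_ports, on_added, on_removed):
    """One loop iteration: report changes and return the new known port set."""
    port_info = update_ports(current_ports, registered_ports)
    if port_info is None:
        return registered_ports
    for port in sorted(port_info["added"]):
        on_added(port)      # the real agent would set flows and a local VLAN
    for port in sorted(port_info["removed"]):
        on_removed(port)
    return port_info["current"]


if __name__ == "__main__":
    seen = set()
    # Three passes: a port appears, nothing changes, then it is swapped out.
    for snapshot in [{"tap1"}, {"tap1"}, {"tap2"}]:
        seen = poll_once(
            snapshot,
            seen,
            on_added=lambda p: print("added", p),
            on_removed=lambda p: print("removed", p),
        )
```

If the agent process is down, nothing runs this loop on the compute node, so a newly created port is never picked up there.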

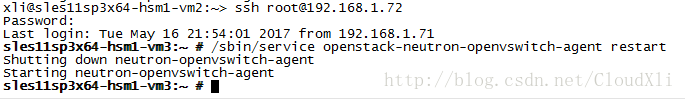

So what we need to do is get neutron-openvswitch-agent on vm3 back to a normal state.

Command: /sbin/service openstack-neutron-openvswitch-agent restart