3. Statistical Learning Methods: Logistic Regression

Table of Contents

- 1. Logistic model

- 1.2 Parameter estimation

- 1.3 Example and code

- 1.4 Implementation with sklearn

1. Logistic model

Regression model: $f(x) = \frac{1}{1+e^{-w \cdot x}}$

where $w \cdot x$ is a linear function: $w \cdot x = w_0 x_0 + w_1 x_1 + w_2 x_2 + \dots + w_n x_n$, with $x_0 = 1$.

For binary logistic regression:

$$P(Y=1 \mid x) = \frac{e^{w \cdot x}}{1+e^{w \cdot x}}$$

$$P(Y=0 \mid x) = \frac{1}{1+e^{w \cdot x}}$$
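To make the two formulas concrete, here is a minimal sketch that evaluates them for a single input. The weight vector `w`, the input `x`, and the helper name `class_probabilities` are illustrative assumptions, not part of the original post.

```python
import numpy as np


def class_probabilities(w, x):
    """Return (P(Y=1|x), P(Y=0|x)) for weights w and input x, with x[0] = 1."""
    z = np.dot(w, x)                      # linear score w . x
    p1 = np.exp(z) / (1.0 + np.exp(z))    # P(Y=1|x)
    p0 = 1.0 / (1.0 + np.exp(z))          # P(Y=0|x)
    return p1, p0


# Illustrative values only: w[0] is the bias weight and x[0] = 1.
w = np.array([0.5, -1.0, 2.0])
x = np.array([1.0, 0.3, 0.8])

p1, p0 = class_probabilities(w, x)
print(p1, p0, p1 + p0)  # the two probabilities sum to 1
```

Note that $P(Y=1 \mid x)$ is the same quantity as $f(x)$ above: dividing numerator and denominator by $e^{w \cdot x}$ gives $\frac{1}{1+e^{-w \cdot x}}$.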

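Coefficients are interpreted through the odds ratio. The odds of an event are the ratio of the probability that it occurs to the probability that it does not.

If the event occurs with probability $P$, its odds are $\frac{P}{1-P}$, and its log-odds, or logit function, is:

$$\operatorname{logit}(P) = \log \frac{P}{1-P}$$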

For logistic regression:

$$\log \frac{P(Y=1 \mid x)}{1-P(Y=1 \mid x)} = w \cdot x$$

That is, the log-odds of $Y=1$ is a linear function of the input $x$.
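This identity can be checked numerically. The sketch below (the values of `w` and `x` are illustrative assumptions) converts a predicted probability back to log-odds, and also shows that raising one feature by one unit multiplies the odds by $e^{w_j}$, which is why $e^{w_j}$ is read as an odds ratio.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def logit(p):
    """Log-odds of a probability p in (0, 1)."""
    return np.log(p / (1.0 - p))


w = np.array([0.5, -1.0, 2.0])   # illustrative weights, w[0] is the bias
x = np.array([1.0, 0.3, 0.8])    # illustrative input, x[0] = 1

p = sigmoid(np.dot(w, x))
print(logit(p), np.dot(w, x))    # the two values agree up to rounding

# Raise feature 2 by one unit and compare the odds before and after.
x_plus = x.copy()
x_plus[2] += 1.0
p_plus = sigmoid(np.dot(w, x_plus))
odds_ratio = (p_plus / (1.0 - p_plus)) / (p / (1.0 - p))
print(odds_ratio, np.exp(w[2]))  # both equal e^{w_2} up to rounding
```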

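1.2 Parameter estimation

The parameters $w$ are estimated by maximum likelihood: write down the likelihood function, then take its logarithm to obtain the log-likelihood.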
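Written out for training data $(x_i, y_i)$, $i = 1, \dots, N$, with $y_i \in \{0, 1\}$, the likelihood and log-likelihood take the standard form below. The symbols $\pi(x)$, $N$, and $L(w)$ are notation introduced here for illustration; the simplification uses $\log \pi(x) = w \cdot x - \log(1+e^{w \cdot x})$ and $\log(1-\pi(x)) = -\log(1+e^{w \cdot x})$.

```latex
\[
\pi(x) = P(Y=1 \mid x) = \frac{e^{w \cdot x}}{1+e^{w \cdot x}}
\]
\[
\prod_{i=1}^{N} \left[\pi(x_i)\right]^{y_i} \left[1-\pi(x_i)\right]^{1-y_i}
\]
\[
\begin{aligned}
L(w) &= \sum_{i=1}^{N} \left[ y_i \log \pi(x_i) + (1-y_i) \log\left(1-\pi(x_i)\right) \right] \\
     &= \sum_{i=1}^{N} \left[ y_i \,(w \cdot x_i) - \log\left(1+e^{w \cdot x_i}\right) \right]
\end{aligned}
\]
\[
\frac{\partial L}{\partial w} = \sum_{i=1}^{N} \left( y_i - \pi(x_i) \right) x_i
\]
```

The estimate of $w$ is the maximizer of $L(w)$. There is no closed-form solution, so it is found numerically.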

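Optimization: the log-likelihood is maximized numerically, typically with gradient descent (applied to the negative log-likelihood) or a quasi-Newton method.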
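As a rough illustration of the gradient-based route, the sketch below runs plain batch gradient ascent on the log-likelihood, using the standard gradient $\sum_i (y_i - \sigma(w \cdot x_i))\,x_i$, where $\sigma$ is the sigmoid. The toy data, step size, and iteration count are arbitrary assumptions chosen for the example. A quasi-Newton method would use the same objective and gradient with a different update rule.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_likelihood(w, X, y):
    z = X @ w
    # sum_i [ y_i * (w . x_i) - log(1 + exp(w . x_i)) ]
    return np.sum(y * z - np.logaddexp(0.0, z))


def gradient_ascent(X, y, learning_rate=0.1, n_iter=500):
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (y - sigmoid(X @ w))   # gradient of the log-likelihood
        w += learning_rate * grad / len(y)  # step along the averaged gradient
    return w


# Toy data (assumed for illustration): the first column is x_0 = 1.
X = np.array([[1.0, 0.5], [1.0, 1.5], [1.0, 2.0],
              [1.0, 2.5], [1.0, 3.0], [1.0, 4.0]])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0])

w0 = np.zeros(X.shape[1])
w_hat = gradient_ascent(X, y)
print(log_likelihood(w0, X, y), log_likelihood(w_hat, X, y))
```

The second printed value should be larger than the first, since each step moves uphill on a concave objective.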

1.3 Example and code

    import numpy as np
    import pandas as pd
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler


    def load_data():
        iris = load_iris()
        df = pd.DataFrame(iris.data, columns=iris.feature_names)
        df['label'] = iris.target
        df.columns = ['sepal length', 'sepal width', 'petal length', 'petal width', 'label']
        # Keep the first 100 samples (classes 0 and 1) and the two sepal features.
        data = np.array(df.iloc[:100, [0, 1, -1]])
        return data[:, :2], data[:, -1]


    class LogisticRegressionClassifier:
        def __init__(self, max_iter=200, learning_rate=0.01):
            self.max_iter = max_iter
            self.learning_rate = learning_rate

        def sigmoid(self, x):
            # np.exp works on the length-1 array returned by np.dot below.
            return 1 / (1 + np.exp(-x))

        def data_matrix(self, X):
            data_mat = []
            for d in X:
                # Prepend x_0 = 1 for the bias term; *d unpacks the features
                # into the list (** would unpack a dict instead).
                data_mat.append([1.0, *d])
            return data_mat

        def fit(self, X, y):
            data_mat = self.data_matrix(X)
            self.weights = np.zeros((len(data_mat[0]), 1), dtype=np.float32)
            for iter_ in range(self.max_iter):
                for i in range(len(X)):
                    # np.dot computes w . x for sample i.
                    result = self.sigmoid(np.dot(data_mat[i], self.weights))
                    error = y[i] - result
                    # Per-sample update; np.transpose turns the row into a column vector.
                    self.weights += self.learning_rate * error * np.transpose([data_mat[i]])

        def score(self, X_test, y_test):
            right = 0
            X_test = self.data_matrix(X_test)
            # zip pairs each sample with its label, e.g. (x_i, y_i).
            for x, y in zip(X_test, y_test):
                result = np.dot(x, self.weights)[0]  # w . x > 0 means P(Y=1|x) > 0.5
                if (result > 0 and y == 1) or (result < 0 and y == 0):
                    right += 1
            return right / len(X_test)


    if __name__ == '__main__':
        X, y = load_data()
        X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.7, test_size=0.3)
        sc = StandardScaler()
        sc.fit(X_train)  # compute the mean and variance on the training set
        x_train_std = sc.transform(X_train)  # z-score standardization with those statistics
        x_test_std = sc.transform(X_test)
        model = LogisticRegressionClassifier()
        model.fit(x_train_std, y_train)
        print(model.score(x_test_std, y_test))

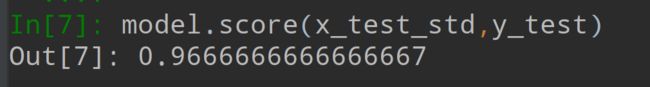

1.4 Implementation with sklearn

This article covers sklearn's implementation of logistic regression in great detail, with worked examples and code.
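For reference, a minimal sketch of the same experiment using `sklearn.linear_model.LogisticRegression` might look like the following. It reuses the first 100 iris samples and the two sepal features from section 1.3. The `random_state` value and the default solver and regularization settings are assumptions made here, not choices taken from the referenced article.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

iris = load_iris()
X = iris.data[:100, :2]   # classes 0 and 1, sepal length and sepal width
y = iris.target[:100]

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0  # fixed seed, assumed for reproducibility
)

# Standardize, then fit logistic regression with sklearn's default settings.
clf = make_pipeline(StandardScaler(), LogisticRegression())
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
print(accuracy)

# Fitted bias and weights, on the standardized feature scale.
logreg = clf.named_steps["logisticregression"]
print(logreg.intercept_, logreg.coef_)
```

Putting the scaler inside the pipeline means the mean and variance are learned from the training split only, as in the hand-written version above. Keep in mind that sklearn applies L2 regularization by default, so its weights will generally differ from an unregularized maximum-likelihood fit.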