UnityShader: Screen Space Reflection (Part 1)

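Ever since Crytek introduced SSR, it has become more or less standard in big-budget games. Yet, whether on purpose or not, I had never written an SSR implementation myself. I had some free time recently, so I wrote a few versions, experimented, and worked through other people's source code. This post mostly records the pitfalls, trial and error, and analysis from that learning process, and serves as my notes. Readers looking for a mature, ready-made solution can skip it.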

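At its core, SSR is an application of screen space ray tracing: the world inside the view frustum is reconstructed from the depth and normal textures. In an earlier post we dug into how Unity's global fog reconstructs the world: it uses a custom blit function to pass the four corners of the frustum's far plane to the shader for interpolation. We don't actually need to go to that trouble. We can simply interpolate the UVs of the quad drawn by the built-in Blit function, because after transformation and clipping that quad covers the screen exactly (that's why it's called a screen effect), so each vertex's screen position corresponds exactly to its UV: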

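```hlsl
v2f vert (appdata v)
{
    v2f o;
    o.vertex = UnityObjectToClipPos(v.vertex);
    o.test = v.uv;       // quad UV
    o.test1 = o.vertex;  // clip-space position
    return o;
}

fixed4 frag (v2f i) : SV_Target
{
    // Clip-space xy / w remapped from [-1, 1] to [0, 1] ...
    return fixed4(i.test1.xy / i.test1.w * 0.5 + 0.5, 0, 1);
    // ... gives the same image as outputting the UV directly
    // (unreachable here; swap the two lines to compare)
    return fixed4(i.test.xy, 0, 1);
}
```

Both return statements above produce exactly the same result.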
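To make that claim concrete, here is a small CPU-side Python sketch. It assumes the Blit quad is projected by an orthographic matrix that maps the unit square [0, 1]² to NDC [-1, 1]², which is what GL.LoadOrtho sets up; the helper names are illustrative, and the z range passed to `ortho` is arbitrary because it does not affect x and y.

```python
def ortho(l, r, b, t, n, f):
    """OpenGL-style orthographic projection matrix (row-major)."""
    return [
        [2 / (r - l), 0, 0, -(r + l) / (r - l)],
        [0, 2 / (t - b), 0, -(t + b) / (t - b)],
        [0, 0, -2 / (f - n), -(f + n) / (f - n)],
        [0, 0, 0, 1],
    ]

def mat_vec(m, vec):
    return [sum(m[i][j] * vec[j] for j in range(4)) for i in range(4)]

def clip_to_uv(clip):
    """Same as the shader's clip.xy / clip.w * 0.5 + 0.5."""
    return (clip[0] / clip[3] * 0.5 + 0.5, clip[1] / clip[3] * 0.5 + 0.5)

# Assumption: each quad vertex sits at (u, v, 0) and carries the UV (u, v).
proj = ortho(0, 1, 0, 1, -1, 1)
quad_uvs = [(0, 0), (1, 0), (1, 1), (0, 1), (0.25, 0.75)]
recovered = [clip_to_uv(mat_vec(proj, [u, v, 0, 1])) for u, v in quad_uvs]
```

Every recovered value equals the input UV, which is why interpolating the quad UV is enough to address each screen pixel.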

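So we can directly construct

```hlsl
// Un-project the far-plane point (NDC z = 1) under this vertex
o.rayDirVS = mul(unity_CameraInvProjection, float4((v.uv - 0.5) * 2, 1, 1));
```

In the fragment shader this gives, for every pixel, the ray from the camera through that pixel to the far plane. Combined with the depth texture, we get each pixel's view-space position: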

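```hlsl
float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
depth = Linear01Depth(depth);
// Far-plane point along this pixel's ray, scaled by the linear [0, 1] depth
float3 view_pos = i.rayDirVS.xyz / i.rayDirVS.w * depth;
```

Then we fetch the normal and transform it into view space: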

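```hlsl
// GBuffer2.rgb stores the world-space normal encoded into [0, 1]
float3 normal = tex2D(_CameraGBufferTexture2, i.uv).xyz * 2.0 - 1.0;
normal = mul((float3x3)_NormalMatrix, normal);
```

Note that this uses a matrix passed in from the script (`_NormalMatrix`, set to the camera's `worldToCameraMatrix`) rather than a built-in one. The quad drawn by Blit has already been transformed via GL.LoadOrtho, so inside the shader UNITY_MATRIX_M and UNITY_MATRIX_V are identity matrices, and UNITY_MATRIX_P is the orthographic projection set up by GL.LoadOrtho.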
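The reconstruction above can be checked on the CPU with a short Python sketch. It assumes an OpenGL-style projection (camera looking down -z), which as far as I know is the convention `unity_CameraProjection` follows, and that `Linear01Depth` equals eye depth divided by the far-plane distance. `perspective`, `inverse4`, and `reconstruct_view_pos` are illustrative helpers, not Unity API. The check projects a known view-space point to a UV, then rebuilds it from the far-plane ray and its linear depth.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style projection matrix (row-major), camera looks down -z."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]

def inverse4(m):
    """4x4 inverse via Gauss-Jordan elimination with partial pivoting."""
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(4)] for i, row in enumerate(m)]
    for col in range(4):
        pivot = max(range(col, 4), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        pv = a[col][col]
        a[col] = [x / pv for x in a[col]]
        for r in range(4):
            if r != col:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [row[4:] for row in a]

def mat_vec(m, vec):
    return [sum(m[i][j] * vec[j] for j in range(4)) for i in range(4)]

def reconstruct_view_pos(uv, linear01_depth, inv_proj):
    # rayDirVS: un-project the far-plane point (NDC z = 1) under this pixel
    ray = mat_vec(inv_proj, [(uv[0] - 0.5) * 2, (uv[1] - 0.5) * 2, 1.0, 1.0])
    # view_pos = rayDirVS.xyz / rayDirVS.w * Linear01Depth
    return [c / ray[3] * linear01_depth for c in ray[:3]]

near, far = 0.3, 100.0
proj = perspective(60, 16 / 9, near, far)
inv_proj = inverse4(proj)

# Round trip: project a known view-space point, then rebuild it from (uv, depth)
p = [1.0, -0.5, -7.0]
clip = mat_vec(proj, p + [1.0])
uv = (clip[0] / clip[3] * 0.5 + 0.5, clip[1] / clip[3] * 0.5 + 0.5)
linear01 = -p[2] / far  # assumed Linear01Depth: eye depth / far
rebuilt = reconstruct_view_pos(uv, linear01, inv_proj)
```

Scaling works because the far-plane point and the original point lie on the same ray through the origin, so multiplying by eye depth / far lands exactly on the surface.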

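Now we can actually start computing reflections. The basic idea is very simple: with the normal and the view ray we can easily compute the reflected ray, find where it intersects the depth buffer, and add the shading at that intersection to the reflecting pixel. The crux is the ray/depth intersection, which is done by ray marching. Based on this idea we can immediately write the simplest implementation: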

```hlsl
#define MAX_TRACE_DIS 50
#define MAX_IT_COUNT 50
#define EPSION 0.1

// Project a view-space position to screen UV in [0, 1]
float2 PosToUV(float3 vpos)
{
    float4 proj_pos = mul(unity_CameraProjection, float4(vpos, 1));
    float3 screenPos = proj_pos.xyz / proj_pos.w;
    return screenPos.xy * 0.5 + 0.5;
}

// Scene eye depth minus the sample's eye depth. View-space z is negative in
// front of the camera, so a negative result means the sample is behind the surface.
float compareWithDepth(float3 vpos, out bool isInside)
{
    float2 uv = PosToUV(vpos);
    float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, uv);
    depth = LinearEyeDepth(depth);
    isInside = uv.x > 0 && uv.x < 1 && uv.y > 0 && uv.y < 1;
    return depth + vpos.z;
}

// Fixed-step march from o along r; a hit is a sample within EPSION of the depth buffer
bool rayTrace(float3 o, float3 r, out float3 hitp)
{
    hitp = o;
    float3 start = o;
    float3 end = o;
    float stepSize = 0.15; // MAX_TRACE_DIS / MAX_IT_COUNT;
    for (int i = 1; i <= MAX_IT_COUNT; ++i)
    {
        end = o + r * stepSize * i;
        if (length(end - start) > MAX_TRACE_DIS)
            return false;
        bool isInside = true;
        float diff = compareWithDepth(end, isInside);
        if (!isInside)
            return false; // left the screen: no depth data to compare against
        if (abs(diff) < EPSION)
        {
            hitp = end;
            return true;
        }
    }
    return false;
}

fixed4 frag (v2f i) : SV_Target
{
    fixed4 col = tex2D(_MainTex, i.uv);
    float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
    depth = Linear01Depth(depth);
    float3 view_pos = i.rayDirVS.xyz / i.rayDirVS.w * depth;
    float3 normal = tex2D(_CameraGBufferTexture2, i.uv).xyz * 2.0 - 1.0;
    normal = mul((float3x3)_NormalMatrix, normal);
    // The camera is at the view-space origin, so view_pos is also the view direction
    float3 reflectedRay = reflect(normalize(view_pos), normal);
    float3 hitp = 0;
    if (rayTrace(view_pos, reflectedRay, hitp))
    {
        float2 tuv = PosToUV(hitp);
        // Diffuse color from GBuffer0 used as the color of the hit point
        float3 hitCol = tex2D(_CameraGBufferTexture0, tuv).rgb;
        col += fixed4(hitCol, 1);
    }
    return col;
}
```
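To get a feel for this loop without a GPU, here is a Python toy that replaces the depth texture with an analytic scene: a floor at y = -1 and a wall facing the camera at z = -10, with the camera at the origin looking down -z. The hypothetical `scene_eye_depth(p)` returns the eye depth of the first surface on the line of sight through view-space point `p`, i.e. what sampling `_CameraDepthTexture` at `PosToUV(p)` would give. Screen bounds are ignored; the scene and all names are illustrative assumptions.

```python
import math

WALL_DEPTH = 10.0  # wall plane at z = -10

def scene_eye_depth(p):
    """Eye depth of the first surface on the line of sight through view-space point p."""
    _, y, z = p
    depth = WALL_DEPTH
    if y < 0:
        # The line of sight s * p meets the floor y = -1 at s = -1 / y,
        # where the eye depth is -(s * z) = z / y
        depth = min(depth, z / y)
    return depth

def reflect(i, n):
    """HLSL reflect(): i - 2 * dot(n, i) * n."""
    d = sum(a * b for a, b in zip(i, n))
    return [a - 2 * d * b for a, b in zip(i, n)]

def naive_trace(o, r, step_size=0.15, eps=0.1, max_it=50, max_dist=50.0):
    """Fixed-step march like rayTrace(); returns (step index, hit point) or None."""
    for i in range(1, max_it + 1):
        if step_size * i > max_dist:
            return None
        end = [a + b * step_size * i for a, b in zip(o, r)]
        diff = scene_eye_depth(end) + end[2]  # same sign convention as compareWithDepth
        if abs(diff) < eps:
            return i, end
    return None

# A floor pixel 8 units away; the view direction is its normalized position
o = [0.0, -1.0, -8.0]
norm = math.sqrt(sum(c * c for c in o))
view_dir = [c / norm for c in o]
r = reflect(view_dir, [0.0, 1.0, 0.0])
hit = naive_trace(o, r)
```

With these numbers the march reports its first hit at step 13, within EPSION of the wall plane.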

As for the result, it's pretty crap too. Moreover, at certain angles we can see this:

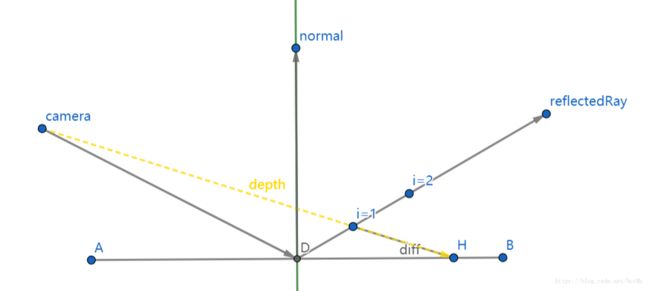

The cause is illustrated in the figure below.

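If |diff| is already below EPSION at the very first step, the reflected ray is judged to intersect the plane that the reflecting point itself lies on, and the surface ends up reflecting itself. There are two ways to fix this:

1. Increase the distance to the first sample.

2. Decrease the threshold EPSION.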
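The self-intersection can be reproduced numerically with a hypothetical analytic scene (floor at y = -1, wall at z = -10, camera at the origin) standing in for the depth buffer. In this setup the first sample sits only about one step size in front of the floor's own depth (diff ≈ 0.154 for a 0.15 step), so any EPSION larger than that reports a hit on the reflecting surface at step 1. The same toy also shows the catch with simply shrinking EPSION: with a fixed step, the samples can straddle the wall without ever landing inside the threshold.

```python
import math

def scene_eye_depth(p):
    """Toy depth buffer: wall at z = -10 plus a floor at y = -1."""
    _, y, z = p
    depth = 10.0
    if y < 0:
        depth = min(depth, z / y)  # eye depth where the line of sight meets the floor
    return depth

def first_hit_step(o, r, step_size, eps, max_it=50):
    """Index of the first sample with |diff| < eps, like the naive rayTrace(); None if no hit."""
    for i in range(1, max_it + 1):
        end = [a + b * step_size * i for a, b in zip(o, r)]
        if abs(scene_eye_depth(end) + end[2]) < eps:
            return i
    return None

o = [0.0, -1.0, -8.0]                           # reflecting point on the floor
norm = math.sqrt(sum(c * c for c in o))
r = [o[0] / norm, -o[1] / norm, o[2] / norm]    # view ray reflected about n = (0, 1, 0)

end1 = [a + b * 0.15 for a, b in zip(o, r)]
first_diff = scene_eye_depth(end1) + end1[2]    # about 0.154 at the first sample
self_hit = first_hit_step(o, r, 0.15, eps=0.2)  # the floor "hits" itself
wall_hit = first_hit_step(o, r, 0.15, eps=0.1)  # the wall
missed = first_hit_step(o, r, 0.15, eps=0.05)   # samples straddle the wall
```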

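The first method obviously doesn't work. For example, if we change the loop to

```hlsl
for (int i = 3; i <= MAX_IT_COUNT; ++i)
```

the result becomes the following: intersections close to the reflecting point are no longer detected at all.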

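But if we just lower the threshold while keeping the step size and sampling scheme unchanged, we miss many intersections as well.

Unless we're willing to give up performance entirely, the only option left is to vary the step size during the march, and binary search practically suggests itself:

Start with a fixed step. When a step is found to cross the geometry, halve the step size, restart the march from the beginning of that step, and find the new crossing; repeat this N times, where N is the iteration count.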
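A Python sketch of that refinement, run against a hypothetical analytic scene (floor at y = -1, wall at z = -10) instead of a real depth buffer. `march` mirrors a fixed-step march that stops at the first sample behind the surface, and `binary_search` backs up one step and halves the step size after each crossing. The parameters (initial step 0.3, threshold 0.03, 10 steps per march, 5 refinements) are example values; a fixed-step march with the same threshold misses the wall entirely in this setup.

```python
import math

def scene_eye_depth(p):
    _, y, z = p
    depth = 10.0                   # wall at z = -10
    if y < 0:
        depth = min(depth, z / y)  # floor at y = -1
    return depth

def march(o, r, step_size, max_it=10, max_dist=50.0):
    """Step until a sample falls behind the depth buffer (diff < 0)."""
    for i in range(1, max_it + 1):
        if step_size * i > max_dist:
            return None
        end = [a + b * step_size * i for a, b in zip(o, r)]
        diff = scene_eye_depth(end) + end[2]
        if diff < 0:
            return end, diff
    return None

def binary_search(o, r, step_size=0.3, eps=0.03, max_bs_it=5):
    """After each crossing, restart from the last sample in front and halve the step."""
    start = o
    for _ in range(max_bs_it):
        result = march(start, r, step_size)
        if result is None:
            return None
        end, diff = result
        if abs(diff) < eps:
            return end
        start = [a - b * step_size for a, b in zip(end, r)]
        step_size /= 2
    return None

def naive_trace(o, r, step_size, eps, max_it=50):
    """Fixed-step march with an |diff| < eps test, for comparison."""
    for i in range(1, max_it + 1):
        end = [a + b * step_size * i for a, b in zip(o, r)]
        if abs(scene_eye_depth(end) + end[2]) < eps:
            return end
    return None

# Floor pixel at z = -8; reflecting about the floor normal (0, 1, 0) flips y
o = [0.0, -1.0, -8.0]
norm = math.sqrt(sum(c * c for c in o))
r = [o[0] / norm, -o[1] / norm, o[2] / norm]

hit = binary_search(o, r)
missed = naive_trace(o, r, 0.15, 0.03)
```

Here the refinement converges after three marches (step sizes 0.3, 0.15, 0.075) to a point within 0.03 of the wall, while the fixed-step march never lands inside the threshold.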

根据这个思路我们把代码改成这样:

#define MAX_TRACE_DIS 50

#define MAX_IT_COUNT 10

#define MAX_BS_IT 5

uniform float EPSION;

uniform float _StepSize;

bool rayTrace(float3 o, float3 r, float stepSize, out float3 _end, out float _diff)

{

float3 start = o;

float3 end = o;

for (int i = 1; i <= MAX_IT_COUNT; ++i)

{

end = o + r * stepSize * i;

if(stepSize * i > MAX_TRACE_DIS)

return false;

bool isInside = true;

float diff = compareWithDepth(end, isInside);

if(!isInside)

return false;

_diff = diff;

_end = end;

if(_diff < 0)

return true;

}

return false;

}

bool BinarySearch(float3 o, float3 r, out float3 hitp, out float debug)

{

float3 start = o;

float3 end = o;

float sign = 1;

float stepSize = _StepSize;

float diff = 0;

for (int i = 0; i < MAX_BS_IT; ++i)

{

if(rayTrace(start, r, stepSize, end, diff))

{

if(abs(diff) < EPSION)

{

debug = diff;

hitp = end;

return true;

}

start = end - stepSize * r;

stepSize /= 2;

}

else

{

return false;

}

}

return false;

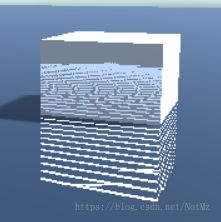

}最大循环次数保持和之前一样,初始步长0.3, 阈值0.03的效果如图:

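That's a lot better than before, but still crap. For comparison, here is the SSR from the official Post Processing Stack with the same iteration count, a similar step size, and the blur turned down as far as possible:

Hmm... it doesn't look much better either. I'll resist reading the official source for now, or half the fun would be gone (ha, nobody's rushing me anyway). So, where should the optimization start?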

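Again, let's start with the ray marching algorithm. Over the years many improvements have been proposed; here I mainly follow Morgan McGuire's method, in which every step advances by at least one pixel so that the same pixel is never sampled twice, and the author kindly provides complete code. The method uses DDA to draw the ray as a line in screen space, avoiding repeated samples of the same pixel, and then maps each point on the line back to view space to get its z, which is compared against the depth at that pixel. The thing to watch out for is that z has to be interpolated through 1/z: it is 1/z, not z, that varies linearly in screen space, otherwise the computed z won't match the depth in the depth buffer (see my earlier post for the proof). Some parts of the original code seemed puzzling to me, so I mostly wrote it according to my own understanding. The code is as follows: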

```hlsl
#define MAX_IT_COUNT 50

uniform float EPSION;
uniform float _StepSize;
uniform float _MaxLength;

// hitPixel is in NDC [-1, 1]; convert to UV before sampling
inline bool isIntersectWithDepth(float zb, float2 hitPixel)
{
    float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, hitPixel * 0.5 + 0.5);
    depth = LinearEyeDepth(depth);
    return abs(zb + depth) < EPSION;
}

bool DDARayTrace(float3 o, float3 r, out float2 hitPixel)
{
    float3 end = o + r * _MaxLength;

    float4 h0 = mul(unity_CameraProjection, float4(o, 1));
    float4 h1 = mul(unity_CameraProjection, float4(end, 1));
    // k = 1/w is linear in screen space (w = -z for this projection)
    float k0 = 1 / h0.w, k1 = 1 / h1.w;

    // screen space (NDC)
    float2 p0 = h0.xy * k0, p1 = h1.xy * k1;

    // DDA: step along the major axis, swapping x/y for steep lines
    float2 delta = p1 - p0;
    bool permute = false;
    if (abs(delta.x) < abs(delta.y))
    {
        permute = true;
        delta = delta.yx;
        p0 = p0.yx;
        p1 = p1.yx;
    }
    float stepDir = sign(delta.x);
    float invdx = stepDir / delta.x;

    // derivatives per step
    float dk = (k1 - k0) * invdx * _StepSize / _MaxLength;
    float2 dp = float2(stepDir, delta.y * invdx) * _StepSize / _MaxLength;

    // Advance at least one pixel per step along the major axis (one pixel spans
    // 2 * texel size in NDC). dp and dk are scaled together so p and k stay
    // consistent; abs() keeps a negative step direction from being flipped.
    float pixelSize = 2.0 * min(_MainTex_TexelSize.x, _MainTex_TexelSize.y);
    if (length(dp) < pixelSize)
    {
        float scale = pixelSize / abs(dp.x);
        dp *= scale;
        dk *= scale;
    }

    bool intersect = false;
    float zb = 0;
    float2 p = p0;
    float k = k0;
    hitPixel = permute ? p0.yx : p0;
    for (int i = 0; i < MAX_IT_COUNT && !intersect; ++i)
    {
        p += dp;
        k += dk;
        zb = -1 / k; // back to view-space z
        hitPixel = permute ? p.yx : p;
        intersect = isIntersectWithDepth(zb, hitPixel);
    }
    return intersect;
}
```

With a threshold of 0.025, a step size of 1 and a maximum distance of 50, the result is shown in the figure.
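Why the loop interpolates k = 1/w instead of z can be checked with a short Python sketch. It assumes an OpenGL-style projection (clip-space w = -z); `perspective` and `project` are illustrative helpers. Points are sampled along a 3D segment, and for each one the sketch measures how far along the projected 2D line it sits, then compares its true z with two reconstructions: linearly interpolating k and inverting (what `zb = -1 / k` does), and naively interpolating z.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style projection: clip-space w = -z."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]

def project(m, p):
    """Return (NDC xy, k = 1/w), i.e. h.xy * k and 1 / h.w in DDARayTrace."""
    h = [sum(m[i][j] * c for j, c in enumerate(p + [1.0])) for i in range(4)]
    k = 1.0 / h[3]
    return (h[0] * k, h[1] * k), k

proj = perspective(60, 16 / 9, 0.3, 100.0)
a, b = [1.0, 0.5, -2.0], [-2.0, 1.0, -20.0]   # segment endpoints in view space
(pa, ka), (pb, kb) = project(proj, a), project(proj, b)

max_err_k, max_err_z = 0.0, 0.0
for n in range(1, 10):
    u = n / 10                                 # parameter along the 3D segment
    q = [x + (y - x) * u for x, y in zip(a, b)]
    (pq, _) = project(proj, q)
    t = (pq[0] - pa[0]) / (pb[0] - pa[0])      # fraction along the 2D line
    zb = -1.0 / (ka + (kb - ka) * t)           # interpolate k = 1/w, then zb = -1/k
    z_linear = a[2] + (b[2] - a[2]) * t        # naive: interpolate z directly
    max_err_k = max(max_err_k, abs(zb - q[2]))
    max_err_z = max(max_err_z, abs(z_linear - q[2]))
```

The 1/w route matches the true z to floating-point precision, while linear z is off by several units for this segment.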

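Some strange reflections show up, but... but at least the reflection lines are evenly spaced now... OK, it's still crap. Let's deal with the strange reflections first. Outputting the normals at the hit points gives the following:

Well, it's the surface reflecting itself again. In that case, let's simply discard hits whose normal is very close to the normal at the reflecting point, by changing the intersection test to: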

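```hlsl
// worldnormal: normal of the reflecting pixel. GBuffer2 stores world-space normals,
// so the normal passed in must also be in world space for the dot product to be meaningful.
inline bool isIntersectWithDepth(float zb, float3 worldnormal, float2 hitPixel)
{
    float2 uv = hitPixel * 0.5 + 0.5;
    float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, uv);
    depth = LinearEyeDepth(depth);
    float3 pNormal = tex2D(_CameraGBufferTexture2, uv).xyz * 2.0 - 1.0;
    // Reject hits on surfaces facing (almost) the same direction as the reflector
    return abs(zb + depth) < EPSION && dot(worldnormal, pNormal) < 0.9;
}
```

The result is shown in the figure.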

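Simple and brute force, and suddenly everything is clean; besides, it seems rare for a reflecting point and its hit point to have the same normal direction. Wait, what on earth is going on here?

Let's analyze. The expert's algorithm couldn't contain such a basic mistake, so it must be something I changed. After some inspection, I changed the definition of end back to the original author's version: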

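```hlsl
// If the full-length ray would end behind the near plane (z > -near),
// shorten it so that its endpoint lies exactly on the near plane.
float rayLength = ((o.z + r.z * _MaxLength) > -_ProjectionParams.y) ?
    (-_ProjectionParams.y - o.z) / r.z : _MaxLength;
float3 end = o + r * rayLength;
```

Problem solved.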
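My reading of why this matters: when the reflected ray points back toward the camera, `o + r * _MaxLength` can end up behind the camera, where clip-space w = -z is negative. Dividing by a negative w mirrors the endpoint through the screen center, so the 2D line the DDA walks heads the wrong way. Clipping the ray at the near plane keeps w positive. The Python check below uses made-up numbers; `clip_ray_length` is a hypothetical helper mirroring the shader expression, with `near` standing in for `_ProjectionParams.y`.

```python
import math

def clip_ray_length(o, r, max_length, near):
    """Shorten the ray so it ends on the near plane z = -near, as in the shader."""
    if o[2] + r[2] * max_length > -near:
        return (-near - o[2]) / r[2]
    return max_length

near, max_length = 0.3, 50.0
o = [0.0, -1.0, -5.0]
n = math.sqrt(0.3 ** 2 + 1.0)
r = [0.0, 0.3 / n, 1.0 / n]        # reflected ray heading back toward the camera (+z)

unclipped_end = [a + b * max_length for a, b in zip(o, r)]
w_unclipped = -unclipped_end[2]    # clip-space w = -z for an OpenGL-style projection

ray_length = clip_ray_length(o, r, max_length, near)
clipped_end = [a + b * ray_length for a, b in zip(o, r)]
```

The unclipped endpoint has a negative w, while the clipped one lies exactly on the near plane.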

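Let's continue. Right now the reflections show up as distinct stripes, which looks jarring. When writing the volumetric light post I came across the trick of adding noise during ray marching to even out the sampling, but was too lazy to implement it myself. The DDA author's source code also adds noise, so this is a good chance to try it:

```hlsl
// jitter: a per-pixel value in [0, 1] that offsets the start by a fraction of a step
float2 p = p0 + jitter * dp;
float k = k0 + jitter * dk;
```

Hmm... to my eye there's no visible difference at all. Time for the big gun, then: blur. We output the reflection on its own, apply a Gaussian blur, and then combine it with the original scene buffer. The code: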

```csharp
private void OnRenderImage(RenderTexture src, RenderTexture dst)
{
    if (combineMat != null)
    {
        // Copy of the original scene for the final combine
        RenderTexture gbuffer3 = RenderTexture.GetTemporary(src.width, src.height, 0);
        Graphics.Blit(src, gbuffer3);

        // Reflection pass: writes the reflection into dst
        if (reflectMat == null)
        {
            Graphics.Blit(src, dst);
        }
        else
        {
            reflectMat.SetFloat("_MaxLength", maxLength);
            reflectMat.SetFloat("EPSION", epsion);
            reflectMat.SetFloat("_StepSize", stepSize);
            reflectMat.SetMatrix("_NormalMatrix", Camera.current.worldToCameraMatrix);
            Graphics.Blit(src, dst, reflectMat, 0);
        }

        if (blurMat != null)
        {
            // Downsampled, separable Gaussian blur of the reflection
            int rtW = src.width / downSample;
            int rtH = src.height / downSample;
            RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);
            buffer0.filterMode = FilterMode.Bilinear;
            Graphics.Blit(dst, buffer0);
            for (int i = 0; i < iterations; i++)
            {
                blurMat.SetFloat("_BlurSize", 1.0f + i * blurSpread);
                RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);
                Graphics.Blit(buffer0, buffer1, blurMat, 0); // vertical pass
                RenderTexture.ReleaseTemporary(buffer0);
                buffer0 = buffer1;
                buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);
                Graphics.Blit(buffer0, buffer1, blurMat, 1); // horizontal pass
                RenderTexture.ReleaseTemporary(buffer0);
                buffer0 = buffer1;
            }
            // Blurred reflection -> src, then add it to the saved scene
            Graphics.Blit(buffer0, src);
            RenderTexture.ReleaseTemporary(buffer0);
            combineMat.SetTexture("_gbuffer3", gbuffer3);
            Graphics.Blit(src, dst, combineMat);
        }
        // Released outside the blur branch so it doesn't leak when blurMat is null
        RenderTexture.ReleaseTemporary(gbuffer3);
    }
    else
    {
        Graphics.Blit(src, dst);
    }
}
```

The blur shader (vertical and horizontal passes):

```hlsl
SubShader {
    CGINCLUDE
    #include "UnityCG.cginc"

    sampler2D _MainTex;
    half4 _MainTex_TexelSize;
    float _BlurSize;

    struct v2f {
        float4 pos : SV_POSITION;
        half2 uv[5] : TEXCOORD0;
    };

    v2f vertBlurVertical(appdata_img v) {
        v2f o;
        o.pos = UnityObjectToClipPos(v.vertex);
        half2 uv = v.texcoord;
        // Center tap plus two taps on each side along y
        o.uv[0] = uv;
        o.uv[1] = uv + float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;
        o.uv[2] = uv - float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;
        o.uv[3] = uv + float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;
        o.uv[4] = uv - float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;
        return o;
    }

    v2f vertBlurHorizontal(appdata_img v) {
        v2f o;
        o.pos = UnityObjectToClipPos(v.vertex);
        half2 uv = v.texcoord;
        // Center tap plus two taps on each side along x
        o.uv[0] = uv;
        o.uv[1] = uv + float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;
        o.uv[2] = uv - float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;
        o.uv[3] = uv + float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;
        o.uv[4] = uv - float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;
        return o;
    }

    fixed4 fragBlur(v2f i) : SV_Target {
        // Weights for the center, +-1 and +-2 taps
        float weight[3] = {0.4026, 0.2442, 0.0545};
        fixed3 sum = tex2D(_MainTex, i.uv[0]).rgb * weight[0];
        for (int it = 1; it < 3; it++) {
            sum += tex2D(_MainTex, i.uv[it*2-1]).rgb * weight[it];
            sum += tex2D(_MainTex, i.uv[it*2]).rgb * weight[it];
        }
        return fixed4(sum, 1.0);
    }
    ENDCG

    ZTest Always Cull Off ZWrite Off

    Pass {
        NAME "GAUSSIAN_BLUR_VERTICAL"
        CGPROGRAM
        #pragma vertex vertBlurVertical
        #pragma fragment fragBlur
        ENDCG
    }

    Pass {
        NAME "GAUSSIAN_BLUR_HORIZONTAL"
        CGPROGRAM
        #pragma vertex vertBlurHorizontal
        #pragma fragment fragBlur
        ENDCG
    }
}
```

The combine shader:

```hlsl
SubShader
{
    // No culling or depth
    Cull Off ZWrite Off ZTest Always

    Pass
    {
        CGPROGRAM
        #pragma vertex vert
        #pragma fragment frag
        #include "UnityCG.cginc"

        sampler2D _gbuffer3;

        struct appdata
        {
            float4 vertex : POSITION;
            float2 uv : TEXCOORD0;
        };

        struct v2f
        {
            float2 uv : TEXCOORD0;
            float4 vertex : SV_POSITION;
        };

        v2f vert (appdata v)
        {
            v2f o;
            o.vertex = UnityObjectToClipPos(v.vertex);
            o.uv = v.uv;
            return o;
        }

        sampler2D _MainTex;

        fixed4 frag (v2f i) : SV_Target
        {
            fixed4 col = tex2D(_MainTex, i.uv);  // blurred reflection
            fixed4 ref = tex2D(_gbuffer3, i.uv); // original scene
            return col + ref;
        }
        ENDCG
    }
}
```

With a texture added, the result is shown in the figure.
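The three weights in `fragBlur` appear to be a 5-tap Gaussian with σ = 1, normalized over the five taps. That is my inference from the numbers, not something stated in the post; the Python sketch below reproduces them. `gaussian_weights` is an illustrative helper. Because a 2D Gaussian is separable, running the vertical pass followed by the horizontal pass is equivalent to one 5×5 Gaussian kernel.

```python
import math

def gaussian_weights(radius, sigma):
    """Normalized 1D Gaussian weights for offsets 0..radius (symmetric taps share a weight)."""
    raw = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(radius + 1)]
    total = raw[0] + 2 * sum(raw[1:])  # center tap once, each side tap twice
    return [w / total for w in raw]

weights = gaussian_weights(2, 1.0)
rounded = [round(w, 4) for w in weights]
five_tap_sum = weights[0] + 2 * (weights[1] + weights[2])
```

Changing `sigma` or `radius` gives weights for wider kernels, at the cost of more taps in the vertex and fragment shaders.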

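It's finally somewhat passable. Next, let's tie the amount of blur to the reflection distance: store the ray-trace distance in the alpha channel, and use the blurred alpha to interpolate between the original reflection and the blurred one: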

```csharp
private void OnRenderImage(RenderTexture src, RenderTexture dst)
{
    if (combineMat != null)
    {
        RenderTexture original_scene = RenderTexture.GetTemporary(src.width, src.height, 0);
        Graphics.Blit(src, original_scene);
        // Reflection before blurring; its alpha holds the trace distance
        RenderTexture b4_blur_refl = RenderTexture.GetTemporary(src.width, src.height, 0, RenderTextureFormat.ARGB32);
        if (reflectMat == null)
        {
            Graphics.Blit(src, dst);
        }
        else
        {
            reflectMat.SetFloat("_MaxLength", maxLength);
            reflectMat.SetFloat("EPSION", epsion);
            reflectMat.SetFloat("_StepSize", stepSize);
            reflectMat.SetMatrix("_NormalMatrix", Camera.current.worldToCameraMatrix);
            Graphics.Blit(src, b4_blur_refl, reflectMat, 0);
        }

        if (blurMat != null)
        {
            int rtW = b4_blur_refl.width / downSample;
            int rtH = b4_blur_refl.height / downSample;
            RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0, RenderTextureFormat.ARGB32);
            buffer0.filterMode = FilterMode.Bilinear;
            Graphics.Blit(b4_blur_refl, buffer0);
            for (int i = 0; i < iterations; i++)
            {
                blurMat.SetFloat("_BlurSize", 1.0f + i * blurSpread);
                RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0, RenderTextureFormat.ARGB32);
                Graphics.Blit(buffer0, buffer1, blurMat, 0);
                RenderTexture.ReleaseTemporary(buffer0);
                buffer0 = buffer1;
                // Same format as the other blur buffers
                buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0, RenderTextureFormat.ARGB32);
                Graphics.Blit(buffer0, buffer1, blurMat, 1);
                RenderTexture.ReleaseTemporary(buffer0);
                buffer0 = buffer1;
            }
            RenderTexture after_blur_refl = RenderTexture.GetTemporary(buffer0.width, buffer0.height, 0, RenderTextureFormat.ARGB32);
            Graphics.Blit(buffer0, after_blur_refl);
            RenderTexture.ReleaseTemporary(buffer0);

            combineMat.SetTexture("_b4Blur", b4_blur_refl);
            combineMat.SetTexture("_gbuffer3", original_scene);
            Graphics.Blit(after_blur_refl, dst, combineMat);
            RenderTexture.ReleaseTemporary(after_blur_refl);
        }
        // Released outside the blur branch so nothing leaks when blurMat is null
        RenderTexture.ReleaseTemporary(original_scene);
        RenderTexture.ReleaseTemporary(b4_blur_refl);
    }
    else
    {
        Graphics.Blit(src, dst);
    }
}
```

The DDA loop now also records how far along the ray it got:

```hlsl
for (int i = 0; i < MAX_IT_COUNT && !intersect; ++i)
{
    p += dp;
    k += dk;
    zb = -1 / k;
    hitPixel = permute ? p.yx : p;
    // Scalar progress along the ray, written to the alpha channel later
    // (traceDist is assumed to be a float declared outside the loop)
    traceDist = i * _StepSize * invdx / rayLength;
    intersect = isIntersectWithDepth(zb, normal, hitPixel);
}
```

The blur now carries the alpha channel along:

```hlsl
fixed4 fragBlur(v2f i) : SV_Target {
    float weight[3] = {0.4026, 0.2442, 0.0545};
    fixed4 col = tex2D(_MainTex, i.uv[0]);
    fixed4 sum = col * weight[0];
    for (int it = 1; it < 3; it++) {
        sum += tex2D(_MainTex, i.uv[it*2-1]) * weight[it];
        sum += tex2D(_MainTex, i.uv[it*2]) * weight[it];
    }
    return sum; // rgb and alpha (trace distance) are both blurred
}
```

And the combine pass blends the two reflections by the blurred alpha:

```hlsl
fixed4 frag (v2f i) : SV_Target
{
    fixed4 col_after = tex2D(_MainTex, i.uv); // blurred reflection
    fixed4 col_b4 = tex2D(_b4Blur, i.uv);     // unblurred reflection
    fixed4 ref = tex2D(_gbuffer3, i.uv);      // original scene
    // saturate() clamps the blend factor to [0, 1]
    return fixed4(ref.rgb + lerp(col_b4.rgb, col_after.rgb, saturate(col_after.a * 2)), 1);
}
```

But at least it looks like a reflection now! The post has somehow gotten quite long already, and there are still many cases I haven't covered and many good algorithms I haven't tried. I'll take it one step at a time; to be continued…