Installing Azkaban Plugins

How to Install Plugins in Azkaban (Part 2)

Fayson's GitHub: https://github.com/fayson/cdhproject

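1. Purpose of This Document

Earlier articles by Fayson covered "How to Build and Install the Azkaban Service", "How to Build the Azkaban Plugins", and "How to Install the HDFS Plugin in Azkaban and Integrate It with CDH". Besides HDFS, Azkaban supports other plugins, including JobType, HadoopSecurityManager, JobSummary, and Reportal. Since the HDFS plugin and its integration with a CDH cluster have already been covered, this article describes how to install and use the JobType, JobSummary, and Reportal plugins.

- Overview

1. JobSummary plugin installation

2. Jobtypes plugin installation

3. Reportal plugin installation

- Test environment

1. Red Hat 7.2

2. All operations are performed as the root user

3. Azkaban version 3.43.0

2. JobSummary Plugin Installation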

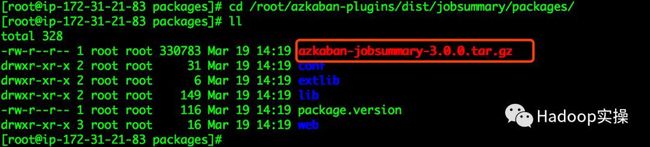

1. An earlier article described how to build the Azkaban plugins. Go to the following directory of the built azkaban-plugins tree:

    [root@ip-172-31-21-83 packages]# cd /root/azkaban-plugins/dist/jobsummary/packages/

2. Copy azkaban-jobsummary-3.0.0.tar.gz to the plugins/viewer directory of the azkaban-web-server deployment, extract it there, and rename the extracted directory to jobsummary

    [root@ip-172-31-21-83 packages]# cp azkaban-jobsummary-3.0.0.tar.gz /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/
    [root@ip-172-31-21-83 packages]# cd /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/
    [root@ip-172-31-21-83 viewer]# tar -zxvf azkaban-jobsummary-3.0.0.tar.gz
    [root@ip-172-31-21-83 viewer]# mv azkaban-jobsummary-3.0.0 jobsummary

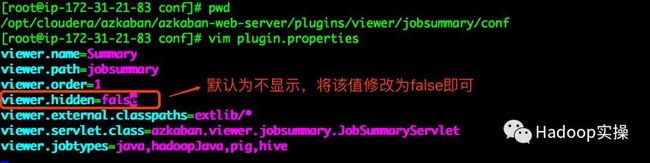
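The copy, extract, and rename sequence in step 2 recurs for each plugin, so it can be wrapped in a small function. This is a minimal sketch, not part of Azkaban: `install_plugin` is a hypothetical helper, and it assumes the archive unpacks to a directory named after the tarball without its `.tar.gz` suffix, as the commands above imply. Unlike the manual steps, it extracts directly into the target directory instead of copying the tarball there first.

```shell
# install_plugin TARBALL PLUGIN_DIR NAME
# Hypothetical helper: unpack TARBALL into PLUGIN_DIR and rename the
# unpacked directory to NAME.
install_plugin() {
  local tarball="$1"     # e.g. azkaban-jobsummary-3.0.0.tar.gz
  local plugin_dir="$2"  # e.g. .../azkaban-web-server/plugins/viewer
  local name="$3"        # e.g. jobsummary
  local unpacked

  # Assumption: the archive's top-level directory is the tarball name
  # minus the .tar.gz suffix.
  unpacked=$(basename "$tarball" .tar.gz)

  # Refuse to clobber an existing installation.
  if [ -e "$plugin_dir/$name" ]; then
    echo "refusing to overwrite $plugin_dir/$name" >&2
    return 1
  fi

  mkdir -p "$plugin_dir" || return 1
  tar -zxf "$tarball" -C "$plugin_dir" || return 1
  mv "$plugin_dir/$unpacked" "$plugin_dir/$name"
}

# Usage with the paths from this article:
#   install_plugin azkaban-jobsummary-3.0.0.tar.gz \
#     /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer jobsummary
```

The overwrite check is a design choice of the sketch: the Jobtypes section below removes the old directory explicitly with `rm -rf`, which is better kept as a deliberate manual step.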

3. Go to the following directory and edit the plugin.properties configuration file

    [root@ip-172-31-21-83 conf]# pwd
    /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/jobsummary/conf
    [root@ip-172-31-21-83 conf]# vim plugin.properties

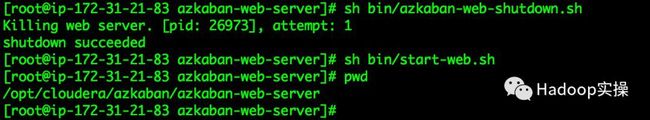

4. After completing the configuration above, restart the azkaban-web-server service

    [root@ip-172-31-21-83 azkaban-web-server]# sh bin/azkaban-web-shutdown.sh
    [root@ip-172-31-21-83 azkaban-web-server]# sh bin/start-web.sh
    [root@ip-172-31-21-83 azkaban-web-server]# pwd
    /opt/cloudera/azkaban/azkaban-web-server

5. Log in to the Azkaban web UI and check that the plugin was installed successfully

3. Jobtypes Plugin Installation

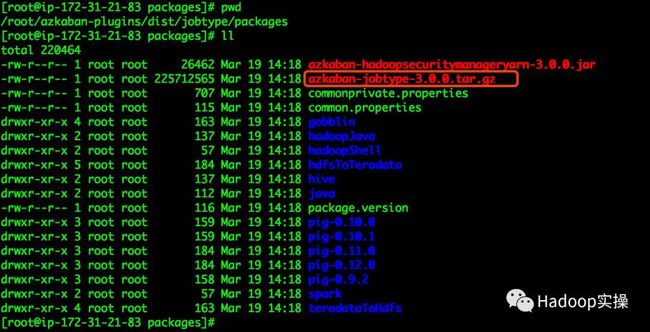

1. Go to the following directory of the built azkaban-plugins tree

    [root@ip-172-31-21-83 packages]# pwd
    /root/azkaban-plugins/dist/jobtype/packages
    [root@ip-172-31-21-83 packages]# ll

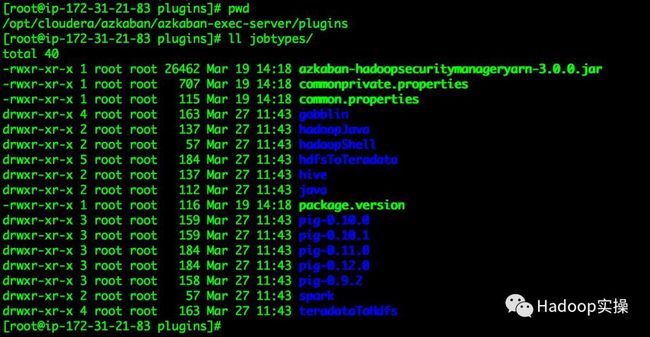

2. Copy the azkaban-jobtype-3.0.0.tar.gz archive from that directory to the plugins directory of the azkaban-executor service, extract it, and rename the extracted directory to jobtypes (any existing jobtypes directory is removed first)

    [root@ip-172-31-21-83 packages]# cp azkaban-jobtype-3.0.0.tar.gz /opt/cloudera/azkaban/azkaban-exec-server/plugins/
    [root@ip-172-31-21-83 packages]# cd /opt/cloudera/azkaban/azkaban-exec-server/plugins/
    [root@ip-172-31-21-83 plugins]# rm -rf jobtypes/
    [root@ip-172-31-21-83 plugins]# tar -zxvf azkaban-jobtype-3.0.0.tar.gz
    [root@ip-172-31-21-83 plugins]# mv azkaban-jobtype-3.0.0 jobtypes

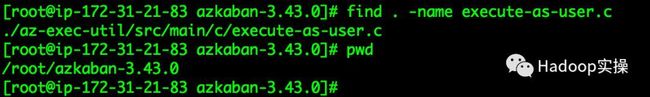

3. Generate the execute-as-user binary (this step can be skipped on a non-secure cluster)

Locate the execute-as-user.c file in the Azkaban source tree

    [root@ip-172-31-21-83 azkaban-3.43.0]# find . -name execute-as-user.c
    ./az-exec-util/src/main/c/execute-as-user.c
    [root@ip-172-31-21-83 azkaban-3.43.0]# pwd
    /root/azkaban-3.43.0

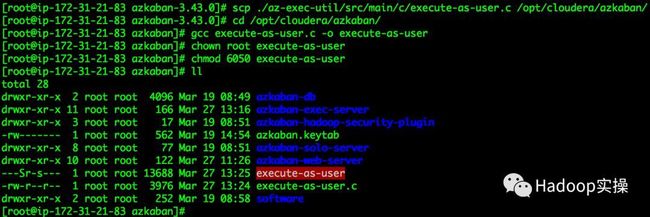

Copy execute-as-user.c to the /opt/cloudera/azkaban directory, then run the following commands in that directory to compile the execute-as-user binary and set its owner and permissions (note: these commands must be run as root)

    [root@ip-172-31-21-83 azkaban-3.43.0]# scp ./az-exec-util/src/main/c/execute-as-user.c /opt/cloudera/azkaban/
    [root@ip-172-31-21-83 azkaban-3.43.0]# cd /opt/cloudera/azkaban/
    [root@ip-172-31-21-83 azkaban]# gcc execute-as-user.c -o execute-as-user
    [root@ip-172-31-21-83 azkaban]# chown root execute-as-user
    [root@ip-172-31-21-83 azkaban]# chmod 6050 execute-as-user
    [root@ip-172-31-21-83 azkaban]# ll

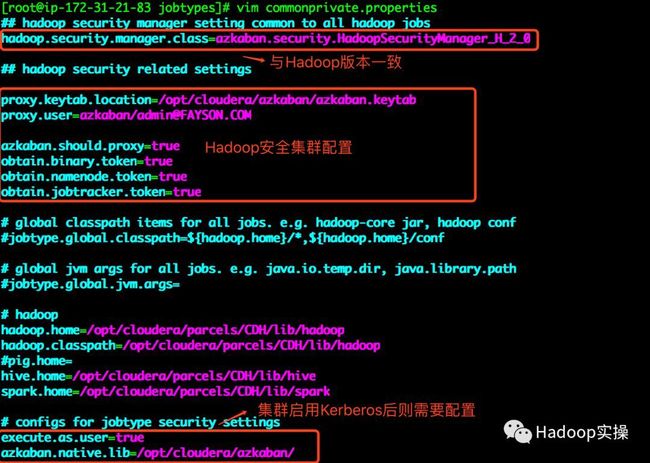
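Mode 6050 sets the setuid and setgid bits (the leading 6), gives the owner no permissions (0), gives the group read and execute (5), and gives others nothing (0), so `ll` shows the file as `---Sr-s---`. A quick way to confirm the result of the `chmod` step is to compare the octal mode reported by `stat`. The sketch below assumes GNU coreutils (`stat -c`), which matches the Red Hat test environment; `has_mode` is a hypothetical helper.

```shell
# has_mode FILE EXPECTED
# Hypothetical helper: succeed only if FILE's octal mode equals EXPECTED.
# stat -c '%a' is the GNU coreutils form; it prints special bits too,
# e.g. 6050 for setuid + setgid + group r-x.
has_mode() {
  local file="$1"
  local expected="$2"
  local actual

  actual=$(stat -c '%a' "$file") || return 2
  [ "$actual" = "$expected" ]
}

# Usage after the build step:
#   has_mode /opt/cloudera/azkaban/execute-as-user 6050 \
#     || echo "unexpected mode on execute-as-user" >&2
```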

4. Edit the commonprivate.properties file to configure the Hadoop environment and cluster security authentication

    [root@ip-172-31-21-83 jobtypes]# vim commonprivate.properties
    ## hadoop security manager setting common to all hadoop jobs
    hadoop.security.manager.class=azkaban.security.HadoopSecurityManager_H_2_0
    ## hadoop security related settings
    proxy.keytab.location=/opt/cloudera/azkaban/azkaban.keytab
    proxy.user=azkaban/admin@FAYSON.COM
    azkaban.should.proxy=true
    obtain.binary.token=true
    obtain.namenode.token=true
    obtain.jobtracker.token=true
    # global classpath items for all jobs. e.g. hadoop-core jar, hadoop conf
    #jobtype.global.classpath=${hadoop.home}/*,${hadoop.home}/conf
    # global jvm args for all jobs. e.g. java.io.temp.dir, java.library.path
    #jobtype.global.jvm.args=
    # hadoop
    hadoop.home=/opt/cloudera/parcels/CDH/lib/hadoop
    hadoop.classpath=/opt/cloudera/parcels/CDH/lib/hadoop
    pig.home=/opt/cloudera/parcels/CDH/lib/pig
    hive.home=/opt/cloudera/parcels/CDH/lib/hive
    spark.home=/opt/cloudera/parcels/CDH/lib/spark
    # configs for jobtype security settings
    execute.as.user=true
    azkaban.native.lib=/opt/cloudera/azkaban/

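Set the HadoopSecurityManager version to match the Hadoop version: use azkaban.security.HadoopSecurityManager_H_1_0 for Hadoop 1.x and azkaban.security.HadoopSecurityManager_H_2_0 for Hadoop 2.x.

Because Kerberos is enabled on the cluster, the proxy settings, such as azkaban.should.proxy, must also be configured.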

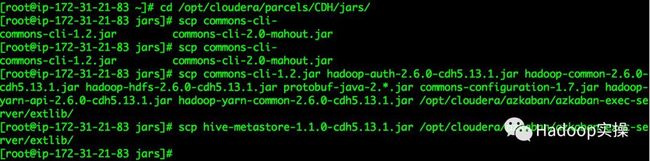
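Since a misspelled or still-commented key in commonprivate.properties is easy to miss, a small check can list which expected keys have no active entry. This is only a sketch: `missing_props` is a hypothetical helper, and the key list in the usage comment is taken from the file shown above, not from an official list of required Azkaban settings.

```shell
# missing_props FILE KEY...
# Hypothetical helper: print each KEY that has no uncommented "KEY=..."
# line in FILE. Commented lines such as "#hive.home=..." do not count,
# because their key field starts with '#'.
missing_props() {
  local file="$1"
  local key
  shift

  for key in "$@"; do
    if ! awk -F= -v k="$key" '
      { name = $1; gsub(/^[ \t]+|[ \t]+$/, "", name) }
      name == k { found = 1 }
      END { exit !found }
    ' "$file"; then
      echo "$key"
    fi
  done
}

# Usage with keys from the file above:
#   missing_props commonprivate.properties \
#     hadoop.security.manager.class proxy.keytab.location proxy.user \
#     azkaban.should.proxy hadoop.home execute.as.user azkaban.native.lib
```

An empty result means every listed key is present and uncommented; it does not validate the values themselves.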

5. Add the Hadoop dependency jars to the extlib directory of azkaban-executor

    [root@ip-172-31-21-83 ~]# cd /opt/cloudera/parcels/CDH/jars/
    [root@ip-172-31-21-83 jars]# scp commons-cli-1.2.jar hadoop-auth-2.6.0-cdh5.13.1.jar hadoop-common-2.6.0-cdh5.13.1.jar hadoop-hdfs-2.6.0-cdh5.13.1.jar protobuf-java-2.*.jar commons-configuration-1.7.jar hadoop-yarn-api-2.6.0-cdh5.13.1.jar hadoop-yarn-common-2.6.0-cdh5.13.1.jar /opt/cloudera/azkaban/azkaban-exec-server/extlib/
    [root@ip-172-31-21-83 jars]# scp hive-metastore-1.1.0-cdh5.13.1.jar /opt/cloudera/azkaban/azkaban-exec-server/extlib/

6. Delete the azkaban-hadoopsecuritymanager-3.0.0.jar dependency from every plugin directory under jobtypes

    [root@ip-172-31-21-83 jobtypes]# rm -rf gobblin/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf hadoopJava/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf hdfsToTeradata/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf hive/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf java/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf pig-0.10.0/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf pig-0.10.1/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf pig-0.11.0/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf pig-0.12.0/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf pig-0.9.2/azkaban-hadoopsecuritymanager-3.0.0.jar
    [root@ip-172-31-21-83 jobtypes]# rm -rf teradataToHdfs/azkaban-hadoopsecuritymanager-3.0.0.jar

Because the Hadoop version is 2.x, the azkaban-hadoopsecuritymanager-3.0.0.jar shipped inside each plugin directory must be deleted.

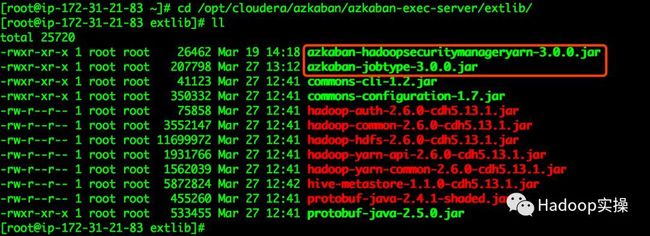
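The eleven `rm` commands in step 6 all delete the same file name one level below jobtypes, so a single `find` can do the same job. The following is a sketch under that assumption; `remove_plugin_jar` is a hypothetical helper. It matches the file name exactly, so a differently named jar such as azkaban-hadoopsecuritymanageryarn-3.0.0.jar is left alone.

```shell
# remove_plugin_jar JOBTYPES_DIR JAR_NAME
# Hypothetical helper: delete JAR_NAME from every immediate subdirectory
# of JOBTYPES_DIR and print each path that was removed.
remove_plugin_jar() {
  local jobtypes_dir="$1"
  local jar_name="$2"

  # -mindepth 2 -maxdepth 2 restricts the search to <plugin>/<file>,
  # mirroring the per-plugin rm commands.
  find "$jobtypes_dir" -mindepth 2 -maxdepth 2 -type f \
    -name "$jar_name" -print -delete
}

# Usage with the path from this article:
#   remove_plugin_jar /opt/cloudera/azkaban/azkaban-exec-server/plugins/jobtypes \
#     azkaban-hadoopsecuritymanager-3.0.0.jar
```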

7. Copy azkaban-hadoopsecuritymanageryarn-3.0.0.jar and azkaban-jobtype-3.0.0.jar to the ${AZKABAN-EXECUTOR-HOME}/extlib directory

8. After completing the configuration above, restart the azkaban-executor service

    [root@ip-172-31-21-83 azkaban-exec-server]# sh bin/azkaban-executor-shutdown.sh
    [root@ip-172-31-21-83 azkaban-exec-server]# sh bin/start-exec.sh

9. Verify that the executor loaded the plugins successfully

    [root@ip-172-31-21-83 azkaban-exec-server]# pwd
    /opt/cloudera/azkaban/azkaban-exec-server
    [root@ip-172-31-21-83 azkaban-exec-server]# grep 'Loaded jobtype' logs/executorServerLog__2018-03-27+13\:38\:04.out
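
The log file name in the check above embeds the executor's start time, so it changes on every restart. A minimal sketch that picks the most recently modified executor log instead of a hard-coded name is shown below; `loaded_jobtypes` is a hypothetical helper, and it assumes the `executorServerLog__*.out` naming seen in this article.

```shell
# loaded_jobtypes LOG_DIR
# Hypothetical helper: print the 'Loaded jobtype' lines from the newest
# executorServerLog__*.out file in LOG_DIR.
loaded_jobtypes() {
  local log_dir="$1"
  local latest

  # ls -t sorts newest first; acceptable here because these log names
  # contain no whitespace.
  latest=$(ls -t "$log_dir"/executorServerLog__*.out 2>/dev/null | head -n 1)
  if [ -z "$latest" ]; then
    echo "no executor log found in $log_dir" >&2
    return 1
  fi

  grep 'Loaded jobtype' "$latest"
}

# Usage from the azkaban-exec-server directory:
#   loaded_jobtypes logs
```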

4. Reportal Plugin Installation

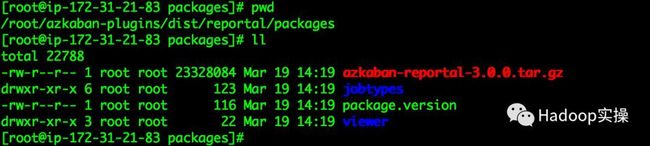

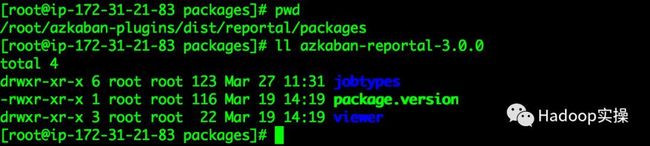

1. Go to the following directory of the built azkaban-plugins tree

    [root@ip-172-31-21-83 packages]# pwd
    /root/azkaban-plugins/dist/reportal/packages
    [root@ip-172-31-21-83 packages]# ll

2. Extract the azkaban-reportal-3.0.0.tar.gz archive in the current directory

    [root@ip-172-31-21-83 packages]# tar -zxvf azkaban-reportal-3.0.0.tar.gz

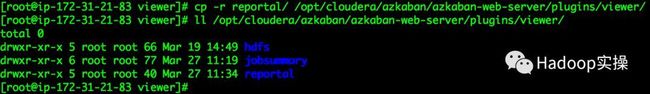

3. Copy the reportal directory under azkaban-reportal-3.0.0/viewer to the plugins/viewer directory of the azkaban-web-server service

    [root@ip-172-31-21-83 viewer]# cp -r reportal/ /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/
    [root@ip-172-31-21-83 viewer]# ll /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/

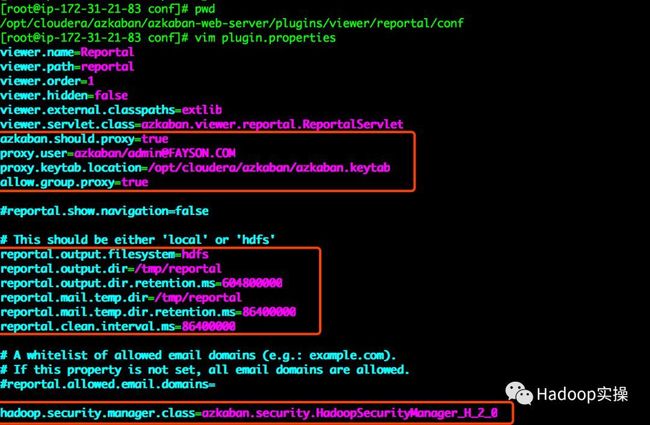

4. Edit the plugin.properties file in the azkaban-web-server/plugins/viewer/reportal/conf directory so that it contains the following:

    [root@ip-172-31-21-83 conf]# vim plugin.properties
    viewer.name=Reportal
    viewer.path=reportal
    viewer.order=1
    viewer.hidden=false
    viewer.external.classpaths=extlib
    viewer.servlet.class=azkaban.viewer.reportal.ReportalServlet
    azkaban.should.proxy=true
    proxy.user=azkaban/admin@FAYSON.COM
    proxy.keytab.location=/opt/cloudera/azkaban/azkaban.keytab
    allow.group.proxy=true
    #reportal.show.navigation=false
    # This should be either 'local' or 'hdfs'
    reportal.output.filesystem=hdfs
    reportal.output.dir=/tmp/reportal
    reportal.output.dir.retention.ms=604800000
    reportal.mail.temp.dir=/tmp/reportal
    reportal.mail.temp.dir.retention.ms=86400000
    reportal.clean.interval.ms=86400000
    # A whitelist of allowed email domains (e.g.: example.com).
    # If this property is not set, all email domains are allowed.
    #reportal.allowed.email.domains=
    hadoop.security.manager.class=azkaban.security.HadoopSecurityManager_H_2_0
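
The retention and interval values in this file are given in milliseconds, which is hard to read at a glance. The arithmetic below converts them to days; `ms_to_days` is a hypothetical helper used only for illustration.

```shell
# ms_to_days MS
# Hypothetical helper: convert milliseconds to whole days.
# 86400000 ms = 24 h * 3600 s * 1000 ms.
ms_to_days() {
  echo $(( $1 / 86400000 ))
}

ms_to_days 604800000   # reportal.output.dir.retention.ms -> prints 7
ms_to_days 86400000    # reportal.mail.temp.dir.retention.ms and
                       # reportal.clean.interval.ms -> prints 1
```

So, with the values shown, output is retained for 7 days, while the mail temp retention and the cleanup interval are both 1 day.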

5. Replace the dependency jar in the lib directory of the Reportal plugin under the azkaban-web-server service

    [root@ip-172-31-21-83 lib]# pwd
    /opt/cloudera/azkaban/azkaban-web-server/plugins/viewer/hdfs/lib
    [root@ip-172-31-21-83 lib]# cp azkaban-hadoopsecuritymanageryarn-3.0.0.jar ../../reportal/lib/
    [root@ip-172-31-21-83 lib]# rm -rf ../../reportal/lib/azkaban-hadoopsecuritymanager-2.2.jar

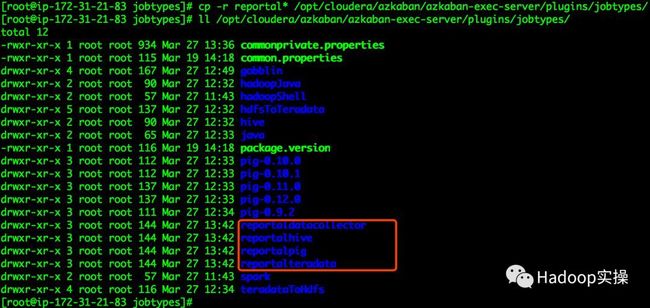

6. Copy all directories under azkaban-reportal-3.0.0/jobtypes to the plugins/jobtypes directory of the azkaban-executor service

    [root@ip-172-31-21-83 jobtypes]# pwd
    /root/azkaban-plugins/dist/reportal/packages/jobtypes
    [root@ip-172-31-21-83 jobtypes]# cp -r reportal* /opt/cloudera/azkaban/azkaban-exec-server/plugins/jobtypes/
    [root@ip-172-31-21-83 jobtypes]# ll /opt/cloudera/azkaban/azkaban-exec-server/plugins/jobtypes/

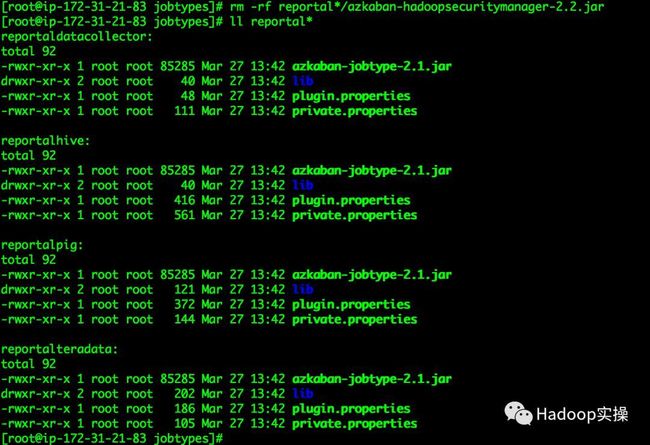

7. Delete the azkaban-hadoopsecuritymanager-2.2.jar dependency from the reportal* directories

    [root@ip-172-31-21-83 jobtypes]# pwd
    /opt/cloudera/azkaban/azkaban-exec-server/plugins/jobtypes
    [root@ip-172-31-21-83 jobtypes]# rm -rf reportal*/azkaban-hadoopsecuritymanager-2.2.jar
    [root@ip-172-31-21-83 jobtypes]# ll reportal*

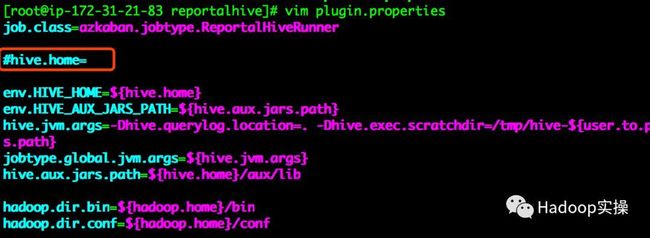

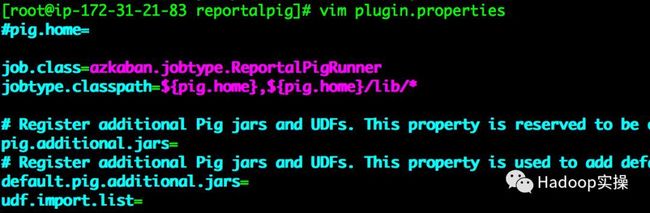

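8. In the plugin.properties files under the reportalhive and reportalpig directories, comment out the home setting of the corresponding component, as shown below:

The Hive and Pig home directories are already configured in commonprivate.properties, so these per-plugin entries must be commented out; otherwise they override the global settings.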

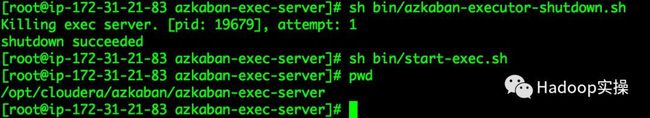
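Commenting out a property can also be scripted rather than done by hand in an editor. The sketch below assumes the keys in question are named `hive.home` and `pig.home`, matching the entries in commonprivate.properties; the actual lines in the Reportal plugin files should be checked before running it. `comment_out_key` is a hypothetical helper and relies on GNU `sed -i`.

```shell
# comment_out_key FILE KEY
# Hypothetical helper: prefix every uncommented "KEY=..." line in FILE
# with '#'. Lines that are already commented are left unchanged.
comment_out_key() {
  local file="$1"
  local key="$2"
  local escaped

  # Escape dots so that "hive.home" does not match e.g. "hivexhome".
  escaped=$(printf '%s' "$key" | sed 's/\./\\./g')

  # GNU sed in-place edit; keeps any leading whitespace before the '#'.
  sed -i "s/^\([[:space:]]*\)\(${escaped}=\)/\1#\2/" "$file"
}

# Usage (key names are assumptions, see above):
#   comment_out_key reportalhive/plugin.properties hive.home
#   comment_out_key reportalpig/plugin.properties pig.home
```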

9. Restart the azkaban-executor service and verify that the plugins were loaded successfully

    [root@ip-172-31-21-83 azkaban-exec-server]# sh bin/azkaban-executor-shutdown.sh
    [root@ip-172-31-21-83 azkaban-exec-server]# sh bin/start-exec.sh

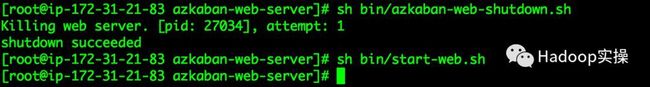

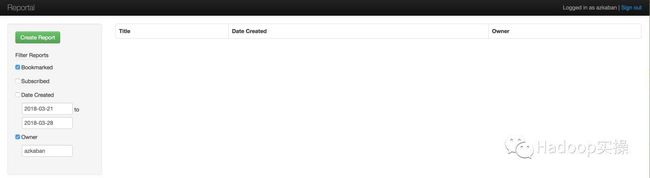

10. Restart the azkaban-web-server service and verify that the Reportal plugin was installed successfully

    [root@ip-172-31-21-83 azkaban-web-server]# sh bin/azkaban-web-shutdown.sh
    [root@ip-172-31-21-83 azkaban-web-server]# sh bin/start-web.sh

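Log in to the azkaban-web-server management UI to check the result.

5. Summary

- When installing a plugin, first determine whether it is a viewer or a jobtype: a viewer is a plugin of the azkaban-web-server service, while a jobtype is a plugin of the azkaban-executor service.

- Dependency jars that every plugin needs can be placed in the extlib directory under the service's home directory.

- In this article, the plugins that depend on Hadoop, Pig, Hive, and related services were configured for this environment. The gobblin, teradata, and similar plugins were not configured; configure them as needed for your own environment.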