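Dopamine Tutorial

Introduction

Background

Existing RL frameworks do not combine flexibility and stability in a way that lets researchers iterate on RL methods effectively, and so explore new research directions that may not have immediately obvious benefits. Google therefore released a TensorFlow-based framework that aims to give RL researchers flexibility, stability, and reproducibility. The release also includes a set of colabs that show how to use the framework.

The code is compact (about 15 Python files). This is achieved by focusing on the Arcade Learning Environment (a mature, well-understood benchmark) and four value-based agents: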

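- DQN: DeepMind's Deep Q-Network, which uses a deep neural network to approximate the Q-function in Q-learning

- C51

- A carefully curated, simplified variant of the Rainbow agent

- The Implicit Quantile Network (IQN) agent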
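All four agents are value-based, so each learns from some form of bootstrapped value target. As a minimal sketch of the idea behind DQN only (not Dopamine's implementation), the one-step Q-learning target can be written in plain Python. The function name `td_target` and the example transition are made up for illustration; the default `gamma=0.99` matches the value printed in the logs later in this tutorial.

```python
def td_target(reward, next_q_values, terminal, gamma=0.99):
    """One-step Q-learning target: r + gamma * max_a' Q_target(s', a').

    next_q_values holds the target network's Q-value estimates for the next
    state, one entry per action. For terminal transitions the bootstrap term
    is dropped and the target is just the reward.
    """
    if terminal:
        return reward
    return reward + gamma * max(next_q_values)


# Hypothetical transition with three actions.
target = td_target(reward=1.0, next_q_values=[0.5, 2.0, 1.0], terminal=False)
print(target)
```

The network is then trained to move Q(s, a) towards this target; C51, Rainbow and IQN replace the scalar target with a distribution over returns.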

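For new researchers, it is important to be able to benchmark ideas quickly against established methods. For this reason, the framework's authors provide the full training data of the four agents on the 60 games supported by the Arcade Learning Environment. The data is available as Python pickle files (for agents trained with Dopamine) and as JSON data files (for comparison with agents trained in other frameworks). There is also a website where you can quickly view the training runs of all agents on the 60 games.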

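Usage on Ubuntu + Python 2.7 + TensorFlow

The Dopamine project

1: Set up the base environment

See the basic setup method.

2: Dopamine dependencies

TensorFlow was already installed in the previous step. Next, follow the steps from the project's instructions one by one.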


source activate easytensor27

sudo apt-get update && sudo apt-get install cmake zlib1g-dev

pip install absl-py atari-py gin-config gym opencv-python

git clone https://github.com/google/dopamine.git

pwd

ls

cd dopamine/

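3: Testing

3.1: Basic test

When a program runs in a shell, the shell provides it with a set of environment variables. export adds, modifies, or removes environment variables for programs run afterwards, and its effect lasts only for the current login session. Here it appends the current directory to PYTHONPATH so that the dopamine package can be imported.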

(easytensor27) chen@chen-ThinkStation-D30:~/dopamine$ export PYTHONPATH=${PYTHONPATH}:.
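The inheritance that export relies on can be sketched in Python: a child process sees the variables it is handed at start-up, and nothing flows back to the parent. This is only an illustration of the mechanism; `DOPAMINE_DEMO_VAR` and `run_child` are made-up names, not part of Dopamine.

```python
import os
import subprocess
import sys


def run_child(code, extra_env=None):
    """Run `code` in a new Python process and return what it prints."""
    env = dict(os.environ)        # the child starts from a copy of our environment
    env.update(extra_env or {})   # plus any variables set just for the child
    output = subprocess.check_output([sys.executable, "-c", code], env=env,
                                     universal_newlines=True)
    return output.strip()


# DOPAMINE_DEMO_VAR is a made-up name used only for this illustration.
child_code = "import os; print(os.environ.get('DOPAMINE_DEMO_VAR', 'unset'))"
seen_by_child = run_child(child_code, {"DOPAMINE_DEMO_VAR": "hello"})
print(seen_by_child)

# The variable was set only for the child; the parent's environment is untouched.
print("DOPAMINE_DEMO_VAR" in os.environ)
```

PYTHONPATH works the same way: the Python interpreter started by the next command reads it from its inherited environment and adds the listed directories to its module search path.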

(easytensor27) chen@chen-ThinkStation-D30:~/dopamine$ python tests/atari_init_test.py

2018-09-06 14:51:45.927207: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX

2018-09-06 14:51:46.254906: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1392] Found device 0 with properties:

name: Quadro K5200 major: 3 minor: 5 memoryClockRate(GHz): 0.771

pciBusID: 0000:05:00.0

totalMemory: 7.43GiB freeMemory: 6.93GiB

2018-09-06 14:51:46.254964: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1471] Adding visible gpu devices: 0

2018-09-06 14:51:53.170582: I tensorflow/core/common_runtime/gpu/gpu_device.cc:952] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-09-06 14:51:53.170646: I tensorflow/core/common_runtime/gpu/gpu_device.cc:958] 0

2018-09-06 14:51:53.170661: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0: N

2018-09-06 14:51:53.171022: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1084] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 6707 MB memory) -> physical GPU (device: 0, name: Quadro K5200, pci bus id: 0000:05:00.0, compute capability: 3.5)

I0906 14:51:53.344151 140619312887552 tf_logging.py:115] Creating DQNAgent agent with the following parameters:

I0906 14:51:53.344964 140619312887552 tf_logging.py:115] gamma: 0.990000

I0906 14:51:53.345103 140619312887552 tf_logging.py:115] update_horizon: 1.000000

I0906 14:51:53.345235 140619312887552 tf_logging.py:115] min_replay_history: 20000

I0906 14:51:53.345361 140619312887552 tf_logging.py:115] update_period: 4

I0906 14:51:53.345479 140619312887552 tf_logging.py:115] target_update_period: 8000

I0906 14:51:53.345597 140619312887552 tf_logging.py:115] epsilon_train: 0.010000

I0906 14:51:53.345712 140619312887552 tf_logging.py:115] epsilon_eval: 0.001000

I0906 14:51:53.345829 140619312887552 tf_logging.py:115] epsilon_decay_period: 250000

I0906 14:51:53.345943 140619312887552 tf_logging.py:115] tf_device: /gpu:0

I0906 14:51:53.346056 140619312887552 tf_logging.py:115] use_staging: True

I0906 14:51:53.346170 140619312887552 tf_logging.py:115] optimizer:

I0906 14:51:53.359112 140619312887552 tf_logging.py:115] Creating a OutOfGraphReplayBuffer replay memory with the following parameters:

I0906 14:51:53.359287 140619312887552 tf_logging.py:115] observation_shape: 84

I0906 14:51:53.359469 140619312887552 tf_logging.py:115] stack_size: 4

I0906 14:51:53.359643 140619312887552 tf_logging.py:115] replay_capacity: 100

I0906 14:51:53.359824 140619312887552 tf_logging.py:115] batch_size: 32

I0906 14:51:53.359994 140619312887552 tf_logging.py:115] update_horizon: 1

I0906 14:51:53.360157 140619312887552 tf_logging.py:115] gamma: 0.990000

I0906 14:51:55.830522 140619312887552 tf_logging.py:115] Beginning training...

W0906 14:51:55.830992 140619312887552 tf_logging.py:125] num_iterations (0) < start_iteration(0)

..

----------------------------------------------------------------------

Ran 2 tests in 10.286s

OK

3.2: Running a standard Atari 2600 experiment with DQN

Run dopamine/atari/train.py.

The run parameters can be changed by editing dopamine/agents/dqn/configs/dqn.gin.
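A gin file is a plain-text list of bindings. As a rough sketch of the `Class.parameter = value` form, the snippet below parses a few such lines with the standard library only. It is a simplified stand-in, not the gin-config parser, and the sample bindings are an assumption: they reuse the parameter names and values that DQNAgent prints in the log, so check the actual dqn.gin for the real contents.

```python
import ast

# Illustrative bindings only; names and values are taken from the DQNAgent
# parameters printed in the training log, not copied from dqn.gin.
SAMPLE_BINDINGS = """
DQNAgent.gamma = 0.99
DQNAgent.update_period = 4
DQNAgent.target_update_period = 8000  # agent steps
DQNAgent.epsilon_train = 0.01
"""


def parse_bindings(text):
    """Parse simple `Class.parameter = literal` lines into a nested dict.

    Only literal values and `#` comments are handled; real gin files also
    support imports, scopes, macros and references.
    """
    bindings = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        name, value = (part.strip() for part in line.split("=", 1))
        scope, parameter = name.rsplit(".", 1)
        bindings.setdefault(scope, {})[parameter] = ast.literal_eval(value)
    return bindings


bindings = parse_bindings(SAMPLE_BINDINGS)
print(bindings["DQNAgent"]["gamma"])
print(bindings["DQNAgent"]["target_update_period"])
```

Changing a value in the file and rerunning the training command is enough to run with the new setting; no Python code needs to be edited.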

(easytensor27) chen@chen-ThinkStation-D30:~/dopamine$ python -um dopamine.atari.train \

> --agent_name=dqn \

> --base_dir=/tmp/dopamine \

> --gin_files='dopamine/agents/dqn/configs/dqn.gin'

2018-09-06 14:56:43.897947: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX

2018-09-06 14:56:44.063299: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1392] Found device 0 with properties:

name: Quadro K5200 major: 3 minor: 5 memoryClockRate(GHz): 0.771

pciBusID: 0000:05:00.0

totalMemory: 7.43GiB freeMemory: 6.94GiB

2018-09-06 14:56:44.063355: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1471] Adding visible gpu devices: 0

2018-09-06 14:56:44.414463: I tensorflow/core/common_runtime/gpu/gpu_device.cc:952] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-09-06 14:56:44.414538: I tensorflow/core/common_runtime/gpu/gpu_device.cc:958] 0

2018-09-06 14:56:44.414564: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0: N

2018-09-06 14:56:44.414862: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1084] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 6711 MB memory) -> physical GPU (device: 0, name: Quadro K5200, pci bus id: 0000:05:00.0, compute capability: 3.5)

I0906 14:56:44.550671 140445453162240 tf_logging.py:115] Creating DQNAgent agent with the following parameters:

I0906 14:56:44.551160 140445453162240 tf_logging.py:115] gamma: 0.990000

I0906 14:56:44.551281 140445453162240 tf_logging.py:115] update_horizon: 1.000000

I0906 14:56:44.551393 140445453162240 tf_logging.py:115] min_replay_history: 20000

I0906 14:56:44.551502 140445453162240 tf_logging.py:115] update_period: 4

I0906 14:56:44.551608 140445453162240 tf_logging.py:115] target_update_period: 8000

I0906 14:56:44.551712 140445453162240 tf_logging.py:115] epsilon_train: 0.010000

I0906 14:56:44.551840 140445453162240 tf_logging.py:115] epsilon_eval: 0.001000

I0906 14:56:44.551949 140445453162240 tf_logging.py:115] epsilon_decay_period: 250000

I0906 14:56:44.552051 140445453162240 tf_logging.py:115] tf_device: /gpu:0

I0906 14:56:44.552150 140445453162240 tf_logging.py:115] use_staging: True

I0906 14:56:44.552249 140445453162240 tf_logging.py:115] optimizer:

I0906 14:56:44.554611 140445453162240 tf_logging.py:115] Creating a OutOfGraphReplayBuffer replay memory with the following parameters:

I0906 14:56:44.554744 140445453162240 tf_logging.py:115] observation_shape: 84

I0906 14:56:44.554857 140445453162240 tf_logging.py:115] stack_size: 4

I0906 14:56:44.554966 140445453162240 tf_logging.py:115] replay_capacity: 1000000

I0906 14:56:44.555073 140445453162240 tf_logging.py:115] batch_size: 32

I0906 14:56:44.555180 140445453162240 tf_logging.py:115] update_horizon: 1

I0906 14:56:44.555284 140445453162240 tf_logging.py:115] gamma: 0.990000

I0906 14:56:46.071502 140445453162240 tf_logging.py:115] Beginning training...

I0906 14:56:46.071820 140445453162240 tf_logging.py:115] Starting iteration 0
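The log above lists epsilon_train, epsilon_decay_period and min_replay_history. A plain-Python sketch of how these could interact is shown below, assuming a linear decay with a warm-up phase (epsilon stays at 1.0 until min_replay_history steps have passed, then falls linearly to epsilon_train over epsilon_decay_period steps). The function is written for illustration and is not the library code.

```python
def linearly_decaying_epsilon(decay_period, step, warmup_steps, epsilon):
    """Epsilon that stays at 1.0 during warm-up, then decays linearly to `epsilon`."""
    steps_left = decay_period + warmup_steps - step
    bonus = (1.0 - epsilon) * steps_left / float(decay_period)
    # Clip so the result never leaves the range [epsilon, 1.0].
    bonus = min(max(bonus, 0.0), 1.0 - epsilon)
    return epsilon + bonus


# Values from the log: epsilon_decay_period=250000, min_replay_history=20000,
# epsilon_train=0.01.
for step in (0, 20000, 145000, 270000, 1000000):
    print(step, linearly_decaying_epsilon(250000, step, 20000, 0.01))
```

Under these assumptions the agent acts fully at random while the replay buffer fills, and reaches its final exploration rate after 270000 training steps.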

Downloaded the six related papers (file names follow the pattern method_author_year).

Documentation

https://github.com/google/dopamine/tree/master/docs

Colabs

https://github.com/google/dopamine/tree/master/dopamine/colab

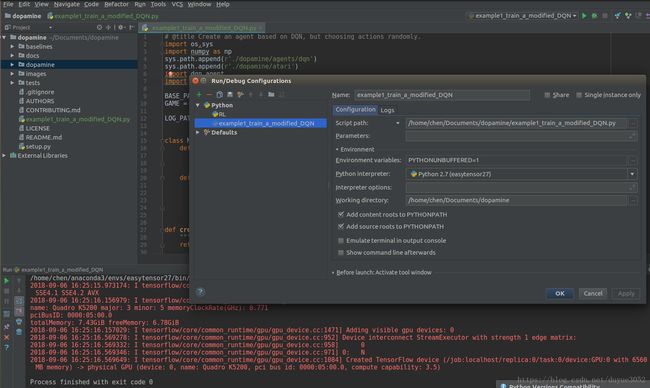

Experiment 1

The code is as follows:

# @title Create an agent based on DQN, but choosing actions randomly.
import os
import sys

import numpy as np

# Make the cloned repository importable.
sys.path.append(r'./dopamine')

from dopamine.agents.dqn import dqn_agent
from dopamine.atari import run_experiment
from dopamine.colab import utils as colab_utils

BASE_PATH = '/tmp/colab_dope_run'
GAME = 'Asterix'
LOG_PATH = os.path.join(BASE_PATH, 'random_dqn', GAME)

class MyRandomDQNAgent(dqn_agent.DQNAgent):

  def __init__(self, sess, num_actions):
    """This maintains all the DQN default argument values."""
    super(MyRandomDQNAgent, self).__init__(sess, num_actions)

  def step(self, reward, observation):
    """Calls the step function of the parent class, but returns a random action.
    """
    _ = super(MyRandomDQNAgent, self).step(reward, observation)
    return np.random.randint(self.num_actions)


def create_random_dqn_agent(sess, environment):
  """The Runner class will expect a function of this type to create an agent."""
  return MyRandomDQNAgent(sess, num_actions=environment.action_space.n)

# Create the runner class with this agent. We use very small numbers of steps
# to terminate quickly, as this is mostly meant for demonstrating how one can
# use the framework. We also explicitly terminate after 110 iterations (instead
# of the standard 200) to demonstrate the plotting of partial runs.
random_dqn_runner = run_experiment.Runner(LOG_PATH,
                                          create_random_dqn_agent,
                                          game_name=GAME,
                                          num_iterations=200,
                                          training_steps=10,
                                          evaluation_steps=10,
                                          max_steps_per_episode=100)

# @title Train MyRandomDQNAgent.
print('Will train agent, please be patient, may be a while...')
random_dqn_runner.run_experiment()
print('Done training!')

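# @title Load the training logs.
random_dqn_data = colab_utils.read_experiment(LOG_PATH, verbose=True)
random_dqn_data['agent'] = 'MyRandomDQN'
random_dqn_data['run_number'] = 1
# NOTE: experimental_data is not defined anywhere in this listing. It must be
# loaded beforehand with the baseline results for GAME (the original colab
# does this in an earlier cell); otherwise this line raises a NameError.
experimental_data[GAME] = experimental_data[GAME].merge(random_dqn_data,
                                                        how='outer')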

# @title Plot training results.
import seaborn as sns
import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(16,8))
# sns.tsplot is only available in older seaborn releases; newer releases
# replaced it with sns.lineplot.
sns.tsplot(data=experimental_data[GAME], time='iteration', unit='run_number',
           condition='agent', value='train_episode_returns', ax=ax)
plt.title(GAME)
plt.show()
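The key pattern in MyRandomDQNAgent is that step() still calls the parent implementation, so the parent's bookkeeping keeps running, and only the returned action is replaced. The same pattern can be tried without TensorFlow or Dopamine. In the sketch below, `GreedyAgent` and `RandomAgent` are toy classes invented for illustration, not Dopamine APIs.

```python
import random


class GreedyAgent(object):
    """Toy stand-in for a value-based agent (not the Dopamine DQNAgent)."""

    def __init__(self, num_actions):
        self.num_actions = num_actions
        self.steps_seen = 0

    def step(self, reward, observation):
        """Record the transition and return the action this agent prefers."""
        self.steps_seen += 1
        return 0  # placeholder for an argmax over Q-values


class RandomAgent(GreedyAgent):
    """Keeps the parent's bookkeeping but replaces the chosen action."""

    def step(self, reward, observation):
        # Call the parent so its internal state is still updated ...
        _ = super(RandomAgent, self).step(reward, observation)
        # ... then ignore its choice and act uniformly at random.
        return random.randrange(self.num_actions)


agent = RandomAgent(num_actions=4)
actions = [agent.step(reward=0.0, observation=None) for _ in range(100)]
print(agent.steps_seen)
print(all(0 <= a < 4 for a in actions))
```

Skipping the call to the parent's step() would leave the parent's counters and buffers untouched, which is why the colab version keeps it even though the returned action is discarded.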