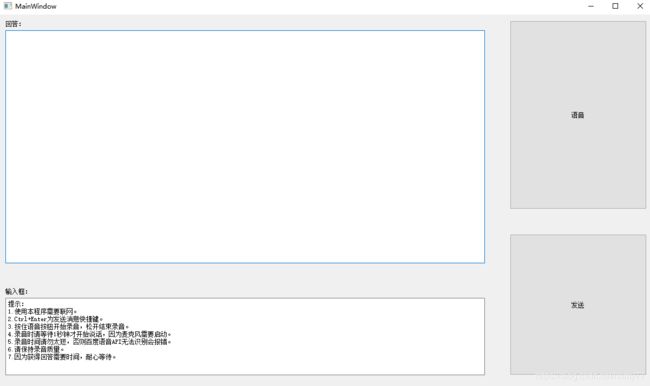

Qt Chatbot (Baidu Speech + Qingyunke API)

Table of Contents

- Demo
- Preparation
- Design
- Features
- Code
  - Text chat code
  - Voice chat code
- Some details
- Possible improvements

The code is on GitHub: https://github.com/HMY777/RobotChat

Demo

Preparation

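- The Tuling robot allows only 100 requests per day, which is nowhere near enough for chatting, so I switched to the Qingyunke API, which has no limit.

- Speech recognition and synthesis use Baidu Speech, which is convenient and fast to integrate.

All you need to know is how to do HTTP GET and POST requests in Qt.

Qingyunke API documentation

Baidu Speech API documentation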

Design

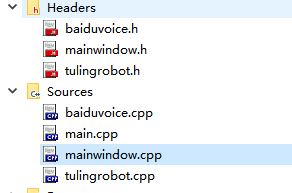

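This was a computer networks course project with a tight deadline, so I kept it very simple (honestly, my skills are limited too). All the logic lives in MainWindow.cpp, and these are the only classes: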

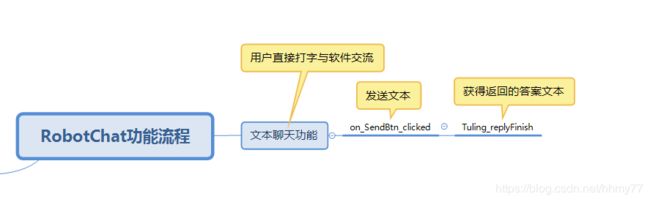

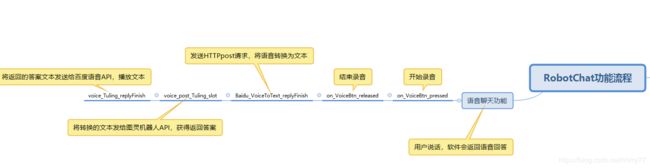

Features


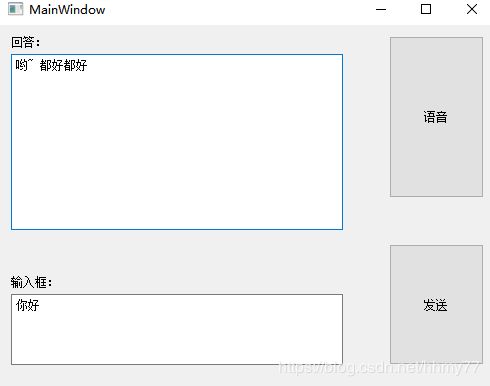

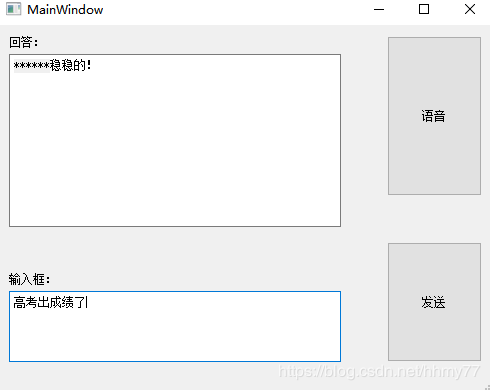

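Text chat

This only involves the chatbot API (now Qingyunke; the function names still say Tuling because the project originally used the Tuling robot). The flow is:

- Type a message and press the Send button
- The slot sends a GET request to the API (see the official API documentation)
- The slot connected to the reply parses the returned JSON and displays the answer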

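Voice chat

- Record audio

- Recognize the speech with the Baidu API to get text

- Send the text to the chatbot (called Tuling in the code) to get a reply

- Display the reply and play it as audio through the Baidu speech synthesis API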

Below is the code. Some requests suit GET better and others suit POST better; pick whichever fits the case.

Differences between HTTP GET and POST

Code

Text chat code

A GET request is enough for the Qingyunke API.

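```cpp
// Text chat: send the typed message directly
void MainWindow::on_SendBtn_clicked()
{
    // Percent-encode the message so characters such as &, # or + cannot break the query string
    QString Url = "http://api.qingyunke.com/api.php?key=free&appid=0&msg="
                  + QString::fromUtf8(QUrl::toPercentEncoding(ui->InputTextEdit->toPlainText()));
    QUrl url;
    url.setUrl(Url);
    QNetworkRequest request(url);
    QNetworkAccessManager *manager = new QNetworkAccessManager(this);
    connect(manager, SIGNAL(finished(QNetworkReply*)), this, SLOT(Tuling_replyFinish(QNetworkReply*)));
    // Free the manager once the request finishes; otherwise one leaks per click
    connect(manager, SIGNAL(finished(QNetworkReply*)), manager, SLOT(deleteLater()));
    manager->get(request);
}
```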
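Percent-encoding matters here because the message is pasted into the query string: a raw `&`, `#` or `+` in the user's text would change how the server splits the URL. A minimal standard C++ sketch of what the encoding does, assuming RFC 3986 rules (Qt's `QUrl::toPercentEncoding` keeps the same unreserved set `A-Z a-z 0-9 - . _ ~` by default); `percentEncode` and `qingyunkeUrl` are hypothetical helper names, not part of the project:

```cpp
#include <string>

// Percent-encode a UTF-8 string for use as a URL query value (RFC 3986):
// unreserved characters are kept, every other byte becomes %XX.
std::string percentEncode(const std::string &utf8)
{
    static const char *hex = "0123456789ABCDEF";
    std::string out;
    for (unsigned char c : utf8) {
        bool unreserved = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z') ||
                          (c >= '0' && c <= '9') || c == '-' || c == '.' ||
                          c == '_' || c == '~';
        if (unreserved) {
            out += static_cast<char>(c);
        } else {
            out += '%';
            out += hex[c >> 4];    // high nibble
            out += hex[c & 0x0F];  // low nibble
        }
    }
    return out;
}

// Build the Qingyunke GET URL (key=free and appid=0 as used in this article).
std::string qingyunkeUrl(const std::string &msg)
{
    return "http://api.qingyunke.com/api.php?key=free&appid=0&msg=" + percentEncode(msg);
}
```

For example, the two characters 你好 become `%E4%BD%A0%E5%A5%BD`, and `a&b=c` becomes `a%26b%3Dc`, so the server sees a single `msg` value.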

The corresponding slot is below. For a more robust version, add a few more `if` checks to handle errors.

```cpp
// Parse the JSON returned by the chatbot API (named Tuling from the project's original version)
void MainWindow::Tuling_replyFinish(QNetworkReply *reply)
{
    // Read the raw bytes: QJsonDocument::fromJson expects UTF-8 bytes, so no QString round trip is needed
    QByteArray data = reply->readAll();
    reply->deleteLater();
    qDebug() << "Returned result:" << data;
    if (reply->error() != QNetworkReply::NoError) {
        qDebug() << "Request failed:" << reply->errorString();
        return;
    }
    QJsonParseError json_error;
    QJsonDocument json = QJsonDocument::fromJson(data, &json_error);
    // Check for parse errors, then take the "content" field
    if (json_error.error == QJsonParseError::NoError && json.isObject()) {
        QJsonValue text_value = json.object().value("content");
        if (text_value.isString()) {
            tuling_get_ans = text_value.toString();
            // Qingyunke marks line breaks as {br}; replacing just "br" would also mangle words like "brown"
            tuling_get_ans.replace("{br}", "\n");
            qDebug() << "Answer:" << tuling_get_ans;
        }
    }
    ui->OutputTextEdit->setText(tuling_get_ans);
}
```
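The reply text uses `{br}` as its line-break marker (an assumption based on observed Qingyunke responses; check the API documentation). The standard C++ sketch below shows why replacing the whole token is safer than replacing `br` alone, which also rewrites ordinary words. `replaceAll` and `formatReply` are hypothetical names:

```cpp
#include <string>

// Replace every occurrence of `from` with `to` in `text`.
std::string replaceAll(std::string text, const std::string &from, const std::string &to)
{
    if (from.empty()) return text;
    std::string::size_type pos = 0;
    while ((pos = text.find(from, pos)) != std::string::npos) {
        text.replace(pos, from.size(), to);
        pos += to.size();  // skip past the inserted text so it is not rescanned
    }
    return text;
}

// Assumed Qingyunke convention: {br} in "content" means a line break.
std::string formatReply(const std::string &content)
{
    return replaceAll(content, "{br}", "\n");
}
```

With the narrower pattern, `replaceAll("brown", "br", "\n")` turns a normal word into `"\nown"`, while `formatReply` leaves it alone.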

Voice chat code

Some declarations and initialization that are needed:

```cpp
// Used to play audio
QMediaPlayer* media_player;
// Used to configure audio_input
QAudioDeviceInfo SpeechCurrentDevice;
// Used for recording; the recorded data is stored in JsonBuffer
QAudioInput* audio_input = NULL;
QBuffer* JsonBuffer = NULL;

void MainWindow::AudioInit()
{
    const auto availableDevices = QAudioDeviceInfo::availableDevices(QAudio::AudioInput);
    if (!availableDevices.isEmpty())
    {
        // Use the first available input device
        SpeechCurrentDevice = availableDevices.first();
        // 8 kHz, mono, 16-bit signed little-endian PCM;
        // must match the format/rate/channel fields sent to Baidu
        QAudioFormat format;
        format.setSampleRate(8000);
        format.setChannelCount(1);
        format.setSampleSize(16);
        format.setSampleType(QAudioFormat::SignedInt);
        format.setByteOrder(QAudioFormat::LittleEndian);
        format.setCodec("audio/pcm");
        audio_input = new QAudioInput(SpeechCurrentDevice, format, this);
    }
}
```
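The format chosen above fixes how much data a recording produces: sample rate × channels × bytes per sample. A small sketch of that arithmetic for 8000 Hz, mono, 16-bit, which is handy for estimating the size sent later or rejecting recordings that are too short; the constant and function names are hypothetical:

```cpp
#include <cstddef>

// Recording format from AudioInit: 8000 Hz, 1 channel, 16-bit samples.
constexpr int kSampleRate = 8000;
constexpr int kChannels = 1;
constexpr int kBytesPerSample = 16 / 8;

// Bytes of raw PCM produced per second of recording.
constexpr int bytesPerSecond()
{
    return kSampleRate * kChannels * kBytesPerSample;
}

// Duration in seconds of a PCM buffer of `bytes` bytes in this format.
double pcmSeconds(std::size_t bytes)
{
    return static_cast<double>(bytes) / bytesPerSecond();
}
```

So one second of speech is 16000 bytes, and a 48000-byte buffer holds 3 seconds of audio.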

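JsonBuffer is declared as `QBuffer* JsonBuffer=NULL;`. Allocating a new one on every button press seems slow, so it is probably better to create it once outside the slot. I couldn't test the voice feature in my environment, so feel free to try this improvement yourself.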

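```cpp
// Start recording when the record button is pressed
void MainWindow::on_VoiceBtn_pressed()
{
    // AudioInit leaves audio_input NULL when no input device exists
    if (!audio_input) {
        qDebug() << "No audio input device available";
        return;
    }
    JsonBuffer = new QBuffer;
    JsonBuffer->open(QIODevice::ReadWrite);
    qDebug() << "Recording buffer opened...";
    audio_input->start(JsonBuffer);
    qDebug() << "Recording started...";
}
```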

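```cpp
// When the button is released, stop recording and send the recognition request
void MainWindow::on_VoiceBtn_released()
{
    if (!audio_input || !JsonBuffer)
        return;
    qDebug() << "Recording stopped";
    audio_input->stop();
    // Copy the PCM data before the buffer is scheduled for deletion
    const QByteArray sendData = JsonBuffer->data();
    JsonBuffer->deleteLater();
    JsonBuffer = NULL;
    QNetworkRequest request(QUrl(p_BaiduVoice->VOP_URL));
    request.setRawHeader("Content-Type", "application/json");
    QNetworkAccessManager *manager = new QNetworkAccessManager(this);
    connect(manager, SIGNAL(finished(QNetworkReply*)), this, SLOT(Baidu_VoiceToText_replyFinish(QNetworkReply*)));
    connect(manager, SIGNAL(finished(QNetworkReply*)), manager, SLOT(deleteLater()));
    // This request-building code really belongs in the Baidu class
    QJsonObject append;
    // JSON request fields; must match the recording format set in AudioInit
    append["format"] = "pcm";
    append["rate"] = 8000;
    append["channel"] = 1;
    append["token"] = p_BaiduVoice->Access_Token;
    append["lan"] = "zh";
    append["cuid"] = p_BaiduVoice->MAC_cuid;
    // Audio as Base64 text; "len" is the byte count of the raw (not Base64) audio
    append["speech"] = QString(sendData.toBase64());
    append["len"] = sendData.size();
    // Send the HTTP POST request
    manager->post(request, QJsonDocument(append).toJson());
}
```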
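The `speech` field carries the audio as Base64 text, while `len` is the size of the raw PCM bytes, not of the Base64 string (Base64 inflates data by about 4/3). A standard C++ sketch of the encoding that `QByteArray::toBase64()` performs with its default options (RFC 4648 alphabet with `=` padding); `base64Encode` is a hypothetical name:

```cpp
#include <cstddef>
#include <string>

// Standard Base64 (RFC 4648) with '=' padding.
std::string base64Encode(const std::string &bytes)
{
    static const char *table =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string out;
    std::size_t i = 0;
    // Encode each complete 3-byte group as 4 characters
    for (; i + 2 < bytes.size(); i += 3) {
        unsigned n = (static_cast<unsigned char>(bytes[i]) << 16) |
                     (static_cast<unsigned char>(bytes[i + 1]) << 8) |
                     static_cast<unsigned char>(bytes[i + 2]);
        out += table[(n >> 18) & 63];
        out += table[(n >> 12) & 63];
        out += table[(n >> 6) & 63];
        out += table[n & 63];
    }
    // Encode the 1 or 2 leftover bytes and pad with '='
    std::size_t rest = bytes.size() - i;
    if (rest == 1) {
        unsigned n = static_cast<unsigned char>(bytes[i]) << 16;
        out += table[(n >> 18) & 63];
        out += table[(n >> 12) & 63];
        out += "==";
    } else if (rest == 2) {
        unsigned n = (static_cast<unsigned char>(bytes[i]) << 16) |
                     (static_cast<unsigned char>(bytes[i + 1]) << 8);
        out += table[(n >> 18) & 63];
        out += table[(n >> 12) & 63];
        out += table[(n >> 6) & 63];
        out += '=';
    }
    return out;
}
```

One second of 8 kHz 16-bit mono audio is 16000 bytes, so `len` should be 16000 even though the Base64 text sent in `speech` is 21336 characters long.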

```cpp
// Speech to text: handle Baidu's recognition result
void MainWindow::Baidu_VoiceToText_replyFinish(QNetworkReply *reply)
{
    QByteArray JsonStr = reply->readAll();
    reply->deleteLater();
    qDebug() << "Returned:" << JsonStr;
    QJsonObject acceptedData = QJsonDocument::fromJson(JsonStr).object();
    // err_no is Baidu's error code (0 means success)
    int errNo = acceptedData.value("err_no").toInt();
    if (errNo == 3301)
        QMessageBox::information(this, tr("Recognition failed"),
                                 tr("Wait one second before speaking and make sure the recording quality is good"));
    // "result" holds the candidate transcripts; use the first one
    const QJsonArray results = acceptedData.value("result").toArray();
    if (!results.isEmpty())
    {
        QString message = results.at(0).toString();
        ui->InputTextEdit->setText(message);
        changeBaiduAudioAns(message);
    }
}
```

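```cpp
// After speech is recognized as text, a signal triggers this slot, which sends the text to the chatbot
void MainWindow::voice_post_Tuling_slot()
{
    qDebug() << "Entered voice_post_Tuling_slot";
    // Percent-encode the recognized text so characters such as & or # cannot break the query string
    QUrl url("http://api.qingyunke.com/api.php?key=free&appid=0&msg="
             + QString::fromUtf8(QUrl::toPercentEncoding(this->voice_get_ans)));
    QNetworkRequest request(url);
    QNetworkAccessManager *manager = new QNetworkAccessManager(this);
    // Handle the reply in voice_Tuling_replyFinish and free the manager afterwards
    connect(manager, SIGNAL(finished(QNetworkReply*)), this, SLOT(voice_Tuling_replyFinish(QNetworkReply*)));
    connect(manager, SIGNAL(finished(QNetworkReply*)), manager, SLOT(deleteLater()));
    manager->get(request);
}
```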

```cpp
// Voice flow: parse the chatbot's JSON reply to the recognized text
void MainWindow::voice_Tuling_replyFinish(QNetworkReply *reply)
{
    // fromJson expects UTF-8 bytes, so read a QByteArray directly
    QByteArray data = reply->readAll();
    reply->deleteLater();
    qDebug() << "Returned result:" << data;
    QString tstr;
    QJsonParseError json_error;
    QJsonDocument json = QJsonDocument::fromJson(data, &json_error);
    // Check for parse errors, then take the "content" field
    if (json_error.error == QJsonParseError::NoError && json.isObject())
    {
        QJsonValue text_value = json.object().value("content");
        if (text_value.isString())
        {
            tstr = text_value.toString();
            tstr.replace("{br}", "\n");   // Qingyunke's line-break marker
        }
    }
    // Pass the received answer on
    this->setUIString(tstr);
}
```

Last step: play the audio directly from the web.

```cpp
// After the chatbot's answer arrives, play it through Baidu speech synthesis
void MainWindow::Baidu_TextToVoice_replyFinish()
{
    // Build the request URL with the required parameters
    QByteArray url = "http://tsn.baidu.com/text2audio?lan=zh";
    // Unique ID: this machine's MAC address
    // (hard-coded alternative: url.append("&cuid=A0-8C-FD-1D-CF-0E");)
    url.append("&cuid=" + p_BaiduVoice->MAC_cuid.toUtf8());
    url.append("&ctp=1");
    url.append("&tok=" + p_BaiduVoice->Access_Token.toUtf8());
    url.append("&pit=5");   // pitch
    url.append("&per=4");   // speaker voice
    url.append("&tex=");
    url.append(QUrl::toPercentEncoding(this->UI_ANS_String));
    qDebug() << url;
    // Play the audio at that URL. It is a remote HTTP address, not a local file,
    // so build it with QUrl::fromEncoded rather than QUrl::fromLocalFile
    media_player->setMedia(QUrl::fromEncoded(url));
    media_player->play();
}
```

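Some details

A Baidu access token is only valid for one month, so the date of the last token fetch is written to an .ini file and checked each time to decide whether the token needs refreshing.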

```cpp
// Returns true if a cached token exists and is still valid, loading it into p_BaiduVoice
bool MainWindow::JudgeTokenTime()
{
    // Time conversion reference: https://blog.csdn.net/qq_18286031/article/details/78538769
    QSettings setting("GetTokenTime.ini", QSettings::IniFormat);
    // Date of the last successful fetch, plus the token itself: the token must be cached too,
    // otherwise a restart within the month leaves Access_Token empty
    QDate lastDate = QDate::fromString(setting.value("TIME/last_time").toString(), "yyyy-MM-dd");
    QString cachedToken = setting.value("TIME/token").toString();
    // Token still within its one-month validity
    if (lastDate.isValid() && !cachedToken.isEmpty()
        && lastDate.daysTo(QDate::currentDate()) < 30)
    {
        p_BaiduVoice->Access_Token = cachedToken;
        return true;
    }
    return false;
}

void MainWindow::TokenInit()
{
    if (JudgeTokenTime()) return;
    QUrl url = QUrl(p_BaiduVoice->Get_Token_URL
                    + "?grant_type=client_credentials"
                    + "&client_id=" + p_BaiduVoice->API_KEY
                    + "&client_secret=" + p_BaiduVoice->Secret_Key);
    QNetworkAccessManager *manager = new QNetworkAccessManager(this);
    QNetworkRequest request(url);
    connect(manager, SIGNAL(finished(QNetworkReply*)), this, SLOT(get_Token_slot(QNetworkReply*)));
    connect(manager, SIGNAL(finished(QNetworkReply*)), manager, SLOT(deleteLater()));
    manager->get(request);
}

void MainWindow::get_Token_slot(QNetworkReply *reply)
{
    QByteArray JsonStr = reply->readAll();
    reply->deleteLater();
    qDebug() << JsonStr;
    QJsonObject acceptedData = QJsonDocument::fromJson(JsonStr).object();
    if (acceptedData.contains("access_token"))
    {
        p_BaiduVoice->Access_Token = acceptedData.value("access_token").toString();
        // Record the date and token only after a successful fetch
        QSettings setting("GetTokenTime.ini", QSettings::IniFormat);
        setting.setValue("TIME/last_time", QDate::currentDate().toString("yyyy-MM-dd"));
        setting.setValue("TIME/token", p_BaiduVoice->Access_Token);
        qDebug() << "Got token:" << p_BaiduVoice->Access_Token;
    }
}
```
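The validity check boils down to counting calendar days between the fetch date and today. In Qt, `QDate::daysTo` does this, but it returns 0 when either date is invalid, so an unreadable .ini value has to be caught with `isValid()` first. The standard C++ sketch below makes the rule explicit using Howard Hinnant's `days_from_civil` algorithm; `Date`, `daysFromCivil` and `tokenStillValid` are hypothetical names, and the 30-day period comes from the text above:

```cpp
// Days since 1970-01-01 for a proleptic Gregorian date (Hinnant's days_from_civil).
long long daysFromCivil(int y, unsigned m, unsigned d)
{
    y -= m <= 2;  // treat Jan and Feb as months 13 and 14 of the previous year
    const int era = (y >= 0 ? y : y - 399) / 400;
    const unsigned yoe = static_cast<unsigned>(y - era * 400);             // year of era [0, 399]
    const unsigned doy = (153 * (m > 2 ? m - 3 : m + 9) + 2) / 5 + d - 1;  // day of year [0, 365]
    const unsigned doe = yoe * 365 + yoe / 4 - yoe / 100 + doy;            // day of era [0, 146096]
    return era * 146097LL + static_cast<long long>(doe) - 719468;
}

struct Date { int year; unsigned month; unsigned day; };

// True if a token fetched on `fetched` is still inside its validity window on `today`.
bool tokenStillValid(const Date &fetched, const Date &today, int validDays = 30)
{
    long long elapsed = daysFromCivil(today.year, today.month, today.day) -
                        daysFromCivil(fetched.year, fetched.month, fetched.day);
    return elapsed >= 0 && elapsed < validDays;
}
```

Counting whole days this way handles month lengths and leap years, which is easy to get wrong with hand-written timestamp arithmetic.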

Baidu Speech also needs a unique identifier (`cuid`) in its requests, and the MAC address is used for it. Here is how to get it:

```cpp
// Get this machine's MAC address
void MainWindow::getMacAddress()
{
    // List all network interfaces
    const QList<QNetworkInterface> nets = QNetworkInterface::allInterfaces();
    for (const QNetworkInterface &net : nets)
    {
        // The first interface that is up, running and not the loopback gives the MAC address we want
        const QNetworkInterface::InterfaceFlags flags = net.flags();
        if (flags.testFlag(QNetworkInterface::IsUp) && flags.testFlag(QNetworkInterface::IsRunning)
            && !flags.testFlag(QNetworkInterface::IsLoopBack))
        {
            p_BaiduVoice->MAC_cuid = net.hardwareAddress();
            break;
        }
    }
    p_BaiduVoice->MAC_cuid.replace(":", "-");
    qDebug() << "Detected MAC address:" << p_BaiduVoice->MAC_cuid;
}
```
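The selection rule is independent of Qt, so it can be expressed and checked in plain C++. In this sketch `Iface` is a hypothetical stand-in for `QNetworkInterface` holding only the fields the rule needs, and `pickCuid` is a hypothetical name. It returns an empty string when no interface qualifies, a case worth handling before any Baidu request is sent:

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-in for QNetworkInterface with just the fields used below.
struct Iface {
    std::string hardwareAddress;  // e.g. "A0:8C:FD:1D:CF:0E"
    bool isUp;
    bool isRunning;
    bool isLoopBack;
};

// First interface that is up, running and not loopback, with ':' turned into '-'.
std::string pickCuid(const std::vector<Iface> &ifaces)
{
    for (const Iface &n : ifaces) {
        if (n.isUp && n.isRunning && !n.isLoopBack) {
            std::string mac = n.hardwareAddress;
            std::replace(mac.begin(), mac.end(), ':', '-');
            return mac;
        }
    }
    return "";  // nothing suitable found
}
```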

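Possible improvements

Qt can read the microphone level, so a separate thread could keep checking whether the current level exceeds a threshold; once it does, recording starts automatically and the audio is then recognized, and so on. That would be smarter, since the user would no longer have to hold a button to talk.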
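A minimal sketch of the level check, assuming the recorded bytes are read as 16-bit signed samples (the format set in AudioInit). It computes the root-mean-square level of a block of samples and compares it with a threshold; the 0.02 default is an arbitrary placeholder to tune, and `rmsLevel`/`isSpeech` are hypothetical names:

```cpp
#include <cmath>
#include <cstdint>
#include <vector>

// Root-mean-square level of a block of 16-bit PCM samples, normalized to [0, 1].
double rmsLevel(const std::vector<std::int16_t> &samples)
{
    if (samples.empty()) return 0.0;
    double sumSquares = 0.0;
    for (std::int16_t s : samples) {
        double x = s / 32768.0;  // scale to [-1, 1)
        sumSquares += x * x;
    }
    return std::sqrt(sumSquares / samples.size());
}

// Simple voice-activity decision: treat the block as speech when its level exceeds the threshold.
bool isSpeech(const std::vector<std::int16_t> &samples, double threshold = 0.02)
{
    return rmsLevel(samples) > threshold;
}
```

Checking blocks of about 100 ms (800 samples at 8000 Hz) keeps the decision responsive. In practice it also helps to keep recording for a short moment after the level drops, so that pauses between words don't cut the recording off.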