TensorFlow in Practice 9: Google Inception Net Implementation and Timing Benchmark

1. Introduction to Google Inception Net

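Google Inception Net first appeared in the ILSVRC 2014 competition (the same year as VGGNet) and took first place by a comfortable margin. The version used in that competition is usually called Inception V1. Although the network is 22 layers deep, it has only about 5 million parameters, 1/12 of the AlexNet we covered earlier. Why reduce the parameter count? First, the more parameters a model has, the more data it needs, and high-quality data is scarce in practice. Second, compute is limited in deep learning, so making good use of limited resources to train the network matters a lot; a large parameter count means a large computational cost.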

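So how does Inception Net cut down on parameters? First, it removes the fully connected layers and uses a global average pooling layer instead. Second, it uses the Inception Module to make better use of its parameters: a small network is nested inside the large one, and that small network is reused repeatedly.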
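To get a feel for how much the first point saves, here is a rough back-of-the-envelope sketch. The feature-map size (7×7×1024), the class count, and the helper name `dense_params` are assumptions chosen for illustration, not figures taken from this post.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# Hypothetical final feature map: 7 x 7 spatial positions, 1024 channels, 1000 classes.
h, w, c, num_classes = 7, 7, 1024, 1000

# Option A: flatten the whole feature map and feed it to a fully connected classifier.
flatten_fc = dense_params(h * w * c, num_classes)

# Option B: global average pooling (which has no parameters) reduces each channel
# to a single number, so the classifier only sees c inputs.
gap_fc = dense_params(c, num_classes)

print(flatten_fc)           # 50177000
print(gap_fc)               # 1025000
print(flatten_fc / gap_fc)  # roughly 49x fewer parameters with pooling
```

Under these assumed sizes the pooled classifier needs about 1 million parameters instead of about 50 million.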

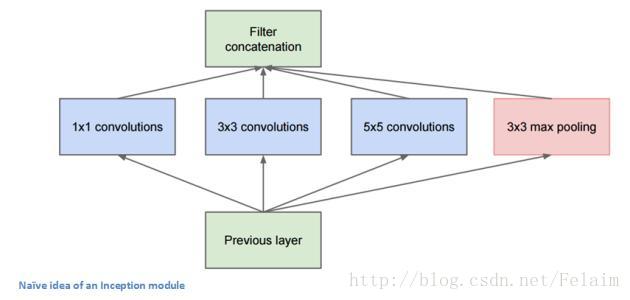

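This is the structure of the Inception module. As you can see, a module has 4 branches. The 1×1 convolutions are mainly there to organize information across channels and increase the network's expressive power; by changing the number of kernels they can also raise or lower the number of output channels. The original paper mentions that the sparse structure is based on the Hebbian principle. I looked it up on Baidu: it describes the basic principle of synaptic plasticity, namely that sustained, repeated stimulation of a postsynaptic neuron by a presynaptic neuron can increase the efficacy of synaptic transmission. The theory was proposed by Donald Hebb in 1949 and is also known as Hebb's rule, Hebb's postulate, and cell assembly theory.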
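One way to see what a 1×1 convolution does: it multiplies the channel vector at every pixel by the same matrix, so the number of kernels alone decides the output channel count. The NumPy sketch below illustrates this; the helper name `conv1x1` and the tensor sizes are made up for the example, and bias, batch norm, and ReLU are left out.

```python
import numpy as np

def conv1x1(x, w):
    """Apply a 1x1 convolution to x of shape (H, W, C_in) using w of shape (C_in, C_out)."""
    # Every pixel's channel vector is multiplied by the same matrix w.
    return np.tensordot(x, w, axes=([2], [0]))

rng = np.random.default_rng(0)
x = rng.standard_normal((35, 35, 192))       # hypothetical feature map
w_reduce = rng.standard_normal((192, 64))    # 64 kernels: 192 -> 64 channels
w_expand = rng.standard_normal((192, 256))   # 256 kernels: 192 -> 256 channels

y_reduce = conv1x1(x, w_reduce)
y_expand = conv1x1(x, w_expand)
print(y_reduce.shape, y_expand.shape)
```

The spatial size is untouched in both cases; only the channel dimension changes.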

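In addition, Inception Net uses auxiliary classifiers: the output of an intermediate layer is used for classification and is added into the final classification result with a small weight (0.3). Designing a network's hyperparameters also matters a great deal. It takes many tricks and a lot of hands-on experience, and tuning them is an arduous task, or you could say a deep discipline in its own right.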
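As a minimal sketch of that weighting: in the GoogLeNet paper the 0.3 factor is applied to the auxiliary classifier's loss during training, and the auxiliary branch is dropped at inference. The function names below are hypothetical, and the example uses plain Python rather than TensorFlow to keep it self-contained.

```python
import math

def softmax_cross_entropy(logits, label):
    """Cross-entropy of a softmax over `logits` against an integer class label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]

AUX_WEIGHT = 0.3  # the small weight mentioned above

def total_loss(main_logits, aux_logits, label, aux_weight=AUX_WEIGHT):
    """Main loss plus the down-weighted auxiliary loss."""
    main = softmax_cross_entropy(main_logits, label)
    aux = softmax_cross_entropy(aux_logits, label)
    return main + aux_weight * aux

print(total_loss([2.0, 0.5, -1.0], [1.0, 0.8, 0.1], label=0))
```

The auxiliary term gives the middle of the network an extra gradient signal while keeping the main classifier dominant.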

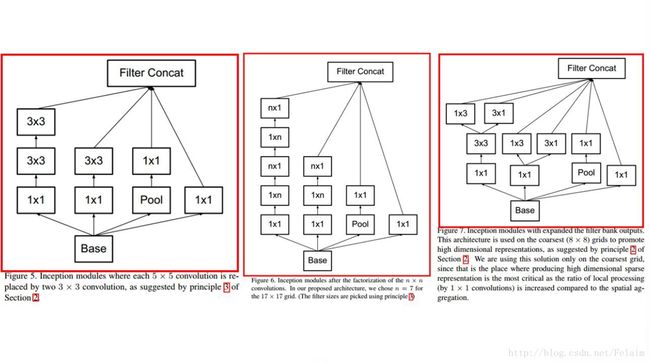

Inception V2 replaces each 5×5 convolution with two stacked 3×3 convolutions, and it also introduced the well-known Batch Normalization (BN for short below).
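The arithmetic behind that replacement can be sketched as follows: two stacked 3×3 convolutions (stride 1) cover the same 5×5 receptive field with fewer weights. The channel count of 64 and the helper names are assumptions for illustration; biases are ignored.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out

def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    rf = 1
    for k in kernel_sizes:
        rf += k - 1
    return rf

c = 64  # hypothetical channel count, kept the same for input and output
one_5x5 = conv_weights(5, 5, c, c)
two_3x3 = 2 * conv_weights(3, 3, c, c)

print(one_5x5, two_3x3)                 # 102400 73728
print(stacked_receptive_field([3, 3]))  # 5
print(two_3x3 / one_5x5)                # 0.72
```

So the stacked version uses 18/25 of the weights and gets an extra nonlinearity between the two layers.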

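Inception V3 mainly introduces the idea of factorization into small convolutions: a 3×3 convolution is split into a 1×3 and a 3×1 convolution, which not only saves parameters but also adds one more nonlinear layer, extending the model's capacity. Another improvement is that Inception V3 further refines the structure of the Inception module, which now comes in three different variants.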
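To illustrate the factorization idea, the sketch below checks that, for a single channel with no nonlinearity in between, applying a 1×3 kernel and then a 3×1 kernel is the same as applying one 3×3 kernel equal to their outer product, using 6 weights instead of 9. The helper `correlate2d_valid` is written here for the example. In the real network a ReLU sits between the two layers, so the equivalence is only a simplified picture.

```python
import numpy as np

def correlate2d_valid(image, kernel):
    """Plain 'VALID' 2D cross-correlation of a single-channel image."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

rng = np.random.default_rng(0)
image = rng.standard_normal((8, 8))
row = rng.standard_normal((1, 3))   # 1x3 kernel, 3 weights
col = rng.standard_normal((3, 1))   # 3x1 kernel, 3 weights

two_step = correlate2d_valid(correlate2d_valid(image, row), col)
one_step = correlate2d_valid(image, col @ row)   # equivalent 3x3 kernel, 9 weights

print(two_step.shape)                   # (6, 6)
print(np.allclose(two_step, one_step))  # True
```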

The figure above shows the different Inception V3 module structures. The code we are about to implement is Inception V3, which has 42 layers in total!

Inception V4 builds on V3 by incorporating ResNet from Microsoft, which a later installment of this series will cover.

2. Code implementation of Google Inception Net V3

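# coding: utf-8
# The encoding declaration above allows non-ASCII (e.g. Chinese) characters in this file.
# Import TensorFlow and the other dependencies, and define a helper that
# builds a truncated normal initializer.
import tensorflow as tf
import time
from datetime import datetime
import math

slim = tf.contrib.slim
# Mean 0.0 and the given standard deviation.
trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)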

# Define the arg-scope function that generates default arguments for the
# layer functions used throughout the network.
def incepton_v3_arg_scope(weight_decay = 0.00004, stddev = 0.1, batch_norm_var_collection = 'moving_vars'):
    batch_norm_params = {
        'decay': 0.9997,
        'epsilon': 0.001,
        'updates_collections': tf.GraphKeys.UPDATE_OPS,
        'variables_collections': {
            'beta': None,
            'gamma': None,
            'moving_mean': [batch_norm_var_collection],
            'moving_variance': [batch_norm_var_collection],
        }
    }
    # L2 weight regularization for both conv and fully connected layers.
    with slim.arg_scope([slim.conv2d, slim.fully_connected], weights_regularizer = slim.l2_regularizer(weight_decay)):
        # Conv layers additionally get an initializer, ReLU, and batch norm by default.
        with slim.arg_scope([slim.conv2d],
                            weights_initializer = tf.truncated_normal_initializer(stddev = stddev),
                            activation_fn = tf.nn.relu,
                            normalizer_fn = slim.batch_norm,
                            normalizer_params = batch_norm_params) as sc:
            return sc

# Define inception_v3_base, which builds the convolutional part of Inception V3.
def inception_v3_base(inputs, scope = None):
    end_points = {}  # stores selected intermediate outputs
    with tf.variable_scope(scope, 'InceptionV3', [inputs]):
        # Stem: plain convolutions and pooling, stride 1 and VALID padding by default.
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride = 1, padding = 'VALID'):
            net = slim.conv2d(inputs, 32, [3, 3], stride = 2, scope = 'Conv2d_1a_3x3')
            net = slim.conv2d(net, 32, [3, 3], scope = 'Conv2d_2a_3x3')
            net = slim.conv2d(net, 64, [3, 3], padding = 'SAME', scope = 'Conv2d_2b_3x3')
            net = slim.max_pool2d(net, [3, 3], stride = 2, scope = 'MaxPool_3a_3x3')
            net = slim.conv2d(net, 80, [1, 1], scope = 'Conv2d_3b_1x1')
            net = slim.conv2d(net, 192, [3, 3], scope = 'Conv2d_4a_3x3')
            net = slim.max_pool2d(net, [3, 3], stride = 2, scope = 'MaxPool_5a_3x3')

        # Next come three consecutive groups of Inception modules; the
        # parameters simply follow the actual network definition.
        # First module of the 1st Inception group
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d], stride = 1, padding = 'SAME'):
            with tf.variable_scope('Mixed_5b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Second module
            with tf.variable_scope('Mixed_5c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0b_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0c_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Third module
            with tf.variable_scope('Mixed_5d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 48, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 64, [5, 5], scope = 'Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope = 'Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope = 'Conv2d_0b_1x1')
                # Concatenate the branch outputs along axis 3, i.e. the channel axis.
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # First module of the 2nd Inception group
            with tf.variable_scope('Mixed_6a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 384, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 64, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], scope = 'Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1, 96, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # Second module
            with tf.variable_scope('Mixed_6b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 128, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 128, [1, 7], scope = 'Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 128, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope = 'Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 128, [1, 7], scope = 'Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 128, [7, 1], scope = 'Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    # Average pooling, matching the scope name.
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Third module
            with tf.variable_scope('Mixed_6c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    # Average pooling, matching the scope name.
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Fourth module
            with tf.variable_scope('Mixed_6d'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    # Average pooling, matching the scope name.
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Fifth module
            with tf.variable_scope('Mixed_6e'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 160, [1, 7], scope = 'Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 160, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, 160, [1, 7], scope = 'Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, 160, [7, 1], scope = 'Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, 192, [1, 7], scope = 'Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    # Average pooling, matching the scope name.
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope = 'AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            # Save the Mixed_6e output; it feeds the auxiliary classifier.
            end_points['Mixed_6e'] = net

            # First module of the 3rd Inception group
            with tf.variable_scope('Mixed_7a'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0, 320, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_0b_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 192, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, 192, [1, 7], scope = 'Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, 192, [7, 1], scope = 'Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1, 192, [3, 3], stride = 2, padding = 'VALID', scope = 'Conv2d_0e_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride = 2, padding = 'VALID', scope = 'MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)

            # Second module
            with tf.variable_scope('Mixed_7b'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope = 'Conv2d_0a_1x1')
                    # The 1x3 and 3x1 convolutions run in parallel and are concatenated.
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope = 'Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope = 'Conv2d_0c_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope = 'Conv2d_0a_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope = 'Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope = 'Conv2d_0e_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.max_pool2d(net, [3, 3], scope = 'MaxPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)

            # Third module
            with tf.variable_scope('Mixed_7c'):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, 320, [1, 1], scope = 'Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, 384, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1, 384, [1, 3], scope = 'Conv2d_0b_1x3'),
                        slim.conv2d(branch_1, 384, [3, 1], scope = 'Conv2d_0c_3x1')], 3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, 448, [1, 1], scope = 'Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, 384, [3, 3], scope = 'Conv2d_0a_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2, 384, [1, 3], scope = 'Conv2d_0c_1x3'),
                        slim.conv2d(branch_2, 384, [3, 1], scope = 'Conv2d_0e_3x1')], 3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.max_pool2d(net, [3, 3], scope = 'MaxPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, 192, [1, 1], scope = 'Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            # Return the final feature map and the saved node for the auxiliary classifier.
            return net, end_points

# Define the rest of Inception V3: global average pooling, Softmax, and the auxiliary logits.
def inception_v3(inputs, num_classes = 1000, is_training = True, dropout_keep_prob = 0.8,
                 prediction_fn = slim.softmax, spatial_squeeze = True, reuse = None, scope = 'InceptionV3'):
    with tf.variable_scope(scope, 'InceptionV3', [inputs, num_classes], reuse = reuse) as scope:
        with slim.arg_scope([slim.batch_norm, slim.dropout], is_training = is_training):
            net, end_points = inception_v3_base(inputs, scope = scope)
            # Auxiliary classifier built on the saved Mixed_6e output
            with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d], stride = 1, padding = 'SAME'):
                aux_logits = end_points['Mixed_6e']
                with tf.variable_scope('AuxLogits'):
                    aux_logits = slim.avg_pool2d(aux_logits, [5, 5], stride = 3, padding = 'VALID', scope = 'AvgPool_1a_5x5')
                    aux_logits = slim.conv2d(aux_logits, 128, [1, 1], scope = 'Conv2d_1b_1x1')
                    aux_logits = slim.conv2d(aux_logits, 768, [5, 5], weights_initializer = trunc_normal(0.01),
                                             padding = 'VALID', scope = 'Conv2d_2a_5x5')
                    aux_logits = slim.conv2d(aux_logits, num_classes, [1, 1], activation_fn = None,
                                             normalizer_fn = None, weights_initializer = trunc_normal(0.001), scope = 'Conv2d_2b_1x1')
                    if spatial_squeeze:
                        # Drop the two 1x1 spatial dimensions.
                        aux_logits = tf.squeeze(aux_logits, [1, 2], name = 'SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits
            # Main classifier on the final feature map
            with tf.variable_scope('Logits'):
                net = slim.avg_pool2d(net, [8, 8], padding = 'VALID', scope = 'AvgPool_1a_8x8')
                net = slim.dropout(net, keep_prob = dropout_keep_prob, scope = 'Dropout_1b')
                end_points['PreLogits'] = net
                logits = slim.conv2d(net, num_classes, [1, 1], activation_fn = None, normalizer_fn = None, scope = 'Conv2d_1c_1x1')
                if spatial_squeeze:
                    logits = tf.squeeze(logits, [1, 2], name = 'SpatialSqueeze')
            end_points['Predictions'] = prediction_fn(logits, scope = 'Predictions')
    return logits, end_points

# Helper for the timing benchmark. It reads the global num_batches, which is set before the call.
def time_tensorflow_run(session, target, info_string):
    num_steps_burn_in = 10  # warm-up iterations excluded from the statistics
    total_duration = 0.0
    total_duration_squared = 0.0
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %(datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / num_batches                      # mean time per batch
    vr = total_duration_squared / num_batches - mn * mn    # variance
    sd = math.sqrt(vr)                                     # standard deviation
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %(datetime.now(), info_string, num_batches, mn, sd))

# Benchmark the forward pass of Inception V3 on random input.
batch_size = 32
height, width = 299, 299
inputs = tf.random_uniform((batch_size, height, width, 3))
with slim.arg_scope(incepton_v3_arg_scope()):
    logits, end_points = inception_v3(inputs, is_training = False)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)
num_batches = 100
time_tensorflow_run(sess, logits, "Forward")
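As a sanity check on the layer parameters in the listing, the sketch below traces the spatial size of a 299×299 input through the stem and the two stride-2 modules, and sums the branch channels of a few modules. It uses the usual TensorFlow rules for 'VALID' and 'SAME' padding; the helper name `out_size` is made up for this example, and no TensorFlow install is needed to run it.

```python
import math

def out_size(size, kernel, stride, padding):
    """Output spatial size of a conv/pool layer under TensorFlow's padding rules."""
    if padding == 'VALID':
        return (size - kernel) // stride + 1
    return math.ceil(size / stride)  # 'SAME'

# (scope name, kernel size, stride, padding) for the stem layers in the listing
stem = [
    ('Conv2d_1a_3x3', 3, 2, 'VALID'),
    ('Conv2d_2a_3x3', 3, 1, 'VALID'),
    ('Conv2d_2b_3x3', 3, 1, 'SAME'),
    ('MaxPool_3a_3x3', 3, 2, 'VALID'),
    ('Conv2d_3b_1x1', 1, 1, 'VALID'),
    ('Conv2d_4a_3x3', 3, 1, 'VALID'),
    ('MaxPool_5a_3x3', 3, 2, 'VALID'),
]

size = 299
for name, kernel, stride, padding in stem:
    size = out_size(size, kernel, stride, padding)
    print(name, size)

stem_size = size                                  # input size of Mixed_5b
size_6a = out_size(stem_size, 3, 2, 'VALID')      # after Mixed_6a
size_7a = out_size(size_6a, 3, 2, 'VALID')        # after Mixed_7a

# Channels after concatenating the branches of selected modules
mixed_5b = 64 + 64 + 96 + 32
mixed_5d = 64 + 64 + 96 + 64
mixed_6a = 384 + 96 + mixed_5d        # the max-pool branch keeps its input channels
mixed_7c = 320 + 2 * 384 + 2 * 384 + 192

print(stem_size, size_6a, size_7a)    # 35 17 8
print(mixed_5b, mixed_6a, mixed_7c)   # 256 768 2048
```

The final 8×8 map is why the Logits block can use an 8×8 average pool with 'VALID' padding to reach a 1×1 output.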

When I ran it, the result was roughly 0.149 s per batch. And with that, we have implemented Inception V3 O(∩_∩)O
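The benchmark helper reports a mean and a standard deviation computed from a running sum and a running sum of squares. Here is a small stand-alone sketch of that calculation. The function name `mean_and_std` and the sample durations are made up for illustration, and the `max(..., 0.0)` guard against tiny negative round-off is an addition that the original helper does not have.

```python
import math
import statistics

def mean_and_std(durations):
    """Mean and population standard deviation from sum and sum of squares."""
    n = len(durations)
    total = sum(durations)
    total_squared = sum(d * d for d in durations)
    mean = total / n
    variance = total_squared / n - mean * mean   # E[x^2] - (E[x])^2
    return mean, math.sqrt(max(variance, 0.0))   # guard against round-off below zero

# Hypothetical per-batch durations in seconds
samples = [0.151, 0.148, 0.150, 0.147, 0.149]
mean, std = mean_and_std(samples)

print('%.3f +/- %.3f sec / batch' % (mean, std))
print(abs(std - statistics.pstdev(samples)) < 1e-9)  # agrees with the library result
```

Keeping only the two running sums means the helper never has to store the individual durations.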