Image Processing (10): Feature Detection and Matching

Table of Contents

- Overview:

- SIFT:

- SURF:

- ORB:
- Code

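Overview:

• Learn how to use the SIFT, SURF, and ORB feature detectors implemented in OpenCV and experiment with them. Draw the detected keypoints as circles of different sizes, and compare the methods in terms of speed and detection quality.

• Learn how feature matching works in OpenCV and experiment with it.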

SIFT:

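In essence, SIFT searches for feature points (keypoints) across different scale spaces, describes each keypoint with a 128-dimensional vector built from local gradient orientations, and finally matches targets by comparing these descriptor vectors.

It can be summarized in three main steps:

1. Extract the keypoints;

2. Attach detailed information (local features) to each keypoint, that is, compute its descriptor;

3. Compare the feature points of the two images pairwise (keypoints together with their feature vectors) to find pairs that match each other, which establishes the correspondence between the objects.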
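The pairwise comparison in step 3 can be sketched in plain C++ as an exhaustive nearest-neighbor search over descriptor vectors. This is only an illustration under stated assumptions: the `Descriptor` alias, the `Match` struct, and the functions `l2Distance` and `matchNearest` are hypothetical names introduced here, not OpenCV APIs, and real matchers (such as the FLANN-based one used in the code section) rely on faster approximate search.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// A descriptor is a fixed-length feature vector (128 floats in the case of SIFT).
using Descriptor = std::vector<float>;

// Hypothetical result type: a query index, the index of its nearest
// train descriptor, and the Euclidean distance between the two.
struct Match {
    std::size_t queryIdx;
    std::size_t trainIdx;
    float distance;
};

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// For every query descriptor, find the closest train descriptor by exhaustive search.
std::vector<Match> matchNearest(const std::vector<Descriptor>& query,
                                const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;  // nothing to match against
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best{q, 0, std::numeric_limits<float>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = l2Distance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = t;
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

A smaller distance means a better match, which is why the code section later keeps only matches whose distance falls below a threshold.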

SURF:

In OpenCV, the SURF workflow (extracting features, computing descriptors, and matching them) is the same as for SIFT.

The drawKeypoints function used for drawing deserves a closer look:

void drawKeypoints( const Mat& image, const vector<KeyPoint>& keypoints, CV_OUT Mat& outImage, const Scalar& color=Scalar::all(-1), int flags=DrawMatchesFlags::DEFAULT );

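First parameter, image: the source image; it can be a three-channel or a single-channel image.

Second parameter, keypoints: the vector of keypoints; each element is a KeyPoint object that holds the properties of one keypoint.

Third parameter, outImage: the canvas the keypoints are drawn on; it can be the source image itself.

Fourth parameter, color: the color used to draw the keypoints; by default a random color is chosen for each one.

Fifth parameter, flags: the drawing mode, which controls what keypoint information is drawn and what is left out. For example, DrawMatchesFlags::DRAW_RICH_KEYPOINTS draws each keypoint as a circle whose radius reflects the keypoint size, together with its orientation.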

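When matching features, keeping only the best matches that survive filtering leaves many keypoints without a match. In that case, pass flags=DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS to drawMatches so that keypoints without a match are not drawn.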

ORB:

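ORB (Oriented FAST and Rotated BRIEF) combines the FAST keypoint detector with the BRIEF binary descriptor. Whereas BRIEF by itself relies on randomly sampled point pairs for its binary tests, ORB finds its keypoints with FAST. FAST looks for candidate keypoints by checking whether a pixel in the grayscale image is surrounded by a contiguous run of pixels that are all brighter or all darker than it. Take any pixel P and the 16 pixels on a circle of radius 3 around it, numbered as follows: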

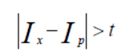

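Suppose there are N contiguous pixels x on the circle that all satisfy

$$|I_x - I_P| > t$$

with the same sign (all brighter than P or all darker than P), where $I_x$ is the gray value of a circle pixel, $I_P$ is the gray value of P, and $t$ is a threshold. Then P is marked as a candidate keypoint. N is typically 9 or 12; the figure above uses N = 9. To reduce the amount of computation, we can first check only the four pixels 1, 9, 5, and 13: P stays a candidate only if at least three of them satisfy the inequality above, otherwise it can be rejected right away. This shortcut is exact for N = 12, because any run of 12 contiguous pixels contains at least three of these four.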
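The segment test described above can be sketched in plain C++ as follows. This is a minimal illustration, not OpenCV's implementation: it assumes the condition is that a circle pixel differs from the center by more than a threshold `t`, it takes the 16 circle values as an array in which index i holds pixel number i + 1, and the function names `isFastCorner` and `passesQuickTest` are hypothetical.

```cpp
#include <array>

// Full segment test. Returns true if at least n contiguous circle pixels are
// all brighter than center + t or all darker than center - t.
// The circle wraps around; n is assumed to be between 1 and 16.
bool isFastCorner(const std::array<int, 16>& ring, int center, int t, int n) {
    // Classify each circle pixel: +1 brighter, -1 darker, 0 similar to the center.
    std::array<int, 16> state{};
    for (int i = 0; i < 16; ++i) {
        if (ring[i] > center + t) state[i] = 1;
        else if (ring[i] < center - t) state[i] = -1;
    }
    // Walk the circle twice so that runs crossing index 15 -> 0 are counted too.
    int run = 0;
    int prev = 0;
    for (int i = 0; i < 32; ++i) {
        const int s = state[i % 16];
        if (s != 0 && s == prev) ++run;
        else run = (s != 0) ? 1 : 0;
        prev = s;
        if (run >= n) return true;
    }
    return false;
}

// Quick pre-test intended for n = 12: inspect only pixels 1, 5, 9, 13
// (array indices 0, 4, 8, 12). At least three must be brighter, or at least
// three darker, for the pixel to remain a candidate.
bool passesQuickTest(const std::array<int, 16>& ring, int center, int t) {
    const int idx[4] = {0, 4, 8, 12};
    int brighter = 0;
    int darker = 0;
    for (int i : idx) {
        if (ring[i] > center + t) ++brighter;
        else if (ring[i] < center - t) ++darker;
    }
    return brighter >= 3 || darker >= 3;
}
```

In practice the quick test would run first, and the full test only on pixels that pass it.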

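ORB improves on plain BRIEF by adding rotation invariance and noise suppression. It achieves rotation invariance by computing an orientation angle from the image region around each keypoint and using that angle when building the BRIEF descriptor.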
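One common way to obtain such an orientation angle, and the one ORB is usually described as using, is the intensity centroid: the angle of the vector from the patch center to the intensity-weighted centroid, theta = atan2(m01, m10). The sketch below is an illustration under simplifying assumptions: it uses a square row-major patch rather than a circular one, and the function name `patchOrientation` is hypothetical, not an OpenCV call.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Orientation of a square grayscale patch via the intensity centroid.
// `patch` holds size * size gray values in row-major order.
// Moments are taken about the patch center; the result is in radians,
// with y growing downward as in image coordinates.
double patchOrientation(const std::vector<unsigned char>& patch, int size) {
    const double c = (size - 1) / 2.0;  // coordinate of the patch center
    double m10 = 0.0;                   // first-order moment along x
    double m01 = 0.0;                   // first-order moment along y
    for (int y = 0; y < size; ++y) {
        for (int x = 0; x < size; ++x) {
            const double v = patch[static_cast<std::size_t>(y) * size + x];
            m10 += (x - c) * v;
            m01 += (y - c) * v;
        }
    }
    return std::atan2(m01, m10);
}
```

Rotating the descriptor's sampling pattern by this angle is what makes the resulting binary descriptor comparable across rotated views of the same patch.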

[Figure: flowchart of the complete ORB feature descriptor algorithm]

[Figure: run results]

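Note also that before using these methods, OpenCV has to be extended so that nonfree.hpp, legacy.hpp, and features2d.hpp can be included. This is done by using CMake to build and install the extension package downloaded from the official website; for a detailed tutorial, see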

https://blog.csdn.net/streamchuanxi/article/details/51044929

Code

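// CVE9sift.cpp : This file contains the "main" function. Program execution begins and ends there.
//
#include "pch.h"
// NOTE: the remaining #include directives, the opening of main(), and the code that loads
// image01/image02 and computes keyPoint1/keyPoint2 and imageDesc1/imageDesc2 are missing from the source.

    // Match the descriptors of the two images with a FLANN-based matcher
    FlannBasedMatcher matcher;
    vector<DMatch> matchePoints;
    matcher.match(imageDesc1, imageDesc2, matchePoints, Mat());

    // Find the smallest (best) and largest (worst) match distances.
    // Both start from the first match, so they are correct for any distance scale.
    double minMatch = matchePoints.empty() ? 0.0 : matchePoints[0].distance;
    double maxMatch = minMatch;
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        minMatch = minMatch > matchePoints[i].distance ? matchePoints[i].distance : minMatch;
        maxMatch = maxMatch < matchePoints[i].distance ? matchePoints[i].distance : maxMatch;
    }

    // Print the best and worst distances
    cout << "Best match distance: " << minMatch << endl;
    cout << "Worst match distance: " << maxMatch << endl;

    // Keep only the matches in the better half of the distance range
    vector<DMatch> goodMatchePoints;
    for (size_t i = 0; i < matchePoints.size(); i++)
    {
        if (matchePoints[i].distance < minMatch + (maxMatch - minMatch) / 2)
        {
            goodMatchePoints.push_back(matchePoints[i]);
        }
    }

    // Draw the good matches; keypoints without a match are not drawn
    Mat imageOutput;
    drawMatches(image01, keyPoint1, image02, keyPoint2, goodMatchePoints, imageOutput, Scalar::all(-1),
        Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    imshow("Match Points", imageOutput);
    waitKey();
    return 0;
}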

//ORB

#include