NLP Basics Experiment ②: THUCNews News Text Classification with TextCNN

I. TextCNN

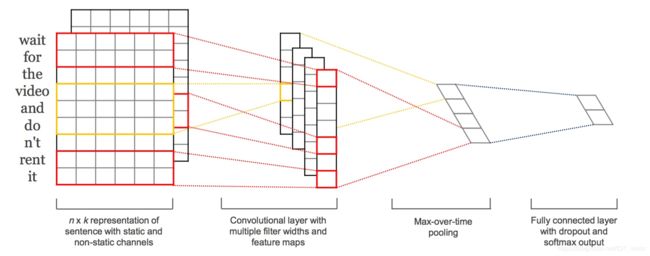

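The figure below shows the TextCNN architecture, proposed in 2014 by Yoon Kim in "Convolutional Neural Networks for Sentence Classification". The fastText network ignores word order entirely, yet the n-gram feature trick it relies on shows exactly how important local sequence information is. Convolutional neural networks (CNNs) first achieved great success in computer vision. We will not cover how CNNs work here; the key point is that they capture local correlations, so in text classification a CNN can extract key n-gram-like features from a sentence.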

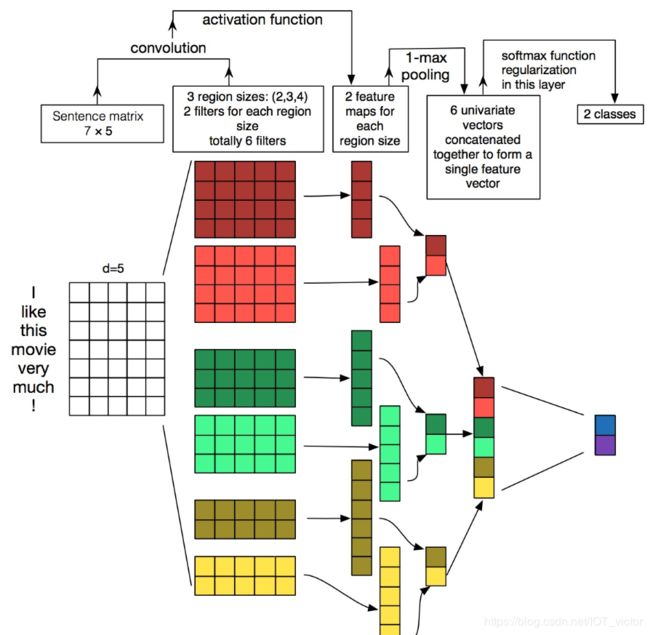

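The detailed TextCNN process is as follows. The first layer is the 7×5 sentence matrix on the far left of the figure: each row is a word vector of dimension 5, analogous to the raw pixels of an image. The second layer is a one-dimensional convolutional layer with filter_size = (2, 3, 4), with two output channels (filters) for each filter size. The third layer is 1-max pooling, which turns sentences of different lengths into a fixed-length representation (here 3 × 2 = 6 features). Finally, a fully connected softmax layer outputs the probability of each class.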
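The walk-through above can be sketched as a forward pass in plain Python. This is a minimal illustration with random weights, not the referenced implementation: the ReLU after each convolution, the choice of two output classes, and the function names (conv1d_max, textcnn_forward, rand_matrix) are assumptions made for the example.

```python
import math
import random

def conv1d_max(sentence, kernel, bias):
    """Slide one filter of height len(kernel) along the word axis (no padding),
    apply ReLU (assumed), and keep only the largest response (1-max pooling)."""
    k = len(kernel)
    responses = []
    for start in range(len(sentence) - k + 1):
        window = sentence[start:start + k]
        s = bias
        for row, w_row in zip(window, kernel):
            s += sum(x * w for x, w in zip(row, w_row))  # filter spans the full word vector
        responses.append(max(s, 0.0))
    return max(responses)

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def textcnn_forward(sentence, filters, fc_weights, fc_bias):
    # One pooled feature per filter: 3 filter sizes x 2 filters = 6 features
    features = [conv1d_max(sentence, kernel, bias) for kernel, bias in filters]
    logits = [sum(f * w for f, w in zip(features, row)) + b
              for row, b in zip(fc_weights, fc_bias)]
    return features, softmax(logits)

random.seed(0)
seq_len, dim, num_classes = 7, 5, 2  # num_classes = 2 is a hypothetical choice

def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

sentence = rand_matrix(seq_len, dim)  # the 7 x 5 sentence matrix
filters = [(rand_matrix(size, dim), 0.0) for size in (2, 3, 4) for _ in range(2)]
fc_weights = rand_matrix(num_classes, len(filters))
fc_bias = [0.0] * num_classes

features, probs = textcnn_forward(sentence, filters, fc_weights, fc_bias)
print(len(features), [round(p, 3) for p in probs])
```

Whatever the sentence length, the pooled vector always has one entry per filter, which is why the fully connected layer can have a fixed size.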

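Features: here the features are word vectors, used either statically or non-statically. The static approach uses pre-trained word vectors (e.g., word2vec) and does not update them during training; this is essentially transfer learning, and static vectors often work well, especially when data is scarce. The non-static approach updates the word vectors during training. The recommended option is non-static fine-tuning: initialize the embeddings with pre-trained word2vec vectors and adjust them during training, which speeds up convergence. Given enough training data and compute, randomly initialized word vectors can also work well.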
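As a minimal sketch of the difference, assume a toy embedding table (the class ToyEmbedding and its methods are hypothetical, not part of any library): a static table copies the pre-trained vectors and ignores gradient updates, while a non-static table starts from the same vectors and is updated by each step. Words without a pre-trained vector get a random initialization; the ±0.25 range is an arbitrary choice for the example.

```python
import random

class ToyEmbedding:
    """Hypothetical embedding table. trainable=False mimics the static setting,
    trainable=True the non-static (fine-tuning) setting."""

    def __init__(self, vocab, dim, pretrained=None, trainable=True, seed=0):
        rng = random.Random(seed)
        self.trainable = trainable
        self.table = {}
        for word in vocab:
            if pretrained is not None and word in pretrained:
                self.table[word] = list(pretrained[word])  # copy of the pre-trained vector
            else:
                self.table[word] = [rng.uniform(-0.25, 0.25) for _ in range(dim)]  # random init

    def lookup(self, words):
        return [self.table[w] for w in words]

    def apply_gradient(self, word, grad, lr=0.1):
        """Plain SGD step on one word vector; a frozen (static) table ignores it."""
        if not self.trainable:
            return
        self.table[word] = [v - lr * g for v, g in zip(self.table[word], grad)]


pretrained = {"good": [0.5, 0.1], "bad": [-0.4, 0.2]}  # hypothetical pre-trained vectors
vocab = ["good", "bad", "scenery"]                     # "scenery" has no pre-trained vector
static = ToyEmbedding(vocab, 2, pretrained, trainable=False)
non_static = ToyEmbedding(vocab, 2, pretrained, trainable=True)
for emb in (static, non_static):
    emb.apply_gradient("good", [1.0, 1.0])
print(static.lookup(["good"]))      # [[0.5, 0.1]]  (unchanged)
print(non_static.lookup(["good"]))  # first entry moved from 0.5 to about 0.4
```

In a real framework the same switch is usually a flag on the embedding layer; in Keras, for example, every layer accepts `trainable=False`.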

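Channels: an image can use (R, G, B) as its channels, whereas the input channels for text are usually different kinds of embeddings (e.g., word2vec or GloVe). In practice, static and fine-tuned word vectors are also sometimes used as two separate channels.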
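A minimal sketch of the two-channel idea, with hypothetical helper names and made-up 3-dimensional vectors: the static table and a fine-tuned copy are stacked into a (channels, seq_len, dim) input, and one filter responds to a window by summing its per-channel dot products, as a standard multi-channel convolution does. The "fine-tuning step" here is faked by editing one value by hand.

```python
import copy

def stack_channels(words, tables):
    """Nested list shaped (channels, seq_len, dim): one channel per embedding table."""
    return [[list(table[w]) for w in words] for table in tables]

def conv_response(x, kernels, start):
    """Response of one multi-channel filter at window `start`.
    kernels[c] is a (k, dim) weight matrix for channel c; per-channel responses are summed."""
    total = 0.0
    for channel, kernel in zip(x, kernels):
        window = channel[start:start + len(kernel)]
        for row, k_row in zip(window, kernel):
            total += sum(v * w for v, w in zip(row, k_row))
    return total

pretrained = {"scenery": [0.2, 0.7, -0.1], "nice": [0.6, 0.3, 0.0]}  # hypothetical vectors
static_table = pretrained                  # channel 1: frozen pre-trained vectors
tuned_table = copy.deepcopy(pretrained)    # channel 2: a copy that training may update
tuned_table["nice"][0] += 0.05             # pretend a fine-tuning step changed one value

x = stack_channels(["scenery", "nice"], [static_table, tuned_table])
print(len(x), len(x[0]), len(x[0][0]))     # 2 2 3  (channels, seq_len, dim)
ones = [[1.0, 1.0, 1.0]]                   # a 1 x 3 filter (height 1) for each channel
print(conv_response(x, [ones, ones], start=1))
```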

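One-dimensional convolution (conv-1d): images are two-dimensional, whereas text represented by word vectors is a one-dimensional sequence, so TextCNN uses one-dimensional convolution: each filter slides only along the word axis and always covers the full word-vector dimension. As a consequence, filters with different filter_size values are needed to obtain receptive fields of different widths (that is, different n-gram lengths).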
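To make the receptive-field point concrete, here is a small sketch that computes how many positions a 1-D filter visits and how many weights it has, given that each filter spans the full embedding dimension. The helper names are hypothetical, and stride 1 with no padding ("valid" convolution) is assumed; this post does not say whether the referenced code pads.

```python
def conv1d_output_length(seq_len, kernel_size, stride=1, padding=0):
    """Number of positions a 1-D filter visits along the word axis."""
    return (seq_len + 2 * padding - kernel_size) // stride + 1

def conv1d_param_count(kernel_size, embedding_dim, num_filters):
    """Each filter is a (kernel_size x embedding_dim) weight matrix plus one bias."""
    return num_filters * (kernel_size * embedding_dim + 1)

# The 7 x 5 example sentence with filter sizes 2, 3 and 4 (no padding):
print([conv1d_output_length(7, k) for k in (2, 3, 4)])  # [6, 5, 4]
# seq_length=600, kernel_size=5, embedding_dim=64, num_filters=128 as in TCNNConfig:
print(conv1d_output_length(600, 5))    # 596
print(conv1d_param_count(5, 64, 128))  # 41088
```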

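Pooling layer: many papers apply CNNs to text classification. For example, the most interesting idea in A Convolutional Neural Network for Modelling Sentences is replacing ordinary pooling with (dynamic) k-max pooling: the pooling stage keeps the k largest values, in their original order, so global sequence information is preserved. Take sentiment analysis as an example: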

"I think the scenery here is quite nice, but there are just far too many people."

Although the first half expresses positive sentiment, the text as a whole leans negative, and k-max pooling captures this kind of information well.
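A minimal sketch contrasting 1-max pooling with fixed-k max pooling on one filter's feature map (the function name and the feature values are hypothetical). The paper's dynamic variant additionally adapts k to sentence length and layer depth; that part is not shown.

```python
import heapq

def k_max_pooling(values, k):
    """Keep the k largest values of a 1-D feature map, preserving their original order."""
    if k >= len(values):
        return list(values)
    top = heapq.nlargest(k, range(len(values)), key=lambda i: values[i])
    return [values[i] for i in sorted(top)]

# Hypothetical responses of one filter at five positions of a sentence
feature_map = [0.3, 0.9, 0.1, 0.7, 0.8]
print(max(feature_map))               # 0.9  (1-max pooling keeps a single value)
print(k_max_pooling(feature_map, 3))  # [0.9, 0.7, 0.8]  (k-max keeps positional order)
```

Because the selected values stay in sentence order, later layers can still tell a strong response early in the sentence from one near the end.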

II. THUCNews News Text Classification

1. Dataset

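The experiment uses a subset of the THUCNews news text classification dataset released by the Tsinghua University NLP group (the original dataset contains about 740,000 documents and takes a long time to train on).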

Official download of the full dataset:

http://thuctc.thunlp.org/

This experiment uses 10 of its categories, with 6,500 articles per category, for 65,000 news articles in total.
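Before training, each article has to become a fixed-length sequence of ids. The sketch below assumes character-level input (as the title of reference [1] suggests), with vocab_size and seq_length playing the roles of the values 5000 and 600 in the configuration below. The helper names, the <PAD> token at id 0, dropping out-of-vocabulary characters, and truncating/padding at the end are all assumptions; the referenced code may handle these details differently.

```python
from collections import Counter

def build_vocab(texts, vocab_size):
    """Id 0 is reserved for padding; the remaining ids go to the most frequent characters."""
    counts = Counter(ch for text in texts for ch in text)
    chars = ["<PAD>"] + [ch for ch, _ in counts.most_common(vocab_size - 1)]
    return {ch: i for i, ch in enumerate(chars)}

def encode(text, vocab, seq_length):
    """Map characters to ids, drop unknown characters, then truncate or pad with 0."""
    ids = [vocab[ch] for ch in text if ch in vocab][:seq_length]
    return ids + [0] * (seq_length - len(ids))

# Hypothetical headlines: "stock market surges", "stock market falls", "stocks"
texts = ["股市大涨", "股市下跌", "股票"]
vocab = build_vocab(texts, vocab_size=3)         # {'<PAD>': 0, '股': 1, '市': 2}
print(encode("股市大涨", vocab, seq_length=6))          # [1, 2, 0, 0, 0, 0]
print(encode("股市股市股市股市", vocab, seq_length=6))  # [1, 2, 1, 2, 1, 2]
```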

2. Model

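CNN model configuration (the TCNNConfig class from the code in [2]). Note that this implementation uses a single kernel_size of 5 rather than several filter sizes such as the (2, 3, 4) in the example above: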

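```python
class TCNNConfig(object):
    """CNN configuration parameters"""

    embedding_dim = 64       # word-vector (embedding) dimension
    seq_length = 600         # sequence length
    num_classes = 10         # number of classes
    num_filters = 128        # number of convolution filters
    kernel_size = 5          # convolution kernel size
    vocab_size = 5000        # vocabulary size

    hidden_dim = 128         # neurons in the fully connected layer

    dropout_keep_prob = 0.5  # dropout keep probability
    learning_rate = 1e-3     # learning rate

    batch_size = 64          # training batch size
    num_epochs = 10          # total number of epochs

    print_per_batch = 100    # print results every N batches
    save_per_batch = 10      # write to TensorBoard every N batches
```

3. Results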

Run python run_cnn.py train from the command line:

```text
Training and evaluating...
Epoch: 1
Iter: 0, Train Loss: 2.3, Train Acc: 15.62%, Val Loss: 2.3, Val Acc: 9.98%, Time: 0:00:02 *
Iter: 100, Train Loss: 1.0, Train Acc: 67.19%, Val Loss: 1.1, Val Acc: 68.36%, Time: 0:00:05 *
Iter: 200, Train Loss: 0.51, Train Acc: 81.25%, Val Loss: 0.61, Val Acc: 80.54%, Time: 0:00:08 *
Iter: 300, Train Loss: 0.33, Train Acc: 90.62%, Val Loss: 0.41, Val Acc: 87.16%, Time: 0:00:10 *
Iter: 400, Train Loss: 0.27, Train Acc: 89.06%, Val Loss: 0.36, Val Acc: 89.80%, Time: 0:00:13 *
Iter: 500, Train Loss: 0.2, Train Acc: 93.75%, Val Loss: 0.3, Val Acc: 92.14%, Time: 0:00:16 *
Iter: 600, Train Loss: 0.14, Train Acc: 95.31%, Val Loss: 0.29, Val Acc: 92.64%, Time: 0:00:18 *
Iter: 700, Train Loss: 0.092, Train Acc: 95.31%, Val Loss: 0.29, Val Acc: 92.50%, Time: 0:00:21
Epoch: 2
Iter: 800, Train Loss: 0.093, Train Acc: 98.44%, Val Loss: 0.27, Val Acc: 92.94%, Time: 0:00:24 *
Iter: 900, Train Loss: 0.048, Train Acc: 100.00%, Val Loss: 0.25, Val Acc: 93.08%, Time: 0:00:27 *
Iter: 1000, Train Loss: 0.034, Train Acc: 100.00%, Val Loss: 0.29, Val Acc: 92.06%, Time: 0:00:29
Iter: 1100, Train Loss: 0.15, Train Acc: 98.44%, Val Loss: 0.26, Val Acc: 92.92%, Time: 0:00:32
Iter: 1200, Train Loss: 0.12, Train Acc: 96.88%, Val Loss: 0.25, Val Acc: 92.64%, Time: 0:00:34
Iter: 1300, Train Loss: 0.21, Train Acc: 92.19%, Val Loss: 0.21, Val Acc: 94.16%, Time: 0:00:37 *
Iter: 1400, Train Loss: 0.15, Train Acc: 95.31%, Val Loss: 0.23, Val Acc: 93.74%, Time: 0:00:39
Iter: 1500, Train Loss: 0.088, Train Acc: 96.88%, Val Loss: 0.29, Val Acc: 90.94%, Time: 0:00:42
Epoch: 3
Iter: 1600, Train Loss: 0.071, Train Acc: 98.44%, Val Loss: 0.21, Val Acc: 93.80%, Time: 0:00:44
Iter: 1700, Train Loss: 0.046, Train Acc: 98.44%, Val Loss: 0.19, Val Acc: 94.82%, Time: 0:00:47 *
Iter: 1800, Train Loss: 0.094, Train Acc: 96.88%, Val Loss: 0.23, Val Acc: 93.60%, Time: 0:00:50
Iter: 1900, Train Loss: 0.073, Train Acc: 96.88%, Val Loss: 0.2, Val Acc: 94.30%, Time: 0:00:52
Iter: 2000, Train Loss: 0.017, Train Acc: 100.00%, Val Loss: 0.2, Val Acc: 94.48%, Time: 0:00:55
Iter: 2100, Train Loss: 0.039, Train Acc: 100.00%, Val Loss: 0.2, Val Acc: 95.02%, Time: 0:00:58 *
Iter: 2200, Train Loss: 0.0094, Train Acc: 100.00%, Val Loss: 0.22, Val Acc: 93.96%, Time: 0:01:00
Iter: 2300, Train Loss: 0.069, Train Acc: 98.44%, Val Loss: 0.2, Val Acc: 94.52%, Time: 0:01:03
Epoch: 4
Iter: 2400, Train Loss: 0.018, Train Acc: 98.44%, Val Loss: 0.18, Val Acc: 95.22%, Time: 0:01:05 *
Iter: 2500, Train Loss: 0.082, Train Acc: 98.44%, Val Loss: 0.22, Val Acc: 94.06%, Time: 0:01:08
Iter: 2600, Train Loss: 0.012, Train Acc: 100.00%, Val Loss: 0.2, Val Acc: 95.04%, Time: 0:01:10
Iter: 2700, Train Loss: 0.077, Train Acc: 98.44%, Val Loss: 0.18, Val Acc: 95.46%, Time: 0:01:13 *
Iter: 2800, Train Loss: 0.025, Train Acc: 98.44%, Val Loss: 0.18, Val Acc: 95.36%, Time: 0:01:16
Iter: 2900, Train Loss: 0.091, Train Acc: 95.31%, Val Loss: 0.23, Val Acc: 93.90%, Time: 0:01:18
Iter: 3000, Train Loss: 0.036, Train Acc: 96.88%, Val Loss: 0.17, Val Acc: 95.40%, Time: 0:01:21
Iter: 3100, Train Loss: 0.039, Train Acc: 98.44%, Val Loss: 0.19, Val Acc: 95.26%, Time: 0:01:23
Epoch: 5
Iter: 3200, Train Loss: 0.012, Train Acc: 100.00%, Val Loss: 0.19, Val Acc: 95.08%, Time: 0:01:26
Iter: 3300, Train Loss: 0.0018, Train Acc: 100.00%, Val Loss: 0.19, Val Acc: 95.42%, Time: 0:01:28
Iter: 3400, Train Loss: 0.0025, Train Acc: 100.00%, Val Loss: 0.19, Val Acc: 95.04%, Time: 0:01:31
Iter: 3500, Train Loss: 0.053, Train Acc: 98.44%, Val Loss: 0.21, Val Acc: 94.64%, Time: 0:01:33
Iter: 3600, Train Loss: 0.022, Train Acc: 98.44%, Val Loss: 0.22, Val Acc: 94.14%, Time: 0:01:36
Iter: 3700, Train Loss: 0.0015, Train Acc: 100.00%, Val Loss: 0.18, Val Acc: 95.12%, Time: 0:01:38
No optimization for a long time, auto-stopping...
```

Lines marked * are evaluations where the validation accuracy reached a new best. The best validation accuracy was 95.46% (iteration 2700). After 1,000 further iterations without improvement, training stopped early in epoch 5 of the configured 10, with 95.12% validation accuracy at the final evaluation (iteration 3700).
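To check these figures, the validation accuracies from the log can be replayed through a simple early-stopping rule. The rule is an assumption chosen for illustration (stop at the first evaluation that comes at least `patience` = 1000 iterations after the last new best); it reproduces the stopping point in this log, but the exact check in the referenced code may differ. The function name is hypothetical.

```python
# Validation accuracy (%) from the log above, one value per evaluation (every 100 iterations)
VAL_ACC = [
    9.98, 68.36, 80.54, 87.16, 89.80, 92.14, 92.64, 92.50,   # epoch 1: iters 0-700
    92.94, 93.08, 92.06, 92.92, 92.64, 94.16, 93.74, 90.94,  # epoch 2: iters 800-1500
    93.80, 94.82, 93.60, 94.30, 94.48, 95.02, 93.96, 94.52,  # epoch 3: iters 1600-2300
    95.22, 94.06, 95.04, 95.46, 95.36, 93.90, 95.40, 95.26,  # epoch 4: iters 2400-3100
    95.08, 95.42, 95.04, 94.64, 94.14, 95.12,                # epoch 5: iters 3200-3700
]

def replay_early_stopping(val_acc, eval_every=100, patience=1000):
    """Assumed rule: stop at the first evaluation at least `patience` iterations
    after the last new best. Returns (best_acc, best_iter, stop_iter, new_best_iters)."""
    best_acc, best_iter, new_best_iters = float("-inf"), 0, []
    for step, acc in enumerate(val_acc):
        iteration = step * eval_every
        if acc > best_acc:
            best_acc, best_iter = acc, iteration
            new_best_iters.append(iteration)  # these match the lines marked * in the log
        elif iteration - best_iter >= patience:
            return best_acc, best_iter, iteration, new_best_iters
    return best_acc, best_iter, None, new_best_iters

best_acc, best_iter, stop_iter, marked = replay_early_stopping(VAL_ACC)
print(best_acc, best_iter, stop_iter)  # 95.46 2700 3700
```

With early stopping of this kind, the checkpoint worth keeping is the one from the best evaluation (iteration 2700), not the last one.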

References

[1] Character-level Chinese text classification with CNN, implemented in TensorFlow

https://blog.csdn.net/u011439796/article/details/77692621

[2] code

https://github.com/gaussic/text-classification-cnn-rnn

[3] TextCNN

https://zhuanlan.zhihu.com/p/25928551