A Simple Neural Network Example

Building the Neural Network

```python
# Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt


def add_layer(inputs, in_size, out_size, activation_function=None):
    # Fully connected layer: outputs = activation(inputs @ Weights + biases)
    Weights = tf.Variable(tf.random_normal([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, Weights) + biases
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs


# Training data: 300 evenly spaced points in [-1, 1], shaped as a column vector
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise  # y = x^2 - 0.5 plus Gaussian noise

xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# Hidden layer (1 -> 10, ReLU), then output layer (10 -> 1, linear)
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
prediction = add_layer(l1, 10, 1, activation_function=None)

# Square each error, sum over the output dimension, then average over the samples
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=1))
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)

fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.scatter(x_data, y_data)
plt.ion()  # interactive mode: plt.show() does not block, so the plot can be redrawn
plt.show()

lines = None
for i in range(1000):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        # Remove the previously drawn fit before plotting the new one
        if lines is not None:
            lines[0].remove()
        prediction_value = sess.run(prediction, feed_dict={xs: x_data})
        lines = ax.plot(x_data, prediction_value, 'r-', lw=5)
        plt.pause(0.1)
        # print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
```
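To make the shapes in the network above easier to follow, here is a minimal NumPy sketch of the same forward pass and loss reduction. It is an illustration rather than the TensorFlow implementation: the names `dense` and `relu` and the fixed seed are assumptions introduced here, the targets are left noise-free for simplicity, and no training takes place.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only to make the sketch repeatable


def relu(z):
    return np.maximum(z, 0.0)


def dense(inputs, weights, biases, activation=None):
    """NumPy stand-in for add_layer: activation(inputs @ weights + biases)."""
    z = inputs @ weights + biases  # biases of shape (1, out_size) broadcast over the batch
    return z if activation is None else activation(z)


x = np.linspace(-1, 1, 300)[:, np.newaxis]  # shape (300, 1)
y = np.square(x) - 0.5                      # noise-free targets, shape (300, 1)

# Randomly initialised parameters with the same shapes as in add_layer
w1, b1 = rng.normal(size=(1, 10)), np.zeros((1, 10)) + 0.1
w2, b2 = rng.normal(size=(10, 1)), np.zeros((1, 1)) + 0.1

hidden = dense(x, w1, b1, activation=relu)  # shape (300, 10)
pred = dense(hidden, w2, b2)                # shape (300, 1)

# Same reduction as the loss: sum the squared errors per sample, then average
loss = np.mean(np.sum(np.square(y - pred), axis=1))
print(hidden.shape, pred.shape, loss)
```

Because the output has a single column, the sum over axis 1 adds only one term per sample, so the loss here is the ordinary mean squared error.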

Visualizing It with TensorBoard

import numpy as np

import matplotlib,pylab as plt

def add_layer(input,in_size,out_size,n_layer,activation_function = None):

layer_name = 'layer%s'% n_layer

with tf.name_scope(layer_name):

with tf.name_scope('weights'):

Weights = tf.Variable(tf.random_normal([in_size,out_size]),name = 'w')

tf.summary.histogram(layer_name+'/weights',Weights)

with tf.name_scope('biases'):

biases = tf.Variable(tf.zeros([1,out_size])+0.1)

tf.summary.histogram(layer_name + '/biases', biases)

with tf.name_scope('Wx_plus_b'):

Wx_plus_b = tf.matmul(input,Weights)+biases

if activation_function is None:

outputs = Wx_plus_b

tf.summary.histogram(layer_name + '/outputs', outputs)

else:

outputs = activation_function(Wx_plus_b)

return outputs

#输入层到神经层

x_data = np.linspace(-1,1,300)[:,np.newaxis] #产生等差数列

noise = np.random.normal(0,0.05,x_data.shape)

y_data = np.square(x_data)-0.5+noise #对x_data进行平方

with tf.name_scope('input'):

xs = tf.placeholder(tf.float32, [None, 1], name='x_input')

ys = tf.placeholder(tf.float32, [None, 1], name='y_input')

**将其可视化**

l1 = add_layer(xs,1,10,n_layer = 1,activation_function=tf.nn.relu)

prediction = add_layer(l1,10,1,n_layer = 2,activation_function=None)

with tf.name_scope('loss'):

loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys-prediction),reduction_indices=[1])) #将每个y_data-prediction值取平方,然后求和后去平均值

tf.summary.scalar('loss',loss) #event图

with tf.name_scope('train'):

train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.initialize_all_variables()

sess = tf.Session()

merged = tf.summary.merge_all()

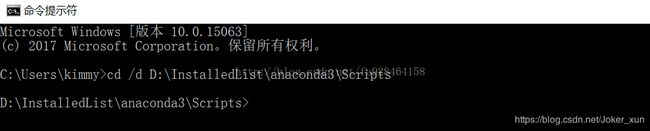

writer = tf.summary.FileWriter("C:/Users/Administrator/Desktop/model",sess.graph)

sess.run(init)

for i in range(1000):

sess.run(train_step,feed_dict={xs:x_data,ys:y_data})

if i%50==0:

result = sess.run(merged,feed_dict={xs:x_data,ys:y_data})

writer.add_summary(result,i)

fig = plt.figure()

ax = fig.add_subplot(1,1,1)

ax.scatter(x_data,y_data)

plt.ion() #使程序不暂停,图像可变动

plt.show()

for i in range(1000):

sess.run(train_step,feed_dict ={xs:x_data,ys:y_data} )

if i%50==0:

try:

ax.lines.remove(lines[0])

except Exception:

pass

prediction_value = sess.run(prediction,feed_dict={xs:x_data})

lines = ax.plot(x_data,prediction_value,'r-',lw = 5)

plt.pause(0.1)

#print(sess.run(loss,feed_dict ={xs:x_data,ys:y_data}))
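When reading the loss chart in TensorBoard, it can help to know roughly where the curve should level off. The targets include Gaussian noise with standard deviation 0.05, so even a model that reproduced x^2 - 0.5 exactly would still have a mean squared error near 0.05^2 = 0.0025. The NumPy sketch below estimates that floor. The seed and the `ideal_prediction` stand-in are assumptions made for illustration, and the trained network may settle above this value.

```python
import numpy as np

rng = np.random.default_rng(42)  # arbitrary seed, used only for repeatability

x = np.linspace(-1, 1, 300)[:, np.newaxis]
noise = rng.normal(0, 0.05, x.shape)
y = np.square(x) - 0.5 + noise

# Hypothetical perfect model: outputs the true curve with no error of its own
ideal_prediction = np.square(x) - 0.5

# Same reduction as the loss in the training script
noise_floor = np.mean(np.sum(np.square(y - ideal_prediction), axis=1))
print(noise_floor)  # near 0.05 ** 2 = 0.0025; the exact value depends on the seed
```

A loss well above this level suggests the network has not yet fit the curve, while a loss noticeably below it would suggest the network is starting to fit the noise.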