Hadoop————全排序和二次排序

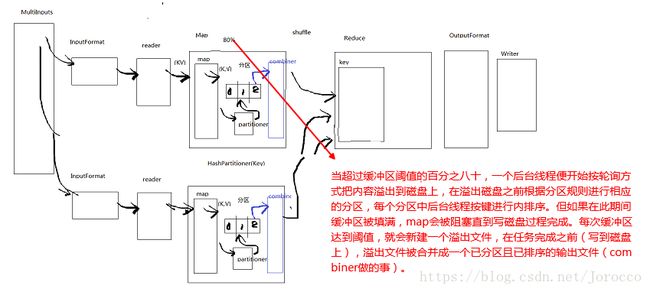

1、多输入

使用多个输入作为job的输入来源,也就是在InputFormat 前把添加各种不同的序列源里面的方法也就是 addInputPath等等,map也可以在这个流程中套进来。

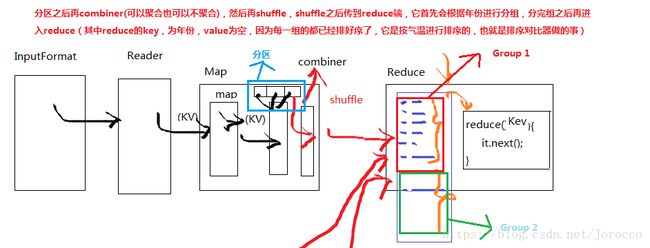

combiner:合成,map的reduce(聚合) 在分区内聚合,分区后产生数据后在分区内聚合(每个分区都会有一个)。

代码示例

WCTextMapper.java(文本输入格式)

package cn.ctgu.mr.multiinput;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCTextMapper extends Mapper<LongWritable,Text,Text,IntWritable> {

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

Text keyout=new Text();

IntWritable valueOut=new IntWritable();

String[] arr=value.toString().split(" ");

for(String s:arr){

keyout.set(s);

valueOut.set(1);

context.write(keyout,valueOut);

}

}

}WCSequenceMapper.java(序列化文件输入格式)

package cn.ctgu.mr.multiinput;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSequenceMapper extends Mapper<IntWritable,Text,Text,IntWritable> {

protected void map(IntWritable key, Text value, Context context) throws IOException, InterruptedException {

Text keyout=new Text();

IntWritable valueOut=new IntWritable();

String[] arr=value.toString().split(" ");

for(String s:arr){

keyout.set(s);

valueOut.set(1);

context.write(keyout,valueOut);

}

}

}WCReducer.java

package cn.ctgu.mr.multiinput;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCReducer extends Reducer<Text,IntWritable,Text,IntWritable>{

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable iw:values){

count=count+iw.get();

}

context.write(key,new IntWritable(count));

}

} WCApp.java

package cn.ctgu.mr.multiinput;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.MultipleInputs;

import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("MaxTempApp");//作业名称

job.setJarByClass(WCApp.class);//搜索类

//多个输入

MultipleInputs.addInputPath(job,new Path("file:///J:/mr/text"),TextInputFormat.class,WCTextMapper.class);

MultipleInputs.addInputPath(job,new Path("file:///J:/mr/seq"), SequenceFileInputFormat.class,WCSequenceMapper.class);

//设置输出

FileOutputFormat.setOutputPath(job,new Path(args[0]));

job.setReducerClass(WCReducer.class);//reduce类

job.setNumReduceTasks(3);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}2、全排序

所谓全排序就是不仅在每个分区上是有序的,它所有的分区按大小合并起来也是有序的。该案例是求取每年的最高气温。

实现全排序的方式有三种:

1、只定义一个reduce任务,容易产生数据倾斜。

2、自定义分区函数,即自行设置分解区间

3、使用hadoop采样器机制,通过采样器生成分区文件,结合hadoop的TotalOrderPartitioner进行分区划分分区。

2.1 采用自定义分区函数实现

YearPartitioner.java

package cn.ctgu.MaxTemp;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapreduce.Partitioner;

/*

*

* 重定义分区的方式

*

* 将100年的数据划分为3个区

* 小于33的为一个区

* 33~66的为第二个区

* 66~100的为第三个区

*

*

* */

public class YearPartitioner extends Partitioner<IntWritable,IntWritable>{

public int getPartition(IntWritable year,IntWritable temp,int numPartitions){

int y=year.get()-1970;

if(y<33){

return 0;

}

else if(y>=33&&y<66){

return 1;

}

else{

return 2;

}

}

}MaxTempMapper.java

package cn.ctgu.MaxTemp;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempMapper extends Mapper<LongWritable,Text,IntWritable,IntWritable> {

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String line=value.toString();

String arr[]=line.split(" ");

context.write(new IntWritable(Integer.parseInt(arr[0])),new IntWritable(Integer.parseInt(arr[1])));

}

}MaxTempReducer.java

package cn.ctgu.MaxTemp;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempReducer extends Reducer<IntWritable,IntWritable,IntWritable,IntWritable>{

protected void reduce(IntWritable key, Iterable values, Context context) throws IOException, InterruptedException {

int max=Integer.MIN_VALUE;

for(IntWritable iw:values){

max=max>iw.get()?max:iw.get();

}

context.write(key,new IntWritable(max));

}

} MaxTempApp.java

package cn.ctgu.MaxTemp;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 全排序:不仅在每个分区上是有序的,它所有的分区按大小合并起来也是有序的

* 全排序两种方式:1、定义一个reduce;2、自定义分区;3、采样器

* 求每年的最高气温

*/

public class MaxTempApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("MaxTempApp");//作业名称

job.setJarByClass(MaxTempApp.class);//搜索类

job.setInputFormatClass(TextInputFormat.class);//设置输入格式

//添加输入路径

FileInputFormat.addInputPath(job,new Path(args[0]));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path(args[1]));

job.setPartitionerClass(YearPartitioner.class);//设置分区类

job.setMapperClass(MaxTempMapper.class);//mapper类

job.setReducerClass(MaxTempReducer.class);//reduce类

job.setNumReduceTasks(3);//reduce个数,它会根据我们重定义的分区方式进行将数据进行分区,在每个分区上做mapreduce操作,即最终得到的结果在整体上也是有序的

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(IntWritable.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}2.2 采用hadoop采样机制实现

MaxTempMapper.java

package cn.ctgu.allsort;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempMapper extends Mapper<IntWritable,IntWritable,IntWritable,IntWritable> {

protected void map(IntWritable key, IntWritable value, Context context) throws IOException, InterruptedException {

context.write(key,value);

}

}MaxTempReducer.java

package cn.ctgu.allsort;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempReducer extends Reducer<IntWritable,IntWritable,IntWritable,IntWritable>{

protected void reduce(IntWritable key, Iterable values, Context context) throws IOException, InterruptedException {

int max=Integer.MIN_VALUE;

for(IntWritable iw:values){

max=max>iw.get()?max:iw.get();

}

context.write(key,new IntWritable(max));

}

} MaxTempApp.java

package cn.ctgu.allsort;

import cn.ctgu.MaxTemp.YearPartitioner;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapred.lib.InputSampler;

import org.apache.hadoop.mapred.lib.TotalOrderPartitioner;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 全排序:不仅在每个分区上是有序的,它所有的分区按大小合并起来也是有序的

* 全排序两种方式:1、定义一个reduce;2、自定义分区;3、采样器

* 求每年的最高气温

*/

public class MaxTempApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("MaxTempApp");//作业名称

job.setJarByClass(MaxTempApp.class);//搜索类

job.setInputFormatClass(SequenceFileInputFormat.class);//设置输入格式,全排序分区类的输入格式由于只能是IntWritable格式的,所以这里只能用sequenceFileInputFormat,当然也可以用其他的格式,只要能满足IntWritable就行

//添加输入路径

FileInputFormat.addInputPath(job,new Path(args[0]));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path(args[1]));

job.setMapperClass(MaxTempMapper.class);//mapper类

job.setReducerClass(MaxTempReducer.class);//reduce类

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(IntWritable.class);//

job.setOutputValueClass(IntWritable.class);

//创建随机采样器对象

//freq:每个key被选中的概率,概率为1则是全选

//numSample:抽取样本的总数

//maxSplitSampled:最大采样切片数

InputSampler.Samplersampler=

new InputSampler.RandomSampler(1,10000,10);

job.setNumReduceTasks(3);//reduce个数,它会根据我们重定义的分区方式进行将数据进行分区,在每个分区上做mapreduce操作,即最终得到的结果在整体上也是有序的

//将sample数据写入分区文件

TotalOrderPartitioner.setPartitionFile(job.getConfiguration(),new Path("file:///J:/mr/par.lst"));

job.setPartitionerClass(TotalOrderPartitioner.class);//设置全排序分区类

InputSampler.writePartitionFile(job,sampler);

job.waitForCompletion(true);

}

} TotalOrderPartitioner //全排序分区类,读取外部生成的分区文件确定区间。使用时采样代码在最后端,否则会出现错误。

//分区文件设置,设置的job的配置对象,不要是之前的conf. TotalOrderPartitioner.setPartitionFile(job.getConfiguration(),new Path("d:/mr/par.lst"));3、二次排序

Key是可以排序的,需要对value排序。

二次排序就是首先按照第一字段排序,然后再对第一字段相同的行按照第二字段排序,且不能破坏第一次排序的结果。

二次排序分为以下几个步骤:

1、自定义组合key,即使得该原生数据的value能进行排序,原生的key-value组成一个key

2、自定义分区类,按照年份分区

3、定义分组对比器,使得同一组的数据调用同一个reduce方法

4、定义key排序对比器

3.1 自定义组合Key

ComboKey.java

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

//自定义的组合key

public class ComboKey implements WritableComparable{

private int year;

private int temp;

public int getYear() {

return year;

}

public void setYear(int year) {

this.year = year;

}

public int getTemp() {

return temp;

}

public void setTemp(int temp) {

this.temp = temp;

}

//序列化过程

public void write(DataOutput out) throws IOException {

//年份

out.writeInt(year);

//气温

out.writeInt(temp);

}

//反序列化过程

public void readFields(DataInput in) throws IOException {

year=in.readInt();

temp=in.readInt();

}

//对key进行比较实现,它只是对气温进行排序的

public int compareTo(ComboKey o) {

int y0=o.getYear();

int t0=o.getTemp();

//年份升序

// 年份相同,返回0则相等,

if(year==y0){

//气温降序

return -(temp-t0);//小于0是降序

}

else {

return year-y0;

}

}

} 3.2 自定义分区类

YearPartitioner.java

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Partitioner;

/*

*

* 自定义分区类,保证把同一个年份的分到同一个分区上(当然还有不是同一个年份的数据)

* 分区在map端,先分区再combiner,即将原生数据进行map之后再进行这个分区

* 而分组是在reduce端,即将分完区之后的数据再进行分组,分完组再进入reduce,它属于reduce的范畴

* */

public class YearPartitioner extends Partitioner<ComboKey,NullWritable>{

public int getPartition(ComboKey key, NullWritable nullWritable, int numPartitions) {

int year=key.getYear();

return year%numPartitions;

}

}3.3 自定义分组对比器

YearGroupComparator.java

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.RawComparator;

import org.apache.hadoop.io.WritableComparable;

import org.apache.hadoop.io.WritableComparator;

//按照年份进行分组对比器实现,即同一年份为一组,也就是同一组的进入一个reduce里面

public class YearGroupComparator extends WritableComparator {

protected YearGroupComparator(){

super(ComboKey.class,true);

}

public int compare(WritableComparable o1, WritableComparable o2) {

ComboKey k1= (ComboKey) o1;

ComboKey k2= (ComboKey) o2;

return k1.getYear()-k2.getYear();

}

}3.4 排序对比器

ComboKeyComparator.java

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.WritableComparable;

import org.apache.hadoop.io.WritableComparator;

/*

*

* 排序对比器,对年份相同的气温进行排序

* */

public class ComboKeyComparator extends WritableComparator {

protected ComboKeyComparator(){

super(ComboKey.class,true);

}

public int compare(WritableComparable o1, WritableComparable o2) {

ComboKey k1= (ComboKey) o1;

ComboKey k2= (ComboKey) o2;

return k1.compareTo(k2);

}

}3.5 编写Mapper

MaxTempMapper.java

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.junit.Test;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempMapper extends Mapper<LongWritable,Text,ComboKey,NullWritable> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String line=value.toString();

String[]arr=line.split(" ");

ComboKey keyOut=new ComboKey();

keyOut.setYear(Integer.parseInt(arr[0]));

keyOut.setTemp(Integer.parseInt(arr[1]));

context.write(keyOut,NullWritable.get());

}

}3.6 编写Reduce

package cn.ctgu.secondarysort;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class MaxTempReducer extends Reducer<ComboKey,NullWritable,IntWritable,IntWritable>{

/*

*

* */

protected void reduce(ComboKey key, Iterable values, Context context) throws IOException, InterruptedException {

int year=key.getYear();

int temp=key.getTemp();

System.out.println("===========分组============reduce");

for(NullWritable v:values){

System.out.println(key.getYear()+":"+key.getTemp());

}

context.write(new IntWritable(year),new IntWritable(temp));

}

} 3.7 编写主函数

MaxTempApp.java

package cn.ctgu.secondarysort;

import cn.ctgu.MaxTemp.*;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapred.lib.InputSampler;

import org.apache.hadoop.mapred.lib.TotalOrderPartitioner;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

*

* 二次排序:在每个分区上它是按年份排序的,年份相同则按气温从大到小排序

* 求每年的最高气温

*/

public class MaxTempApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("MaxTempApp");//作业名称

job.setJarByClass(MaxTempApp.class);//搜索类

job.setInputFormatClass(TextInputFormat.class);

//添加输入路径

FileInputFormat.addInputPath(job,new Path(args[0]));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path(args[1]));

job.setMapperClass(MaxTempMapper.class);//mapper类

job.setReducerClass(MaxTempReducer.class);//reduce类

//设置map输出类型

job.setMapOutputKeyClass(ComboKey.class);

job.setMapOutputValueClass(NullWritable.class);

//设置reduceOutput类型

job.setOutputKeyClass(IntWritable.class);//

job.setOutputValueClass(IntWritable.class);

//设置分区类

job.setPartitionerClass(YearPartitioner.class);

//设置分组对比器

job.setGroupingComparatorClass(YearGroupComparator.class);

//设置排序对比器

job.setSortComparatorClass(ComboKeyComparator.class);

job.setNumReduceTasks(3);//reduce个数,它会根据我们重定义的分区方式进行将数据进行分区,在每个分区上做mapreduce操作,即最终得到的结果在整体上也是有序的。不设置,默认就为1

job.waitForCompletion(true);

}

}4、数据倾斜

解决数据倾斜问题分为两个阶段:

1、自定义分区函数

2、随机分配

4.1 自定义分区函数实现

RandomPartitioner.java

package cn.ctgu.skew;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

import java.util.Random;

/*

*

* 自定义分区函数

* */

public class RandomPartitioner extends Partitioner<Text,IntWritable>{

public int getPartition(Text text, IntWritable intWritable, int numPartitions) {

return new Random().nextInt(numPartitions);

}

}WCSkewMapper.java

package cn.ctgu.skew;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewMapper extends Mapper<LongWritable,Text,Text,IntWritable> {

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] arr=value.toString().split(" ");

Text keyout=new Text();

IntWritable valueOut=new IntWritable();

for(String s:arr){

keyout.set(s);

valueOut.set(1);

context.write(keyout,valueOut);

}

}

}WCSkewReducer.java

package cn.ctgu.skew;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewReducer extends Reducer<Text,IntWritable,Text,IntWritable>{

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable iw:values){

count=count+iw.get();

}

context.write(key,new IntWritable(count));

}

} WCSkewApp.java

package cn.ctgu.skew;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 解决数据倾斜问题

*/

public class WCSkewApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCSkewApp2");//作业名称

job.setJarByClass(WCSkewApp.class);//搜索类

job.setInputFormatClass(TextInputFormat.class);//设置输入格式

//添加输入路径

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew"));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path("J:/Program/file/skew/out"));

//设置分区类

job.setPartitionerClass(RandomPartitioner.class);

job.setMapperClass(WCSkewMapper.class);//mapper类

job.setReducerClass(WCSkewReducer.class);//reduce类

job.setNumReduceTasks(4);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}4.2 随机分配(建立在上面的基础之上的)

WCSkewMapper2.java

package cn.ctgu.skew.Stage2;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewMapper2 extends Mapper<LongWritable,Text,Text,IntWritable> {

public WCSkewMapper2(){

System.out.println("new MaxTempMapper");

}

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] arr=value.toString().split("\t");

context.write(new Text(arr[0]),new IntWritable(Integer.parseInt(arr[1])));

}

}WCSkewReducer2.java

package cn.ctgu.skew.Stage2;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewReducer2 extends Reducer<Text,IntWritable,Text,IntWritable>{

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable iw:values){

count=count+iw.get();

}

context.write(key,new IntWritable(count));

}

} WCSkewApp2.java

package cn.ctgu.skew.Stage2;

import cn.ctgu.skew.RandomPartitioner;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 解决数据倾斜问题:随机分配

*/

public class WCSkewApp2 {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCSkewApp2");//作业名称

job.setJarByClass(WCSkewApp2.class);//搜索类

job.setInputFormatClass(TextInputFormat.class);//设置输入格式

//添加输入路径

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00000"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00001"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00002"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00003"));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path("J:/Program/file/skew/out2"));

job.setMapperClass(WCSkewMapper2.class);//mapper类

job.setReducerClass(WCSkewReducer2.class);//reduce类

job.setNumReduceTasks(4);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}4.3 随机分配的第二种实现方式

WCSkewMapper2.java

package cn.ctgu.skew.stage3;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewMapper2 extends Mapper<Text,Text,Text,IntWritable> {

protected void map(Text key, Text value, Context context) throws IOException, InterruptedException {

context.write(key,new IntWritable(Integer.parseInt(value.toString())));

}

}WCSkewReducer2.java

package cn.ctgu.skew.stage3;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCSkewReducer2 extends Reducer<Text,IntWritable,Text,IntWritable>{

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable iw:values){

count=count+iw.get();

}

context.write(key,new IntWritable(count));

}

} WCSkewApp2.java

package cn.ctgu.skew.stage3;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 解决数据倾斜问题:随机分配

*/

public class WCSkewApp2 {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCSkewApp2");//作业名称

job.setJarByClass(WCSkewApp2.class);//搜索类

job.setInputFormatClass(KeyValueTextInputFormat.class);//设置为KeyValue格式,它会自动按\t符将其切割,输出的格式为Text Text(输入到map中的)

//添加输入路径

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00000"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00001"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00002"));

FileInputFormat.addInputPath(job,new Path("J:/Program/file/skew/out/part-r-00003"));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path("J:/Program/file/skew/out2"));

job.setMapperClass(WCSkewMapper2.class);//mapper类

job.setReducerClass(WCSkewReducer2.class);//reduce类

job.setNumReduceTasks(4);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

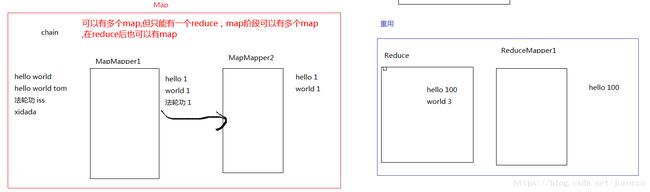

}Map阶段

WCMapMapper1.java

package cn.ctgu.chain;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCMapMapper1 extends Mapper<LongWritable,Text,Text,IntWritable> {

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

Text keyout=new Text();

IntWritable valueOut=new IntWritable();

String[] arr=value.toString().split(" ");

for(String s:arr){

keyout.set(s);

valueOut.set(1);

context.write(keyout,valueOut);

}

}

}WCMapMapper2.java

package cn.ctgu.chain;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

* 过滤掉敏感词汇,过滤falungong

*/

public class WCMapMapper2 extends Mapper<Text,IntWritable,Text,IntWritable> {

protected void map(Text key, IntWritable value, Context context) throws IOException, InterruptedException {

if(!key.toString().equals("falungong")){

context.write(key,value);

}

}

}Reduce阶段

WCReducer.java

package cn.ctgu.chain;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*/

public class WCReducer extends Reducer<Text,IntWritable,Text,IntWritable>{

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable iw:values){

count=count+iw.get();

}

context.write(key,new IntWritable(count));

}

} WCReducerMapper1.java

package cn.ctgu.chain;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/*

*

* 考虑单词个数,过滤掉小于5个单词的

* */

public class WCReduceMapper1 extends Mapper<Text,IntWritable,Text,IntWritable>{

protected void map(Text key, IntWritable value, Context context) throws IOException, InterruptedException {

if(value.get()>5){

context.write(key,value);

}

}

}主函数

WCChainApp.java

package cn.ctgu.chain;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.chain.ChainMapper;

import org.apache.hadoop.mapreduce.lib.chain.ChainReducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

* 链条式job任务

*/

public class WCChainApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCChainApp");//作业名称

job.setJarByClass(WCChainApp.class);//搜索类

job.setInputFormatClass(TextInputFormat.class);//设置输入格式

//添加输入路径

FileInputFormat.addInputPath(job,new Path("J:\\Program\\file\\skew"));

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path("J:\\Program\\file\\skew\\out"));

//在mapper链条上增加mapper1

ChainMapper.addMapper(job,WCMapMapper1.class,LongWritable.class,Text.class,Text.class,IntWritable.class,conf);

//在mapper链条上增加mapper2

ChainMapper.addMapper(job,WCMapMapper2.class,Text.class,IntWritable.class,Text.class,IntWritable.class,conf);

//在reduce链条上设置reduce

ChainReducer.setReducer(job,WCReducer.class,Text.class,IntWritable.class,Text.class,IntWritable.class,conf);

//在reduce链条上设置增加mapper2

ChainMapper.addMapper(job,WCReduceMapper1.class,Text.class,IntWritable.class,Text.class,IntWritable.class,conf);

job.setNumReduceTasks(3);//reduce个数

job.waitForCompletion(true);

}

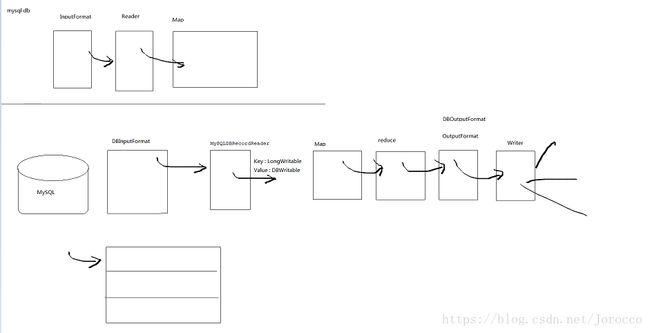

}jdbc的交互

[写操作]

Class.forName("com.mysql.jdbc.Driver");

Connection conn = DriverMananger.getConnection("jdbc:mysql://localhost:3306/big4","root","root");

PreparedStatement ppst = conn.preparedStatement("insert into test(id,name,age) values(?,?,?)");

//绑定参数

ppst.setInteger(1,1);

ppst.setInteger(2,"tom");

ppst.setInteger(3,12);

ppst.executeUpdate();

ppst.close();

conn.close();

[读操作]

Class.forName("com.mysql.jdbc.Driver");

Connection conn = DriverMananger.getConnection("jdbc:mysql://localhost:3306/big4","root","root");

//

ppst = conn.preparedStatement("select id,name from test ");

ResultSet rs = ppst.executeQuery();

while(rs.next()){

int id = rs.getInt("id");

String name = rs.getInt("name");

}

rs.close();

conn.close();使用DBWritable完成同mysql交互

1.准备数据库

create database big4 ;

use big4 ;

create table words(id int primary key auto_increment , name varchar(20) , txt varchar(255));

insert into words(name,txt) values('tomas','hello world tom');

insert into words(txt) values('hello tom world');

insert into words(txt) values('world hello tom');

insert into words(txt) values('world tom hello');

2.编写hadoop MyDBWritable.从mysql中读取数据

MyDBWritable.java

package cn.ctgu.mysql;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.lib.db.DBWritable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

public class MyDBWritable implements DBWritable,Writable{

private int id=0;

private String name="";

private String txt="";

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public String getTxt() {

return txt;

}

public void setTxt(String txt) {

this.txt = txt;

}

//序列化

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeInt(id);

dataOutput.writeUTF(name);

dataOutput.writeUTF(txt);

}

//反序列化

public void readFields(DataInput dataInput) throws IOException {

id=dataInput.readInt();

name=dataInput.readUTF();

txt=dataInput.readUTF();

}

//写入DB

public void write(PreparedStatement preparedStatement) throws SQLException {

preparedStatement.setInt(1,id);

preparedStatement.setString(2,txt);

preparedStatement.setString(3,name);

}

//从DB读取

public void readFields(ResultSet resultSet) throws SQLException {

id=resultSet.getInt(1);

txt=resultSet.getString(2);

name=resultSet.getString(3);

}

}WCMapper.java

package cn.ctgu.mysql;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WCMapper extends Mapper {

@Override

protected void map(LongWritable key, MyDBWritable value, Context context) throws IOException, InterruptedException {

System.out.println(key);

String line=value.getTxt();

System.out.println(value.getId()+","+value.getName()+value.getTxt());

String[]arr=line.split(" ");

for(String s:arr){

context.write(new Text(s),new IntWritable(1));

}

}

} WCReducer.java

package cn.ctgu.mysql;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WCReducer extends Reducer<Text,IntWritable,Text,IntWritable>{

@Override

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable w:values){

count=count+w.get();

}

context.write(key,new IntWritable(count));

}

} WCMySqlApp.java

package cn.ctgu.mysql;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.db.DBConfiguration;

import org.apache.hadoop.mapreduce.lib.db.DBInputFormat;;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**

* Created by Administrator on 2018/6/9.

*

* 读取mysql中的数据

*/

public class WCMySqlApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCMySqlApp");//作业名称

job.setJarByClass(WCMySqlApp.class);//搜索类

//配置数据库信息

String driverclass="com.mysql.jdbc.Driver";

String url="jdbc:mysql://localhost:3306/bigdata";

String username="root";

String password="123456";

DBConfiguration.configureDB(job.getConfiguration(),driverclass,url,username,password);

//设置数据输入内容

DBInputFormat.setInput(job,MyDBWritable.class,"select id,name,txt from words","select count(*) from words");

//设置输出路径

FileOutputFormat.setOutputPath(job,new Path("J:\\Program\\file\\sql"));

job.setMapperClass(WCMapper.class);//mapper类

job.setReducerClass(WCReducer.class);//reduce类

job.setNumReduceTasks(3);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}往mysql中写入数据

MyDBWritable.java

package cn.ctgu.mysql.Write;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.lib.db.DBWritable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

public class MyDBWritable implements DBWritable,Writable{

private int id=0;

private String name="";

private String txt="";

private String word="";

private int wordcount=0;

public String getWord() {

return word;

}

public void setWord(String word) {

this.word = word;

}

public int getWordcount() {

return wordcount;

}

public void setWordcount(int wordcount) {

this.wordcount = wordcount;

}

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public String getTxt() {

return txt;

}

public void setTxt(String txt) {

this.txt = txt;

}

//序列化

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeInt(id);

dataOutput.writeUTF(name);

dataOutput.writeUTF(txt);

dataOutput.writeUTF(word);

dataOutput.writeInt(wordcount);

}

//反序列化

public void readFields(DataInput dataInput) throws IOException {

id=dataInput.readInt();

name=dataInput.readUTF();

txt=dataInput.readUTF();

word=dataInput.readUTF();

wordcount=dataInput.readInt();

}

//写入DB

public void write(PreparedStatement preparedStatement) throws SQLException {

preparedStatement.setString(1,word);

preparedStatement.setInt(2,wordcount);

}

//从DB读取

public void readFields(ResultSet resultSet) throws SQLException {

id=resultSet.getInt(1);

txt=resultSet.getString(2);

name=resultSet.getString(3);

}

}WCMapper.java

package cn.ctgu.mysql.Write;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WCMapper extends Mapper {

@Override

protected void map(LongWritable key, MyDBWritable value, Context context) throws IOException, InterruptedException {

System.out.println(key);

String line=value.getTxt();

System.out.println(value.getId()+","+value.getName()+value.getTxt());

String[]arr=line.split(" ");

for(String s:arr){

context.write(new Text(s),new IntWritable(1));

}

}

} WCReducer.java

package cn.ctgu.mysql.Write;

import cn.ctgu.mysql.*;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WCReducer extends Reducer{

@Override

protected void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException {

int count=0;

for(IntWritable w:values){

count=count+w.get();

}

MyDBWritable keyOut=new MyDBWritable();

keyOut.setWord(key.toString());

keyOut.setWordcount(count);

context.write(keyOut,NullWritable.get());

}

} WCMySqlApp.java

package cn.ctgu.mysql.Write;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.db.DBConfiguration;

import org.apache.hadoop.mapreduce.lib.db.DBInputFormat;

import org.apache.hadoop.mapreduce.lib.db.DBOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

;

/**

* Created by Administrator on 2018/6/9.

*

* 写入mysql中的数据

*/

public class WCMySqlApp {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration conf=new Configuration();

conf.set("fs.defaultFS","file:///");

Job job=Job.getInstance(conf);

//设置job的各种属性

job.setJobName("WCMySqlApp");//作业名称

job.setJarByClass(WCMySqlApp.class);//搜索类

//配置数据库信息

String driverclass="com.mysql.jdbc.Driver";

String url="jdbc:mysql://localhost:3306/bigdata";

String username="root";

String password="123456";

DBConfiguration.configureDB(job.getConfiguration(),driverclass,url,username,password);

//设置数据输入内容

DBInputFormat.setInput(job,MyDBWritable.class,"select id,name,txt from words","select count(*) from words");

//设置输出路径

DBOutputFormat.setOutput(job,"stats","word","c");

job.setMapperClass(WCMapper.class);//mapper类

job.setReducerClass(WCReducer.class);//reduce类

job.setNumReduceTasks(3);//reduce个数

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class);//

job.setOutputValueClass(IntWritable.class);

job.waitForCompletion(true);

}

}若要将程序放到集群上运行,还需往集群上上传一个mysql驱动。

pom.xml增加mysql驱动

<dependency>

<groupId>mysqlgroupId>

<artifactId>mysql-connector-javaartifactId>

<version>5.1.17version>

dependency>