视觉检测——双目相机的标定(简单原理+Opencv实现)

深度相机原理揭秘--双目立体视觉

双目相机空间坐标计算:(good)

https://www.cnblogs.com/zyly/p/9373991.html

深度相机原理:

https://blog.csdn.net/electech6/article/details/78526800

上文的博客总结双目相机和单目相机的区别和原理很好,值得学习。

在这里我总结一下主要是以下几点:

深度相机主要有一下几种:

- 基于TOF(time of flight)飞行时间测距法--发射反射接收

- 基于结构光:结构光成像的硬件:1.相机、2.投射器。 原理:1.投射到被测物体表面 2.由单个或多个相机拍摄表面即得的结构光 3.基于三角测量原理,经图像三维解析计算--》三维重建 常见的仪器:kinect

- 基于双目的立体视觉

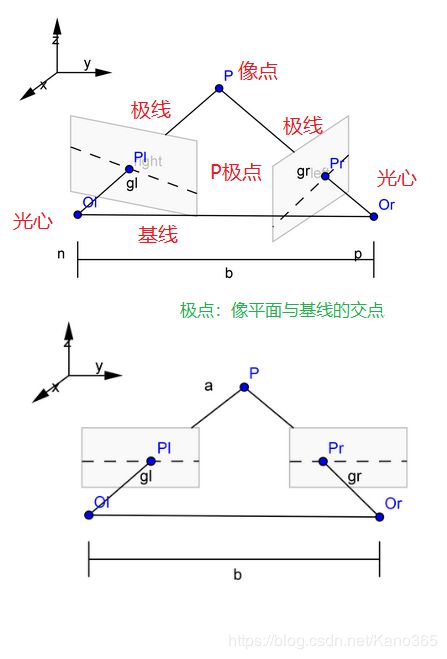

我们主要总结下双目立体视觉:

在单目的视野A情况下,点的远近是看不出的,加上视野B我们就可以看出

而通过相机A、B我们就可以判断三点的远近

在Opencv中我们可以很好的实现双目相机的标定等一系列操作:

- 分别校正左相机和右相机,得到他们的内参和畸变系数

- 找到左右标定图的内角点坐标,加上三维的世界坐标,加上上面的左右相机内参矩阵和畸变系数,求出双目的标定参数矩阵R、T、E、F(旋转矩阵、平移矩阵、本征矩阵、基础矩阵)

- 根据上面的标定结果,对图像进行矫正--------极线约束,矫正后两图像位于同一平面且互相平行

- 对矫正后的两张图像进行像素匹配(有很多算法),获得视差图,计算每个图像的深度,获得深度图

左右棋盘图如下:

https://pan.baidu.com/s/1-U_1v6idEKKvTS1Z6_kXig 提取码:0k3u

在Opencv中的代码如下,有详细的注释:

main.cpp

#include"11.h"

int main()

{

calibrator calib(LEFT_FOLDER, RIGHT_FOLDER, 1.f, 5, 4);

calib.calc_image_points(true); //找到左右标定图的角点坐标

bool done = calib.calibrate(); //双目标定计算,并保存stereo_calib.xml,得到 R, T, E, F

// R– 输出第一和第二相机坐标系之间的旋转矩阵。

// T– 输出第一和第二相机坐标系之间的旋转矩阵平移向量。

// E–输出本征矩阵。

// F–输出基础矩阵。

if (!done)

{

cout << "立体标定失败......." << endl;

}

else

{

cout << "立体标定成功......." << endl;

}

Size image_size(320, 240);

rectifier rec(filename11, image_size);//标定过的摄像机进行校正,stereoRectify(),得到 Rl, Rr, Pl, Pr, Q

//R1– 输出第一个相机的3x3矫正变换(旋转矩阵) .

//R2– 输出第二个相机的3x3矫正变换(旋转矩阵) .

//P1–在第一台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

//P2–在第二台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

//Q–输出深度视差映射矩阵

rec.show_rectified(image_size);

disparity disp(filename11, image_size); //SGBM求视差图,进而求解深度图

disp.show_disparity(image_size);

system("pause");

return 0;

}

11.h

#pragma once

// Program illustrate stereo camera calibration

// Author: Samarth Manoj Brahmbhatt, University of Pennsylvania

#include

#include

#include

#define LEFT_FOLDER "./left/"

#define RIGHT_FOLDER "./right/"

#define DATA_FOLDER "./cam_calib/"

#define filename11 "./cam_calib/stereo_calib.xml"

using namespace cv;

using namespace std;

class calibrator

{

public:

calibrator(string, string, float, int, int); //初始化构造函数

Size get_image_size(); //获取单张图片的大小

void calc_image_points(bool); //通过检测棋盘格角点计算图像点

//得到 l_image_points, r_image_points

bool calibrate(); //双目标定计算

private:

string l_path, r_path;

Mat l_cameraMatrix, l_distCoeffs;

Mat r_cameraMatrix, r_distCoeffs;

vector l_images, r_images;

bool show_chess_corners;

float side_length; //棋盘格的边长

int width, height; //棋盘格的内角点数量

vector > l_image_points, r_image_points;

vector > object_points;

Mat R, T, E, F; //立体相机参数

};

//////////////////////////////////////////////////////////////////////////////

class rectifier //立体摄像机校正

{

public:

rectifier(string, Size); //constructor

void show_rectified(Size); //function to show live rectified feed from stereo camera

private:

Mat map_l1, map_l2, map_r1, map_r2; //pixel maps for rectification

string path;

};

//////////////////////////////////////////////////////////////////////////////

class disparity

{

public:

disparity(string, Size); //constructor

void show_disparity(Size); // show live disparity by processing stereo camera feed

private:

Mat map_l1, map_l2, map_r1, map_r2, Q; // rectification pixel maps

int mindisparity = 0;

int ndisparities = 64;

int SADWindowSize = 11;

cv::Ptr sgbm = cv::StereoSGBM::create(mindisparity, ndisparities, SADWindowSize);

// 用于视差计算的立体匹配对象

Mat framel = imread("L.jpg");

Mat framer = imread("R.jpg");

}; 11.cpp

#include"11.h"

calibrator::calibrator(string _l_path, string _r_path, float _side_length, int _width, int _height)

{

side_length = _side_length;

width = _width;

height = _height;

l_path = _l_path;

r_path = _r_path;

cout << "左相机的棋盘图位置在:" << _l_path << endl;

cout << "右相机的棋盘图位置在:" << _r_path << endl;

// Read images

std::vector image_file_L;

std::vector image_file_R;

glob(l_path, image_file_L); //将目录下的文件读取到容器中

glob(r_path, image_file_R);

for (int i = 0; i < image_file_L.size(); i++)

{

l_images.push_back(imread(image_file_L[i]));

}

for (int ii = 0; ii < image_file_R.size(); ii++)

{

r_images.push_back(imread(image_file_R[ii]));

}

}

void calibrator::calc_image_points(bool show)

{

//计算物体坐标系中的物体点(左上角原点)

//三维点根据棋盘格边长大小、内角点数量初始化

vector ob_p;

for (int i = 0; i < height; i++)

{

for (int j = 0; j < width; j++)

{

ob_p.push_back(Point3f(j * side_length, i * side_length, 0.f));

}

}

if (show)

{

namedWindow("Left Chessboard corners");

namedWindow("Right Chessboard corners");

}

for (int i = 0; i < l_images.size(); i++)

{

Mat lim = l_images[i];

Mat rim = r_images[i];

vector l_im_p;

vector r_im_p; //20个内角点坐标

bool l_pattern_found = findChessboardCorners(lim, Size(width, height), l_im_p, CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE + CALIB_CB_FAST_CHECK);

bool r_pattern_found = findChessboardCorners(rim, Size(width, height), r_im_p, CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE + CALIB_CB_FAST_CHECK);

if (l_pattern_found && r_pattern_found)

{

object_points.push_back(ob_p);

Mat gray;

cvtColor(lim, gray, CV_BGR2GRAY);

cornerSubPix(gray, l_im_p, Size(5, 5), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS + CV_TERMCRIT_ITER, 30, 0.1));

cvtColor(rim, gray, CV_BGR2GRAY);

cornerSubPix(gray, r_im_p, Size(5, 5), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS + CV_TERMCRIT_ITER, 30, 0.1));

l_image_points.push_back(l_im_p); // 存放20个内角点的容器的容器 31(imgs)*20(points)

r_image_points.push_back(r_im_p);

if (show)

{

Mat im_show = lim.clone();

drawChessboardCorners(im_show, Size(width, height), l_im_p, true);

imshow("Left Chessboard corners", im_show);

im_show = rim.clone();

drawChessboardCorners(im_show, Size(width, height), r_im_p, true);

imshow("Right Chessboard corners", im_show);

while (char(waitKey(1)) != '\r')

{

}

}

}

else

{

l_images.erase(l_images.begin() + i);

r_images.erase(r_images.begin() + i);

}

}

}

bool calibrator::calibrate()

{

string filename = DATA_FOLDER + string("left_cam_calib.xml"); //读取左右相机的标定参数

FileStorage fs(filename, FileStorage::READ);

fs["cameraMatrix"] >> l_cameraMatrix;

fs["distCoeffs"] >> l_distCoeffs;

fs.release();

filename = DATA_FOLDER + string("right_cam_calib.xml");

fs.open(filename, FileStorage::READ);

fs["cameraMatrix"] >> r_cameraMatrix;

fs["distCoeffs"] >> r_distCoeffs;

fs.release();

if (!l_cameraMatrix.empty() && !l_distCoeffs.empty() && !r_cameraMatrix.empty() && !r_distCoeffs.empty())

{

double rms = stereoCalibrate(object_points, l_image_points, r_image_points,

l_cameraMatrix, l_distCoeffs, r_cameraMatrix, r_distCoeffs, l_images[0].size(), R, T, E, F);

// R– 输出第一和第二相机坐标系之间的旋转矩阵。

// T– 输出第一和第二相机坐标系之间的旋转矩阵平移向量。

// E-包含了两个摄像头相对位置关系的本征矩阵Essential Matrix(3*3)

//其物理意义是左右图像坐标系相互转换的矩阵

// F-既包含两个摄像头相对位置关系、也包含摄像头各自内参信息的基础矩阵

cout << "校正后的立体相机的均方根误差为:" << rms << endl;

filename = DATA_FOLDER + string("stereo_calib.xml");

fs.open(filename, FileStorage::WRITE);

fs << "l_cameraMatrix" << l_cameraMatrix;

fs << "r_cameraMatrix" << r_cameraMatrix;

fs << "l_distCoeffs" << l_distCoeffs;

fs << "r_distCoeffs" << r_distCoeffs;

fs << "R" << R;

fs << "T" << T;

fs << "E" << E;

fs << "F" << F;

cout << "校准参数保存至:" << filename << endl;

return true;

}

else

{

return false;

}

}

Size calibrator::get_image_size()

{

return l_images[0].size();

}

///////////////////////////////////////////////////////////////////////////////////////////

///////////////////////////////////////////////////////////////////////////////////////////

rectifier::rectifier(string filename, Size image_size)

{

// Read individal camera calibration information from saved XML file

Mat l_cameraMatrix, l_distCoeffs, r_cameraMatrix, r_distCoeffs, R, T;

FileStorage fs(filename, FileStorage::READ);

fs["l_cameraMatrix"] >> l_cameraMatrix;

fs["l_distCoeffs"] >> l_distCoeffs;

fs["r_cameraMatrix"] >> r_cameraMatrix;

fs["r_distCoeffs"] >> r_distCoeffs;

fs["R"] >> R;

fs["T"] >> T;

fs.release();

if (l_cameraMatrix.empty() || r_cameraMatrix.empty() || l_distCoeffs.empty() || r_distCoeffs.empty()

|| R.empty() || T.empty())

{

cout << "Rectifier: Loading of files not successful" << endl;

}

// Calculate transforms for rectifying images

Mat Rl, Rr, Pl, Pr, Q;

stereoRectify(l_cameraMatrix, l_distCoeffs, r_cameraMatrix, r_distCoeffs,

image_size, R, T, Rl, Rr, Pl, Pr, Q);

//R1– 输出第一个相机的3x3矫正变换(旋转矩阵) .

//R2– 输出第二个相机的3x3矫正变换(旋转矩阵) .

//P1–在第一台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

//P2–在第二台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

//Q–输出深度视差映射矩阵

// Calculate pixel maps for efficient rectification of images via lookup tables

initUndistortRectifyMap(l_cameraMatrix, l_distCoeffs, Rl, Pl, image_size, CV_16SC2, map_l1, map_l2);

initUndistortRectifyMap(r_cameraMatrix, r_distCoeffs, Rr, Pr, image_size, CV_16SC2, map_r1, map_r2);

fs.open(filename, FileStorage::APPEND); //追加矫正数据,映射表

fs << "Rl" << Rl;

fs << "Rr" << Rr;

fs << "Pl" << Pl;

fs << "Pr" << Pr;

fs << "Q" << Q;

fs << "map_l1" << map_l1;

fs << "map_l2" << map_l2;

fs << "map_r1" << map_r1;

fs << "map_r2" << map_r2;

fs.release();

}

void rectifier::show_rectified(Size image_size) {

//VideoCapture capr(1), capl(2);

////reduce frame size

//capl.set(CV_CAP_PROP_FRAME_HEIGHT, image_size.height);

//capl.set(CV_CAP_PROP_FRAME_WIDTH, image_size.width);

//capr.set(CV_CAP_PROP_FRAME_HEIGHT, image_size.height);

//capr.set(CV_CAP_PROP_FRAME_WIDTH, image_size.width);

//destroyAllWindows();

//namedWindow("Combo");

//while (char(waitKey(1)) != 'q')

//{

// //grab raw frames first

// capl.grab();

// capr.grab();

// //decode later so the grabbed frames are less apart in time

// Mat framel, framel_rect, framer, framer_rect;

// capl.retrieve(framel);

// capr.retrieve(framer);

// if (framel.empty() || framer.empty())

// break;

// // Remap images by pixel maps to rectify

// remap(framel, framel_rect, map_l1, map_l2, INTER_LINEAR);

// remap(framer, framer_rect, map_r1, map_r2, INTER_LINEAR);

// // Make a larger image containing the left and right rectified images side-by-side

// Mat combo(image_size.height, 2 * image_size.width, CV_8UC3);

// framel_rect.copyTo(combo(Range::all(), Range(0, image_size.width)));

// framer_rect.copyTo(combo(Range::all(), Range(image_size.width, 2 * image_size.width)));

// // Draw horizontal red lines in the combo image to make comparison easier

// for (int y = 0; y < combo.rows; y += 20)

// line(combo, Point(0, y), Point(combo.cols, y), Scalar(0, 0, 255));

// imshow("Combo", combo);

//}

//capl.release();

//capr.release();

Mat framel, framel_rect, framer, framer_rect;

framel = imread("L.jpg");

framer = imread("R.jpg");

if (framel.empty() || framer.empty())

{

cout << "读取需要矫正的图像失败!!" << endl;

}

//Remap images by pixel maps to rectify

remap(framel, framel_rect, map_l1, map_l2, INTER_LINEAR);

remap(framer, framer_rect, map_r1, map_r2, INTER_LINEAR);

//Size imageSize(2048, 1536); //将两幅图像拼接在一起,size.width=2048,size.height=1536

//Mat showImage(1536, 2 * 2048, CV_8UC1);//rows * columns

//Rect rectLeft(0, 0, imageSize.width, imageSize.height);

//Rect rectRight(imageSize.width, 0, imageSize.width, imageSize.height);//创建两个矩阵

//tmpL_img.copyTo(showImage(rectLeft));//把左右矫正后的图片放入showimage对应的左右上

//tmpR_img.copyTo(showImage(rectRight));

Mat combo(image_size.height, 2 * image_size.width, CV_8UC3);

Rect rectLeft(0, 0, image_size.width, image_size.height);

Rect rectRight(image_size.width, 0, image_size.width, image_size.height);//创建两个矩阵

framel_rect.copyTo(combo(rectLeft));

framer_rect.copyTo(combo(rectRight));

// Draw horizontal red lines in the combo image to make comparison easier

for (int y = 0; y < combo.rows; y += 20)

{

line(combo, Point(0, y), Point(combo.cols, y), Scalar(0, 0, 255));

}

imshow("Combo", combo);

imwrite("Combo.jpg", combo);

}

///////////////////////////////////////////////////////////////////////////////////////////

///////////////////////////////////////////////////////////////////////////////////////////

disparity::disparity(string filename, Size image_size)

{

// 读取双目标定参数

FileStorage fs(filename, FileStorage::READ);

fs["map_l1"] >> map_l1;

fs["map_l2"] >> map_l2;

fs["map_r1"] >> map_r1;

fs["map_r2"] >> map_r2;

fs["Q"] >> Q;

if (map_l1.empty() || map_l2.empty() || map_r1.empty() || map_r2.empty())

{

cout << "WARNING: Loading of mapping matrices not successful" << endl;

}

cv::Ptr sgbm = cv::StereoSGBM::create(mindisparity, ndisparities, SADWindowSize);

int P1 = 8 * framel.channels() * SADWindowSize* SADWindowSize;

int P2 = 32 * framel.channels() * SADWindowSize* SADWindowSize;

sgbm->setP1(P1);

sgbm->setP2(P2);

sgbm->setPreFilterCap(15);

sgbm->setUniquenessRatio(10);

sgbm->setSpeckleRange(2);

sgbm->setSpeckleWindowSize(100);

sgbm->setDisp12MaxDiff(1);

//sgbm->setMode(cv::StereoSGBM::MODE_HH);

}

void disparity::show_disparity(Size image_size)

{

Mat disp, disp32F;

Mat framel_rect, framer_rect;

if (framel.empty() || framer.empty())

{

cout << "读取需要矫正的图像失败!!" << endl;

}

//SGBM

remap(framel, framel_rect, map_l1, map_l2, INTER_LINEAR);

remap(framer, framer_rect, map_r1, map_r2, INTER_LINEAR);

sgbm->compute(framel_rect, framer_rect, disp);

disp.convertTo(disp, CV_32F, 1.0 / 16);

Mat disp8U = Mat(disp.rows, disp.cols, CV_8UC1);

normalize(disp, disp8U, 0, 255, NORM_MINMAX, CV_8UC1);

disp.convertTo(disp32F, CV_32F, 1.f / 16.f);

imshow("SGBM视差图", disp8U);

imwrite("SGBM视差图.jpg", disp8U);

Mat pointcloud;

// 从视差图像中计算三维坐标

reprojectImageTo3D(disp8U, pointcloud, Q, true);

//绘制一个红色矩形,大约40像素宽的正方形面积im图像

int xmin = framel.cols / 2 - 20, xmax = framel.cols / 2 + 20, ymin = framel.rows / 2 - 20, ymax = framel.rows / 2 + 20;

rectangle(framel_rect, Point(xmin, ymin), Point(xmax, ymax), Scalar(0, 0, 255));

//提取深度为40px的矩形,并打印出它们的平均值

pointcloud = pointcloud(Range(ymin, ymax), Range(xmin, xmax));

Mat z_roi(pointcloud.size(), CV_32FC1);

int from_to[] = { 2, 0 };

mixChannels(&pointcloud, 1, &z_roi, 1, from_to, 1);

cout << "Depth: " << mean(z_roi) << " mm" << endl;

/*FindStereoCorrespondenceBM

imshow("Left", framel_rect);

imwrite("深度图.jpg", framel_rect);*/

} 最后效果:

双目标定我们得到:

图像去畸变 :

- stereoCalibrate()立体标定

- ----------------------------------------------------------------------

- R– 第一和第二相机坐标系之间的旋转矩阵。

- T– 输出第一和第二相机坐标系之间的平移向量。

- E–本征矩阵。

- F–基础矩阵。

- ----------------------------------------------------------------------

- 立体校正的目的就是,把实际中消除畸变后的非共面行对准的两幅图像,校正成共面行对准。

- stereoRectify()双目矫正 六个参数:cameraMatrix × 2 distCoeffs × 2 R T矩阵 六个参数

- ----------------------------------------------------------------------

- R1– 第一个相机的3x3矫正变换(旋转矩阵)

- R2– 第二个相机的3x3矫正变换(旋转矩阵)

- P1–在第一台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

- P2–在第二台相机的新的坐标系统(矫正过的)输出 3x4 的投影矩阵

- Q–深度视差映射矩阵

- ----------------------------------------------------------------------

initUndistortRectifyMap(l_cameraMatrix, l_distCoeffs, Rl, Pl, image_size, CV_16SC2, map_l1, map_l2);

initUndistortRectifyMap(r_cameraMatrix, r_distCoeffs, Rr, Pr, image_size, CV_16SC2, map_r1, map_r2);得到对应左相机右相机的矫正映射表map_L1、map_L2、map_R1、map_R2