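Boston Housing Price Prediction (Regression)

Background:

This section discusses predicting the median price of homes in Boston suburbs in the 1970s.

Listing 1 Loading the housing data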

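from keras.datasets import boston_housing

(train_data, train_targets), (test_data, test_targets) = boston_housing.load_data()

Analyzing the data:

Inspecting the data shows that we have 404 training samples and 102 test samples. Each sample has 13 numerical features, such as the crime rate, dwelling size, and transportation accessibility. The targets are expressed in thousands of dollars, and most house prices fall between $10,000 and $50,000.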

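Listing 2 Standardizing the data

The features in this dataset take values in very different ranges, and feeding such data directly into a neural network makes learning harder. A widespread remedy is feature-wise standardization: for each input feature (a column of the input data matrix), subtract the feature's mean and divide by its standard deviation, so that the feature is centered on 0 and has a standard deviation of 1.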

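mean = train_data.mean(axis=0)
train_data -= mean
std = train_data.std(axis=0)
train_data /= std

test_data -= mean
test_data /= std

One important point: the mean and standard deviation used here are computed on the training data only. Never use a quantity computed on the test data in your workflow, not even for something as simple as data normalization.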
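As a quick sanity check of the procedure above, here is a minimal NumPy sketch on a made-up matrix. The values and the names `toy_train` and `toy_test` are illustrative assumptions, not data from the dataset. It suggests what to expect: the training columns end up with mean 0 and standard deviation 1, while the test data, scaled with the training statistics, generally do not.

```python
import numpy as np

# Toy data (hypothetical values): two features on very different scales.
toy_train = np.array([[0.1, 200.0],
                      [0.4, 300.0],
                      [0.2, 250.0],
                      [0.9, 450.0]])
toy_test = np.array([[0.3, 280.0],
                     [0.6, 500.0]])

# Statistics come from the training set only.
mean = toy_train.mean(axis=0)
std = toy_train.std(axis=0)

train_scaled = (toy_train - mean) / std
test_scaled = (toy_test - mean) / std  # reuse the training mean and std

print(train_scaled.mean(axis=0))  # approximately 0 for both columns
print(train_scaled.std(axis=0))   # approximately 1 for both columns
print(test_scaled.mean(axis=0))   # generally not 0
```

A nonzero mean on the scaled test data is expected and is not a bug: the test set is treated as unseen data, so it is transformed with whatever statistics the training set produced.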

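Listing 3 Model definition

Because so few samples are available, we use a very small network with two hidden layers of 64 units each. The less training data there is, the worse overfitting tends to be, and a small network is one way to mitigate it.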

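from keras import models
from keras import layers

def build_model():
    # The same model is instantiated once per fold, so build it in a function.
    model = models.Sequential()
    model.add(layers.Dense(64, activation='relu', input_shape=(train_data.shape[1],)))
    model.add(layers.Dense(64, activation='relu'))
    model.add(layers.Dense(1))  # single linear unit for scalar regression
    model.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])
    return model

The network ends with a single unit and no activation function, which makes it a linear layer. This is the typical setup for scalar regression (regression that predicts a single continuous value). Adding an activation function would constrain the output range; a sigmoid, for example, would limit predictions to values between 0 and 1, whereas a purely linear layer can predict values in any range.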
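To make the architecture concrete, the following is a rough NumPy sketch of the forward pass of such a network, with randomly initialized weights rather than trained ones. The helper names `relu` and `forward` and the random inputs are assumptions made for illustration; this is not how Keras implements the layers internally. It also counts the trainable parameters, which shows how small the model is.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 13  # each sample has 13 features

# Randomly initialized weights, for illustration only (not trained values).
W1, b1 = rng.normal(size=(n_features, 64)), np.zeros(64)
W2, b2 = rng.normal(size=(64, 64)), np.zeros(64)
W3, b3 = rng.normal(size=(64, 1)), np.zeros(1)

def relu(x):
    return np.maximum(x, 0.0)

def forward(x):
    h1 = relu(x @ W1 + b1)   # first hidden layer, 64 units
    h2 = relu(h1 @ W2 + b2)  # second hidden layer, 64 units
    return h2 @ W3 + b3      # linear output: no activation, any real value

batch = rng.normal(size=(5, n_features))  # 5 made-up standardized samples
out = forward(batch)
print(out.shape)  # (5, 1)

# Trainable parameters: weights plus biases of each layer.
n_params = sum(w.size + b.size for w, b in [(W1, b1), (W2, b2), (W3, b3)])
print(n_params)  # 5121
```

The count breaks down as 13 * 64 + 64 = 896 for the first layer, 64 * 64 + 64 = 4160 for the second, and 64 + 1 = 65 for the output layer.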

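Note that the network is compiled with the mse loss function, the mean squared error (MSE): the square of the difference between the predictions and the targets, averaged over the samples. It is a widely used loss function for regression problems.

We also monitor a new metric during training: the mean absolute error (MAE), which is the absolute value of the difference between the predictions and the targets, averaged over the samples.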
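The two quantities can be worked out by hand on a tiny example. The sketch below uses made-up targets and predictions (the arrays `y_true` and `y_pred` are assumptions for illustration) and computes both values with plain NumPy rather than through Keras.

```python
import numpy as np

# Made-up targets and predictions, for illustration only.
y_true = np.array([22.0, 30.0, 15.0])
y_pred = np.array([20.0, 33.0, 15.0])

errors = y_pred - y_true       # [-2, 3, 0]
mse = np.mean(errors ** 2)     # (4 + 9 + 0) / 3
mae = np.mean(np.abs(errors))  # (2 + 3 + 0) / 3

print(round(mse, 4))  # 4.3333
print(round(mae, 4))  # 1.6667
```

Because MSE squares each error, the single error of 3 contributes more than twice as much as the error of 2, whereas MAE weights them proportionally and stays in the same unit as the targets.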

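Listing 4 K-fold validation

With so few samples, a single validation split would be very small, and its score would depend heavily on which samples happened to fall into it. K-fold cross-validation addresses this: split the data into K partitions, train K identical models, each on K - 1 partitions, and evaluate each on the remaining one.

import numpy as np

k = 4
num_val_samples = len(train_data) // k
num_epochs = 100
all_scores = []

for i in range(k):
    print('processing fold #', i)
    # Validation data: the samples of partition i
    val_data = train_data[i * num_val_samples: (i + 1) * num_val_samples]
    val_targets = train_targets[i * num_val_samples: (i + 1) * num_val_samples]

    # Training data: the samples of all other partitions
    partial_train_data = np.concatenate(
        [train_data[:i * num_val_samples],
         train_data[(i + 1) * num_val_samples:]],
        axis=0)
    partial_train_targets = np.concatenate(
        [train_targets[:i * num_val_samples],
         train_targets[(i + 1) * num_val_samples:]],
        axis=0)

    model = build_model()  # fresh, already compiled model
    model.fit(partial_train_data, partial_train_targets,
              epochs=num_epochs, batch_size=1, verbose=0)
    val_mse, val_mae = model.evaluate(val_data, val_targets, verbose=0)
    all_scores.append(val_mae)

With num_epochs = 100, the run gives the following result: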

>>>all_scores

[2.079510043163111, 2.125610089538121, 2.9143613801144137, 2.429211360393184]
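A single summary number makes these fold scores easier to compare. As a small worked example, the sketch below copies the four values printed above and computes their mean and the gap between the best and worst fold; the variable names `mean_score` and `spread` are introduced here for illustration.

```python
import numpy as np

# Validation MAE of the four folds, copied from the output above.
all_scores = [2.079510043163111, 2.125610089538121,
              2.9143613801144137, 2.429211360393184]

mean_score = np.mean(all_scores)
spread = max(all_scores) - min(all_scores)

print(round(mean_score, 3))  # 2.387
print(round(spread, 3))      # 0.835
```

The average is a more reliable estimate than any single fold, which is the point of K-fold validation.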

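As the results above show, the validation scores differ considerably from fold to fold (from about 2.1 to 2.9), so we train for longer and set num_epochs = 500. To record how the model performs at each epoch, we modify the training loop to save the per-epoch validation scores.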

import numpy as np

k = 4
num_val_samples = len(train_data) // k
num_epochs = 500
all_mae_histories = []

for i in range(k):
    print('processing fold #', i)
    # Validation data: the samples of partition i
    val_data = train_data[i * num_val_samples: (i + 1) * num_val_samples]
    val_targets = train_targets[i * num_val_samples: (i + 1) * num_val_samples]

    # Training data: the samples of all other partitions
    partial_train_data = np.concatenate(
        [train_data[:i * num_val_samples],
         train_data[(i + 1) * num_val_samples:]],
        axis=0)
    partial_train_targets = np.concatenate(
        [train_targets[:i * num_val_samples],
         train_targets[(i + 1) * num_val_samples:]],
        axis=0)

    model = build_model()
    history = model.fit(partial_train_data, partial_train_targets,
                        validation_data=(val_data, val_targets),
                        epochs=num_epochs, batch_size=1, verbose=0)
    # Older Keras releases store this metric under 'val_mean_absolute_error';
    # newer ones use 'val_mae'. Check history.history.keys() on a KeyError.
    mae_history = history.history['val_mean_absolute_error']
    all_mae_histories.append(mae_history)

Compute the average of the K-fold validation scores for every epoch:

average_mae_history = [
    np.mean([x[i] for x in all_mae_histories]) for i in range(num_epochs)]

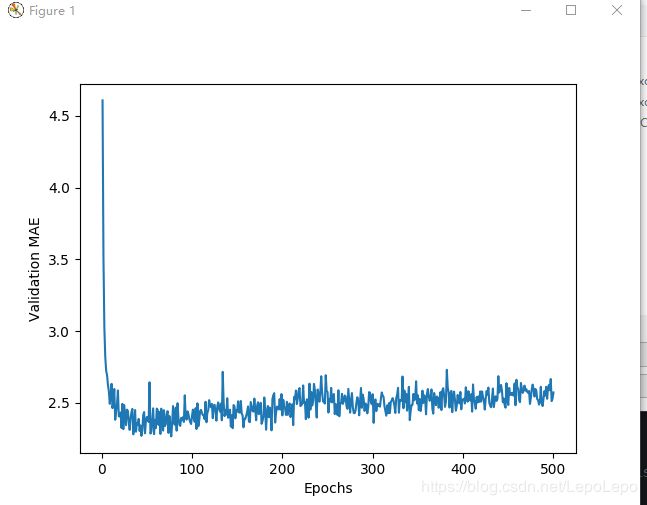
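The per-epoch averaging can also be written as a single array operation. The sketch below is one possible equivalent, shown on a made-up set of histories (`toy_histories` holds hypothetical values for 3 folds and 4 epochs); it assumes every fold was trained for the same number of epochs, so that the histories form a rectangular array.

```python
import numpy as np

# Hypothetical histories: 3 folds x 4 epochs of validation MAE.
toy_histories = [[4.0, 3.0, 2.5, 2.4],
                 [5.0, 3.5, 2.7, 2.6],
                 [4.5, 3.1, 2.9, 2.5]]

# List-comprehension form, averaging epoch by epoch.
avg_loop = [np.mean([h[i] for h in toy_histories]) for i in range(4)]

# Equivalent vectorized form: average over the fold axis.
avg_vec = np.mean(np.array(toy_histories), axis=0)

print(avg_vec)
```

Both forms give one value per epoch; the vectorized one avoids the nested loop.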

Listing 5 Plotting validation scores

import matplotlib.pyplot as plt

plt.plot(range(1, len(average_mae_history) + 1), average_mae_history)
plt.xlabel('Epochs')
plt.ylabel('Validation MAE')
plt.show()

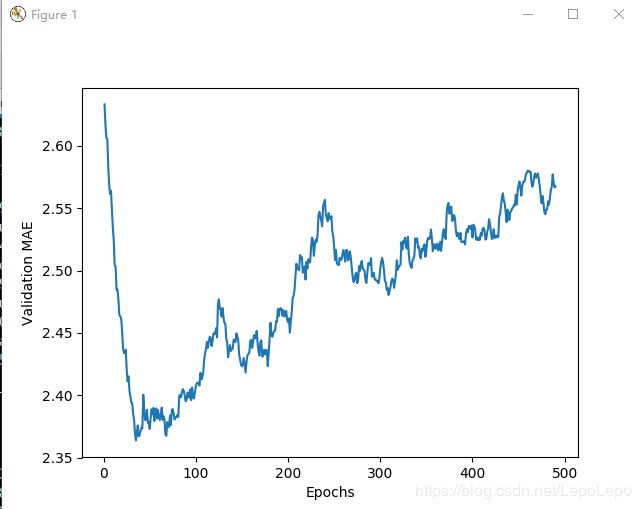

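The vertical axis covers too wide a range and the data is noisy, so the plot is hard to read. We redraw it as follows:

(1) Omit the first ten data points, which are on a different scale from the rest of the curve.

(2) Replace each data point with an exponential moving average of the previous points, to obtain a smooth curve.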

def smooth_curve(points, factor=0.9):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            # Blend the previous smoothed value with the new point
            previous_point = smoothed_points[-1]
            smoothed_points.append(factor * previous_point + (1 - factor) * point)
        else:
            # The first point has nothing to be averaged with
            smoothed_points.append(point)
    return smoothed_points

smooth_mae_history = smooth_curve(average_mae_history[10:])

import matplotlib.pyplot as plt

plt.plot(range(1, len(smooth_mae_history) + 1), smooth_mae_history)
plt.xlabel('Epochs')
plt.ylabel('Validation MAE')
plt.show()

The resulting plot is shown below:

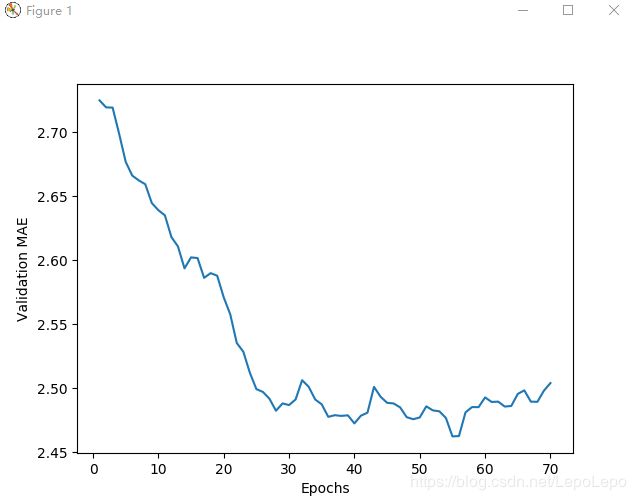
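To see what the smoothing does numerically, here is a small worked example. It repeats the same smoothing function so that the snippet runs on its own, and applies it to a made-up zigzag sequence; `factor=0.5` is chosen only to keep the arithmetic easy to follow, not because it was used for the plot.

```python
def smooth_curve(points, factor=0.9):
    """Exponential moving average:
    s[0] = x[0], s[t] = factor * s[t-1] + (1 - factor) * x[t]."""
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous_point = smoothed_points[-1]
            smoothed_points.append(factor * previous_point + (1 - factor) * point)
        else:
            smoothed_points.append(point)
    return smoothed_points

# Made-up noisy values that jump between 2 and 4.
print(smooth_curve([2.0, 4.0, 2.0, 4.0], factor=0.5))  # [2.0, 3.0, 2.5, 3.25]
```

The jumps of size 2 in the input shrink to 1, 0.5 and 0.75 in the output. A larger factor, such as the default 0.9, damps the noise even more strongly but makes the curve react more slowly to real changes.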

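Listing 6 Training the final model

The plot above shows that the validation MAE stops improving significantly after about 80 epochs, so we set num_epochs to 80. The final results are as follows:

>>> prediction = model.predict(test_data)
>>> print ("The price that predict:", prediction[77][0])
('The price that predict:', 24.3494)
>>> print ("Actual price:", test_targets[77])
('Actual price:', 28.1)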

The final test score:

>>>test_mae_score

2.5160629328559425

So our predicted prices are still off by about $2,500 on average.
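The conversion from score to dollars is simple arithmetic, because the targets in this dataset are expressed in thousands of dollars. The sketch below copies the numbers printed above and converts both the error on test sample 77 and the overall test MAE; the variable names `predicted`, `actual` and `sample_error` are introduced here for illustration.

```python
# Values copied from the output above; targets are in thousands of dollars.
predicted = 24.3494
actual = 28.1
test_mae_score = 2.5160629328559425

sample_error = abs(predicted - actual)  # error for test sample 77
print(round(sample_error * 1000))       # 3751 dollars
print(round(test_mae_score * 1000))     # 2516 dollars
```

The single sample shown happens to be off by more than the average, which is normal: MAE describes the typical error, not a bound on individual errors.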

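Summary

(1) When little training data is available, use a small network with few hidden layers to avoid severe overfitting.

(2) When little data is available, use K-fold validation to evaluate the model reliably.

(3) Regression problems commonly use the mean squared error (MSE) as the loss function and the mean absolute error (MAE) as the evaluation metric.

(4) When the input features have different ranges, preprocess the data first by scaling each feature independently.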
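The fold slicing behind point (2) can be isolated into a small helper, which makes it easy to check that the partitions have the expected sizes and do not overlap. This is only a sketch: `kfold_indices` is a hypothetical helper written for this illustration, not a Keras or NumPy function.

```python
import numpy as np

def kfold_indices(num_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds.

    Each fold has num_samples // k samples, so any remainder
    samples are never used for validation.
    """
    fold_size = num_samples // k
    indices = np.arange(num_samples)
    for i in range(k):
        val_idx = indices[i * fold_size:(i + 1) * fold_size]
        train_idx = np.concatenate([indices[:i * fold_size],
                                    indices[(i + 1) * fold_size:]])
        yield train_idx, val_idx

# 404 training samples and k = 4, as in this section.
for train_idx, val_idx in kfold_indices(404, 4):
    print(len(train_idx), len(val_idx))  # 303 101 for every fold
```

Working with index arrays instead of slicing the data directly means the same split can be applied to both the inputs and the targets.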

Full code

from keras.datasets import boston_housing
from keras import models
from keras import layers
import numpy as np
import matplotlib.pyplot as plt

(train_data, train_targets), (test_data, test_targets) = boston_housing.load_data()

# Standardize each feature using statistics from the training data only
mean = train_data.mean(axis=0)
train_data -= mean
std = train_data.std(axis=0)
train_data /= std
test_data -= mean
test_data /= std

def build_model():
    model = models.Sequential()
    model.add(layers.Dense(64, activation='relu', input_shape=(train_data.shape[1],)))
    model.add(layers.Dense(64, activation='relu'))
    model.add(layers.Dense(1))  # single linear unit for scalar regression
    model.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])
    return model

k = 4
num_val_samples = len(train_data) // k
all_mae_histories = []
num_epochs = 80

for i in range(k):
    print('processing fold #', i)
    val_data = train_data[i * num_val_samples: (i + 1) * num_val_samples]
    val_targets = train_targets[i * num_val_samples: (i + 1) * num_val_samples]
    partial_train_data = np.concatenate(
        [train_data[:i * num_val_samples], train_data[(i + 1) * num_val_samples:]], axis=0)
    partial_train_targets = np.concatenate(
        [train_targets[:i * num_val_samples], train_targets[(i + 1) * num_val_samples:]], axis=0)
    model = build_model()
    history = model.fit(partial_train_data, partial_train_targets,
                        validation_data=(val_data, val_targets),
                        epochs=num_epochs, batch_size=1, verbose=0)
    # Older Keras releases use 'val_mean_absolute_error'; newer ones use 'val_mae'
    mae_history = history.history['val_mean_absolute_error']
    all_mae_histories.append(mae_history)

# Note: `model` here is the model trained on the last fold
test_mse_score, test_mae_score = model.evaluate(test_data, test_targets)
print('test_mae_score is: ', test_mae_score)

average_mae_history = [np.mean([x[i] for x in all_mae_histories]) for i in range(num_epochs)]

def smooth_curve(points, factor=0.9):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous_point = smoothed_points[-1]
            smoothed_points.append(factor * previous_point + (1 - factor) * point)
        else:
            smoothed_points.append(point)
    return smoothed_points

smooth_mae_history = smooth_curve(average_mae_history[10:])

plt.plot(range(1, len(smooth_mae_history) + 1), smooth_mae_history)
plt.xlabel('Epochs')
plt.ylabel('Validation MAE')
plt.show()

prediction = model.predict(test_data)
print("The price that predict:", prediction[77][0])
print("Actual price:", test_targets[77])