Test setup

Write a 2 GB file in batches of 128 MB each (16 batches).

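The test was run on Windows 7 (3 GB RAM, dual-core, 32-bit, T-series CPU) and on macOS (8 GB RAM, quad-core, 64-bit, Core i7 CPU). In principle the results also depend on the disk type and configuration, which are not listed here.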

Test code

```java
package rwbigfile;

import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.lang.reflect.Method;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.FileChannel;
import java.nio.channels.FileChannel.MapMode;
import java.nio.channels.ReadableByteChannel;
import java.security.AccessController;
import java.security.PrivilegedAction;

/**
 * Comparing ways to write a big file with NIO.
 *
 * @author Will
 */
public class WriteBigFileComparison {

    // data chunk written per batch (128 MB)
    private static final int DATA_CHUNK = 128 * 1024 * 1024;

    // total data size (2 GB)
    private static final long LEN = 2L * 1024 * 1024 * 1024;

    public static void writeWithFileChannel() throws IOException {
        File file = new File("e:/test/fc.dat");
        if (file.exists()) {
            file.delete();
        }
        // closing the channel also closes the RandomAccessFile
        try (RandomAccessFile raf = new RandomAccessFile(file, "rw");
             FileChannel fileChannel = raf.getChannel()) {
            long len = LEN;
            ByteBuffer buf = ByteBuffer.allocate(DATA_CHUNK);
            int dataChunk = DATA_CHUNK / (1024 * 1024);
            while (len >= DATA_CHUNK) {
                System.out.println("write a data chunk: " + dataChunk + "MB");
                buf.clear(); // clear for re-write
                byte[] data = new byte[DATA_CHUNK];
                for (int i = 0; i < DATA_CHUNK; i++) {
                    buf.put(data[i]); // byte-by-byte copy; this loop is part of what is timed
                }
                buf.flip(); // switch the buffer from write mode to read mode
                while (buf.hasRemaining()) {
                    fileChannel.write(buf); // a single write() may not drain the buffer
                }
                fileChannel.force(true);
                len -= DATA_CHUNK;
            }
            if (len > 0) {
                System.out.println("write rest data chunk: " + len + "B");
                buf = ByteBuffer.allocateDirect((int) len);
                byte[] data = new byte[(int) len];
                for (int i = 0; i < len; i++) {
                    buf.put(data[i]);
                }
                buf.flip(); // limit = old position, position = 0
                while (buf.hasRemaining()) {
                    fileChannel.write(buf);
                }
                fileChannel.force(true);
            }
        }
    }

    /**
     * Write a big file with MappedByteBuffer.
     *
     * @throws IOException
     */
    public static void writeWithMappedByteBuffer() throws IOException {
        File file = new File("e:/test/mb.dat");
        if (file.exists()) {
            file.delete();
        }
        try (RandomAccessFile raf = new RandomAccessFile(file, "rw");
             FileChannel fileChannel = raf.getChannel()) {
            long pos = 0; // must be long: an int offset overflows at 2 GB
            MappedByteBuffer mbb = null;
            long len = LEN;
            int dataChunk = DATA_CHUNK / (1024 * 1024);
            while (len >= DATA_CHUNK) {
                System.out.println("write a data chunk: " + dataChunk + "MB");
                mbb = fileChannel.map(MapMode.READ_WRITE, pos, DATA_CHUNK);
                mbb.put(new byte[DATA_CHUNK]);
                len -= DATA_CHUNK;
                pos += DATA_CHUNK;
            }
            if (len > 0) {
                System.out.println("write rest data chunk: " + len + "B");
                mbb = fileChannel.map(MapMode.READ_WRITE, pos, len);
                mbb.put(new byte[(int) len]);
            }
            // Only the last mapping is forced and released explicitly; earlier
            // mappings are unmapped whenever the garbage collector reclaims them.
            unmap(mbb);
        }
    }

    // Despite its name, this method writes with FileChannel.transferFrom().
    public static void writeWithTransferTo() throws IOException {
        File file = new File("e:/test/transfer.dat");
        if (file.exists()) {
            file.delete();
        }
        try (RandomAccessFile raf = new RandomAccessFile(file, "rw");
             FileChannel toFileChannel = raf.getChannel()) {
            long len = LEN;
            long position = 0;
            int dataChunk = DATA_CHUNK / (1024 * 1024);
            while (len >= DATA_CHUNK) {
                System.out.println("write a data chunk: " + dataChunk + "MB");
                transferFully(toFileChannel, new byte[DATA_CHUNK], position);
                position += DATA_CHUNK;
                len -= DATA_CHUNK;
            }
            if (len > 0) {
                System.out.println("write rest data chunk: " + len + "B");
                transferFully(toFileChannel, new byte[(int) len], position);
            }
        }
    }

    // transferFrom() may move fewer bytes than requested, so repeat until all of data is written.
    private static void transferFully(FileChannel to, byte[] data, long position) throws IOException {
        try (ReadableByteChannel from = Channels.newChannel(new ByteArrayInputStream(data))) {
            long done = 0;
            while (done < data.length) {
                long n = to.transferFrom(from, position + done, data.length - done);
                if (n <= 0) {
                    throw new IOException("transferFrom made no progress at " + (position + done));
                }
                done += n;
            }
        }
    }

    /**
     * Reading a MappedByteBuffer after it has been released can crash the JVM. Under
     * concurrency this happens easily: one thread starts reading while another is
     * releasing the buffer. For stability, check before releasing that no thread is
     * still reading or writing it.
     *
     * @param mappedByteBuffer the buffer to flush and unmap
     */
    public static void unmap(final MappedByteBuffer mappedByteBuffer) {
        if (mappedByteBuffer == null) {
            return;
        }
        mappedByteBuffer.force();
        AccessController.doPrivileged(new PrivilegedAction<Void>() {
            @Override
            public Void run() {
                try {
                    // Reflectively calls the JDK-internal DirectByteBuffer.cleaner().clean().
                    // This works on JDK 8; JDK 9 and later refuse the reflective access, in
                    // which case the exception is printed and the mapping is left to the GC.
                    Method getCleanerMethod = mappedByteBuffer.getClass().getMethod("cleaner");
                    getCleanerMethod.setAccessible(true);
                    Object cleaner = getCleanerMethod.invoke(mappedByteBuffer);
                    Method cleanMethod = cleaner.getClass().getMethod("clean");
                    cleanMethod.setAccessible(true);
                    cleanMethod.invoke(cleaner);
                } catch (Exception e) {
                    e.printStackTrace();
                }
                return null;
            }
        });
    }

    // Hypothetical timing harness: the util.StopWatch helper the article imports is not
    // shown, so System.nanoTime() stands in for it here.
    public static void main(String[] args) throws IOException {
        long t0 = System.nanoTime();
        writeWithFileChannel();
        long t1 = System.nanoTime();
        writeWithMappedByteBuffer();
        long t2 = System.nanoTime();
        writeWithTransferTo();
        long t3 = System.nanoTime();
        System.out.printf("writeWithFileChannel: %.1f s%n", (t1 - t0) / 1e9);
        System.out.printf("writeWithMappedByteBuffer: %.1f s%n", (t2 - t1) / 1e9);
        System.out.printf("writeWithTransferTo: %.1f s%n", (t3 - t2) / 1e9);
    }
}
```
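The comment on unmap() warns that touching a mapping after it has been released can crash the JVM, so a release must wait until no other thread is using the buffer. One simple way to enforce that is to route every access and the release through one lock. The sketch below assumes a hypothetical wrapper class named GuardedMapping; to stay free of internal JDK APIs, its release() only forces and drops the reference and leaves the actual unmapping to the garbage collector.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical wrapper: get(), put() and release() share one monitor,
// so release() can never run while another thread is inside get() or put().
class GuardedMapping {
    private MappedByteBuffer buffer; // null once released

    private GuardedMapping(MappedByteBuffer buffer) {
        this.buffer = buffer;
    }

    // Maps the first `size` bytes of `file` read-write (the file grows if it is shorter).
    static GuardedMapping map(Path file, int size) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // A mapping stays valid after the channel that created it is closed.
            return new GuardedMapping(ch.map(FileChannel.MapMode.READ_WRITE, 0, size));
        }
    }

    synchronized byte get(int index) {
        return live().get(index);
    }

    synchronized void put(int index, byte value) {
        live().put(index, value);
    }

    // Flushes and drops the mapping; later get()/put() calls fail fast instead of crashing.
    synchronized void release() {
        if (buffer != null) {
            buffer.force();
            buffer = null;
        }
    }

    private MappedByteBuffer live() {
        if (buffer == null) {
            throw new IllegalStateException("mapping already released");
        }
        return buffer;
    }
}
```

A single lock serializes readers too; a read-write lock would let readers overlap, but ByteBuffer itself is not documented as safe for concurrent use, so the simpler lock is the conservative choice here.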

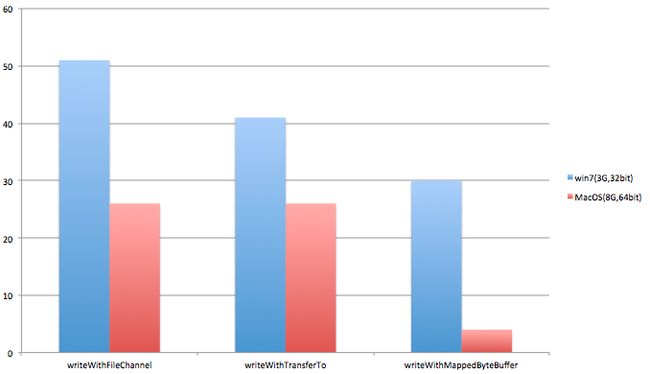

Test results (Y axis: elapsed time in seconds)

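- writeWithMappedByteBuffer was clearly the fastest, and its lead was larger on the higher-spec machine (macOS).

- On the lower-spec machine (Windows 7), writeWithTransferTo was slightly faster than writeWithFileChannel.

- On the higher-spec machine, writeWithTransferTo and writeWithFileChannel performed about the same.

- Also note that writeWithMappedByteBuffer uses native (off-heap) memory for the mapped region in addition to JVM heap memory.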
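When reading these numbers, keep in mind that writeWithFileChannel copies every 128 MB chunk into its ByteBuffer one byte at a time and calls force(true) after each chunk, so part of its time is likely spent outside the actual write. A sketch of the same FileChannel approach with a single bulk put() per chunk, a reused direct buffer, and one force at the end is shown below; the class and method names are hypothetical, and no timings are claimed for it.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class BulkChannelWriter {
    // Writes totalBytes zero bytes to file in pieces of chunkBytes; returns the bytes written.
    static long write(Path file, long totalBytes, int chunkBytes) throws IOException {
        byte[] chunk = new byte[chunkBytes];                    // source data, allocated once
        ByteBuffer buf = ByteBuffer.allocateDirect(chunkBytes); // reused for every chunk
        long written = 0;
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.TRUNCATE_EXISTING, StandardOpenOption.WRITE)) {
            while (written < totalBytes) {
                int n = (int) Math.min(chunkBytes, totalBytes - written);
                buf.clear();
                buf.put(chunk, 0, n); // one bulk copy instead of n single-byte put() calls
                buf.flip();
                while (buf.hasRemaining()) {
                    written += ch.write(buf); // write() may write fewer bytes than requested
                }
            }
            ch.force(true); // flush once at the end rather than after every chunk
        }
        return written;
    }
}
```

Forcing only once at the end changes the durability guarantee compared with the benchmark: a crash midway can lose more data, in exchange for fewer synchronous flushes.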

Tips for using memory-mapped files

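Writing through a MappedByteBuffer takes "double" memory: JVM heap memory (the source byte[] and the buffer object) plus the mapped region, which lives outside the heap like a direct ByteBuffer. Note that -XX:MaxDirectMemorySize caps only buffers created with ByteBuffer.allocateDirect(); it does not limit memory-mapped regions, which are backed by the OS page cache.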
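In writeWithMappedByteBuffer, most of the heap share of that cost is the new byte[DATA_CHUNK] (128 MB) allocated for every chunk before it is copied into the mapping. If the data can be produced piece by piece, it can go into the mapping through a small reusable array instead. A sketch, assuming a hypothetical class name and a made-up fill pattern (each byte holds the low 8 bits of its file offset); the mapped pages themselves still occupy off-heap memory until the OS writes them back.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MappedChunkWriter {
    // Writes totalBytes into file through mappings of at most mapBytes each,
    // copying from one small scratch array instead of one large array per mapping.
    static void write(Path file, long totalBytes, int mapBytes, int scratchBytes) throws IOException {
        byte[] scratch = new byte[scratchBytes];
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            long pos = 0; // long, so offsets past 2 GB do not overflow
            while (pos < totalBytes) {
                int size = (int) Math.min(mapBytes, totalBytes - pos);
                MappedByteBuffer mbb = ch.map(FileChannel.MapMode.READ_WRITE, pos, size);
                while (mbb.hasRemaining()) {
                    int n = Math.min(scratch.length, mbb.remaining());
                    // Hypothetical data source: the low byte of each file offset.
                    for (int i = 0; i < n; i++) {
                        scratch[i] = (byte) (pos + mbb.position() + i);
                    }
                    mbb.put(scratch, 0, n);
                }
                pos += size;
            }
        }
    }
}
```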

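Do not call MappedByteBuffer.force() too often. It forces the OS to write the in-memory data to disk, and calling it frequently leaves only a slight performance gain over plain I/O. Flush periodically or after a certain amount of data instead, as the MappedFile example later in this article does.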
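The MappedFile class later in the article checks its time and size thresholds only inside appendData(), so data written just before a pause stays unflushed until the next append. A background timer is one way to bound the time limit independently of writes. This is a sketch under assumptions: the class name PeriodicFlusher is hypothetical, appends and flushes are serialized with one lock, and close() must be called, since the timer thread otherwise keeps the JVM alive.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class PeriodicFlusher implements AutoCloseable {
    private final MappedByteBuffer buffer;
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
    private boolean dirty; // guarded by this

    PeriodicFlusher(Path file, int size, long periodMillis) throws IOException {
        buffer = mapFile(file, size);
        // Flush at most once per period, and only if something was appended since the last flush.
        timer.scheduleAtFixedRate(this::flushIfDirty, periodMillis, periodMillis, TimeUnit.MILLISECONDS);
    }

    private static MappedByteBuffer mapFile(Path file, int size) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            return ch.map(FileChannel.MapMode.READ_WRITE, 0, size);
        }
    }

    synchronized void append(byte[] data) {
        buffer.put(data); // throws BufferOverflowException once the mapping is full
        dirty = true;
    }

    synchronized void flushIfDirty() {
        if (dirty) {
            buffer.force();
            dirty = false;
        }
    }

    synchronized boolean isDirty() {
        return dirty;
    }

    @Override
    public void close() {
        timer.shutdown(); // cancels future periodic runs
        flushIfDirty();   // final flush so nothing appended before close() stays unflushed
    }
}
```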

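If the power fails or the server goes down suddenly, data in a memory-mapped file may not have reached the disk yet and can be lost. To reduce this risk, avoid writing one very large file through MappedByteBuffer; split it into several smaller files instead, though not too small, or the performance advantage is lost.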
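A sketch of the splitting idea: each segment gets its own file, its own mapping and its own force(), so a crash while writing should lose at most the segment in progress, because earlier segments were already forced. The naming scheme prefix-N.dat, the segment size, and holding the whole input in one byte[] are assumptions made to keep the example short.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

class SegmentedWriter {
    // Splits data across files prefix-0.dat, prefix-1.dat, ... of at most segmentBytes each,
    // mapping and forcing one segment at a time. Returns the files in order.
    static List<Path> write(Path dir, String prefix, byte[] data, int segmentBytes) throws IOException {
        List<Path> files = new ArrayList<>();
        int index = 0;
        for (long offset = 0; offset < data.length; offset += segmentBytes) {
            int size = (int) Math.min(segmentBytes, data.length - offset);
            Path file = dir.resolve(prefix + "-" + index++ + ".dat");
            try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                    StandardOpenOption.TRUNCATE_EXISTING,
                    StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                MappedByteBuffer mbb = ch.map(FileChannel.MapMode.READ_WRITE, 0, size);
                mbb.put(data, (int) offset, size);
                mbb.force(); // this segment is on disk before the next one starts
            }
            files.add(file);
        }
        return files;
    }
}
```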

ByteBuffer.rewind() sets position back to 0, so the data in the buffer can be read again; limit is left unchanged, so the number of readable bytes stays the same.
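A small demo of rewind(), with a made-up class name: the same three bytes are read twice, and limit stays at 3 throughout.

```java
import java.nio.ByteBuffer;

class RewindDemo {
    // Returns {sum of first read, sum of second read, limit after rewind}.
    static int[] readTwice() {
        ByteBuffer buf = ByteBuffer.allocate(8);
        buf.put(new byte[] {10, 20, 30});
        buf.flip();                    // position 0, limit 3
        int first = 0;
        while (buf.hasRemaining()) {
            first += buf.get();        // consumes all 3 bytes
        }
        buf.rewind();                  // position back to 0, limit still 3
        int second = 0;
        while (buf.hasRemaining()) {
            second += buf.get();       // the same 3 bytes again
        }
        return new int[] {first, second, buf.limit()};
    }
}
```

RewindDemo.readTwice() returns {60, 60, 3}.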

ByteBuffer.flip() switches a buffer from write mode to read mode: it sets limit to the current position and then sets position to 0.
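A small demo of flip(), with a made-up class name, recording position and limit before and after the call.

```java
import java.nio.ByteBuffer;

class FlipDemo {
    // Returns {position, limit} before flip() followed by {position, limit} after it.
    static int[] positionsAroundFlip() {
        ByteBuffer buf = ByteBuffer.allocate(16); // write mode: position 0, limit 16
        buf.put(new byte[] {1, 2, 3, 4, 5});      // position advances to 5
        int posBefore = buf.position();
        int limitBefore = buf.limit();
        buf.flip();                               // limit = 5 (old position), position = 0
        return new int[] {posBefore, limitBefore, buf.position(), buf.limit()};
    }
}
```

FlipDemo.positionsAroundFlip() returns {5, 16, 0, 5}: after flip(), exactly the five written bytes are readable.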

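ByteBuffer.clear() and compact() switch a buffer back to write mode after its data has been read. They differ as follows. clear() sets position to 0 and limit to capacity: the buffer is logically emptied, but the bytes in it are not erased. Any data that had not been read yet is "forgotten" and will be overwritten by subsequent writes. compact() keeps the unread data: it copies all unread bytes to the start of the buffer, sets position just past them, and sets limit to capacity, so the unread data is not overwritten.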
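A small demo contrasting the two, with a made-up class name: the buffer holds 1..6, two bytes are read, then two new bytes (9, 9) are written after either clear() or compact(), and the readable contents are returned.

```java
import java.nio.ByteBuffer;

class ClearVsCompactDemo {
    // Buffer holding 1..6 in read mode with 1 and 2 already consumed.
    private static ByteBuffer halfRead() {
        ByteBuffer buf = ByteBuffer.allocate(8);
        buf.put(new byte[] {1, 2, 3, 4, 5, 6});
        buf.flip();
        buf.get();
        buf.get(); // 3..6 are still unread
        return buf;
    }

    static byte[] afterClear() {
        ByteBuffer buf = halfRead();
        buf.clear();                // position 0, limit 8; unread 3..6 are "forgotten"
        buf.put(new byte[] {9, 9}); // lands at index 0..1
        buf.flip();
        return remaining(buf);
    }

    static byte[] afterCompact() {
        ByteBuffer buf = halfRead();
        buf.compact();              // 3..6 copied to index 0..3, position 4, limit 8
        buf.put(new byte[] {9, 9}); // appended after the unread bytes
        buf.flip();
        return remaining(buf);
    }

    private static byte[] remaining(ByteBuffer buf) {
        byte[] out = new byte[buf.remaining()];
        buf.get(out);
        return out;
    }
}
```

afterClear() returns {9, 9}, while afterCompact() returns {3, 4, 5, 6, 9, 9}.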

Flushing a memory-mapped file to disk by time and by data size

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;

public class MappedFile {

    // file name
    private String fileName;

    // path of the directory that holds the file
    private String fileDirPath;

    // file object
    private File file;

    private MappedByteBuffer mappedByteBuffer;

    private FileChannel fileChannel;

    private boolean boundSuccess = false;

    // the file may be at most 50 MB
    private final static long MAX_FILE_SIZE = 1024 * 1024 * 50;

    // once 512 KB of unflushed (dirty) data has accumulated, a flush is forced
    private final long MAX_FLUSH_DATA_SIZE = 1024 * 512;

    // once this many milliseconds have passed since the last flush, a flush is forced
    private final long MAX_FLUSH_TIME_GAP = 1000;

    // position at which the next data is written
    private long writePosition = 0;

    // time of the last flush
    private long lastFlushTime = System.currentTimeMillis();

    // file position reached at the last flush
    private long lastFlushFilePosition = 0;

    public MappedFile(String fileName, String fileDirPath) {
        this.fileName = fileName;
        this.fileDirPath = fileDirPath;
        this.file = new File(fileDirPath, fileName);
        if (!file.exists()) {
            try {
                file.createNewFile();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Bind the file to a memory mapping.
     *
     * @return true if the mapping was created
     */
    public synchronized boolean boundChannelToByteBuffer() {
        RandomAccessFile raf = null;
        try {
            raf = new RandomAccessFile(file, "rw");
            this.fileChannel = raf.getChannel();
            this.mappedByteBuffer = this.fileChannel
                    .map(FileChannel.MapMode.READ_WRITE, 0, MAX_FILE_SIZE);
        } catch (IOException e) {
            e.printStackTrace();
            if (raf != null) {
                try {
                    raf.close(); // do not leak the file handle when mapping fails
                } catch (IOException ignored) {
                    // nothing more to do
                }
            }
            this.boundSuccess = false;
            return false;
        }
        this.boundSuccess = true;
        return true;
    }

    /**
     * Write data: delete the previous file first, then write it anew.
     * Not implemented; always returns false.
     */
    public synchronized boolean writeData(byte[] data) {
        return false;
    }

    /**
     * Append data at the end of the file.
     *
     * @return false if the file cannot be mapped or the data would exceed MAX_FILE_SIZE
     */
    public synchronized boolean appendData(byte[] data) {
        if (!boundSuccess && !boundChannelToByteBuffer()) {
            return false; // without a mapping there is nowhere to write
        }
        if (writePosition + data.length > MAX_FILE_SIZE) { // data would not fit: do not write it
            flush();
            System.out.println("File=" + file.toURI() + " is written full.");
            System.out.println("already write data length:" + writePosition
                    + ", max file size=" + MAX_FILE_SIZE);
            return false;
        }
        this.mappedByteBuffer.put(data);
        writePosition += data.length;
        // flush to disk when too much dirty data has accumulated,
        // or when the time limit has passed and there is unflushed data
        if ((writePosition - lastFlushFilePosition > MAX_FLUSH_DATA_SIZE)
                || (System.currentTimeMillis() - lastFlushTime > MAX_FLUSH_TIME_GAP
                        && writePosition > lastFlushFilePosition)) {
            flush();
        }
        return true;
    }

    public synchronized void flush() {
        if (!boundSuccess) {
            return; // nothing has been mapped yet
        }
        this.mappedByteBuffer.force();
        this.lastFlushTime = System.currentTimeMillis();
        this.lastFlushFilePosition = writePosition;
    }

    public long getLastFlushTime() {
        return lastFlushTime;
    }

    public String getFileName() {
        return fileName;
    }

    public String getFileDirPath() {
        return fileDirPath;
    }

    public boolean isBundSuccess() {
        return boundSuccess;
    }

    public File getFile() {
        return file;
    }

    public static long getMaxFileSize() {
        return MAX_FILE_SIZE;
    }

    public long getWritePosition() {
        return writePosition;
    }

    public long getLastFlushFilePosition() {
        return lastFlushFilePosition;
    }

    public long getMAX_FLUSH_DATA_SIZE() {
        return MAX_FLUSH_DATA_SIZE;
    }

    public long getMAX_FLUSH_TIME_GAP() {
        return MAX_FLUSH_TIME_GAP;
    }
}
```

That is all for this article. I hope it helps with your learning, and thank you for supporting 脚本之家.