insightface dataset preparation: a complete walkthrough (updated)


This post summarizes how to build a dataset for insightface. We use the LFW and CFP datasets as examples; you can also use your own data.

1 Data alignment

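Before this step you should have your data ready, whether scraped from the web or collected some other way. Here we use the official LFW dataset. Your data directory should look like this: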

```
class1/
    pic1.jpg
    pic2.jpg
    pic3.jpg
class2/
    pic1.jpg
    pic2.jpg
```

LFW dataset homepage:

http://vis-www.cs.umass.edu/lfw/

I downloaded the original (unaligned) images from: https://drive.google.com/file/d/1p1wjaqpTh_5RHfJu4vUh8JJCdKwYMHCp/view?usp=sharing

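Once you have the dataset: since the task here is face recognition, we simply run an existing model for face detection and alignment.

Put the raw data under datasets/lfwdata, with one subfolder per class, i.e. one folder per person containing that person's images.

Go to src/align, which provides face alignment code (MTCNN for detection plus alignment), and run align_lfw.py. It takes two arguments: --input-dir is the raw data folder and --output-dir is where the aligned images will be stored.

```bash
python3 align_lfw.py --input-dir ../../datasets/lfwdata --output-dir ../../datasets/lfw2
```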

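Appendix: errors encountered when running ./src/align/align_lfw.py

1> tf: TensorFlow is required:

```bash
pip3 install tensorflow-gpu -i https://pypi.douban.com/simple --user
```

2> Question: ValueError: Object arrays cannot be loaded when allow_pickle=False

Answer: downgrade NumPy (from NumPy 1.16.3 on, np.load defaults to allow_pickle=False):

```bash
pip3 install numpy==1.16.1 -i https://pypi.douban.com/simple --user
```

3> Question: AttributeError: module 'scipy.misc' has no attribute 'imread'

Answer: downgrade SciPy (scipy.misc.imread was removed in SciPy 1.2.0):

```bash
pip3 install scipy==1.1.0 -i https://pypi.douban.com/simple --user
```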
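For error 2, an alternative to downgrading NumPy is to pass allow_pickle=True to the np.load call that raises the error. A minimal sketch of that behaviour, using a made-up dict saved as a pickled .npy file (only do this for files you trust, since unpickling can execute code):

```python
import os
import tempfile

import numpy as np

# Made-up weights: a dict stored inside a .npy file as a pickled 0-d object array
weights = {"conv1": {"weights": np.zeros((3, 3), dtype=np.float32)}}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "det_demo.npy")
    np.save(path, weights, allow_pickle=True)

    # np.load(path) alone raises ValueError on NumPy >= 1.16.3,
    # because allow_pickle now defaults to False.
    loaded = np.load(path, allow_pickle=True).item()

print(sorted(loaded.keys()))
```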

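PS: The following covers what is different for CFP-FP. If you are building your own dataset or using LFW, you can skip it.

Note: alignment for cfp-fp differs from LFW because the directory hierarchy is different. Under the cfp-fp data directory, each person's folder is further split into frontal and profile faces, so the traversal has to go two levels deep. We therefore wrote a separate align_cfp.py.

Copy align_lfw.py to align_cfp.py, then make the following changes in its main function:

1. Switch the dataset traversal to 'ytf' (see its definition in face_image):

```python
dataset = face_image.get_dataset('ytf', args.input_dir)
print('dataset size', 'lfw', len(dataset))
```

2. Around line 113, change how the output path for the saved image is built:

```python
img = img[:,:,0:3]
_paths = fimage.image_path.split('/')
# three path levels: person / pose / file name
a,b,c = _paths[-3], _paths[-2],_paths[-1]
```

This way the two-level traversal finds all the images.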

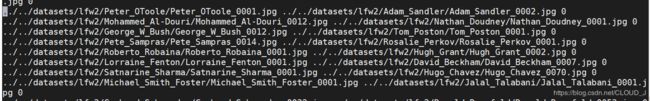
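A minimal standalone sketch of the two-level traversal and the three-component output path described above. list_two_level_images and target_path are hypothetical helpers, not functions from insightface; the person/pose/image layout (e.g. 001/frontal/01.jpg) is assumed for illustration:

```python
import os
import tempfile


def list_two_level_images(input_dir):
    """Hypothetical helper: collect images laid out as person/pose/image."""
    paths = []
    for person in sorted(os.listdir(input_dir)):
        person_dir = os.path.join(input_dir, person)
        if not os.path.isdir(person_dir):
            continue
        for pose in sorted(os.listdir(person_dir)):
            pose_dir = os.path.join(person_dir, pose)
            if not os.path.isdir(pose_dir):
                continue
            for name in sorted(os.listdir(pose_dir)):
                paths.append(os.path.join(pose_dir, name))
    return paths


def target_path(image_path, output_dir):
    """Keep the last three components (a, b, c) so person/pose/file survive alignment."""
    parts = os.path.normpath(image_path).split(os.sep)
    a, b, c = parts[-3], parts[-2], parts[-1]
    return os.path.join(output_dir, a, b, c)


with tempfile.TemporaryDirectory() as root:
    # Build a tiny fake dataset: 2 people x 2 poses x 1 image
    for person in ("001", "002"):
        for pose in ("frontal", "profile"):
            os.makedirs(os.path.join(root, person, pose))
            open(os.path.join(root, person, pose, "01.jpg"), "wb").close()
    found = list_two_level_images(root)
    mapped = [target_path(p, "aligned") for p in found]

print(len(found))
print(mapped[0])
```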

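2 Generate the list file

We use a script to generate the list; its code is in Appendix 2 (saved as generatelst.py).

../../datasets/lfw/train.lst is the output path, where train.lst is the name of the list. (We use lfw as the directory that will hold the final lst, rec and related files.)

../../datasets/lfw2 is the directory of aligned images.

```bash
python3 generatelst.py --dataset-dir ../../datasets/lfw2 --list-file ../../datasets/lfw/train.lst --img-ext '.jpg'
```

Note: each line of the generated lst has the following format:

```
1 path/Adam_Brody/Adam_Brody_277.png 25
```

Here 1 means the image is already aligned, 25 is the class label, and the field in between is the image path. The fields must be separated by tab characters (\t), not by spaces.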
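A small sketch for building and checking lines in this format before running face2rec2.py. make_lst_line and check_lst_line are hypothetical helpers, and the checker only verifies tab separation and integer fields:

```python
def make_lst_line(image_path, label, aligned=1):
    """Build one lst line: aligned flag, image path, class label, tab-separated."""
    return "%d\t%s\t%d\n" % (aligned, image_path, label)


def check_lst_line(line):
    """Hypothetical validator: split on tabs and return (aligned, path, label)."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) < 3:
        raise ValueError("expected tab-separated fields, got: %r" % line)
    return int(fields[0]), fields[1], int(fields[2])


good = make_lst_line("path/Adam_Brody/Adam_Brody_277.png", 25)
print(check_lst_line(good))

# A space-separated line is rejected, because it does not split on tabs
try:
    check_lst_line("1 path/Adam_Brody/Adam_Brody_277.png 25\n")
    space_line_rejected = False
except ValueError:
    space_line_rejected = True
print("space-separated line rejected:", space_line_rejected)
```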

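3 Generate the rec & idx files (from the list)

Once the lst file exists, generate the rec and idx files directly with face2rec2.py. The script has some problems under Python 3, so one option is to run it with Python 2:

```bash
python face2rec2.py ../../datasets/lfw/
```

Alternatively, modify face2rec2.py: in the image_encode function, change the empty payload passed to pack from '' to b'' (i.e. add a b prefix):

```python
header = mx.recordio.IRHeader(item.flag, item.label, item.id, 0)
#print('write', item.flag, item.id, item.label)
s = mx.recordio.pack(header, b'')  # was '' (str); Python 3 needs bytes here
q_out.put((i, s, oitem))
```

After that it runs under Python 3:

```bash
python3 face2rec2.py ../../datasets/lfw/
```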
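Why the b prefix matters: in Python 3, bytes and str cannot be concatenated, so appending a str payload to a packed binary header raises TypeError. The sketch below reproduces this with the standard struct module; pack_record and the 'IfQQ' header layout are illustrative assumptions, not MXNet's actual code:

```python
import struct

# Illustrative header layout: flag (uint32), label (float32), id, id2 (uint64 each)
HEADER_FORMAT = "IfQQ"


def pack_record(flag, label, record_id, payload):
    """Hypothetical packer: binary header followed by the raw payload."""
    header = struct.pack(HEADER_FORMAT, flag, label, record_id, 0)
    return header + payload  # bytes + bytes works; bytes + str raises TypeError


ok = pack_record(0, 1.0, 7, b"")
print(len(ok))

try:
    pack_record(0, 1.0, 7, "")
    str_payload_failed = False
except TypeError:
    str_payload_failed = True
print("str payload rejected:", str_payload_failed)
```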

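4 Create the property configuration file

The steps above generate the rec and idx files needed for training. You also need a configuration file: create a file named property, with no extension.

Its content is the number of identities (IDs) followed by the image height and width, e.g. 1000,112,112. Use the actual number of identities in your train.lst as the first value; 1000 is only an example:

```
1000,112,112
```

datasets/lfw/ now contains train.idx, train.lst, train.rec and property.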
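Rather than typing the identity count by hand, it can be derived from train.lst. A minimal sketch, assuming labels are the third tab-separated field and run from 0 to N-1, as generatelst.py (Appendix 2) writes them. write_property is a hypothetical helper:

```python
import os
import tempfile


def write_property(lst_path, out_dir, height=112, width=112):
    """Count distinct labels (third tab-separated field) and write 'num_ids,h,w'."""
    labels = set()
    with open(lst_path) as f:
        for line in f:
            fields = line.rstrip("\n").split("\t")
            if len(fields) >= 3:
                labels.add(int(fields[2]))
    content = "%d,%d,%d" % (len(labels), height, width)
    with open(os.path.join(out_dir, "property"), "w") as f:
        f.write(content + "\n")
    return content


with tempfile.TemporaryDirectory() as d:
    lst = os.path.join(d, "train.lst")
    with open(lst, "w") as f:
        # Two identities (labels 0 and 1), three images
        f.write("1\ta/1.png\t0\n1\ta/2.png\t0\n1\tb/1.png\t1\n")
    result = write_property(lst, d)

print(result)
```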

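5 Create the pair file

To evaluate, we need a bin file for the validation set, and before generating the bin file we need a pair file, i.e. the data arranged in pairs: each line holds two image paths followed by 1 (same person) or 0 (different people).

For official datasets such as LFW and CFP, simply use the officially provided pair files. For our own data, pairs can be generated with a simple script I wrote.

Use generate_image_pairs.py (the original source had bugs; this version is fixed). The code is in Appendix 1.

../../datasets/lfw2 is the aligned image directory.

../../datasets/lfw/train.txt is where the txt file is saved.

3000 is the number of positive pairs you want; the same number of negative pairs is generated. The script prints how many positive pairs it actually wrote, which can be fewer than requested.

```bash
python3 generate_image_pairs.py --data-dir ../../datasets/lfw2 --outputtxt ../../datasets/lfw/train.txt --num-samepairs 3000
```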

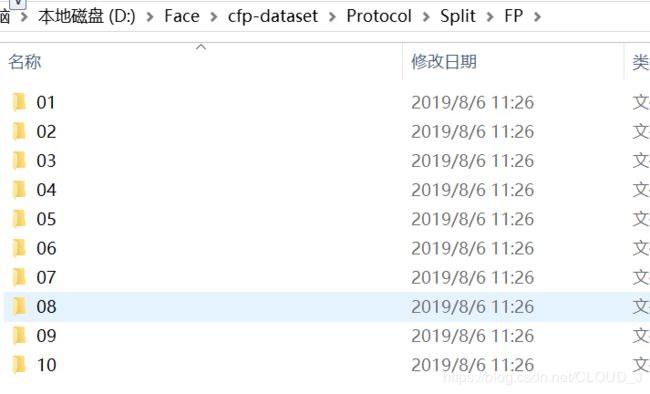
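generate_image_pairs.py (Appendix 1) only takes positive pairs from person folders with exactly four images, so it may write fewer positive pairs than requested. A hypothetical checker, count_pairs, that counts both kinds of lines in the generated train.txt:

```python
import os
import tempfile


def count_pairs(pairs_txt):
    """Count same (label 1) and different (label 0) pairs in a 'path path label' file."""
    same = diff = 0
    with open(pairs_txt) as f:
        for line in f:
            fields = line.split()
            if len(fields) < 3:
                continue  # e.g. the empty first line the script writes
            if fields[2] == "1":
                same += 1
            else:
                diff += 1
    return same, diff


with tempfile.TemporaryDirectory() as d:
    txt = os.path.join(d, "train.txt")
    with open(txt, "w") as f:
        f.write("\n")
        f.write("x/1.jpg x/2.jpg 1\n")
        f.write("x/1.jpg y/1.jpg 0\n")
    same, diff = count_pairs(txt)

print("same:", same, "different:", diff)
```

The positive count is the value lfw2pack.py's --num-samepairs later needs.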

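PS: CFP users, read on; everyone else can skip this part.

Note: generating pairs this way is not ideal. The datasets come with standard pairs files, so we can write a script to interpret them instead, as follows:

LFW: insightface already includes pair.txt.

CFP: there is no such file, only a set of FP pairs, so we need our own script. I have written it in Appendix 3; again, put it under src/data as cfp_make_bin.py.

CFP provides its pairs in the Protocol directory (the pair lists Pair_list_F.txt and Pair_list_P.txt, plus the splits under Protocol/Split/FP).

The aligned data from the earlier step is stored in cfpdataimg, but the Protocol folder was not copied along, so copy it over manually.

```bash
python3 cfp_make_bin.py --data-dir ../../datasets/cfpdataimg --output ../../datasets/cfp/cfp.bin
```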
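A sketch of the index lookup the Appendix 3 script performs, assuming each line of Pair_list_F.txt / Pair_list_P.txt is `<index> <path relative to Protocol/>` and each Split line (e.g. in same.txt) is `<frontal index>,<profile index>`, both 1-based. The sample lines are made up; the sketch also shows why Protocol must sit inside the aligned data directory, since image paths are resolved relative to it:

```python
import os


def parse_pair_list(lines):
    """Map 'index path' lines to a list of paths; list position i holds index i + 1."""
    return [line.split()[1] for line in lines if line.strip()]


def resolve_split(lines, frontal, profile, protocol_dir):
    """Turn 'i,j' lines into (frontal_path, profile_path) using 1-based indices."""
    pairs = []
    for line in lines:
        if not line.strip():
            continue
        i, j = (int(x) for x in line.strip().split(","))
        pairs.append((os.path.join(protocol_dir, frontal[i - 1]),
                      os.path.join(protocol_dir, profile[j - 1])))
    return pairs


frontal = parse_pair_list(["1 ../Data/Images/001/frontal/01.jpg",
                           "2 ../Data/Images/001/frontal/02.jpg"])
profile = parse_pair_list(["1 ../Data/Images/001/profile/01.jpg"])
pairs = resolve_split(["2,1"], frontal, profile, "cfpdataimg/Protocol")
print(pairs[0])
```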

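6 Generate the validation bin file

Once that is done, generate the bin file with lfw2pack.py under /src/data/.

It needs some modifications, though; see this blog post: https://blog.csdn.net/hanjiangxue_wei/article/details/86566348

Change line 19 of lfw2pack.py (the part commented out with # shows what changed) so that get_paths takes two arguments: the list read from the txt file and the number of positive pairs (plus one).

Note: CFP users can skip this step.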

```python
import mxnet as mx
from mxnet import ndarray as nd
import argparse
import pickle
import sys
import os
sys.path.append(os.path.join(os.path.dirname(__file__), '..', 'eval'))
import lfw

parser = argparse.ArgumentParser(description='Package LFW images')
# general
parser.add_argument('--data-dir', default='', help='')
parser.add_argument('--image-size', type=str, default='112,112', help='')
parser.add_argument('--output', default='', help='path to save.')
# number of positive (same-person) pairs at the top of train.txt
parser.add_argument('--num-samepairs', default=100)
args = parser.parse_args()

lfw_dir = args.data_dir
image_size = [int(x) for x in args.image_size.split(',')]
# our own pair file: "path0 path1 label" per line
lfw_pairs = lfw.read_pairs(os.path.join(lfw_dir, 'train.txt'))
print(lfw_pairs)
# pass the pair list and the positive-pair count (+1 because get_paths counts from 1)
lfw_paths, issame_list = lfw.get_paths(lfw_pairs, int(args.num_samepairs) + 1)  #, 'jpg')
lfw_bins = []
#lfw_data = nd.empty((len(lfw_paths), 3, image_size[0], image_size[1]))
print(len(issame_list))
i = 0
for path in lfw_paths:
    # store the encoded image bytes as-is; decoding happens at evaluation time
    with open(path, 'rb') as fin:
        _bin = fin.read()
        lfw_bins.append(_bin)
        #img = mx.image.imdecode(_bin)
        #img = nd.transpose(img, axes=(2, 0, 1))
        #lfw_data[i][:] = img
    i += 1
    if i % 1000 == 0:
        print('loading lfw', i)

with open(args.output, 'wb') as f:
    pickle.dump((lfw_bins, issame_list), f, protocol=pickle.HIGHEST_PROTOCOL)
```

The corresponding get_paths function is in src/eval/lfw.py; modify it as well:

```python
def get_paths(pairs, same_pairs):
    # The first (same_pairs - 1) pairs are treated as same-person pairs,
    # all later pairs as different-person pairs.
    nrof_skipped_pairs = 0
    path_list = []
    issame_list = []
    cnt = 1
    for pair in pairs:
        path0 = pair[0]
        path1 = pair[1]
        if cnt < same_pairs:
            issame = True
        else:
            issame = False
        if os.path.exists(path0) and os.path.exists(path1):  # Only add the pair if both paths exist
            path_list += (path0, path1)
            issame_list.append(issame)
        else:
            print('not exists', path0, path1)
            nrof_skipped_pairs += 1
        cnt += 1
    if nrof_skipped_pairs > 0:
        print('Skipped %d image pairs' % nrof_skipped_pairs)
    return path_list, issame_list
```
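Since generate_image_pairs.py already writes 1 or 0 as the third column, an alternative to counting is to read that label directly. The sketch below is a hypothetical variant, get_paths_from_labels, not the modification from the referenced blog; it assumes each pair is a sequence [path0, path1, label]:

```python
import os
import tempfile


def get_paths_from_labels(pairs):
    """Hypothetical variant of get_paths: take issame from the third column."""
    path_list = []
    issame_list = []
    skipped = 0
    for pair in pairs:
        path0, path1, label = pair[0], pair[1], pair[2]
        if os.path.exists(path0) and os.path.exists(path1):
            path_list += (path0, path1)
            issame_list.append(str(label) == "1")
        else:
            print("not exists", path0, path1)
            skipped += 1
    if skipped > 0:
        print("Skipped %d image pairs" % skipped)
    return path_list, issame_list


with tempfile.TemporaryDirectory() as d:
    a, b = os.path.join(d, "a.jpg"), os.path.join(d, "b.jpg")
    for p in (a, b):
        open(p, "wb").close()
    missing = os.path.join(d, "missing.jpg")
    paths, issame = get_paths_from_labels([[a, b, "1"], [a, b, "0"], [a, missing, "1"]])

print(len(paths), issame)
```

With this variant, the order of positive and negative lines in train.txt no longer matters and no pair count has to be kept in sync.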

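Then run the following. --num-samepairs must equal the number of positive pairs actually written to train.txt (the count printed by generate_image_pairs.py), because get_paths marks exactly that many leading lines as same-person pairs:

```bash
python3 lfw2pack.py --data-dir ../../datasets/lfw --output ../../datasets/lfw/lfw.bin --num-samepairs 300
```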
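A quick sanity check of the resulting file: it is a pickle of (list of image bytes, list of booleans) with two images per pair. check_bin is a hypothetical helper; the demo writes a tiny fake bin:

```python
import os
import pickle
import tempfile


def check_bin(bin_path):
    """Load a (bins, issame_list) pickle and check there are two images per pair."""
    with open(bin_path, "rb") as f:
        bins, issame_list = pickle.load(f)
    assert len(bins) == 2 * len(issame_list), "expected two images per pair"
    return len(issame_list), sum(issame_list)


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "lfw.bin")
    with open(path, "wb") as f:
        pickle.dump(([b"img0", b"img1", b"img2", b"img3"], [True, False]), f,
                    protocol=pickle.HIGHEST_PROTOCOL)
    num_pairs, num_same = check_bin(path)

print(num_pairs, num_same)
```

Because the file is written with pickle.HIGHEST_PROTOCOL, a bin produced under Python 3 cannot be loaded by Python 2.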

Appendix 1: pair file generation code

generate_image_pairs.py

```python
# coding:utf-8
import sys
import os
import random
import time
import itertools
import argparse

#src = '../../datasets/lfw2'
#dst = open('../../datasets/lfw/train.txt', 'a')

parser = argparse.ArgumentParser(description='generate image pairs')
# general
parser.add_argument('--data-dir', default='', help='')
parser.add_argument('--outputtxt', default='', help='path to save.')
parser.add_argument('--num-samepairs', default=100)
args = parser.parse_args()

cnt = 0
same_list = []
diff_list = []
list1 = []
list2 = []
folders_1 = os.listdir(args.data_dir)
# 'w' rather than 'a', so that re-running does not append a second set of pairs
dst = open(args.outputtxt, 'w')
count = 0
# empty first line: lfw.read_pairs skips the first line of the file
dst.writelines('\n')

# generate same-person pairs
for folder in folders_1:
    sublist = []
    same_list = []
    imgs = os.listdir(os.path.join(args.data_dir, folder))
    for img in imgs:
        img_root_path = os.path.join(args.data_dir, folder, img)
        sublist.append(img_root_path)
        list1.append(img_root_path)
    # same_list holds both paths of every 2-combination: n*(n-1) entries for n images
    for item in itertools.combinations(sublist, 2):
        for name in item:
            same_list.append(name)
    if len(same_list) > 10 and len(same_list) < 13:
        for j in range(0, len(same_list), 2):
            if count < int(args.num_samepairs):  # the number can be changed
                dst.writelines(same_list[j] + ' ' + same_list[j + 1] + ' ' + '1' + '\n')
                count += 1
    if count >= int(args.num_samepairs):
        break

list2 = list1.copy()
# generate different-person pairs
diff = 0
print(count)
# if there are far fewer different pairs than same pairs, keep generating until the counts match
while True:
    random.seed(time.time() * 100000 % 10000)
    random.shuffle(list2)
    for p in range(0, len(list2) - 1, 2):
        # compare the person folders, not only the paths, so both images come from different people
        if os.path.dirname(list2[p]) != os.path.dirname(list2[p + 1]):
            dst.writelines(list2[p] + ' ' + list2[p + 1] + ' ' + '0' + '\n')
            diff += 1
            if diff >= count:
                break
    if diff >= count:
        break

dst.close()
```
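In the script above, same_list collects both paths of every combination from one folder, so a folder with n images yields n(n-1) entries. The condition 10 < len(same_list) < 13 is therefore only met by folders with exactly four images, each contributing six positive pairs. A quick check of that arithmetic:

```python
# same_list gets 2 entries per combination, i.e. n * (n - 1) entries for n images
qualifying = [n for n in range(1, 50) if 10 < n * (n - 1) < 13]
pairs_per_folder = [n * (n - 1) // 2 for n in qualifying]
print(qualifying)  # [4]
print(pairs_per_folder)  # [6]
```

This is why the positive-pair count printed by the script can be well below --num-samepairs; relaxing that condition is one possible way to collect more pairs.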

Appendix 2: lst file generation code (generatelst.py)

```python
import os
import random
import argparse


class PairGenerator:
    def __init__(self, data_dir, pairs_filepath, img_ext):
        """
        Parameter data_dir, is your data directory.
        Parameter pairs_filepath, where is the pairs.txt that belongs to.
        Parameter img_ext, is the image data extension for all of your image data.
        """
        self.data_dir = data_dir
        self.pairs_filepath = pairs_filepath
        self.img_ext = img_ext

    # splitting the database content into 10 random sets
    def split_to_10(self):
        folders = sorted(os.listdir(self.data_dir))  # sorting names in abc order
        a = []
        # names of folders - e.g. Talgat Bigeldinov, Kairat Nurtas, etc.
        for name in folders:
            # looping through image files in one folder
            for file in os.listdir(os.path.join(self.data_dir, name)):
                a.append(name)
        random.shuffle(a)

    # writing 20 random same-person pairs drawn from one person's images
    def write_similar(self, lst):
        with open(self.pairs_filepath, 'a+') as f:
            for i in range(20):
                left = random.choice(lst)
                right = random.choice(lst)
                f.write(left + '\t' + right + '\t' + '1\n')

    # writing 1 IMAGE_PATH LABEL like insightface lst file needs
    def write_item_label(self):
        cnt = 0
        with open(self.pairs_filepath, 'a+') as f:
            for name in os.listdir(self.data_dir):
                if name == ".DS_Store":
                    continue
                for file in os.listdir(os.path.join(self.data_dir, name)):
                    if file == ".DS_Store":
                        continue
                    f.write('1\t' + os.path.join(self.data_dir, name, file) + '\t' + str(cnt) + '\n')
                cnt = cnt + 1

    # writing 1 IMAGE_PATH LABEL like insightface lst file needs in alphabetic order
    # (append mode: delete an existing list file before re-running)
    def write_item_label_abc(self):
        cnt = 0
        names = sorted(os.listdir(self.data_dir))
        with open(self.pairs_filepath, 'a+') as f:
            for name in names:
                if not os.path.isdir(os.path.join(self.data_dir, name)):
                    continue  # skip stray files such as .DS_Store
                print(name)
                for file in os.listdir(os.path.join(self.data_dir, name)):
                    if file == ".DS_Store":
                        continue
                    # aligned flag 1, image path, class label: tab-separated
                    f.write('1\t' + os.path.join(self.data_dir, name, file) + '\t' + str(cnt) + '\n')
                cnt = cnt + 1  # one label per person folder

    # writing 500 random pairs labelled as different people
    def write_different(self, lst1, lst2):
        with open(self.pairs_filepath, 'a+') as f:
            for i in range(500):
                left = random.choice(lst1)
                right = random.choice(lst2)
                f.write(left + '\t' + right + '\t' + '0\n')

    def generate_pairs(self):
        for name in os.listdir(self.data_dir):
            if name == ".DS_Store":
                continue
            a = []
            for file in os.listdir(os.path.join(self.data_dir, name)):
                if file == ".DS_Store":
                    continue
                a.append(name + '/' + file)
            self.write_similar(a)

    def generate_non_pairs(self):
        folder_list = sorted(os.listdir(self.data_dir), reverse=True)
        i = 0
        a = []
        for person in os.listdir(self.data_dir):
            if person == ".DS_Store":
                continue
            for file in os.listdir(os.path.join(self.data_dir, person)):
                if file == ".DS_Store":
                    continue
                a.append(person + '/' + file)
        b = []
        for person in os.listdir(self.data_dir):
            if person == ".DS_Store":
                continue
            for file in os.listdir(os.path.join(self.data_dir, folder_list[i])):
                if file == ".DS_Store":
                    continue
                b.append(folder_list[i] + '/' + file)
            i = i + 1
        self.write_different(a, b)


if __name__ == '__main__':
    # data_dir = "/home/ti/Downloads/DATASETS/out_data_crop/"
    # pairs_filepath = "/home/ti/Downloads/insightface/src/data/pairs.txt"
    # alternative_lst = "/home/ti/Downloads/insightface/src/data/crop.lst"
    # test_txt = "/home/ti/Downloads/DATASETS/out_data_crop/test.txt"
    # img_ext = ".png"
    # arguments to pass in command line
    parser = argparse.ArgumentParser(description='Generate an insightface lst file (1, IMAGE_PATH, LABEL per line) from a folder-per-person dataset.')
    parser.add_argument('--dataset-dir', default='', help='Full path to the directory with people and their names, folder should denote the Name_Surname of the person')
    parser.add_argument('--list-file', default='', help='Path of the lst file to write')
    parser.add_argument('--img-ext', default='', help='Image extension, e.g. .jpg (stored but not used for filtering)')
    # reading the passed arguments
    args = parser.parse_args()
    data_dir = args.dataset_dir
    lst = args.list_file
    img_ext = args.img_ext
    # generatePairs = PairGenerator(data_dir, pairs_filepath, img_ext)
    # generatePairs.write_item_label()
    generatePairs = PairGenerator(data_dir, lst, img_ext)
    generatePairs.write_item_label_abc()  # looping through our dataset and creating 1 ITEM_PATH LABEL lst file
    # generatePairs.generate_pairs()  # to use, please uncomment this line
    # generatePairs.generate_non_pairs()  # to use, please uncomment this line
    # generatePairs = PairGenerator(dataset_dir, test_txt, img_ext)
    # generatePairs.split_to_10()
```

Appendix 3: generating the CFP pairs and bin file (cfp_make_bin.py)

```python
import mxnet as mx
from mxnet import ndarray as nd
import argparse
import pickle
import sys
import os

parser = argparse.ArgumentParser(description='Package CFP-FP images')
# general
parser.add_argument('--data-dir', default='', help='')
parser.add_argument('--image-size', type=str, default='112,112', help='')
parser.add_argument('--output', default='', help='path to save.')
args = parser.parse_args()

data_dir = args.data_dir
image_size = [int(x) for x in args.image_size.split(',')]


def get_paths():
    prefix = os.path.join(data_dir, 'Protocol/')
    #prefix = "/Split/"
    prefix_F = os.path.join(prefix, "Pair_list_F.txt")
    pairs_F = []
    prefix_P = os.path.join(prefix, "Pair_list_P.txt")
    pairs_P = []
    pairs_end = []
    issame_list = []
    # read the pair lists: each line is "<index> <image path relative to Protocol/>"
    with open(prefix_F, 'r') as f:
        for line in f.readlines():
            if not line.strip():
                continue
            pair = line.strip().split()
            pairs_F.append(pair[1])
    print(len(pairs_F))
    with open(prefix_P, 'r') as f:
        for line in f.readlines():
            if not line.strip():
                continue
            pair = line.strip().split()
            pairs_P.append(pair[1])
    print(len(pairs_P))
    # read the FP split files: each line is "<frontal index>,<profile index>" (1-based)
    prefix = os.path.join(data_dir, "Protocol/Split/FP")
    folders_1 = sorted(os.listdir(prefix))  # sorted for a deterministic fold order
    for folder in folders_1:
        pairtxt = os.listdir(os.path.join(prefix, folder))
        for pair in pairtxt:
            img_root_path = os.path.join(prefix, folder, pair)
            with open(img_root_path, 'r') as f:
                for line in f.readlines():
                    if not line.strip():
                        continue
                    pair1 = line.strip().split(',')
                    pairs_end += (os.path.join(data_dir, 'Protocol/', pairs_F[int(pair1[0]) - 1]),
                                  os.path.join(data_dir, 'Protocol/', pairs_P[int(pair1[1]) - 1]))
                    # same.txt holds same-person pairs, the other file different-person pairs
                    if pair == 'same.txt':
                        issame_list.append(True)
                    else:
                        issame_list.append(False)
    return pairs_end, issame_list


lfw_paths, issame_list = get_paths()
lfw_bins = []
print(len(lfw_paths))
print(lfw_paths[0])
print(lfw_paths[1])
print(issame_list[0])
print(issame_list[1])
#lfw_data = nd.empty((len(lfw_paths), 3, image_size[0], image_size[1]))
print(len(issame_list))
i = 0
for path in lfw_paths:
    # store the encoded image bytes as-is
    with open(path, 'rb') as fin:
        _bin = fin.read()
        lfw_bins.append(_bin)
        #img = mx.image.imdecode(_bin)
        #img = nd.transpose(img, axes=(2, 0, 1))
        #lfw_data[i][:] = img
    i += 1
    if i % 1000 == 0:
        print('loading lfw', i)

with open(args.output, 'wb') as f:
    pickle.dump((lfw_bins, issame_list), f, protocol=pickle.HIGHEST_PROTOCOL)
```

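If you have questions, leave a comment. I'm working on this over the next week or two, so I can update the post and answer questions.

Next:

The full process of evaluating insightface on the validation sets

https://blog.csdn.net/CLOUD_J/article/details/98882718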