Kubernetes Series, Part 17: Deploying Kubernetes on AWS with kops and Creating a Service Backed by an AWS Network Load Balancer

1. Introduction

Once kops has been granted the required AWS permissions, it can automatically provision EC2 instances on AWS and install Kubernetes on them for you.

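Kubernetes added (alpha) support for AWS NLB in version 1.9. With it, you can create a Kubernetes Service backed directly by an AWS NLB and reach it from outside through the DNS name that AWS assigns to the load balancer.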

Reposted from https://blog.csdn.net/cloudvtech

2. Installing Kubernetes with kops

2.1 Prepare the environment

```
yum install wget
wget https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
yum install ./epel-release-latest-*.noarch.rpm
yum -y update
yum -y install python-pip
```

2.2 Install aws-cli

```
pip install --upgrade pip
pip install awscli --upgrade --user
export PATH=~/.local/bin:$PATH
```

2.3 Install kops

```
wget https://github.com/kubernetes/kops/releases/download/1.9.0/kops-linux-amd64
chmod +x kops-linux-amd64
mv kops-linux-amd64 /usr/local/bin/kops
```

2.4 Log in with the existing AWS account

```
aws configure
AWS Access Key ID [None]: XXXXXXXXXXXXXXXXXXXX
AWS Secret Access Key [None]: YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYY
Default region name [None]: us-west-1
Default output format [None]:
```

2.5 Create a new AWS IAM group

```
aws iam create-group --group-name kops
{
    "Group": {
        "Path": "/",
        "CreateDate": "2018-05-22T05:25:51.653Z",
        "GroupId": "XXXXXXXXXXXXXXXX",
        "Arn": "arn:aws:iam::1234567890:group/kops",
        "GroupName": "kops"
    }
}
```

2.6 Grant permissions to the IAM group
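The five `attach-group-policy` commands in this step differ only in the AWS managed policy name, so they can be generated from a list. Below is a minimal Python sketch, assuming Python 3.8+ (for `shlex.join`). The helper name `attach_commands` is hypothetical, and the script only prints the commands; it does not call AWS itself:

```python
import shlex

# AWS managed policies this walkthrough attaches to the "kops" group.
KOPS_POLICIES = [
    "AmazonEC2FullAccess",
    "AmazonRoute53FullAccess",
    "AmazonS3FullAccess",
    "IAMFullAccess",
    "AmazonVPCFullAccess",
]


def attach_commands(group, policies=KOPS_POLICIES):
    """Return one `aws iam attach-group-policy` command line per policy (hypothetical helper)."""
    commands = []
    for policy in policies:
        argv = [
            "aws", "iam", "attach-group-policy",
            "--policy-arn", f"arn:aws:iam::aws:policy/{policy}",
            "--group-name", group,
        ]
        commands.append(shlex.join(argv))  # quote each argument safely for a POSIX shell
    return commands


if __name__ == "__main__":
    for line in attach_commands("kops"):
        print(line)
```

Saved as a script (the file name is up to you), its output can be reviewed and then piped into `sh`.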

```
aws iam attach-group-policy --policy-arn arn:aws:iam::aws:policy/AmazonEC2FullAccess --group-name kops
aws iam attach-group-policy --policy-arn arn:aws:iam::aws:policy/AmazonRoute53FullAccess --group-name kops
aws iam attach-group-policy --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess --group-name kops
aws iam attach-group-policy --policy-arn arn:aws:iam::aws:policy/IAMFullAccess --group-name kops
aws iam attach-group-policy --policy-arn arn:aws:iam::aws:policy/AmazonVPCFullAccess --group-name kops
```

2.7 Create an AWS IAM user and add it to the group

```
aws iam create-user --user-name kops
{
    "User": {
        "UserName": "kops",
        "Path": "/",
        "CreateDate": "2018-05-22T05:26:16.080Z",
        "UserId": "XXXXXXXXXXXXXXXX",
        "Arn": "arn:aws:iam::1234567890:user/kops"
    }
}
aws iam add-user-to-group --user-name kops --group-name kops
```

2.8 Create an access key for the user

```
aws iam create-access-key --user-name kops
{
    "AccessKey": {
        "UserName": "kops",
        "Status": "Active",
        "CreateDate": "2018-05-22T05:26:26.089Z",
        "SecretAccessKey": "xxxxxxxxxxxxxxxxxxxxx",
        "AccessKeyId": "yyyyyyyyyyyyyyyyyyyyyyy"
    }
}
```

AWS returns the SecretAccessKey only at creation time, so record it for the next step.

2.9 Configure aws-cli with the new user's credentials

```
aws configure
AWS Access Key ID [****************7LHA]: yyyyyyyyyyyyyyyyyyyyyyy
AWS Secret Access Key [****************2dyW]: xxxxxxxxxxxxxxxxxxxxx
Default region name [us-west-1]: us-west-1
Default output format [None]:
```

2.10 Create the S3 bucket
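This step involves two region details:

- `Default region name` in `aws configure` must be a region such as `us-west-1`, not an availability zone such as `us-west-1a`.
- The `create-bucket` command below reads `$AWS_REGION`, so that variable has to be set in the shell.

A standard AZ name is its region plus one trailing letter. Below is a minimal Python sketch that relies on this naming pattern and does not handle Local Zone or Wavelength zone names; the helpers `region_from_az` and `create_bucket_args` are hypothetical. It also encodes the S3 rule that an explicit `LocationConstraint` of `us-east-1` is rejected, so that region must omit the option:

```python
import re

# Standard AZ names are "<region><letter>", e.g. "us-west-1a" -> "us-west-1".
# Assumption: Local Zone / Wavelength names (e.g. "us-west-2-lax-1a") are rejected.
_AZ_PATTERN = re.compile(r"^([a-z]{2}(?:-gov)?-[a-z]+-\d+)[a-z]$")


def region_from_az(az):
    """Strip the trailing zone letter from a standard AZ name (hypothetical helper)."""
    match = _AZ_PATTERN.match(az)
    if match is None:
        raise ValueError(f"not a standard availability-zone name: {az!r}")
    return match.group(1)


def create_bucket_args(bucket, region):
    """Build the aws-cli argv for the kops state-store bucket (hypothetical helper)."""
    argv = ["aws", "s3api", "create-bucket", "--bucket", bucket]
    # S3 rejects LocationConstraint=us-east-1; every other region needs the option.
    if region != "us-east-1":
        argv += ["--create-bucket-configuration", f"LocationConstraint={region}"]
    return argv
```

For example, `region_from_az("us-west-1a")` returns `"us-west-1"`, which is the value to export as `AWS_REGION` here.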

```
export NAME=cluster.k8s.local
export AWS_REGION=us-west-1
aws s3api create-bucket --bucket ${NAME}-state-store --create-bucket-configuration LocationConstraint=$AWS_REGION
{
    "Location": "http://cluster.k8s.local-state-store.s3.amazonaws.com/"
}
export KOPS_STATE_STORE=s3://cluster.k8s.local-state-store
```

2.11 Create the Kubernetes cluster with kops
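The flags used in this step map one-to-one onto an argument list. That makes the command easy to parameterize, or to run from a script with `subprocess.run(argv, check=True)` once `KOPS_STATE_STORE` is set. Below is a minimal sketch; `kops_create_cluster_argv` is a hypothetical helper whose defaults copy this section's values.

It refuses names without the `.k8s.local` suffix because kops uses gossip discovery for such names; other names need a real DNS zone. The `Gossip DNS: skipping DNS validation` line in the 2.12 log comes from this behavior. Unlike the shell command, the sketch expands `~` in the SSH key path itself:

```python
import os

GOSSIP_SUFFIX = ".k8s.local"


def kops_create_cluster_argv(name, zones, vpc, image, master_count=1,
                             master_size="t2.xlarge", node_count=2,
                             node_size="t2.xlarge", networking="calico",
                             ssh_public_key=None):
    """Assemble the `kops create cluster` flags used in this walkthrough (hypothetical helper)."""
    if not name.endswith(GOSSIP_SUFFIX):
        # Without the .k8s.local suffix kops expects a real DNS zone (e.g. in Route53).
        raise ValueError("this sketch assumes a gossip cluster name ending in .k8s.local")
    if ssh_public_key is None:
        ssh_public_key = os.path.expanduser("~/.ssh/id_rsa.pub")
    return [
        "kops", "create", "cluster",
        f"--name={name}",
        f"--image={image}",
        f"--zones={','.join(zones)}",
        f"--master-count={master_count}",
        f"--master-size={master_size}",
        f"--node-count={node_count}",
        f"--node-size={node_size}",
        f"--vpc={vpc}",
        f"--networking={networking}",
        f"--ssh-public-key={ssh_public_key}",
    ]
```

Like the shell command, the resulting argv only writes the cluster configuration; nothing is created in AWS until `kops update cluster ... --yes` runs.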

```
kops create cluster \
    --name=${NAME} \
    --image=ami-18726478 \
    --zones=us-west-1a \
    --master-count=1 \
    --master-size="t2.xlarge" \
    --node-count=2 \
    --node-size="t2.xlarge" \
    --vpc=vpc-bbbbbbbbb \
    --networking=calico \
    --ssh-public-key="~/.ssh/id_rsa.pub"
```

The last part of the output:

```
Must specify --yes to apply changes
Cluster configuration has been created.
Suggestions:
 * list clusters with: kops get cluster
 * edit this cluster with: kops edit cluster cluster.k8s.local
 * edit your node instance group: kops edit ig --name=cluster.k8s.local nodes
 * edit your master instance group: kops edit ig --name=cluster.k8s.local master-us-west-1a
Finally configure your cluster with: kops update cluster cluster.k8s.local --yes
```

2.12 Start the cluster

```
kops update cluster cluster.k8s.local --yes
```

Logs:

```
I0522 05:44:52.534291 20089 apply_cluster.go:456] Gossip DNS: skipping DNS validation
W0522 05:44:52.563080 20089 firewall.go:249] Opening etcd port on masters for access from the nodes, for calico. This is unsafe in untrusted environments.
I0522 05:44:52.761542 20089 executor.go:91] Tasks: 0 done / 79 total; 32 can run
I0522 05:44:53.160191 20089 vfs_castore.go:731] Issuing new certificate: "apiserver-aggregator-ca"
I0522 05:44:53.270980 20089 vfs_castore.go:731] Issuing new certificate: "ca"
I0522 05:44:53.418228 20089 executor.go:91] Tasks: 32 done / 79 total; 23 can run
I0522 05:44:53.741454 20089 vfs_castore.go:731] Issuing new certificate: "kubecfg"
I0522 05:44:53.805113 20089 vfs_castore.go:731] Issuing new certificate: "kubelet"
I0522 05:44:53.885277 20089 vfs_castore.go:731] Issuing new certificate: "apiserver-proxy-client"
I0522 05:44:53.972077 20089 vfs_castore.go:731] Issuing new certificate: "kubelet-api"
I0522 05:44:54.096859 20089 vfs_castore.go:731] Issuing new certificate: "kube-controller-manager"
I0522 05:44:54.117068 20089 vfs_castore.go:731] Issuing new certificate: "kube-scheduler"
I0522 05:44:54.141624 20089 vfs_castore.go:731] Issuing new certificate: "apiserver-aggregator"
I0522 05:44:54.165143 20089 vfs_castore.go:731] Issuing new certificate: "kube-proxy"
I0522 05:44:54.630570 20089 vfs_castore.go:731] Issuing new certificate: "kops"
I0522 05:44:54.741924 20089 executor.go:91] Tasks: 55 done / 79 total; 20 can run
I0522 05:44:54.950987 20089 launchconfiguration.go:341] waiting for IAM instance profile "nodes.cluster.k8s.local" to be ready
I0522 05:44:54.988838 20089 launchconfiguration.go:341] waiting for IAM instance profile "masters.cluster.k8s.local" to be ready
I0522 05:45:05.329692 20089 executor.go:91] Tasks: 75 done / 79 total; 3 can run
I0522 05:45:05.923202 20089 vfs_castore.go:731] Issuing new certificate: "master"
I0522 05:45:06.306295 20089 executor.go:91] Tasks: 78 done / 79 total; 1 can run
W0522 05:45:06.492131 20089 executor.go:118] error running task "LoadBalancerAttachment/api-master-us-west-1a" (9m59s remaining to succeed): error attaching autoscaling group to ELB: ValidationError: Provided Load Balancers may not be valid. Please ensure they exist and try again.
    status code: 400, request id: 47ee7a1b-5d83-11e8-8909-f9b2d6aabfec
I0522 05:45:06.492161 20089 executor.go:133] No progress made, sleeping before retrying 1 failed task(s)
I0522 05:45:16.492436 20089 executor.go:91] Tasks: 78 done / 79 total; 1 can run
W0522 05:45:16.662368 20089 executor.go:118] error running task "LoadBalancerAttachment/api-master-us-west-1a" (9m49s remaining to succeed): error attaching autoscaling group to ELB: ValidationError: Provided Load Balancers may not be valid. Please ensure they exist and try again.
    status code: 400, request id: 4e013103-5d83-11e8-bdfc-4f93318e3eef
I0522 05:45:16.662398 20089 executor.go:133] No progress made, sleeping before retrying 1 failed task(s)
I0522 05:45:26.662694 20089 executor.go:91] Tasks: 78 done / 79 total; 1 can run
I0522 05:45:27.058456 20089 executor.go:91] Tasks: 79 done / 79 total; 0 can run
I0522 05:45:27.058645 20089 kubectl.go:134] error running kubectl config view --output json
I0522 05:45:27.058656 20089 kubectl.go:135]
I0522 05:45:27.058662 20089 kubectl.go:136]
W0522 05:45:27.058678 20089 update_cluster.go:279] error reading kubecfg: error getting config from kubectl: error running kubectl: exec: "kubectl": executable file not found in $PATH
I0522 05:45:27.098105 20089 update_cluster.go:291] Exporting kubecfg for cluster
kops has set your kubectl context to cluster.k8s.local

Cluster changes have been applied to the cloud.

Changes may require instances to restart: kops rolling-update cluster
```

The two LoadBalancerAttachment errors are transient: kops retries the task, and the third attempt succeeds (79 done / 79 total). The kubectl warning only means that kubectl was not yet installed on this host. kops still exported the kubeconfig, but kubectl must be installed before the next step.

2.13 Check the cluster
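`kops update cluster` returns once the changes have been applied to the cloud. The instances still need time to boot and register with the API server. `kops validate cluster` performs this check for you.

As a lighter sketch, the hypothetical helper below parses the default `kubectl get nodes` table and lists every node whose STATUS is not exactly `Ready`. It assumes whitespace-separated columns as in the output below, and it also counts `Ready,SchedulingDisabled` as not ready:

```python
def not_ready_nodes(get_nodes_output):
    """Return names of nodes whose STATUS column is not exactly "Ready" (hypothetical helper).

    Assumes the default (non-wide) `kubectl get nodes` table with a header row
    such as: NAME STATUS ROLES AGE VERSION.
    """
    lines = [line for line in get_nodes_output.splitlines() if line.strip()]
    if not lines:
        return []
    status_col = lines[0].split().index("STATUS")
    not_ready = []
    for row in lines[1:]:
        fields = row.split()
        if fields[status_col] != "Ready":
            not_ready.append(fields[0])
    return not_ready
```

To feed it live data, pass the output of `subprocess.run(["kubectl", "get", "nodes"], capture_output=True, text=True, check=True).stdout` (Python 3.7+). An empty result means every listed node is Ready.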

```
[root@ip-10-0-103-135 .ssh]# kubectl get nodes
NAME                                        STATUS   ROLES    AGE   VERSION
ip-10-0-41-218.us-west-1.compute.internal   Ready    master   15m   v1.9.3
ip-10-0-49-17.us-west-1.compute.internal    Ready    node     10m   v1.9.3
ip-10-0-53-149.us-west-1.compute.internal   Ready    node     9m    v1.9.3
```

2.14 SSH into a Kubernetes node

[root@ip-10-0-103-135 ~]# ssh [email protected]

Last login: Tue May 22 06:04:58 2018 from ip-10-0-103-135.us-west-1.compute.internal

[ec2-user@ip-10-0-53-149 ~]$ sudo su

[root@ip-10-0-53-149 ec2-user]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2d34f2985513 gcr.io/google_containers/k8s-dns-sidecar-amd64@sha256:535d108a4951f0c9c479949ff96878f353403458ec908266db36a98e0449c8b6 "/sidecar --v=2 --..." 10 minutes ago Up 10 minutes k8s_sidecar_kube-dns-7785f4d7dc-xpx5v_kube-system_c5f02eac-5d83-11e8-8bf9-02ef9dce711c_0

be2d5fbf37d6 gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64@sha256:4278a5d5fae8ad1612402eae4e5aea40aad7af3c63fbfbc1ca23e14c6a8dcd71 "/dnsmasq-nanny -v..." 10 minutes ago Up 10 minutes k8s_dnsmasq_kube-dns-7785f4d7dc-xpx5v_kube-system_c5f02eac-5d83-11e8-8bf9-02ef9dce711c_0

84277b8995ea gcr.io/google_containers/cluster-proportional-autoscaler-amd64@sha256:003f98d9f411ddfa6ff6d539196355e03ddd69fa4ed38c7ffb8fec6f729afe2d "/cluster-proporti..." 10 minutes ago Up 10 minutes k8s_autoscaler_kube-dns-autoscaler-787d59df8f-gtr5n_kube-system_c5f0169d-5d83-11e8-8bf9-02ef9dce711c_0

918de60b761b gcr.io/google_containers/k8s-dns-kube-dns-amd64@sha256:7d3d06a0c5577f6f546d34d4bbde5b495157ee00b55d83052d68f723421827da "/kube-dns --domai..." 10 minutes ago Up 10 minutes k8s_kubedns_kube-dns-7785f4d7dc-xpx5v_kube-system_c5f02eac-5d83-11e8-8bf9-02ef9dce711c_0

6ee8444f5c88 gcr.io/google_containers/pause-amd64:3.0 "/pause" 10 minutes ago Up 10 minutes k8s_POD_kube-dns-autoscaler-787d59df8f-gtr5n_kube-system_c5f0169d-5d83-11e8-8bf9-02ef9dce711c_0

358977e7ff85 gcr.io/google_containers/pause-amd64:3.0 "/pause" 10 minutes ago Up 10 minutes k8s_POD_kube-dns-7785f4d7dc-xpx5v_kube-system_c5f02eac-5d83-11e8-8bf9-02ef9dce711c_0

69ecd8e47993 quay.io/calico/cni@sha256:3a23e093b1e98cf232a226fedff591d33919f5297f016a41d8012efc83b23a84 "/install-cni.sh" 10 minutes ago Up 10 minutes k8s_install-cni_calico-node-5wqch_kube-system_968f8fae-5d84-11e8-90f4-02ef9dce711c_0

8bb93c57e11e quay.io/calico/node@sha256:7758c25549fcfe677699bbcd3c279b3a174e7cbbbf9d16f3d71713d68f695dfb "start_runit" 11 minutes ago Up 11 minutes k8s_calico-node_calico-node-5wqch_kube-system_968f8fae-5d84-11e8-90f4-02ef9dce711c_0

511c7fa8a052 gcr.io/google_containers/pause-amd64:3.0 "/pause" 11 minutes ago Up 11 minutes k8s_POD_calico-node-5wqch_kube-system_968f8fae-5d84-11e8-90f4-02ef9dce711c_0

039f90d7ce35 protokube:1.9.0 "/usr/bin/protokub..." 18 minutes ago Up 18 minutes loving_payne

b271e18c1c64 gcr.io/google_containers/kube-proxy@sha256:19277373ca983423c3ff82dbb14f079a2f37b84926a4c569375314fa39a4ee96 "/bin/sh -c 'mkfif..." 18 minutes ago Up 18 minutes k8s_kube-proxy_kube-proxy-ip-10-0-53-149.us-west-1.compute.internal_kube-system_634b68e811d24cbe6f22fc91a57ebe53_0

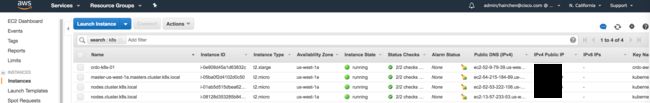

564bae247807 gcr.io/google_containers/pause-amd64:3.0 "/pause" 18 minutes ago Up 18 minutes k8s_POD_kube-proxy-ip-10-0-53-149.us-west-1.compute.internal_kube-system_634b68e811d24cbe6f22fc91a57ebe53_02.15 AWS web console的信息


3. Deploying an nginx Deployment and an external load balancer

3.1 Deploy the nginx Deployment

nginx.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

Apply the manifest with `kubectl apply -f nginx.yaml`, then check the pods:

```
[root@ip-10-0-103-135 ec2-user]# kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-6c54bd5869-8rnsb   1/1     Running   0          8m
nginx-deployment-6c54bd5869-h6d9t   1/1     Running   0          8m
nginx-deployment-6c54bd5869-zdz28   1/1     Running   0          8m
```

3.2 Deploy an NLB-backed Kubernetes load-balancer Service
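The `nlb.yml` manifest in this step can also be generated programmatically. kubectl accepts JSON manifests as well as YAML, so a dict serialized with `json.dumps` can be piped into `kubectl apply -f -`.

Below is a minimal sketch that mirrors `nlb.yml` field for field; `nlb_service` is a hypothetical helper. The `service.beta.kubernetes.io/aws-load-balancer-type: nlb` annotation is what asks the AWS cloud provider for an NLB instead of a Classic ELB:

```python
import json

NLB_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-type"


def nlb_service(name, app_label, port=80, target_port=80, namespace="default"):
    """Return a Service manifest equivalent to nlb.yml as a dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "labels": {"app": app_label},
            # Request an AWS Network Load Balancer rather than a Classic ELB.
            "annotations": {NLB_ANNOTATION: "nlb"},
        },
        "spec": {
            "type": "LoadBalancer",
            # Local: only nodes running a matching pod receive traffic.
            "externalTrafficPolicy": "Local",
            "selector": {"app": app_label},
            "ports": [
                {"name": "http", "port": port, "protocol": "TCP", "targetPort": target_port}
            ],
        },
    }


if __name__ == "__main__":
    # Example: python3 this_script.py | kubectl apply -f -
    print(json.dumps(nlb_service("nginx", "nginx"), indent=2))
```

The selector must match the pod labels of the Deployment from 3.1 (`app: nginx`); otherwise the Service has no endpoints.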

nlb.yml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  externalTrafficPolicy: Local
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: LoadBalancer
```

Apply it with `kubectl apply -f nlb.yml`.

3.3 Check the Service status
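The `LoadBalancer Ingress` field in the output below appears only after the service controller has created the NLB, as shown by the `EnsuringLoadBalancer` / `EnsuredLoadBalancer` events. In the Service object this value is `.status.loadBalancer.ingress[0].hostname`.

Below is a minimal polling sketch, assuming kubectl is on PATH and Python 3.7+ (for `capture_output`). All three function names are hypothetical. The polling function takes the fetcher as a parameter so it can be exercised without a cluster:

```python
import json
import subprocess
import time


def kubectl_get_service(name, namespace="default"):
    """Fetch a Service object as a dict via `kubectl get svc -o json` (requires kubectl)."""
    result = subprocess.run(
        ["kubectl", "get", "svc", name, "--namespace", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def lb_hostname(service):
    """Return the first load-balancer hostname of a Service, or None if not assigned yet."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    return ingress[0].get("hostname") if ingress else None


def wait_for_lb_hostname(fetch, timeout=600.0, interval=10.0):
    """Call fetch() until the Service reports a hostname; raise TimeoutError after `timeout` s."""
    deadline = time.monotonic() + timeout
    while True:
        hostname = lb_hostname(fetch())
        if hostname:
            return hostname
        if time.monotonic() >= deadline:
            raise TimeoutError("no load balancer hostname assigned yet")
        time.sleep(interval)
```

Usage against the cluster: `wait_for_lb_hostname(lambda: kubectl_get_service("nginx"))`.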

```
kubectl describe svc nginx
Name:                     nginx
Namespace:                default
Labels:                   app=nginx
Annotations:              kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"v1","kind":"Service","metadata":{"annotations":{"service.beta.kubernetes.io/aws-load-balancer-type":"nlb"},"labels":{"app":"nginx"},"nam...
                          service.beta.kubernetes.io/aws-load-balancer-type=nlb
Selector:                 app=nginx
Type:                     LoadBalancer
IP:                       100.65.228.231
LoadBalancer Ingress:     a6000e4955d8811e8a77e02ef9dce711-6e78fd3ea87bc9af.elb.us-west-1.amazonaws.com
Port:                     http  80/TCP
TargetPort:               80/TCP
NodePort:                 http  31973/TCP
Endpoints:                100.113.75.194:80,100.113.75.195:80,100.99.250.67:80
Session Affinity:         None
External Traffic Policy:  Local
HealthCheck NodePort:     30353
Events:
  Type    Reason                Age   From                Message
  ----    ------                ----  ----                -------
  Normal  EnsuringLoadBalancer  4m    service-controller  Ensuring load balancer
  Normal  EnsuredLoadBalancer   4m    service-controller  Ensured load balancer
```

3.4 Check the web console

load balancer

target group
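With `externalTrafficPolicy: Local`, not every instance in the target group is expected to pass the health check. kube-proxy answers on the HealthCheck NodePort (30353 in the 3.3 output) with success only on nodes that host at least one ready endpoint of the Service. The NLB therefore forwards traffic only to those nodes, and the client source IP is preserved.

Below is a minimal model of that rule. The function name, the node names and the pod placement used with it are hypothetical, not read from this cluster:

```python
from collections import Counter


def expected_target_health(target_nodes, endpoint_nodes):
    """Model the Local-policy health check: a node passes iff it hosts >= 1 ready endpoint.

    target_nodes:   nodes registered as targets in the NLB target group.
    endpoint_nodes: the node name of each ready pod backing the Service.
    """
    local_endpoints = Counter(endpoint_nodes)  # missing nodes count as 0
    return {
        node: "healthy" if local_endpoints[node] > 0 else "unhealthy"
        for node in target_nodes
    }
```

For example, with three hypothetical targets and pods placed on `node-a` (twice) and `node-b`, `node-c` would be reported unhealthy. That is the expected state for a node without a local nginx pod, not a fault.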

3.5 Access the NLB
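The NLB listens on TCP port 80 (from `nlb.yml`) at the `LoadBalancer Ingress` hostname, so `curl http://<that hostname>/` should return the nginx welcome page. A newly created NLB's DNS name can take a few minutes to resolve.

Below is the same check in Python using only the standard library; `nlb_url` and `probe` are hypothetical helper names:

```python
import urllib.request


def nlb_url(hostname, port=80, path="/"):
    """Build the URL to test; nlb.yml exposes plain TCP 80, so use http:// and omit port 80."""
    authority = hostname if port == 80 else f"{hostname}:{port}"
    return f"http://{authority}{path}"


def probe(hostname, port=80, timeout=5.0):
    """Send one GET through the NLB; return (HTTP status, first 200 bytes of the body)."""
    with urllib.request.urlopen(nlb_url(hostname, port), timeout=timeout) as resp:
        return resp.status, resp.read(200)
```

Usage: `probe("a6000e4955d8811e8a77e02ef9dce711-6e78fd3ea87bc9af.elb.us-west-1.amazonaws.com")`. `urlopen` raises `urllib.error.URLError` while the name does not resolve yet, so retry after a short wait in that case.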