Training Your Own Dataset with Faster R-CNN on Caffe

1. Modify the files for your own dataset

This walkthrough uses VGG_CNN_M_1024 as the example and trains in end2end mode.

① Open py-faster-rcnn-master\models\pascal_voc\VGG_CNN_M_1024\faster_rcnn_end2end\train.prototxt and change 4 places.

    name: "VGG_CNN_M_1024"
    layer {
      name: 'input-data'
      type: 'Python'
      top: 'data'
      top: 'im_info'
      top: 'gt_boxes'
      python_param {
        module: 'roi_data_layer.layer'
        layer: 'RoIDataLayer'
        param_str: "'num_classes': 2"  # change to number of training classes + 1
      }
    }

    layer {
      name: 'roi-data'
      type: 'Python'
      bottom: 'rpn_rois'
      bottom: 'gt_boxes'
      top: 'rois'
      top: 'labels'
      top: 'bbox_targets'
      top: 'bbox_inside_weights'
      top: 'bbox_outside_weights'
      python_param {
        module: 'rpn.proposal_target_layer'
        layer: 'ProposalTargetLayer'
        param_str: "'num_classes': 2"  # change to number of training classes + 1
      }
    }

    # Inside the cls_score layer (only the changed part is shown):
      inner_product_param {
        num_output: 2  # change to number of training classes + 1
        weight_filler {
          type: "gaussian"
          std: 0.01
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }

    # Inside the bbox_pred layer (only the changed part is shown):
      inner_product_param {
        num_output: 8  # change to (number of training classes + 1) * 4
        weight_filler {
          type: "gaussian"
          std: 0.001
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }
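All four values edited in train.prototxt follow from the number of object classes in the dataset. A minimal sketch of that arithmetic in Python is given here; the function name `prototxt_values` and the dictionary keys are made up for illustration and are not part of py-faster-rcnn.

```python
def prototxt_values(num_object_classes):
    """Return the values to write into train.prototxt for a dataset.

    num_object_classes counts only your own classes; the background
    class is added here.
    """
    num_classes = num_object_classes + 1  # +1 for '__background__'
    return {
        "input-data num_classes": num_classes,
        "roi-data num_classes": num_classes,
        "cls_score num_output": num_classes,
        "bbox_pred num_output": 4 * num_classes,  # 4 box coordinates per class
    }


if __name__ == "__main__":
    # One object class ('knife'), as in this walkthrough.
    for key, value in prototxt_values(1).items():
        print(key, "=", value)
```

With one object class this gives 2 for the three class counts and 8 for bbox_pred, matching the listings above.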

② Open py-faster-rcnn-master\models\pascal_voc\VGG_CNN_M_1024\faster_rcnn_end2end\test.prototxt and change 2 places.

    layer {
      name: "cls_score"
      type: "InnerProduct"
      bottom: "fc7"
      top: "cls_score"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      inner_product_param {
        num_output: 2  # change to number of training classes + 1
      }
    }

    layer {
      name: "bbox_pred"
      type: "InnerProduct"
      bottom: "fc7"
      top: "bbox_pred"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      inner_product_param {
        num_output: 8  # change to (number of training classes + 1) * 4
      }
    }

③ Find imdb.py in the py-faster-rcnn-master folder (under lib\datasets) and modify append_flipped_images as follows.

    # Uses PIL, so imdb.py must import PIL.
    def append_flipped_images(self):
        num_images = self.num_images
        #widths = self._get_widths()
        # Read each image width directly from the image file.
        widths = [PIL.Image.open(self.image_path_at(i)).size[0]
                  for i in xrange(num_images)]
        for i in xrange(num_images):
            boxes = self.roidb[i]['boxes'].copy()
            oldx1 = boxes[:, 0].copy()
            oldx2 = boxes[:, 2].copy()
            # Mirror the x coordinates horizontally.
            boxes[:, 0] = widths[i] - oldx2 - 1
            boxes[:, 2] = widths[i] - oldx1 - 1
            # Clamp x1 to 0 wherever the flip produced x2 < x1.
            for b in range(len(boxes)):
                if boxes[b][2] < boxes[b][0]:
                    boxes[b][0] = 0
            assert (boxes[:, 2] >= boxes[:, 0]).all()
            entry = {'boxes' : boxes,
                     'gt_overlaps' : self.roidb[i]['gt_overlaps'],
                     'gt_classes' : self.roidb[i]['gt_classes'],
                     'flipped' : True}
            self.roidb.append(entry)
        self._image_index = self._image_index * 2
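To see what the flip does to a single box, here is a small pure-Python sketch of the same arithmetic. It works on plain lists rather than the NumPy arrays used in imdb.py, and `flip_boxes` is a hypothetical helper, not a function from the repository.

```python
def flip_boxes(boxes, width):
    """Horizontally flip [x1, y1, x2, y2] boxes inside an image of the given width."""
    flipped = []
    for x1, y1, x2, y2 in boxes:
        new_x1 = width - x2 - 1
        new_x2 = width - x1 - 1
        # Same safeguard as in the modified method: never let x1 exceed x2.
        if new_x2 < new_x1:
            new_x1 = 0
        flipped.append([new_x1, y1, new_x2, y2])
    return flipped


if __name__ == "__main__":
    print(flip_boxes([[10, 20, 50, 60]], width=100))
```

For well-formed annotations (x1 <= x2 and both inside the image) the flip keeps x1 <= x2 on its own; the clamp only takes effect for boxes whose coordinates were already out of range.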

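④ In the folder py-faster-rcnn-master\lib\rpn, find the 1st and 5th files shown in the figure and change every occurrence of param_str_ to param_str. Then find layer.py in py-faster-rcnn-master (under lib\roi_data_layer) and make the same change there.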

    def setup(self, bottom, top):
        """Setup the RoIDataLayer."""
        # parse the layer parameter string, which must be valid YAML
        layer_params = yaml.load(self.param_str)  # was self.param_str_
        self._num_classes = layer_params['num_classes']
        self._name_to_top_map = {}
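The rename can also be done with a short script instead of by hand. This is only a sketch, assuming the affected files are plain-text Python sources; `rename_param_str` and `rename_in_file` are hypothetical helpers, and the paths you pass in must be adapted to your own checkout.

```python
import re

# Match the attribute name param_str_ but leave an already renamed param_str alone.
_PATTERN = re.compile(r"\bparam_str_\b")


def rename_param_str(source_text):
    """Return source_text with every param_str_ replaced by param_str."""
    return _PATTERN.sub("param_str", source_text)


def rename_in_file(path):
    """Rewrite one file in place and report whether it changed."""
    with open(path, "r") as handle:
        original = handle.read()
    updated = rename_param_str(original)
    if updated != original:
        with open(path, "w") as handle:
            handle.write(updated)
    return updated != original
```

Calling `rename_in_file` on each of the files named in step ④ applies the change; running it a second time is harmless because nothing is left to replace.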

⑤ Find config.py in the py-faster-rcnn-master folder (under lib\fast_rcnn) and change the proposal method for training and testing.

    # Train using these proposals
    #__C.TRAIN.PROPOSAL_METHOD = 'selective_search'
    __C.TRAIN.PROPOSAL_METHOD = 'gt'

    # Test using these proposals
    #__C.TEST.PROPOSAL_METHOD = 'selective_search'
    __C.TEST.PROPOSAL_METHOD = 'gt'

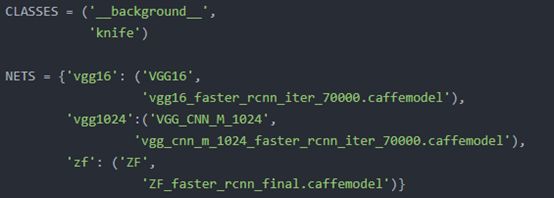

⑥ Find pascal_voc.py in the py-faster-rcnn-master folder (under lib\datasets) and replace the class list with your own classes.

    def __init__(self, image_set, year, devkit_path=None):
        imdb.__init__(self, 'voc_' + year + '_' + image_set)
        self._year = year
        self._image_set = image_set
        self._devkit_path = self._get_default_path() if devkit_path is None \
                            else devkit_path
        self._data_path = os.path.join(self._devkit_path, 'VOC' + self._year)
        # List your own classes here; keep '__background__' first.
        self._classes = ('__background__', # always index 0
                         'knife')
        self._class_to_ind = dict(zip(self.classes, xrange(self.num_classes)))
        self._image_ext = '.jpg'
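The class tuple determines the integer label of every class. The sketch below rebuilds that mapping outside the class so it can be inspected on its own; the names `CLASSES` and `build_class_to_ind` are illustrative only and do not exist in the repository.

```python
CLASSES = ('__background__',  # always index 0
           'knife')


def build_class_to_ind(classes):
    """Map each class name to its integer label, in tuple order."""
    return dict(zip(classes, range(len(classes))))


if __name__ == "__main__":
    mapping = build_class_to_ind(CLASSES)
    print(mapping)
    # len(CLASSES) is the num_classes value used in the prototxt files.
    print("num_classes =", len(CLASSES))
```

As far as I can tell, the upstream annotation loader lowercases the object names it reads from the XML files before looking them up in this mapping, so it is safest to write the class names in lowercase here.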

On Windows, the following change is also required:

    def _do_python_eval(self, output_dir = 'output'):
        annopath = os.path.join(
            self._devkit_path,
            'VOC' + self._year,
            'Annotations',
            '{}.xml')  # '{:s}.xml' in the upstream file
        imagesetfile = os.path.join(

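2. end2end training

① Clear the cache:

Before every training run, delete the files in py-faster-rcnn-master\data\cache and py-faster-rcnn-master\data\VOCdevkit2007\annotations_cache. To avoid mixing up the new model with earlier ones, also delete the output folder (or rename it) before training.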
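Because the cache has to be cleared before every run, it can be convenient to script it. The following is a sketch that assumes the two cache locations named above, relative to the repository root; `clear_caches` is a hypothetical helper and leaves the output folder untouched.

```python
import os
import shutil


def clear_caches(repo_root):
    """Delete cached files under the two cache folders of repo_root.

    Returns the list of cache directories that were emptied.
    """
    cache_dirs = [
        os.path.join(repo_root, "data", "cache"),
        os.path.join(repo_root, "data", "VOCdevkit2007", "annotations_cache"),
    ]
    emptied = []
    for cache_dir in cache_dirs:
        if not os.path.isdir(cache_dir):
            continue  # nothing cached yet
        for name in os.listdir(cache_dir):
            path = os.path.join(cache_dir, name)
            if os.path.isdir(path):
                shutil.rmtree(path)
            else:
                os.remove(path)
        emptied.append(cache_dir)
    return emptied
```

Call it with the path of your checkout, for example `clear_caches("py-faster-rcnn-master")`, before starting the training script.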

② Change the number of iterations:

Open faster_rcnn_end2end.sh in py-faster-rcnn-master\experiments\scripts and edit the iteration count.

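③ Start training:

Install Git, right-click in the root directory of py-faster-rcnn, open Git Bash, and enter "./experiments/scripts/faster_rcnn_end2end.sh 0 VGG_CNN_M_1024 pascal_voc" (the arguments are the GPU id, the network name, and the dataset).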

Git download: https://git-scm.com/downloads/

Git installation guides:

https://blog.csdn.net/mengdc/article/details/76566049

https://blog.csdn.net/wangrenbao123/article/details/55511461/

④ Problems encountered during training:

Error 1:

I1116 20:28:35.41Loading pretrained model weights from data/imagenet_models/VGG_CNN_M_1024.v2.caffemodel

***Check failure stack trace: ***

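Fix: download the pretrained model archive imagenet_models.rar, extract it, and place it in py-faster-rcnn-master\data. Alternatively, download it from the command line:

    $ cd py-faster-rcnn
    $ ./data/scripts/fetch_imagenet_models.sh

Download link: https://dl.dropbox.com/s/gstw7122padlf0l/imagenet_models.tgz?dl=0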

Error 2:

TypeError: slice indices must be integers or None or have an __index__ method

Fix: in the py-faster-rcnn folder, find line 172 of minibatch.py and line 123 of proposal_target_layer.py and modify the code.

Original code:

        for ind in inds:
            cls = clss[ind]
            start = 4 * cls
            end = start + 4
            bbox_targets[ind, start:end] = bbox_target_data[ind, 1:]
            bbox_inside_weights[ind, start:end] = cfg.TRAIN.BBOX_INSIDE_WEIGHTS
        return bbox_targets, bbox_inside_weights

Change it to:

        for ind in inds:
            ind = int(ind)
            cls = clss[ind]
            # Cast to int so the values can be used as slice bounds.
            start = int(4 * cls)
            end = int(start + 4)
            bbox_targets[ind, start:end] = bbox_target_data[ind, 1:]
            bbox_inside_weights[ind, start:end] = cfg.TRAIN.BBOX_INSIDE_WEIGHTS
        return bbox_targets, bbox_inside_weights
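The error appears because the slice bounds are floating-point values: the class labels are stored as floats, so 4 * cls is a float as well. The sketch below reproduces the effect with a plain Python list (the repository code uses NumPy arrays, and newer NumPy versions reject float indices in the same way); `fill_targets` is a hypothetical stand-in for the loop body, not code from the repository.

```python
def fill_targets(row, cls, values):
    """Write four values into the slot of row that belongs to class cls."""
    start = int(4 * cls)  # cast: cls may arrive as a float such as 1.0
    end = start + 4
    row[start:end] = values
    return row


if __name__ == "__main__":
    row = [0.0] * 8
    try:
        row[4 * 1.0:4 * 1.0 + 4]  # float bounds, as in the original code
    except TypeError as err:
        print("float slice fails:", err)
    print(fill_targets(row, 1.0, [0.1, 0.2, 0.3, 0.4]))
```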

Error 3:

Fix: this error means the downloaded VOCdevkit2007 folder is incomplete. Create the missing folders in the corresponding directory.

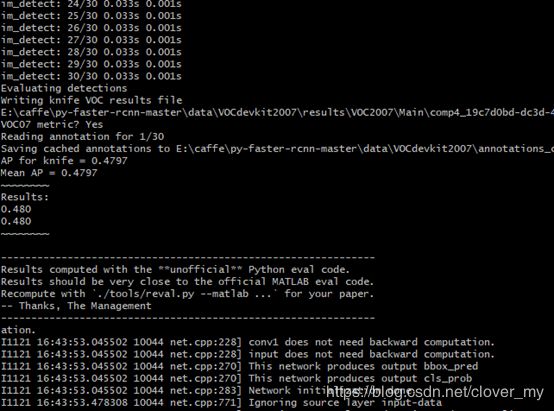
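A quick way to find out which folders are missing is to check the layout before training. The folder list below is an assumption, based on the standard PASCAL VOC 2007 layout plus a results folder for evaluation output; adjust it to whatever path your own error message names. `missing_dirs` is a hypothetical helper.

```python
import os

# Assumed layout, relative to data/VOCdevkit2007.
EXPECTED_DIRS = [
    os.path.join("VOC2007", "Annotations"),
    os.path.join("VOC2007", "ImageSets", "Main"),
    os.path.join("VOC2007", "JPEGImages"),
    os.path.join("results", "VOC2007", "Main"),
]


def missing_dirs(devkit_root, expected=EXPECTED_DIRS, create=False):
    """Return the expected folders that do not exist; optionally create them."""
    missing = []
    for rel in expected:
        path = os.path.join(devkit_root, rel)
        if not os.path.isdir(path):
            missing.append(rel)
            if create:
                os.makedirs(path)
    return missing
```

Calling `missing_dirs(root)` only reports the gaps; passing `create=True` also creates the empty folders.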

⑤ Training results:

3. Testing

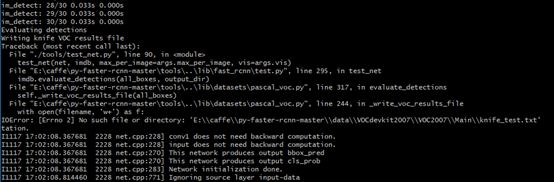

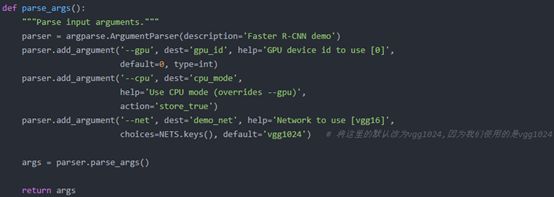

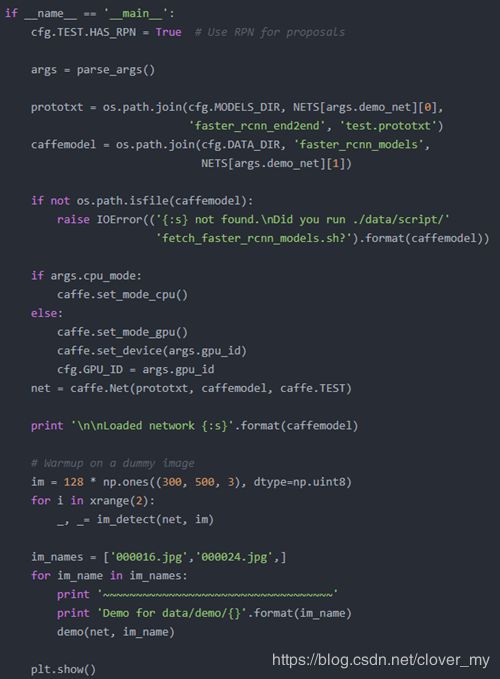

① In py-faster-rcnn-master\tools, copy demo.py, rename the copy to demo_mine.py, and modify it.

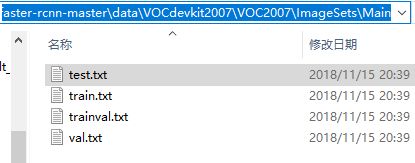

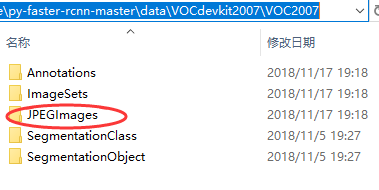

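② In demo_mine.py, fill im_names with the IDs of the images you want to test (pick the IDs from the test set list test.txt), then copy the corresponding images from the JPEGImages folder into py-faster-rcnn-master\data\demo.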
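Picking the IDs and copying the images can be scripted. This is a sketch that assumes test.txt holds one image ID per line and that the images use the .jpg extension; `copy_demo_images` and all of its parameters are hypothetical, so pass in the paths of your own dataset.

```python
import os
import shutil


def copy_demo_images(test_list, jpeg_dir, demo_dir, count=5, ext=".jpg"):
    """Copy the first `count` images listed in test_list into demo_dir.

    Returns the copied file names, ready to paste into im_names.
    """
    with open(test_list) as handle:
        image_ids = [line.strip() for line in handle if line.strip()]
    if not os.path.isdir(demo_dir):
        os.makedirs(demo_dir)
    names = []
    for image_id in image_ids[:count]:
        name = image_id + ext
        shutil.copy(os.path.join(jpeg_dir, name), os.path.join(demo_dir, name))
        names.append(name)
    return names
```

The returned names include the file extension, which is the form the upstream demo.py appears to use for im_names; drop the extension if your demo_mine.py expects bare IDs.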

③ The model trained in step 2 is stored in py-faster-rcnn-master\output\faster_rcnn_end2end\voc_2007_trainval. Copy this caffemodel to py-faster-rcnn-master\data\faster_rcnn_models.

④ Open cmd and run "python demo_mine.py". The detection results are available once the script finishes.