PyTorch Study Notes 4: PyTorch Data Types

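Contents

- PyTorch data types

- Creating tensors

- Converting NumPy data to a tensor:

- Importing data from a Python list:

- uninitialized

- Setting the default type:

- Random initialization: rand/rand_like, randint

- Normal distribution: randn

- Filling with a single value:

- torch.arange(start,end,step)

- Evenly spaced values:

- torch.eye(d1,d2) or torch.eye(num)

- randperm

- Indexing and slicing

- Basic slicing

- Strided sampling:

- Sampling by specific indices

- select by ... : tensor[...]

- select by mask

- take

- Dimension transformations

- View=reshape

- Squeeze/unsqueeze

- unsqueeze

- squeeze

- Transpose/t/permute

- t only works on 2D matrices

- transpose

- permute

- Expand/repeat:

- expand: only dimensions of size 1 can be expanded; -1 keeps a dimension unchanged

- repeat

- Broadcast: automatic expansion

- Concatenation and splitting

- cat

- stack

- split

- chunk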

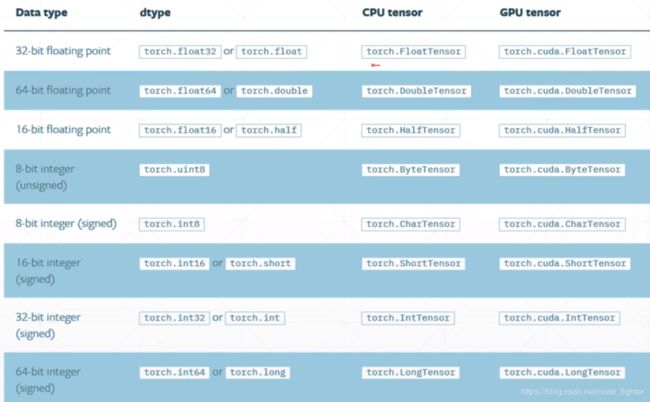

PyTorch data types

| Python | PyTorch |
|---|---|
| int | IntTensor of size () |
| float | FloatTensor of size () |
| int array | IntTensor of size [d1,d2,…] |
| float array | FloatTensor of size [d1,d2,…] |
| string | one-hot or Embedding (Word2Vec, GloVe) |

- The loss is usually a scalar: a 0-dim tensor whose shape is torch.Size([]).

- Dim 1, rank 1: a one-dimensional array.

- Dim 2, rank 2: a two-dimensional array.

- For the matrix $\left[\begin{array}{ccc}1 & 6 & 9 \\ 7 & 9 & 0 \\ 9 & 5 & 0\end{array}\right]$:

- dim : 2

- size/shape:[3,3]
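The relationship between dim and shape listed above can be checked directly. This is a minimal sketch; the variable names `scalar`, `vector`, and `matrix` are chosen here for illustration only.

```python
import torch

scalar = torch.tensor(1.3)             # 0-dim tensor, the typical form of a loss value
vector = torch.tensor([1.0, 2.0])      # 1-dim tensor
matrix = torch.tensor([[1, 6, 9],
                       [7, 9, 0],
                       [9, 5, 0]])     # 2-dim tensor, the matrix from the notes

print(scalar.dim(), scalar.shape)   # 0 torch.Size([])
print(vector.dim(), vector.shape)   # 1 torch.Size([2])
print(matrix.dim(), matrix.shape)   # 2 torch.Size([3, 3])
```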

Creating tensors

Converting NumPy data to a tensor:

- torch.from_numpy(a)
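A small sketch of `torch.from_numpy`, assuming NumPy is installed. It illustrates two properties worth knowing: the tensor keeps the NumPy dtype, and it shares memory with the source array.

```python
import numpy as np
import torch

a = np.array([2.0, 3.3])      # float64 NumPy array
t = torch.from_numpy(a)       # the tensor keeps the NumPy dtype (float64)

a[0] = 10.0                   # modify the NumPy array in place
print(t.dtype)                # torch.float64
print(t[0].item())            # 10.0, because the tensor shares memory with a
```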

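Importing data from a Python list:

- torch.tensor([2.,3.2])

- torch.tensor(): takes existing data (a scalar or a list).

- torch.Tensor() or torch.FloatTensor(): takes the dimensions of the tensor, e.g. torch.FloatTensor(d1,d2,d3). They also accept existing data, but that is easy to confuse with dimensions and is not recommended.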
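The difference between the lowercase and uppercase constructors is easiest to see side by side. The following sketch uses illustrative variable names that are not part of the original notes.

```python
import torch

from_data = torch.tensor([2.0, 3.2])        # lowercase: the argument is data
from_shape = torch.FloatTensor(2, 3)        # uppercase: the arguments are a shape, values are uninitialized
ambiguous = torch.FloatTensor([2.0, 3.2])   # uppercase with a list: interpreted as data

print(from_data.shape)    # torch.Size([2])
print(from_shape.shape)   # torch.Size([2, 3])
print(ambiguous.shape)    # torch.Size([2])
```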

uninitialized

These calls allocate a tensor without initializing it. The memory holds arbitrary values, which may include nan or inf, so overwrite the tensor before using it.

- torch.empty(): the values are arbitrary.

a = torch.empty([2,3])

result: tensor([[0.0000e+00, 0.0000e+00, 3.1214e-22], [7.7492e-43, 0.0000e+00, 0.0000e+00]])

- torch.IntTensor(d1,d2,d3): the values are arbitrary as well.

a = torch.IntTensor(1,2,3)

result: tensor([[[ 0, 0, -922707328], [ 471, 0, 0]]], dtype=torch.int32)

Setting the default type:

torch.set_default_tensor_type(torch.DoubleTensor)

- torch.tensor([1.2,3]).type() returns the tensor's type.
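As a hedged alternative, the sketch below changes the default with `torch.set_default_dtype`, since recent PyTorch releases mark `torch.set_default_tensor_type` as deprecated. It restores float32 at the end so later code is unaffected.

```python
import torch

float_type = torch.tensor([1.2, 3]).type()
print(float_type)                         # torch.FloatTensor (the usual default)

torch.set_default_dtype(torch.float64)    # new float tensors become double precision
double_type = torch.tensor([1.2, 3]).type()
print(double_type)                        # torch.DoubleTensor

torch.set_default_dtype(torch.float32)    # restore the usual default
```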

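Random initialization: rand/rand_like, randint

- a = torch.rand(3,3): samples uniformly from [0, 1)

- torch.rand_like(a): produces a tensor with the same shape as a

- torch.randint(min,max,[d1,d2]): random integers in [min, max)

a = torch.randint(1,10,[3,3])

result: tensor([[4, 3, 4], [4, 4, 1], [3, 7, 2]])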
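The value ranges of these three functions can be verified with a short sketch. No outputs are shown because the values are random; only the ranges and shapes are fixed.

```python
import torch

a = torch.rand(3, 3)                 # uniform samples in [0, 1)
b = torch.rand_like(a)               # same shape and dtype as a
c = torch.randint(1, 10, [3, 3])     # integers in [1, 10): the upper bound 10 is excluded

print(a.shape, b.shape, c.shape)
print(c.min().item() >= 1, c.max().item() <= 9)   # True True
```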

Normal distribution: randn

- Mean 0, variance 1: N(0,1)

a = torch.randn(2,3)

result: tensor([[ 2.3060, 1.1874, -2.4466], [-0.1616, -0.3543, -2.2587]])

- Custom mean and standard deviation: torch.normal(mean=mean,std=std)

a = torch.normal(mean=torch.full([10],0.),std=torch.arange(1,0,-0.1))

result: tensor([ 0.2510, -0.4065, 0.7919, 0.3058, 0.6588, 0.3406, -0.4193, -0.0743,-0.0826, 0.0197])
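A minimal sketch of `torch.normal` with one standard deviation per element. The mean is written as a float tensor (`0.` rather than `0`) on the assumption that a recent PyTorch version is used, where `torch.full` with an integer fill value produces an integer tensor.

```python
import torch

mean = torch.full([10], 0.)               # float tensor of ten zeros
std = torch.arange(1, 0, -0.1)            # 1.0, 0.9, ..., 0.1
samples = torch.normal(mean=mean, std=std)

print(samples.shape)                      # torch.Size([10])
```

Each element of `samples` is drawn from its own distribution, so later elements tend to lie closer to zero because their standard deviation is smaller.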

Filling with a single value:

torch.full([d1,d2,…],num)

torch.full([2,3],7.)

result: tensor([[7., 7., 7.], [7., 7., 7.]])

Scalar: torch.full([],7.)

result: tensor(7.)

Array: torch.full([1],7.)

result: tensor([7.])

torch.arange(start,end,step)
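The notes give no example for `torch.arange`, so here is a short sketch. The key point is that `end` is excluded, as in Python's `range`.

```python
import torch

r1 = torch.arange(0, 10)       # step defaults to 1
r2 = torch.arange(0, 10, 2)    # every second value

print(r1)   # tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])
print(r2)   # tensor([0, 2, 4, 6, 8])
```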

Evenly spaced values:

torch.linspace(min,max,num): returns num evenly spaced values from min to max, including max

torch.linspace(1,10,steps=10)

result: tensor([ 1., 2., 3., 4., 5., 6., 7., 8., 9., 10.])

- torch.logspace(min,max,num)
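To contrast the two functions, the sketch below uses small inputs chosen for illustration. `torch.logspace` treats its first two arguments as exponents of base 10 by default.

```python
import torch

lin = torch.linspace(0, 10, steps=5)   # 0, 2.5, 5, 7.5, 10 (both ends included)
log = torch.logspace(0, 2, steps=3)    # 10**0, 10**1, 10**2 = 1, 10, 100

print(lin)
print(log)
```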

torch.eye(d1,d2) or torch.eye(num)

torch.eye(4,3)

result: tensor([[1., 0., 0.], [0., 1., 0.], [0., 0., 1.], [0., 0., 0.]])

torch.eye(3)

result: tensor([[1., 0., 0.], [0., 1., 0.], [0., 0., 1.]])

randperm

Generates a random permutation of indices, commonly used to shuffle samples and labels together.

torch.randperm(10)

result: tensor([0, 1, 5, 9, 8, 7, 2, 4, 6, 3])

example:

a = torch.rand(2,3) # samples

b = torch.tensor([[1],[0]]) # labels

print(a)

print(b)

idx = torch.randperm(2)

print(a[idx])

print(b[idx])

result:

tensor([[0.5678, 0.4272, 0.5417], [0.0929, 0.9854, 0.2113]])

tensor([[1], [0]])

tensor([[0.0929, 0.9854, 0.2113], [0.5678, 0.4272, 0.5417]])

tensor([[0], [1]])

Indexing and slicing

Basic slicing
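The notes leave this heading without an example. A minimal sketch, assuming a batch of four 3-channel 28x28 images as used in the later sections:

```python
import torch

a = torch.rand(4, 3, 28, 28)

print(a[0].shape)          # torch.Size([3, 28, 28])     first image
print(a[0, 0].shape)       # torch.Size([28, 28])        first channel of the first image
print(a[:2].shape)         # torch.Size([2, 3, 28, 28])  first two images
print(a[:2, :1].shape)     # torch.Size([2, 1, 28, 28])  first channel, dimension kept
print(a[:2, -1:].shape)    # torch.Size([2, 1, 28, 28])  last channel, dimension kept
```

Indexing with an integer removes the dimension, while slicing with a range keeps it.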

Strided sampling:

- a[:,:,0:28:2,0:28:2]

- a[:,:,::2,::2]

Sampling by specific indices

- new_tensor = torch.index_select(tensor,dim,indices)

a = torch.rand(4,3,28,28)

indices = torch.LongTensor([0,2])

torch.index_select(a, 0,indices)
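The resulting shapes make the effect of `dim` clearer. This sketch selects along two different dimensions; the index tensors are arbitrary examples.

```python
import torch

a = torch.rand(4, 3, 28, 28)

picked = torch.index_select(a, 0, torch.tensor([0, 2]))   # images 0 and 2
rows = torch.index_select(a, 2, torch.arange(8))          # first 8 rows of every image

print(picked.shape)                     # torch.Size([2, 3, 28, 28])
print(rows.shape)                       # torch.Size([4, 3, 8, 28])
print(torch.equal(picked, a[[0, 2]]))   # True: same as indexing with a list
```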

select by ... : tensor[...]

a = torch.rand(4,3,28,28)

print(a[...].shape)

print(a[0,...].shape)

print(a[:,1:,...].shape)

print(a[0,...,::2].shape)

result:

torch.Size([4, 3, 28, 28])

torch.Size([3, 28, 28])

torch.Size([4, 2, 28, 28])

torch.Size([3, 28, 14])

select by mask

x = torch.randn(3,4)

result: tensor([[-0.6237, 0.0166, -0.0689, -1.0247], [-0.4650, -0.1222, -1.0476, -0.6294], [ 1.4714, -2.7954, 1.0995, -1.4252]])

mask = x.ge(0.5)

result: tensor([[False, False, False, False], [False, False, False, False], [ True, False, True, False]])

torch.masked_select(x,mask)

result: tensor([1.4714, 1.0995])
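A deterministic version of the same idea, using fixed values so the result is reproducible. The sketch also shows that indexing with the mask gives the same result; the output is always one-dimensional.

```python
import torch

x = torch.tensor([[0.1, 0.7],
                  [0.9, 0.2]])
mask = x.ge(0.5)                          # boolean mask: True where x >= 0.5
selected = torch.masked_select(x, mask)   # flattened 1-D result

print(mask.dtype)                    # torch.bool
print(selected)                      # tensor([0.7000, 0.9000])
print(torch.equal(selected, x[mask]))  # True
```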

take

- Flattens the multi-dimensional tensor to 1D first, then picks values by index.

src = torch.tensor([[4,5,3],[6,7,8]])

torch.take(src,torch.tensor([0,2]))

result: tensor([4, 3])

Dimension transformations

View=reshape

a = torch.rand(4,1,28,28)

a.view(4,28*28)

result: tensor([[0.1783, 0.7374, 0.6013, ..., 0.5440, 0.0668, 0.1665], [0.0388, 0.7934, 0.7743, ..., 0.1795, 0.5644, 0.6409], [0.3364, 0.9005, 0.4570, ..., 0.5787, 0.9630, 0.4332], [0.3976, 0.6089, 0.2346, ..., 0.8186, 0.7931, 0.4657]])

a.reshape(4,28*28)

result: tensor([[0.1783, 0.7374, 0.6013, ..., 0.5440, 0.0668, 0.1665], [0.0388, 0.7934, 0.7743, ..., 0.1795, 0.5644, 0.6409], [0.3364, 0.9005, 0.4570, ..., 0.5787, 0.9630, 0.4332], [0.3976, 0.6089, 0.2346, ..., 0.8186, 0.7931, 0.4657]])
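The two calls give the same result above, but they are not identical in general. The sketch below illustrates one difference under a simple assumption (a transposed 2D tensor): `view` never copies and therefore fails on a non-contiguous tensor, while `reshape` falls back to copying.

```python
import torch

a = torch.rand(4, 1, 28, 28)
flat = a.view(4, 28 * 28)        # no copy: flat shares storage with a
flat[0, 0] = -1.0
print(a[0, 0, 0, 0].item())      # -1.0, the write through flat is visible in a

t = torch.rand(3, 4).t()         # transposed, so no longer contiguous
view_failed = False
try:
    t.view(12)                   # view requires compatible strides
except RuntimeError:
    view_failed = True
copied = t.reshape(12)           # reshape copies when a view is impossible

print(view_failed, copied.shape)   # True torch.Size([12])
```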

Squeeze/unsqueeze

unsqueeze

Note: unsqueeze returns a view. If b is an unsqueezed version of a, modifying b in place also changes a.

a.shape

torch.Size([4, 1, 28, 28])

a.unsqueeze(0).shape

torch.Size([1, 4, 1, 28, 28])

a.unsqueeze(-1).shape

torch.Size([4, 1, 28, 28, 1])

example:

b = torch.rand(32)

f = torch.rand(4,32,14,14)

b = b.unsqueeze(1).unsqueeze(2).unsqueeze(0)

b.shape

: torch.Size([1,32,1,1])

squeeze

b.shape

: torch.Size([1,32,1,1])

b.squeeze().shape

: torch.Size([32])

b.squeeze(0).shape

: torch.Size([32,1,1])

b.squeeze(1).shape

: torch.Size([1,32,1,1])
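Putting unsqueeze and squeeze together, the following sketch adds a per-channel bias to a feature map and confirms that the unsqueezed tensor is a view of the original. The name `b4` is introduced here only to keep the original `b` available.

```python
import torch

f = torch.rand(4, 32, 14, 14)     # feature map
b = torch.rand(32)                # one bias value per channel

b4 = b.unsqueeze(1).unsqueeze(2).unsqueeze(0)   # [32] -> [32,1] -> [32,1,1] -> [1,32,1,1]
out = f + b4                                     # broadcasts over batch, height and width

print(b4.shape)              # torch.Size([1, 32, 1, 1])
print(out.shape)             # torch.Size([4, 32, 14, 14])
print(b4.squeeze().shape)    # torch.Size([32])

b4[0, 0, 0, 0] = 5.0         # b4 is a view of b ...
print(b[0].item())           # 5.0, so b changes as well
```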

Transpose/t/permute

t only works on 2D matrices

a = torch.randn(3,4)

a.t().shape

:torch.Size([4,3])

transpose

transpose only changes the strides, so the result is no longer contiguous in memory; call contiguous() before view().

a.shape

: torch.Size([4,3,32,32])

a.transpose(1,3).shape # swap dimensions 1 and 3

: torch.Size([4,32,32,3])

a1 = a.transpose(1,3).contiguous().view(4,3 * 32 * 32).view(4,32,32,3).transpose(1,3)

torch.all(torch.eq(a,a1)) # True if every element matches

: tensor(True)

Drawback of transpose: it swaps only two dimensions at a time, so a general reordering takes several calls.

b = torch.rand(4,3,28,32) # b c w h

b.transpose(1,3).shape

: torch.Size([4,32,28,3]) # b c w h -> b h w c

b.transpose(1,3).transpose(1,2).shape

: torch.Size([4,28,32,3]) # b c w h -> b h w c -> b w h c

permute

b = torch.rand(4,3,28,32)# b c w h

b.permute(0,2,3,1).shape

: torch.Size([4,28,32,3]) # b c w h -> b w h c
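The sketch below ties the transpose and permute examples together. It checks that a transposed tensor is non-contiguous, that the round trip through `contiguous().view(...)` recovers the original data, and that a single `permute` matches two chained `transpose` calls.

```python
import torch

b = torch.rand(4, 3, 28, 32)

swapped = b.transpose(1, 3)                    # shape [4, 32, 28, 3]
print(swapped.is_contiguous())                 # False

flat = swapped.contiguous().view(4, -1)        # contiguous() copies into a fresh layout
restored = flat.view(4, 32, 28, 3).transpose(1, 3)
print(torch.equal(b, restored))                # True

two_steps = b.transpose(1, 3).transpose(1, 2)  # dims (0,1,2,3) -> (0,3,2,1) -> (0,2,3,1)
one_step = b.permute(0, 2, 3, 1)               # the same reordering in one call
print(one_step.shape)                          # torch.Size([4, 28, 32, 3])
print(torch.equal(two_steps, one_step))        # True
```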

Expand/repeat:

expand: broadcasting, no data is copied

repeat: the data is copied in memory

expand: only dimensions of size 1 can be expanded; -1 keeps a dimension unchanged

a = torch.rand(4,32,14,14)

b.shape

: torch.Size([1,32,1,1])

b.expand(4,32,14,14).shape

: torch.Size([4,32,14,14])

b.expand(-1,32,-1,-1).shape # -1 keeps that dimension unchanged

: torch.Size([1,32,1,1])

repeat

b.shape

: torch.Size([1,32,1,1])

b.repeat(4,32,1,1).shape # 4 copies the first dimension 4 times, 32 copies the second dimension 32 times

: torch.Size([4,1024,1,1])

b.repeat(4,1,1,1).shape

: torch.Size([4,32,1,1])

b.repeat(4,1,32,32).shape

: torch.Size([4,32,32,32])
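The memory difference between the two can be observed through `data_ptr()`, which returns the address of a tensor's first element. This is a sketch for illustration, not a benchmark.

```python
import torch

b = torch.rand(1, 32, 1, 1)

expanded = b.expand(4, 32, 14, 14)    # a view: no new memory is allocated
repeated = b.repeat(4, 1, 14, 14)     # a copy: 4 * 14 * 14 times the data

print(expanded.shape, repeated.shape)             # both torch.Size([4, 32, 14, 14])
print(torch.equal(expanded, repeated))            # True: identical values
print(expanded.data_ptr() == b.data_ptr())        # True: shares storage with b
print(repeated.data_ptr() == b.data_ptr())        # False: separate storage
```

Because an expanded tensor aliases the same memory many times, in-place writes to it are best avoided; use repeat (or clone the expanded tensor) when the result must be modified.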

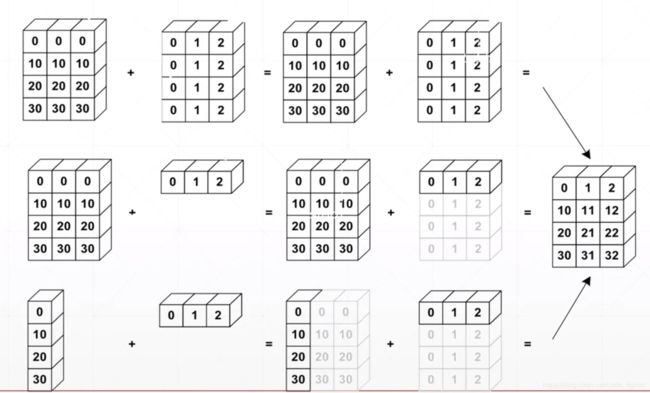

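Broadcast: automatic expansion

- Expands automatically

- without copying data

Given: feature map [4,32,14,14]

bias: [32]

Then:

bias [32] -> [32,1,1] (this step must be done by hand) -> [1,32,1,1] -> [4,32,14,14]

Rules, applied dimension by dimension starting from the last one:

- If the current dimension has size 1, it is expanded to the matching size.

- If the current dimension is missing, a dimension of size 1 is inserted at the front and then expanded to the matching size.

- Otherwise, the tensors are not broadcastable.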

example 1:

[4,32,14,14]

[1,32,1,1] ->[4,32,14,14]

example 2 :

[4,32,14,14]

[14,14]->[1,1,14,14]->[4,32,14,14]

example 3(not broadcasting-able):

[4,32,14,14]

[2,32,14,14]
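The three examples above can be reproduced with a short sketch. Random tensors stand in for real feature maps and biases.

```python
import torch

f = torch.rand(4, 32, 14, 14)
bias = torch.rand(32)

out1 = f + bias.view(32, 1, 1)        # [32,1,1] -> [1,32,1,1] -> [4,32,14,14]
out2 = f + torch.rand(14, 14)         # [14,14] -> [1,1,14,14] -> [4,32,14,14]

try:
    f + torch.rand(2, 32, 14, 14)     # dim 0 is 4 vs 2 and neither is 1
    broadcast_failed = False
except RuntimeError:
    broadcast_failed = True

print(out1.shape, out2.shape)         # both torch.Size([4, 32, 14, 14])
print(broadcast_failed)               # True
```

Adding `bias` directly without the manual reshape would also fail, because its only dimension (32) would be matched against the last dimension of the feature map (14).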

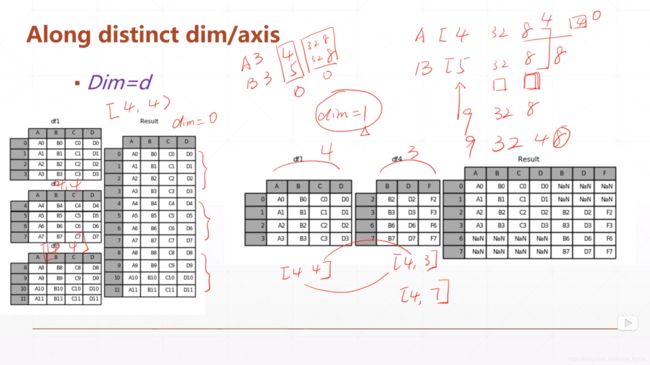

Concatenation and splitting

cat

a1 = torch.rand(4,3,32,32)

a2 = torch.rand(5,3,32,32)

torch.cat([a1,a2],dim=0).shape

: torch.Size([9,3,32,32])

a2 = torch.rand(4,1,32,32)

torch.cat([a1,a2],dim=0).shape

: Sizes of tensors must match except in dimension 0

torch.cat([a1,a2],dim=1).shape

: torch.Size([4,4,32,32])

a1 = torch.rand(4,3,16,32)

a2 = torch.rand(4,3,16,32)

torch.cat([a1,a2],dim=2).shape

:torch.Size([4,3,32,32])

stack

All tensors must have exactly the same shape; stack inserts a new dimension at position dim.

a1 = torch.rand(4,3,16,32)

a2 = torch.rand(4,3,16,32)

torch.stack([a1,a2],dim=2).shape

:torch.Size([4,3,2,16,32])

a = torch.rand(32,8)

b = torch.rand(32,8)

torch.stack([a,b],dim=0).shape

:torch.Size([2,32,8])
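To make the contrast between cat and stack concrete, this sketch applies both to the same pair of tensors. The scenario (two classes of 32 students with 8 scores each) is a hypothetical reading added for illustration.

```python
import torch

a = torch.rand(32, 8)    # e.g. class 1: 32 students, 8 scores each
b = torch.rand(32, 8)    # e.g. class 2, same layout

merged = torch.cat([a, b], dim=0)      # joins along an existing dimension
stacked = torch.stack([a, b], dim=0)   # creates a new leading dimension

print(merged.shape)                    # torch.Size([64, 8])
print(stacked.shape)                   # torch.Size([2, 32, 8])
print(torch.equal(stacked[1], b))      # True: each input stays intact as one slice
```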

split

b = torch.rand(3,32,8)

a1,a2 = b.split([2,1],dim=0)

a1.shape

:torch.Size([2,32,8])

a2.shape

:torch.Size([1,32,8])

a1,a2,a3 = b.split(1,dim=0)

chunk

chunk by num

b = torch.rand(8,32,8)

a1,a2 = b.chunk(2,dim=0) # 2 chunks, each of shape [4,32,8]
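The difference between the two is what the argument means: split takes the size of each piece (or a list of sizes), while chunk takes the number of pieces. A minimal sketch with illustrative variable names:

```python
import torch

b = torch.rand(8, 32, 8)

by_sizes = b.split([6, 2], dim=0)    # explicit sizes: 6 and 2
by_length = b.split(3, dim=0)        # pieces of length 3; the last one is shorter
by_count = b.chunk(4, dim=0)         # 4 equal pieces

print([p.shape[0] for p in by_sizes])    # [6, 2]
print([p.shape[0] for p in by_length])   # [3, 3, 2]
print([p.shape[0] for p in by_count])    # [2, 2, 2, 2]
```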