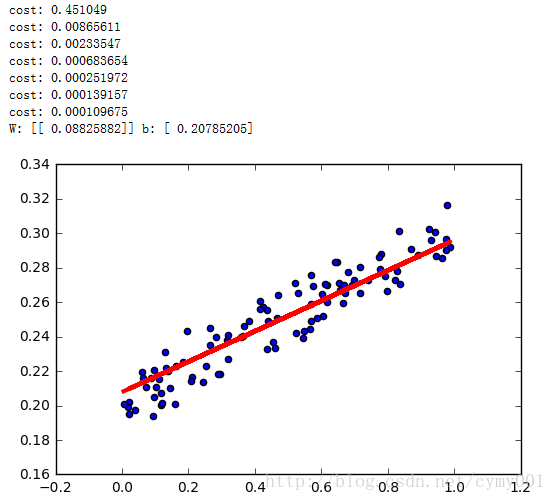

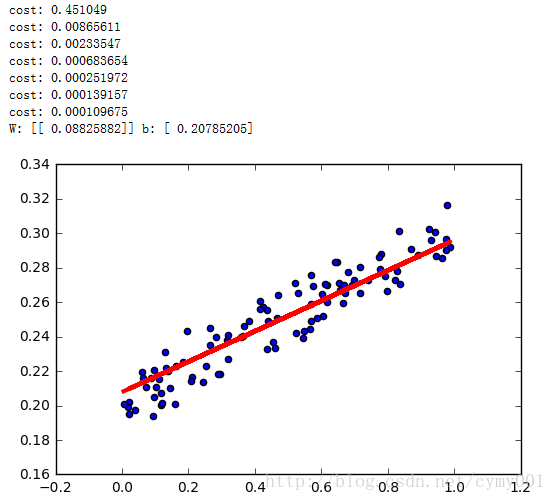

Linear regression

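    import keras
    import numpy as np
    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.layers import Dense

    # Assumed training data (not shown in these notes): y = 0.1 * x + 0.2 plus noise
    x_data = np.random.rand(100)
    noise = np.random.normal(0, 0.01, x_data.shape)
    y_data = x_data * 0.1 + 0.2 + noise

    # A single Dense unit with one input is the linear model y = W * x + b
    model = Sequential()
    model.add(Dense(units=1, input_dim=1))
    model.compile(optimizer='sgd', loss='mse')

    # Train on the whole data set as one batch; print the loss every 500 steps
    for step in range(3001):
        cost = model.train_on_batch(x_data, y_data)
        if step % 500 == 0:
            print('cost:', cost)

    # Learned slope and intercept
    W, b = model.layers[0].get_weights()
    print('W:', W, 'b:', b)

    # Plot the data points and the fitted line
    y_pred = model.predict(x_data)
    plt.scatter(x_data, y_data)
    plt.plot(x_data, y_pred, 'r-', lw=3)
    plt.show()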
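To show what the training loop above amounts to for a single `Dense` unit, here is a minimal sketch of full-batch gradient descent on the mean squared error, written with the standard library only. The data (`y = 0.1 * x + 0.2` plus noise), the learning rate of 0.1, and the helper names `mse` and `fit` are assumptions made for illustration; they are not taken from the notes or from Keras.

```python
import random

random.seed(0)

# Hypothetical data: y = 0.1 * x + 0.2 plus small Gaussian noise.
xs = [random.random() for _ in range(100)]
ys = [0.1 * x + 0.2 + random.gauss(0, 0.01) for x in xs]


def mse(w, b):
    """Mean squared error of the line y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(steps=3001, lr=0.1):
    """Full-batch gradient descent on the MSE, starting from w = b = 0."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean((w * x + b - y)^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = fit()
print('W:', w, 'b:', b, 'cost:', mse(w, b))
```

The recovered `w` and `b` should land close to the slope and intercept used to generate the data, which is the same check the `print('W:', W, 'b:', b)` line performs on the Keras model.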

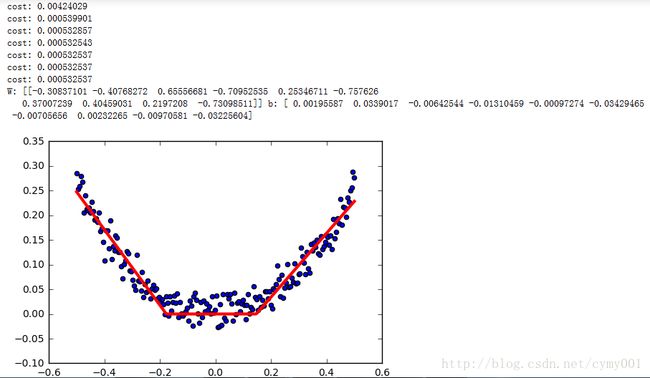

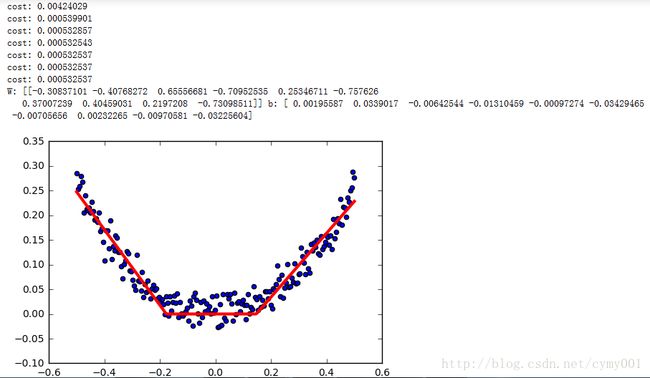

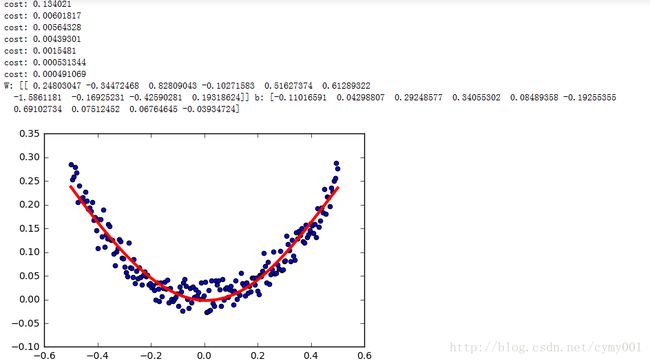

Nonlinear regression (nonlinear activation function)

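    from keras.models import Sequential
    from keras.layers import Dense, Activation
    from keras.optimizers import SGD
    import numpy as np
    import matplotlib.pyplot as plt

    np.random.seed(0)

    # 200 evenly spaced inputs; targets follow y = x^2 plus Gaussian noise
    x_data = np.linspace(-0.5, 0.5, 200)
    noise = np.random.normal(0, 0.02, x_data.shape)
    y_data = np.square(x_data) + noise

    # 1 -> 10 -> 1 network with tanh after each Dense layer
    model = Sequential()
    model.add(Dense(units=10, input_dim=1))
    model.add(Activation('tanh'))
    model.add(Dense(units=1))
    model.add(Activation('tanh'))

    # Newer Keras releases use learning_rate in place of the older lr argument
    defsgd = SGD(learning_rate=0.3)
    model.compile(optimizer=defsgd, loss='mse')

    # Train on the whole data set as one batch; print the loss every 500 steps
    for step in range(3001):
        cost = model.train_on_batch(x_data, y_data)
        if step % 500 == 0:
            print('cost:', cost)

    # Weights and biases of the first (hidden) Dense layer
    W, b = model.layers[0].get_weights()
    print('W:', W, 'b:', b)

    # Plot the data points and the fitted curve
    y_pred = model.predict(x_data)
    plt.scatter(x_data, y_data)
    plt.plot(x_data, y_pred, 'r-', lw=3)
    plt.show()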
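The reason this section needs an activation function can be checked by hand: two stacked `Dense` layers with no activation reduce to a single slope and intercept, so they cannot fit a parabola. The sketch below is a plain-Python forward pass of a 1 -> 10 -> 1 network with randomly chosen, untrained weights. The names `forward`, `identity`, `slope` and `intercept` are illustrative, and the `tanh` on the output layer is left out to keep the comparison simple.

```python
import math
import random

random.seed(0)

HIDDEN = 10  # same hidden width as the model above

# Randomly chosen parameters for a 1 -> 10 -> 1 network (illustrative values).
w1 = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b1 = [random.uniform(-1, 1) for _ in range(HIDDEN)]
w2 = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b2 = random.uniform(-1, 1)


def forward(x, activation):
    """Hidden layer with the given activation, then a linear output layer."""
    hidden = [activation(w * x + b) for w, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


def identity(z):
    return z


# With no activation the two layers collapse to one slope and one intercept.
slope = sum(a * c for a, c in zip(w1, w2))
intercept = sum(b * c for b, c in zip(b1, w2)) + b2

for x in (-0.5, 0.0, 0.5):
    print(x, forward(x, identity), slope * x + intercept, forward(x, math.tanh))
```

The second and third printed columns agree for every `x`, while the `tanh` column does not follow a straight line; that curvature is what lets the trained model follow `y = x^2`.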

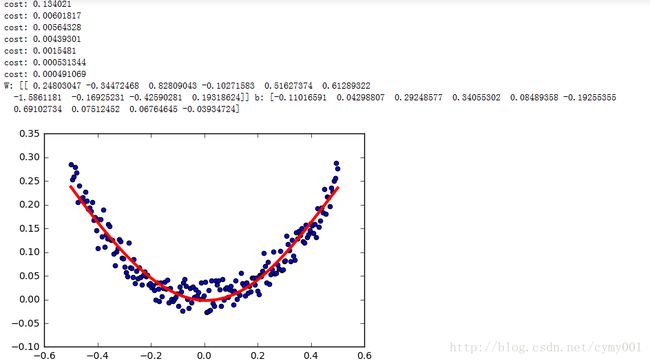

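    from keras.models import Sequential
    from keras.layers import Dense
    from keras.optimizers import SGD

    # Same 1 -> 10 -> 1 network, with ReLU passed through the activation argument
    model = Sequential()
    model.add(Dense(units=10, input_dim=1, activation='relu'))
    model.add(Dense(units=1, activation='relu'))

    # Newer Keras releases use learning_rate in place of the older lr argument
    defsgd = SGD(learning_rate=0.3)
    model.compile(optimizer=defsgd, loss='mse')

    # Train on the whole data set as one batch; print the loss every 500 steps
    for step in range(3001):
        cost = model.train_on_batch(x_data, y_data)
        if step % 500 == 0:
            print('cost:', cost)

    # Weights and biases of the first (hidden) Dense layer
    W, b = model.layers[0].get_weights()
    print('W:', W, 'b:', b)

    # Plot the data points and the fitted curve
    y_pred = model.predict(x_data)
    plt.scatter(x_data, y_data)
    plt.plot(x_data, y_pred, 'r-', lw=3)
    plt.show()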
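One property of the ReLU output layer is worth checking against this data: ReLU never returns a negative value, yet `y = x^2 + noise` dips below zero near `x = 0`. The sketch below regenerates comparable data with the standard library instead of NumPy, so the exact values differ from `x_data` and `y_data` above, and computes the part of the MSE that a non-negative prediction can never remove. The names `relu`, `negative_targets` and `floor_error` are illustrative.

```python
import random

random.seed(0)


def relu(z):
    """ReLU clamps negative inputs to zero and passes the rest through."""
    return max(0.0, z)


# 200 evenly spaced inputs in [-0.5, 0.5] with targets y = x^2 plus noise.
n = 200
xs = [-0.5 + i / (n - 1) for i in range(n)]
ys = [x * x + random.gauss(0, 0.02) for x in xs]

# Targets that a ReLU output unit cannot reach.
negative_targets = [y for y in ys if y < 0]
print('targets below zero:', len(negative_targets))

# For y < 0 the closest non-negative prediction is 0, leaving an error of y^2.
floor_error = sum(y * y for y in negative_targets) / n
print('lower bound on the MSE with a ReLU output:', floor_error)
```

The bound is small for this noise level, so it mainly explains why the ReLU fit can flatten out at zero around the bottom of the parabola.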