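- Machine Learning

七指琴魔御清绝

Big data learning

Original link: http://blog.csdn.net/zhoubl668/article/details/42921187. If you want to repost this, you do not need to contact me, but please keep the original link, because this project is ongoing and is updated from time to time. I hope readers can learn more from it. "Brief History of Machine Learning". Description: an article on the history of machine learning. It is comprehensive, covering perceptrons, neural networks, decision trees, SVM, Ada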

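- LWC-KD: graph-structure-aware contrastive knowledge distillation for incremental learning in recommender systems

宇直不会放弃

GKD-Middlelayer, artificial intelligence, python, chatgpt, GPU compute, deep learning, machine learning, neural networks

LWC-KD: "Graph Structure Aware Contrastive Knowledge Distillation for Incremental Learning in Recommender Systems" (2021), by Yuening Wang, Yingxue Zhang and Mark Coates. Paper: https://dl.acm.org/doi/10.1145/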

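- Week 5 homework: Chapter 10, "Try It Yourself"

hongsqi

10-1 Learning Python. Open a new file in your text editor and write a few lines summarizing what you have learned about Python so far, starting each line with "In Python you can". Save the file as learning_python.txt in the same directory as the programs written for this chapter's exercises. Write a program that reads the file and prints what you wrote three times: the first time by reading the entire file, the second time by looping over the file object, and the third time with the lines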
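The exercise above can be sketched roughly as follows. This is a minimal illustration, not the author's solution: the sample text and the helper names `read_whole`, `read_by_looping` and `read_into_list` are made up here, the file is written to a temporary directory so the snippet runs on its own, and the third variant (storing the lines in a list) is an assumption, because the excerpt is cut off before it describes that step.

```python
import tempfile
from pathlib import Path

# Hypothetical sample content; the exercise asks you to write your own lines.
SAMPLE = (
    "In Python you can store data in lists.\n"
    "In Python you can loop over a file object.\n"
    "In Python you can read a whole file at once.\n"
)


def read_whole(path):
    """Read the entire file into one string."""
    with open(path, encoding="utf-8") as f:
        return f.read()


def read_by_looping(path):
    """Collect the lines by iterating over the file object."""
    lines = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            lines.append(line.rstrip())
    return lines


def read_into_list(path):
    """Store the lines in a list inside the with block, then use them outside it."""
    with open(path, encoding="utf-8") as f:
        lines = f.readlines()
    return [line.rstrip() for line in lines]


# Write the sample to a temporary directory so the sketch is self-contained.
path = Path(tempfile.mkdtemp()) / "learning_python.txt"
path.write_text(SAMPLE, encoding="utf-8")

print(read_whole(path))
for line in read_by_looping(path):
    print(line)
for line in read_into_list(path):
    print(line)
```

Stripping the trailing newline in the two line-based variants avoids the blank line that `print` would otherwise add after every line.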

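- The complete guide to naming Python variables: pitfalls for beginners and tips from the experts

科雷learning

Learning AI, python programming, python, programming languages

学习AI 科雷learning, 2025-03-10 22:19, Jiangsu. I. Introduction: the "dark art" of naming variables. In Python programming, naming a variable looks simple but hides subtleties that keep tripping up beginners. This article walks through Python's variable naming rules to take you from novice to naming expert. II. Basic rules: a mnemonic that will save you. The beginner's confusion. Beginner: (holding up code covered in errors) Look at this! All I wrote was 3D效果=True, and Python says it is a syntax error? The expert's answer. Expert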
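A quick way to see why `3D效果=True` is rejected is to ask Python itself whether a string is a legal name. The sketch below is only an illustration; the helper `is_valid_name` is a hypothetical name, not something from the article. It combines `str.isidentifier()` with `keyword.iskeyword()`, because reserved words pass the first check but still cannot be used as variable names.

```python
import keyword


def is_valid_name(name):
    """Return True if `name` can be used as a Python variable name."""
    return name.isidentifier() and not keyword.iskeyword(name)


# A name may not start with a digit, and reserved words are off limits.
for candidate in ["3D效果", "效果3D", "effect_3d", "class", "_tmp"]:
    print(candidate, is_valid_name(candidate))
```

Moving the digit away from the first position (`效果3D` or `effect_3d`) is enough to make the name legal.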

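- Introduction to XGBClassifier

浊酒南街

#Algorithms, machine learning, XGB

Contents: Preface; Function overview; Example. Preface: XGBClassifier is the class in the XGBoost library used for classification tasks. XGBoost is an efficient and flexible implementation of gradient-boosted decision trees (GBDT) that performs well in many machine learning competitions and is especially good at handling tabular data. Function overview: XGBClassifier(max_depth=3,learning_rate=0.1,n_estimators=100,objective='binary:logistic',boo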

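- [LLM] Deploying DeepSeek-R1-Distill-Qwen and calling it through an API

油泼辣子多加

LLMs in practice, algorithms, gpt, langchain, artificial intelligence

DeepSeek-R1-Distill-Qwen is a lightweight large language model developed by the Chinese AI company DeepSeek, obtained by knowledge distillation on top of Alibaba's Qwen model series. Now that the model is open source, we can deploy it and call it locally through an API. 1. Deployment environment. The base environment used in this article is: PyTorch 2.5.1, Python 3.12 (ubuntu22.04), Cuda 12.4, GPU RTX 3090 (24GB) * 1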

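- Differences between deep learning and machine learning

The god of big data

Tutorial, deep learning, machine learning, artificial intelligence

I. Fundamental differences in technical architecture. Traditional machine learning is built on statistics and mathematical optimization, and its core technique is to build models on hand-designed feature engineering. Take the support vector machine (SVM): the algorithm maps data into a high-dimensional space through a kernel function, but feature extraction depends entirely on the engineer's domain knowledge. This "hand-crafted features + shallow model" structure tends to hit a performance ceiling on complex nonlinear relationships. Deep learning, as machine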

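- OpenCV CUDA samples: programming with OpenCV and CUDA together

weixin_44602056

opencv, C++

Reposted from https://www.fuwuqizhijia.com/linux/201704/70863.html and saved here only for later reference. I. Using the GPU module provided by OpenCV. OpenCV already provides many GPU functions, and using its GPU module directly is enough to accelerate most image-processing operations. The advantage of this approach is that it is simple to use: GpuMat manages the data transfer between CPU and GPU, and there is no need to worry about kernel function call param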

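- Efficient image processing with CUDA and OpenCV: a comprehensive guide

快撑死的鱼

C++ (C) algorithms revealed, opencv, image processing, artificial intelligence

Preface. In modern computer vision, the demand for image processing keeps growing. Whether in autonomous driving, security surveillance or medical image analysis, image processing plays a vital role. However, it is computationally heavy and often needs substantial computing power to stay real-time and efficient. Fortunately, CUDA and OpenCV give us an efficient solution. This article explains in detail how to combine CUDA with OpenCV and use the GPU's powerful compu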

- CUDA + OpenCV programming on Linux: CMakeLists.txt

maxruan

programming, image processing, CUDA, opencv, linux, c++, cuda

    CMAKE_MINIMUM_REQUIRED(VERSION 2.8)
    PROJECT(medianFilterGPU)
    # CUDA package
    FIND_PACKAGE(CUDA REQUIRED)
    INCLUDE(FindCUDA)
    # CUDA include directories
    INCLUDE_DIRECTORIES(/usr/local/cuda/include)
    # OpenCV package
    FIND_P

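- Installing PyTorch on Win11 with CUDA 12.1 and avoiding the pitfalls: good news for deep learning developers

郁云爽

[Download] Installing PyTorch on Win11 with CUDA 12.1 and avoiding the pitfalls. This resource provides detailed installation steps and solutions to common problems for users installing PyTorch on Windows 11 with CUDA 12.1. Whether you are a beginner or an experienced developer, this guide will help you complete the PyTorch installation smoothly and avoid the common pitfalls. Project address: htt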

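- PyBroker: a guide to machine-learning-driven algorithmic trading in Python

任铃冰Flourishing

pybroker: Algorithmic Trading in Python with Machine Learning. Project address: https://gitcode.com/gh_mirrors/py/pybroker I. Project directory structure. The PyBroker project follows a clear organizational structure to simplify managing and maintaining its source code. The main directories of the repository are: ├── docs #文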

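- CUDA programming: using OpenCV together with CUDA

byxdaz

CUDA, opencv, artificial intelligence, computer vision

Combining OpenCV with CUDA can significantly improve image-processing performance. I. Version matching and environment setup. CUDA and OpenCV version compatibility: OpenCV versions differ in their CUDA support. For example, OpenCV 4.5.4 needs to be paired with CUDA 10.02, while the newer OpenCV 4.8.0 requires a later CUDA version. Note that some modules (such as the cascade detector) may no longer be supported after a CUDA version update.

| OpenCV version | CUDA version |
| --- | --- |
| 4.5.x | CUDA 11.x or earlier recommended |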

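- The three great schools of machine learning in plain words: supervised, unsupervised and reinforcement learning

安意诚Matrix

Machine learning notes, machine learning, artificial intelligence

Using the world of martial-arts fiction as a metaphor, this article systematically describes the three paradigms of machine learning. Supervised learning (the Shaolin school) models precisely from labeled data and excels at prediction tasks such as image classification. Unsupervised learning (the Xiaoyao school) discovers hidden patterns through the data's own organization and shines in settings such as generative adversarial networks (GANs). Reinforcement learning (the Ming cult) optimizes a policy by interacting with a dynamic environment and drives breakthrough applications such as AlphaGo and autonomous driving. The article blends technical depth with martial-arts flavor, explaining the "inner techniques" (mathematical formulas and code implementations) of core algorithms such as CNN, PCA and Q-learning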

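- Python network programming: UDP and TCP (IP addresses, ports, socket programming)

Felix-微信(Felixzfb)

network programming, TCP, UDP

Advanced Python syntax, network programming: advanced study notes. Example code in the project: https://github.com/FangbaiZhang/Python_advanced_learning/tree/master/03_Python_network_programming 1 Network communication. A network links multiple parties together so that data can be passed between them. Network programming means letting software on different computers exchange data, that is, communication between processes. 1.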

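- Paper reading notes: Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware

寻丶幽风

Paper reading notes, paper reading, notes, artificial intelligence, deep learning, robotics

The ALOHA paper. ALOHA addresses two problems: errors in the policy can accumulate over time, and human demonstrations can be non-stationary. It proposes the ACT (Action Chunking with Transformers) method. Action Chunking: in imitation learning, compounding error is the main cause of task failure. Specifically, when the agent meets a situation at test time that was not seen in the training set, it may produce prediction errors. These errors accumulate step by step, driving the agent into unknown states and eventually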

- Setting up CUDA and PyTorch in PyCharm (version matching, installation and setup, a complete step-by-step tutorial)_cuda12(1)

2401_84557821

programmer, pycharm, pytorch, ide

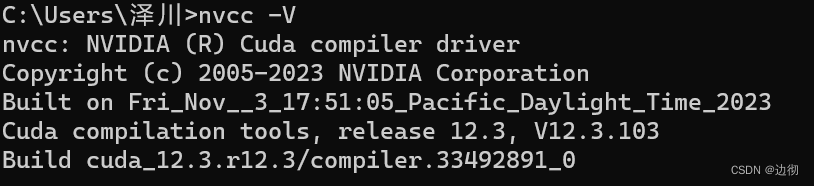

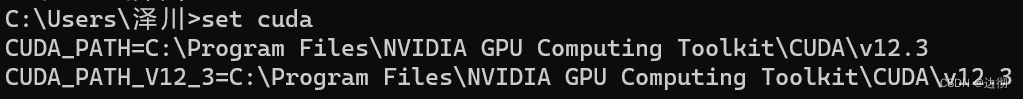

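To check the CUDA version, enter set cuda to view the environment variables. If the output matches the two screenshots above, the download succeeded!

## II. Installing PyTorch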

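- [Official OpenAI course] Lesson 1: guidelines for constructing GPT prompts

euffylee

ChatGPT Prompt official course, gpt, prompt, artificial intelligence

Welcome to ChatGPT Prompt Engineering for Developers! This course teaches you how to use large language models (LLMs) effectively through the OpenAI API to build powerful applications. Taught by Isa Fulford of OpenAI and Andrew Ng of DeepLearning.AI, it looks closely at how LLMs work, presents best practices for prompt engineering, and demonstrates the use of LLM APIs in a variety of applications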

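- Running Python in a Jupyter notebook with parameters

HackerTom

tinkering, python, jupyter, notebook

Updates (2019.8.14 19:53): I tried this method for real before dinner, and when I came back it did not seem to work. After one set of parameters finished, the GPU memory it had occupied apparently was not released when the next set started, so several of the later results in the notebook failed with cuda out of memory. I have now changed the approach: put the notebook code in a Python file and run that file from the command line, for example: #autorun.py import os #print(os.get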

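- Building llama_cpp_python on Win11 with cuda128 for RTX 30/40/50

System_sleep

llama, python, windows, cuda

GeForce 50xx series GPUs require cuda128 at minimum, and the official llama_cpp_python source only provides a CPU build with no CUDA build, so I compiled an RTX 30xx/40xx/50xx build myself from the 0.3.5 source. 1. Prerequisites 1. Visit https://developer.download.nvidia.cn/compute/cuda/12.8.0/local_installers/cuda_12.8.0_571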

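- MapReduce: the cornerstone of distributed parallel programming

JAZJD

mapreduce, distributed, big data

Contents: Overview; Distributed parallel programming; Distributed parallel programming models; Distributed parallel programming frameworks; Introduction to the MapReduce model; The Map and Reduce functions; The Map function; Inputs and outputs of the Map function; Common operations of the Map function; The Reduce function; Inputs and outputs of the Reduce function; Common operations of the Reduce function; Workflow overview; The stages: 1. Input splitting, 2. Map stage, 3. Shuffle stage, 4. Reduce stage; Summary of the MapReduce workflow; The Shuffle process in detail: 1. Partitioning (Partitioni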
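The Map, Shuffle and Reduce stages listed in that outline can be illustrated with a single-process word count. This is only a toy sketch of the programming model, assuming plain Python lists stand in for input splits. It is not how Hadoop or any real framework is invoked, and the function names are invented for the example.

```python
from collections import defaultdict


def map_fn(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_fn(key, values):
    """Reduce: combine the values of one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three stages in order on an in-memory list of lines."""
    mapped = [pair for line in lines for pair in map_fn(line)]
    grouped = shuffle(mapped)
    return dict(reduce_fn(key, values) for key, values in grouped.items())


counts = word_count(["map reduce map", "shuffle reduce"])
print(counts)
```

In a real cluster the map calls run in parallel on different input splits and the shuffle moves data between machines; here everything runs sequentially so that the data flow is easy to follow.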

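- When deep learning meets Zen: reinterpreting the DQN algorithm with Eastern wisdom

带上一无所知的我

An agent's self-cultivation: a reinforcement learning guide, deep learning, algorithms, artificial intelligence, DQN

"Good code is like a landscape painting: it needs both fine brushwork and expressive blank space." (a developer who had an epiphany in front of a terminal) DQN combines the Q-Learning algorithm with deep neural networks: it approximates the Q-value function with a neural network to address the "curse of dimensionality" that traditional Q-Learning faces in high-dimensional state spaces. Introduction: where code meets Zen. One night, while debugging code until three in the morning, I suddenly realized that the process of reinforcement learning is strikingly similar to Buddhist practice. The agent explores the environment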
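For orientation, here is the tabular Q-learning update that DQN replaces with a neural-network approximation. It is a minimal sketch under stated assumptions: the learning rate `ALPHA`, the discount `GAMMA`, the state and action names and the helper `q_update` are all illustrative choices and do not come from the article.

```python
from collections import defaultdict

ALPHA = 0.5  # learning rate (assumed value)
GAMMA = 0.9  # discount factor (assumed value)


def q_update(q, state, action, reward, next_state, actions):
    """Apply one tabular Q-learning update and return the new Q(s, a)."""
    # Value of the best action available in the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Temporal-difference target: immediate reward plus discounted future value.
    td_target = reward + GAMMA * best_next
    q[(state, action)] += ALPHA * (td_target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
actions = ["left", "right"]
q_table[("s1", "right")] = 2.0

new_value = q_update(q_table, "s0", "right", 1.0, "s1", actions)
print(new_value)
```

The table needs one entry per (state, action) pair, which is what becomes infeasible in high-dimensional state spaces; DQN instead trains a network to output the Q-values.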

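- python | flower, a powerful Python library!

双木的木

python extended learning, python libraries, python, programming languages, computer vision, artificial intelligence, algorithms, federated learning, deep learning

This article comes from the WeChat public account "python" and is shared for academic purposes only; it will be removed on request if it infringes. Original link: flower, a powerful Python library! Hello everyone, today I am sharing a powerful Python library: flower. Github address: https://github.com/mher/flower As the use of machine learning models grows, federated learning (FL) is becoming an important direction. Federated learning allows multiple clients, without sharing raw data,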

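- Exploring Deeplearning4j (DL4J) in depth: a comprehensive guide to deep learning in Java

软件职业规划

java, deep learning, programming languages

I. Overview of the DL4J framework. Deeplearning4j (DL4J) is an open-source deep learning framework designed for Java and Scala that runs on the Java Virtual Machine (JVM). It is developed and maintained by Skymind and aims to bring deep learning to large-scale commercial applications. DL4J supports many deep learning models, including convolutional neural networks (CNN), recurrent neural networks (RNN) and long short-term memory networks (LSTM). Since its first release in 2014, DL4J has become, in the field of deep learning for Java,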

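- GPU programming in practice 03: a quick-start CUDA example, with the GPU's performance advantage measured

anda0109

CUDA, parallel programming, linux, operations, servers

In the previous part, "GPU Programming Guide 02: a quick-start CUDA example", we completed an example that uses the GPU for the four arithmetic operations. If you have not read it, go through that part first: its code is commented in detail, and after finishing it you will have the basics of GPU programming. In that example we compute on the GPU and also on the CPU, then compare the two results to make sure our code produces the correct output. If the CPU can do the computation, why use the GPU? Because the GPU can compute in parallel, and compu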

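- DeepSeek R1-32B medical LLM: a complete fine-tuning walkthrough (full code)

Allen_LVyingbo

efficient programming and R&D for healthcare, healthcare, artificial intelligence, python

    DeepSeek R1-32B fine-tuning guide
    ├── 1. Environment preparation
    │   ├── 1.1 Hardware configuration
    │   │   ├─ Full-parameter fine-tuning: 4*A100 80GB
    │   │   └─ LoRA fine-tuning: single 24GB GPU
    │   ├── 1.2 Software dependencies
    │   │   ├─ PyTorch 2.1.2+CUDA
    │   │   └─ Unsloth/ColossalAI
    │   └── 1.3 Model loading
    │       ├─ 4-bit quantized loading
    │       └─ FlashAttention2 acceleration
    ├── 2. Dataset construction
    │   ├── 2.1 Data sources
    │   │   ├─ CMD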

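- Note | adding system environment variables with Python's os module

极智视界

python, linux, os, system environment variables

Adding system environment variables in Python through os:

    # Set OS environment variables
    os.environ['CUDA_VISIBLE_DEVICES']='0'
    os.environ['p2c']='1'
    os.environ['p2o']='0'
    os.environ['io']='0'
    # Get OS environment variables
    os.getenv('CUDA_VISIBLE_DEVICES')
    os.getenv('p2c')
    ...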
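A self-contained version of the same idea is sketched below, with a read-back and a cleanup step added for illustration. The variable name `P2C_DEMO_UNSET` is hypothetical and only serves to show the default value of `os.getenv`.

```python
import os

# Set a variable; names and values must be strings.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Read it back. os.getenv returns None, or the given default, if the name is unset.
visible = os.getenv("CUDA_VISIBLE_DEVICES")
missing = os.getenv("P2C_DEMO_UNSET", "fallback")  # hypothetical, unset name

# Remove it again; pop with a default does not raise if the key is absent.
os.environ.pop("CUDA_VISIBLE_DEVICES", None)

print(visible, missing, "CUDA_VISIBLE_DEVICES" in os.environ)
```

Changes made through `os.environ` affect only the current process and the child processes it starts afterwards; they do not persist in the shell or the system configuration.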

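- GPU programming in practice 01: a minimal CUDA programming handbook

anda0109

CUDA, parallel programming, algorithms

Contents: 1. CUDA basics; 1.1 Thread hierarchy; 1.2 Memory hierarchy; 2. Core elements of CUDA programming; 2.1 Kernel functions; 2.2 Memory management; 2.3 Synchronization mechanisms; 3. CUDA optimization techniques; 3.1 Memory access optimization; 3.2 Using shared memory; 3.3 Thread allocation optimization; 4. Common problems and solutions; 5. Case studies. 1. CUDA basics. 1.1 Thread hierarchy. CUDA organizes threads hierarchically. From smallest to largest: Thread: the most basic unit of execution; every thread executes the same kernel code; via thr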

- Installing DKG with CUDA 12.1 and torch 2.2.1

超级无敌大好人

python

1. Create a Python virtual environment

    set NO_PROXY=*
    conda deactivate
    conda env remove -n findkg
    conda create -n findkg python=3.11
    conda activate findkg
    conda install packaging setuptools
    pip uninstall numpy
    conda install numpy=1.24.3

Note that DKG requires python

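- 34. Binary trees, advanced 3 (balanced binary search trees: AVL trees and their rotations, illustrated)

橘子真甜~

C++ basics/STL/IO, data structures and algorithms, data structures, C++, c++, binary search tree, AVL tree, balanced search tree

⭐Previous article: 34. Binary trees, advanced 3 (C++ STL associative containers: introducing and using set/map) - CSDN blog ⭐Code for this article: c++学习/19.map和set的使用用与模拟 · 橘子真甜/c++-learning-of-yzc - Gitee (gitee.com) ⭐Sections marked with ⭐ are the more important ones. I. Drawbacks of binary search trees. As mentioned in earlier articles, an ordinary binary search tree can in some cases degenerate into a linked list, or the heights of the root's left and right subtrees can differ greatly. When that happens, its sear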

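- Java factory pattern

3213213333332132

java, abstract factory

The factory pattern comes in two forms:

1. Factory method

2. Abstract factory.

My implementation below uses the abstract factory,

which defines a common interface for all concrete product classes.

package 工厂模式;

/**

* Space flight interface

*

* @Description

* @author FuJianyong

* 2015-7-14 02:42:05 PM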

*/

public interface SpaceF

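- nginx rate limiting + testing with python

ronin47

nginx, rate, python

Partly based on: http://www.abc3210.com/2013/web_04/82.shtml

First, some background: I ran into this because the website was attacked and Alibaba Cloud raised an alert, so I decided to limit the request rate rather than block IPs (an IP-blocking scheme is given later). When nginx's connection resources are exhausted it returns status code 502; with the limit from this scheme in place it returns 599, which sets it apart from the normal status codes. The steps are as follows: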

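- Using threads and thread pools in Java

dyy_gusi

ThreadPool, thread, Runnable, timer

Java threads and thread pools

I. Ways to create threads

Multithreading is common in Java. There are two ways to create and use a thread: the first is to have your class implement the Runnable interface, and the second is to have your class extend the Thread class. In fact, the Thread class itself also implements Runnable. Usage examples follow:

1. By implementing the Runnable interface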

- Linux

171815164

linux

ubuntu kernel

http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.1.2-unstable/

Android SDK proxy

mirrors.neusoft.edu.cn 80

Input method and JDK

sudo apt-get install fcitx

su

- Tomcat JDBC Connection Pool

g21121

Connection

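Tomcat 7 dropped the DBCP it used previously and adopted the new Tomcat JDBC Pool as its database connection component. In fact, DBCP has already been abandoned by Hibernate because it has many problems, such as slow updates, numerous bugs, build issues and complex code.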

Tomcat Jdbc P

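- Some thoughts on writing code

永夜-极光

java, essays, reflections

I have been learning Java programming for half a year now. After all that typing I have only written a little over 10,000 lines of code, which is still beginner level. Throughout the learning process, though, I have kept wondering why so many training instructors and online articles want us to memorize code. For example, when we studied ArrayList, the teacher had us first write it out once by following the source code, and then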

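- JVM instruction set

程序员是怎么炼成的

jvm, instruction set

Reposted from: http://blog.csdn.net/hudashi/article/details/7062675#comments

Pushing values onto the top of the stack: the const, ldc, push and load instructions

The const family

These instructions are mainly responsible for pushing simple numeric values onto the top of the stack. (They are used whenever a value is pushed onto the stack from the constant pool or from a local variable.)

0x02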

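- Viewing and querying Oracle character sets, and setting and changing them

aijuans

oracle

This article covers the following topics: how to view and query the Oracle character set, how to change it, and common problems with the Oracle utf8 character set and with character sets in Oracle exp.

I. What is an Oracle character set

An Oracle character set is a collection of symbols used to interpret byte data. Character sets differ in size, and one can be a superset of another. Oracle's national language support architecture lets you store, process and retrieve data in your local language. It makes database tools, error messages, sort order, dates, times,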

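- Handling PNG transparency in IE6

antonyup_2006

css, browser, Firebug, IE

While I was in Shenzhen supporting an on-site go-live, IE8 on my machine kept raising an error when downloading a control, so I had to uninstall IE8 and switch to IE6, which solved that problem. Back from the trip today, I logged into another system still under development with IE6 and ran into a problem with PNG images. Upgrading to IE8 would of course solve it (the developer tools built into IE8 are quite handy for debugging front-end JS and the like, much as Firebug is), but I worked out a fix for the problem anyway.

We know that PNG is an image file storage format;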

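- Common table query commands: advanced query methods (II)

百合不是茶

oracle, paginated queries, grouped queries, combined queries

    ---------------------------------------------------- Grouped queries: group by / having
    -- Average salary and highest salary
    select avg(sal) 平均工资, max(sal) from emp;
    -- Average salary and highest salary for each department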
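The grouped query that the excerpt leads up to can be tried without an Oracle instance. The sketch below uses Python's built-in `sqlite3` with an in-memory table, which is a substitution for illustration: the `emp` rows are made-up sample data, the column set is reduced to what the queries need, and the `having` threshold of 1500 is an arbitrary choice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table emp (ename text, deptno integer, sal real)")
conn.executemany(
    "insert into emp values (?, ?, ?)",
    [("A", 10, 1000.0), ("B", 10, 3000.0), ("C", 20, 1200.0)],  # sample rows
)

# Aggregate over the whole table: one row with the average and the maximum.
overall = conn.execute("select avg(sal), max(sal) from emp").fetchone()

# Aggregate per department; having filters groups after aggregation,
# here keeping only departments whose average salary exceeds 1500.
per_dept = conn.execute(
    "select deptno, avg(sal), max(sal) from emp "
    "group by deptno having avg(sal) > 1500 order by deptno"
).fetchall()

print(overall, per_dept)
conn.close()
```

`where` filters rows before grouping, whereas `having` filters the groups themselves, which is why the condition on `avg(sal)` has to go in `having`.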

- uploadify 3.1 parameters explained

bijian1013

JavaScript, uploadify 3.1

Usage:

The bound UI element is <input id='gallery' type='file'/>, initialized with $("#gallery").uploadify({settings, listed below});

Available settings:

id: jQuery(this).attr('id'), // ID of the bound input

langFile: 'http://ww

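- Mastering Oracle10 SQL Programming (17): using Oracle system packages

bijian1013

oracle, database, plsql

/*

* Using Oracle system packages

*/

--1.DBMS_OUTPUT

--ENABLE: enables calls to the procedures PUT, PUT_LINE, NEW_LINE, GET_LINE and GET_LINES

--Syntax: DBMS_OUTPUT.enable(buffer_size in integer default 20000);

--DISABLE: disables calls to the procedures PUT,PUT_LINE,NEW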

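- [JVM 1] JVM garbage collection logs

bit1129

garbage collection

Recording the JVM's garbage collection logs is very useful for analyzing how garbage collection is behaving and then tuning memory allocation (for the young generation, old generation and permanent generation). The JVM parameters related to garbage collection logs include: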

-XX:+PrintGC

-XX:+PrintGCDetails

-XX:+PrintGCTimeStamps

-XX:+PrintGCDateStamps

-Xloggc

-XX:+PrintGC

通

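- Using Toast

白糖_

toast

In Android, a Toast is a simple message box. The user cannot click it, and it disappears automatically after the display duration that was set.

Creating a Toast

There are two methods for creating a Toast

makeText(Context context, int resId, int duration)

Parameters: context is what the toast is displayed in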

- angular.identity

boyitech

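AngularJS, AngularJS API

angular.identity. Description: a function that returns its first argument. It is mostly used in functional programming. Usage: angular.identity(value); Parameters:

| Param | Type | Details |
| --- | --- | --- |
| value | * | to be returned. |

Returns: the value that was passed in. Example code: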

<!DOCTYPE HTML>

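- java: dividing two integers and finding the repeating cycle

bylijinnan

java

import java.util.ArrayList;

import java.util.List;

public class CircleDigitsInDivision {

/**

* Problem: find the repeating cycle (repetend). If the division is exact, return NULL; otherwise return a char* pointing to the repeating cycle. Write down the approach first. Function prototype: char*get_circle_digits(unsigned k,unsigned j)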
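One common approach to this problem is long division with remainder tracking: as soon as a remainder repeats, the digits produced since its first appearance form the cycle. The Python sketch below illustrates that idea under the problem's assumption of unsigned inputs. It is not the article's Java solution, and the name `circle_digits` is chosen here for illustration.

```python
def circle_digits(k, j):
    """Return the repeating cycle of k / j as a string, or None if it terminates."""
    if j == 0:
        raise ZeroDivisionError("j must be non-zero")
    remainder = k % j
    seen = {}    # remainder -> index of the digit it produced
    digits = []  # fractional digits generated so far
    while remainder != 0 and remainder not in seen:
        seen[remainder] = len(digits)
        remainder *= 10
        digits.append(str(remainder // j))
        remainder %= j
    if remainder == 0:
        return None  # the decimal expansion terminates
    # The cycle starts where the repeated remainder was first seen.
    return "".join(digits[seen[remainder]:])


print(circle_digits(1, 7))
print(circle_digits(1, 4))
```

Because there are at most `j - 1` distinct non-zero remainders, the loop runs fewer than `j` iterations before it either terminates or finds a repeat.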

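- Java: dates, weeks, years

Chen.H

java, C++, c, C#

/**

* Java date operations (end of month, end of week and similar date operations)

*

* @author

*

*/

public class DateUtil {

/** */

/**

* Get the day obtained by adding (or subtracting) a number of days to a given day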

*

* @param date

* @param num

*

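- [College entrance exam and majors] High school graduates are welcome to join the Automatic Control and Computer Applications major

comsci

computers

I do not know whether universities still offer this broad major, Automatic Control and Computer Applications. It is the major I graduated from. It has a great many courses: you study both automatic control and computer science, and the demands on mathematics are fairly high as well. If the major still exists, you are welcome to apply. After graduation the employment options are very broad.

Later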

- Hierarchical Queries

daizj

oracle, recursive queries, hierarchical queries

Hierarchical Queries

If a table contains hierarchical data, then you can select rows in a hierarchical order using the hierarchical query clause:

hierarchical_query_clause::=

start with condi

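- Data migration

daysinsun

data migration

Our company has recently been rebuilding a medical system. The original consisted of two .Net systems, which now need to be rebuilt in Java. Their databases are SQL Server and MySQL, and these now need to be unified into a Hana database. We ran into several problems, but in the end solved all of them with some effort.

1. Hana's data migration tool could migrate the data in principle, but fields of type TEXT in MySQL could not be stored in Hana, so in the end we had to change them to clob.

2. When inserting data, some fields were especially long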

- Learning C: an example of number base representation

dcj3sjt126com

c, basic

Example of number base representation

# include <stdio.h>

int main(void)

{

int i = 0x32C;

printf("i = %d\n", i);

/*

How to use printf

%d prints in decimal

%x or %X prints in hexadecimal

%o prints in octal

*/

return 0;

}
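For comparison, the same value can be rendered in several bases with Python's `format`, whose `d`, `x`, `X` and `o` codes mirror the printf specifiers discussed in the C example. This is a side-by-side sketch rather than part of the original C program; the binary form (`b`) is an addition that printf's classic specifiers do not cover.

```python
i = 0x32C  # the same hexadecimal literal as in the C example

decimal = format(i, "d")    # like %d
hex_lower = format(i, "x")  # like %x
hex_upper = format(i, "X")  # like %X
octal = format(i, "o")      # like %o
binary = format(i, "b")     # base 2, no direct classic printf equivalent

print(f"i = {decimal}")
print(hex_lower, hex_upper, octal, binary)
```

The literal is only a notation: the stored integer is the same regardless of the base used to write or print it.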

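- Coordinating NSTimer and UITableViewCell

dcj3sjt126com

ios

The situation is this:

I have a UITableView in which each cell's content is my custom viewA. viewA contains many animations, and I need to add an NSTimer to drive them. Because of the table view's cell reuse mechanism, the animations I add keep being started and never stop, so more and more of them end up running.

Solution:

Start the animation when configuring the cell, and stop it when the cell stops being displayed

Find the delegate method called when a cell stops being displayed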

- Using case when then in MySQL

fanxiaolong

case, when, then, end

select "主键", "项目编号", "项目名称","项目创建时间", "项目状态","部门名称","创建人"

union

(select

pp.id as "主键",

pp.project_number as &

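- Ehcache (01): introduction and basic operations

234390216

cache, ehcache, introduction, CacheManager, crud

Introduction to Ehcache

Contents

1 CacheManager

1.1 Creating through constructors

1.2 Creating through static methods

2 Cache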

2.1&

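- The easiest introduction to JavaScript closures

jackyrong

JavaScript

http://www.ruanyifeng.com/blog/2009/08/learning_javascript_closures.html

Closures are one of the hard parts of the JavaScript language and also one of its distinguishing features; many advanced applications depend on them.

Below are my study notes, which should be useful for JavaScript beginners.

I. Variable scope

To understand closures, you must first understand JavaScript's special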

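- A four-step optimization plan for raising a website's conversion rate

php教程分享

data structures, PHP, data mining, Google, events

Once a website has been built, the key question in optimizing it is how to raise the overall conversion rate. This is one of the most important aspects of any marketing strategy, and it reflects the site's overall operational strength. The article shares four optimization strategies: survey, research, optimize and evaluate. Together they help users design an effective optimization plan.

For a website developed in PHP, the most critical and difficult part of optimization is how to raise the overall conversion rate. It is one of the most important aspects of any marketing strategy, and raising a site's conversion rate is the result of the site's overall operational strength. Today I will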

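- What is HTML5 WebSocket in web development?

naruto1990

Web, html5, browser, socket

HTML5 is popular right now, yet many learners still do not fully understand what it can do. My favorite web development technology is the rapidly spreading WebSocket API. WebSocket offers a welcome alternative to the Ajax techniques we have been using for the past few years. The new API provides a way to push messages efficiently from the client to the server with a simple syntax. Let's look at the WebSocket API as presented in 6 HTML5 tutorials: it can be used on the client, the serv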

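- Getting started with socket programming: a simple group chat

Everyday都不同

socket, network programming, first look

This is my first contact with socket network programming, and I consulted articles by many more experienced people online. I tried writing some code myself, so here is a record of the process:

Server: (receives messages from clients and prints them)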

public class SocketServer {

private List<Socket> socketList = new ArrayList<Socket>();

public s

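- Interview: the difference between Hashtable and HashMap (with threads)

toknowme

Yesterday I went to an interview at a certain finance company, and during it I was asked:

What is the difference between Hashtable and HashMap? At the time I gave only a partial answer: Hashtable is thread-safe and HashMap is not. Put simply, Hashtable is synchronized and HashMap is not, so HashMap needs extra handling.

Today I wrote an example myself, so let's go straight to the code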

package com.learn.lesson001;

import java

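- A summary of the MVC design pattern

xp9802

design patterns, mvc, frameworks, IOC

As the business logic of web applications came to include increasingly complex formula analysis, calculation and decision support, the client became more and more overloaded. The system's business logic was therefore separated out into a part of its own, and this is how the three-tier structure arose.

Here a "tier" is a logical division.

The three-tier architecture divides the whole system into the structure shown in Figure 2.1 [3]

(1) Presentation layer: contains presentation code, the GUI for user interaction, and data validation.

This layer provides GUI interaction to client users; it allows users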