Happy Learning Starts with Translation: A Gentle Introduction to RNN Unrolling

Recurrent neural networks are a type of neural network where the outputs from previous time steps are fed as input to the current time step.

This creates a network graph or circuit diagram with cycles, which can make it difficult to understand how information moves through the network.

In this post, you will discover the concept of unrolling or unfolding recurrent neural networks.

After reading this post, you will know:

- The standard conception of recurrent neural networks with cyclic connections.

- The concept of unrolling the forward pass, in which the network is copied for each input time step.

- The concept of unrolling the backward pass for updating network weights during training.

Let’s get started.

Unrolling Recurrent Neural Networks

Recurrent neural networks are a type of neural network where outputs from previous time steps are taken as inputs for the current time step.

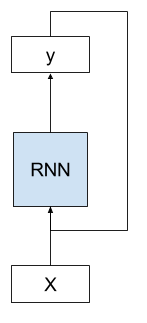

We can demonstrate this with a picture.

In the picture above, we can see that the network takes the output of the network from the previous time step as input, alongside the input for the current time step, and uses the internal state from the previous time step as a starting point for the current time step.

RNNs are trained, and make predictions, over many time steps. We can make the model easier to reason about by unfolding or unrolling the RNN graph over the input sequence.

A useful way to visualise RNNs is to consider the update graph formed by ‘unfolding’ the network along the input sequence.

— Supervised Sequence Labelling with Recurrent Neural Networks, 2008.
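The recurrence described in this section can be sketched in a few lines of Python. This is a minimal illustration, not how any particular library implements an RNN: the scalar state, the `tanh` activation, the weight values, and the names `rnn_step` and `run_rnn` are all assumptions made for the example. For simplicity only the internal state is carried between steps, and the output is computed from it.

```python
import math

def rnn_step(x, u_prev, w_x=0.5, w_u=0.8, w_y=1.5):
    """One time step: combine the current input with the previous internal state.

    The weights are illustrative constants, not values from the article.
    """
    u = math.tanh(w_x * x + w_u * u_prev)  # new internal state
    y = w_y * u                            # output for this time step
    return y, u

def run_rnn(xs, u0=0.0):
    """Cyclic form: one cell applied in a loop, feeding its state back in."""
    u = u0
    ys = []
    for x in xs:
        y, u = rnn_step(x, u)
        ys.append(y)
    return ys, u

outputs, final_state = run_rnn([1.0, 0.0, -1.0])
print(outputs, final_state)
```

The `for` loop is the cycle in the network diagram: the same function is called at every step, and the only thing that changes is the state `u` handed from one call to the next.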

Unrolling the Forward Pass

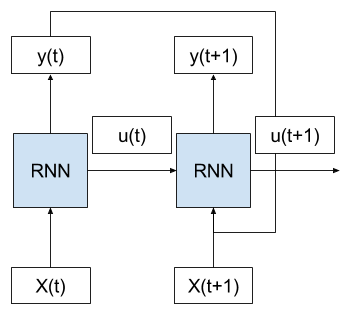

Consider the case where we have multiple time steps of input (X(t), X(t+1), …), multiple time steps of internal state (u(t), u(t+1), …), and multiple time steps of outputs (y(t), y(t+1), …).

We can unfold the above network schematic into a graph without any cycles.

We can see that the cycle is removed and that the output (y(t)) and internal state (u(t)) from the previous time step are passed on to the network as inputs for processing the next time step.

Key in this conceptualization is that the network (RNN) does not change between the unfolded time steps. Specifically, the same weights are used for each time step and it is only the outputs and the internal states that differ.

In this way, it is as though the whole network (topology and weights) is copied for each time step in the input sequence.

Further, each copy of the network may be thought of as an additional layer of the same feed forward neural network.

RNNs, once unfolded in time, can be seen as very deep feedforward networks in which all the layers share the same weights.

— Deep learning, Nature, 2015

This is a useful conceptual tool and visualization to help in understanding what is going on in the network during the forward pass. It may or may not also be the way that the network is implemented by the deep learning library.
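To make the equivalence concrete, here is a small sketch that writes the same three-step forward pass twice: once as a loop with a cycle, and once unrolled into three explicit copies of the cell that share one set of weights. The scalar cell, the weight values, and the function names are assumptions chosen for illustration.

```python
import math

# Illustrative shared weights; every unrolled copy reads this same dict.
WEIGHTS = {"w_x": 0.5, "w_u": 0.8, "w_y": 1.5}

def cell(x, u_prev, w):
    """A single RNN cell: returns (output, new internal state)."""
    u = math.tanh(w["w_x"] * x + w["w_u"] * u_prev)
    return w["w_y"] * u, u

def forward_loop(xs, w, u0=0.0):
    """Cyclic form: the state is fed back into the same cell."""
    u, ys = u0, []
    for x in xs:
        y, u = cell(x, u, w)
        ys.append(y)
    return ys

def forward_unrolled(x0, x1, x2, w, u0=0.0):
    """Unrolled form: three stacked copies of the cell and no cycle."""
    y0, u1 = cell(x0, u0, w)  # copy for time step t
    y1, u2 = cell(x1, u1, w)  # copy for time step t+1, same weights
    y2, _ = cell(x2, u2, w)   # copy for time step t+2, same weights
    return [y0, y1, y2]

xs = [1.0, 0.0, -1.0]
loop_outputs = forward_loop(xs, WEIGHTS)
unrolled_outputs = forward_unrolled(*xs, WEIGHTS)
print(loop_outputs == unrolled_outputs)
```

Read top to bottom, `forward_unrolled` looks like a three-layer feed-forward network whose layers all use the same weights, which is the picture the unrolled diagram conveys.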

Unrolling the Backward Pass

The idea of network unfolding plays a bigger part in the way recurrent neural networks are implemented for the backward pass.

As is standard with [backpropagation through time], the network is unfolded over time, so that connections arriving at layers are viewed as coming from the previous timestep.

— Framewise phoneme classification with bidirectional LSTM and other neural network architectures, 2005

Importantly, the backpropagation of error for a given time step depends on the activation of the network at the prior time step.

In this way, the backward pass requires the conceptualization of unfolding the network.

Error is propagated back to the first input time step of the sequence so that the error gradient can be calculated and the weights of the network can be updated.

Like standard backpropagation, [backpropagation through time] consists of a repeated application of the chain rule. The subtlety is that, for recurrent networks, the loss function depends on the activation of the hidden layer not only through its influence on the output layer, but also through its influence on the hidden layer at the next timestep.

— Supervised Sequence Labelling with Recurrent Neural Networks, 2008
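The subtlety in the quote above can be seen in a small sketch of backpropagation through time for a scalar RNN. Everything here is an assumption made for illustration: the `tanh` cell, the squared-error loss summed over time steps, the weight values, and the function names. The key line is the one that adds `du_next`, the error arriving from the next time step, to the error arriving from the output at the current step.

```python
import math

def forward(xs, w_x, w_u, w_y, u0=0.0):
    """Unrolled forward pass; keeps every state because the backward pass needs them."""
    us, ys = [u0], []
    for x in xs:
        u = math.tanh(w_x * x + w_u * us[-1])
        us.append(u)
        ys.append(w_y * u)
    return us, ys

def loss(xs, targets, w_x, w_u, w_y):
    """Illustrative loss: half squared error summed over all time steps."""
    _, ys = forward(xs, w_x, w_u, w_y)
    return sum(0.5 * (y - t) ** 2 for y, t in zip(ys, targets))

def bptt(xs, targets, w_x, w_u, w_y):
    """Walk the unrolled graph backwards, accumulating gradients for the shared weights."""
    us, ys = forward(xs, w_x, w_u, w_y)
    g_wx = g_wu = g_wy = 0.0
    du_next = 0.0  # error reaching the state from the next time step
    for t in reversed(range(len(xs))):
        u, u_prev = us[t + 1], us[t]
        dy = ys[t] - targets[t]          # error at this step's output
        g_wy += dy * u
        du = dy * w_y + du_next          # via the output AND via the next state
        da = du * (1.0 - u * u)          # back through tanh
        g_wx += da * xs[t]
        g_wu += da * u_prev
        du_next = da * w_u               # pass error on to the previous step
    return g_wx, g_wu, g_wy

xs, targets = [1.0, 0.5, -1.0], [0.5, 0.2, -0.3]
w_x, w_u, w_y = 0.5, 0.8, 1.5
g_wx, g_wu, g_wy = bptt(xs, targets, w_x, w_u, w_y)

# Sanity check against a central finite difference on the recurrent weight.
eps = 1e-6
numeric_g_wu = (loss(xs, targets, w_x, w_u + eps, w_y)
                - loss(xs, targets, w_x, w_u - eps, w_y)) / (2 * eps)
print(g_wu, numeric_g_wu)
```

Because the weights are shared, each one receives a gradient contribution from every time step, and the contributions are summed before the weight is updated.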

Unfolding the recurrent network graph also introduces additional concerns. Each time step requires a new copy of the network's activations (the weights themselves are shared), which in turn takes up memory, especially for larger networks with thousands or millions of weights. The memory requirements of training large recurrent networks can quickly balloon as the number of time steps climbs into the hundreds.

… it is required to unroll the RNNs by the length of the input sequence. By unrolling an RNN N times, every activations of the neurons inside the network are replicated N times, which consumes a huge amount of memory especially when the sequence is very long. This hinders a small footprint implementation of online learning or adaptation. Also, this “full unrolling” makes a parallel training with multiple sequences inefficient on shared memory models such as graphics processing units (GPUs)

— Online Sequence Training of Recurrent Neural Networks with Connectionist Temporal Classification, 2015
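A rough back-of-the-envelope sketch shows how this grows. It assumes one stored hidden-state vector per unrolled time step, 4 bytes per value, and hypothetical sizes (a batch of 32 sequences and 1024 hidden units). Real libraries store more than this per step, so treat the numbers as a lower bound on the trend rather than a measurement.

```python
def activation_memory_bytes(time_steps, batch_size, hidden_units, bytes_per_value=4):
    """Memory to keep one hidden-state vector per unrolled time step.

    Assumes weights are shared across steps and therefore not counted here.
    """
    return time_steps * batch_size * hidden_units * bytes_per_value

for steps in (10, 100, 1000):
    megabytes = activation_memory_bytes(steps, batch_size=32, hidden_units=1024) / 1e6
    print(f"{steps:>5} time steps: {megabytes:.1f} MB")
```

Under these assumptions the stored activations grow linearly with the number of time steps, so unrolling over ten times as many steps needs ten times the memory.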

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- Online Sequence Training of Recurrent Neural Networks with Connectionist Temporal Classification, 2015

- Framewise phoneme classification with bidirectional LSTM and other neural network architectures, 2005

- Supervised Sequence Labelling with Recurrent Neural Networks, 2008

- Deep learning, Nature, 2015

Articles

- A Gentle Introduction to Backpropagation Through Time

- Understanding LSTM Networks, 2015

- Rolling and Unrolling RNNs, 2016

- Unfolding RNNs, 2017

Summary

In this tutorial, you discovered the visualization and conceptual tool of unrolling recurrent neural networks.

Specifically, you learned:

- The standard conception of recurrent neural networks with cyclic connections.

- The concept of unrolling the forward pass, in which the network is copied for each input time step.

- The concept of unrolling the backward pass for updating network weights during training.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

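Translator's note: overall, this article's explanation of the forward and backward passes of an RNN is easier to follow than the other articles circulating online!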