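In the previous article we walked through checkBlockSanity() in full and saw how a block's structure is validated: the target difficulty in the block header, the proof of work, the timestamp, the Merkle tree, and the set of transactions in the block. Once these checks pass, the node calls maybeAcceptBlock() to validate the block in the context of the chain and finally write it into the blockchain. maybeAcceptBlock() checks the difficulty in the block header against the value the chain expects. It also checks whether transaction inputs are spendable, whether there are double spends or duplicate transactions, and so on. It decides whether the block extends the main chain or a side chain, and whether extending a side chain requires a Reorganize that turns the side chain into the main chain. After the block joins the chain, the UTXO(s) spent by its transactions become spentTxOut entries, and the newly created UTXO(s) are added to the UTXO set. In addition, once a block joins the main chain, the node must update the transaction pool (mempool) and notify the "miner" to stop the current round of "mining" and start solving the next block; we will cover this in detail in a later article. maybeAcceptBlock() involves considerably more steps than checkBlockSanity(), and this article walks through them one by one.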

Let us start with the implementation of maybeAcceptBlock():

```go
//btcd/blockchain/accept.go

// maybeAcceptBlock potentially accepts a block into the block chain and, if
// accepted, returns whether or not it is on the main chain. It performs
// several validation checks which depend on its position within the block chain
// before adding it. The block is expected to have already gone through
// ProcessBlock before calling this function with it.
//
// The flags modify the behavior of this function as follows:
//  - BFDryRun: The memory chain index will not be pruned and no accept
//    notification will be sent since the block is not being accepted.
//
// The flags are also passed to checkBlockContext and connectBestChain. See
// their documentation for how the flags modify their behavior.
//
// This function MUST be called with the chain state lock held (for writes).
func (b *BlockChain) maybeAcceptBlock(block *btcutil.Block, flags BehaviorFlags) (bool, error) {
	dryRun := flags&BFDryRun == BFDryRun

	// Get a block node for the block previous to this one. Will be nil
	// if this is the genesis block.
	prevNode, err := b.index.PrevNodeFromBlock(block) // (1)
	if err != nil {
		log.Errorf("PrevNodeFromBlock: %v", err)
		return false, err
	}

	// The height of this block is one more than the referenced previous
	// block.
	blockHeight := int32(0)
	if prevNode != nil {
		blockHeight = prevNode.height + 1
	}
	block.SetHeight(blockHeight) // (2)

	// The block must pass all of the validation rules which depend on the
	// position of the block within the block chain.
	err = b.checkBlockContext(block, prevNode, flags) // (3)
	if err != nil {
		return false, err
	}

	// Insert the block into the database if it's not already there. Even
	// though it is possible the block will ultimately fail to connect, it
	// has already passed all proof-of-work and validity tests which means
	// it would be prohibitively expensive for an attacker to fill up the
	// disk with a bunch of blocks that fail to connect. This is necessary
	// since it allows block download to be decoupled from the much more
	// expensive connection logic. It also has some other nice properties
	// such as making blocks that never become part of the main chain or
	// blocks that fail to connect available for further analysis.
	err = b.db.Update(func(dbTx database.Tx) error {
		return dbMaybeStoreBlock(dbTx, block) // (4)
	})
	if err != nil {
		return false, err
	}

	// Create a new block node for the block and add it to the in-memory
	// block chain (could be either a side chain or the main chain).
	blockHeader := &block.MsgBlock().Header
	newNode := newBlockNode(blockHeader, block.Hash(), blockHeight) // (5)
	if prevNode != nil {
		newNode.parent = prevNode
		newNode.height = blockHeight
		newNode.workSum.Add(prevNode.workSum, newNode.workSum)
	}

	// Connect the passed block to the chain while respecting proper chain
	// selection according to the chain with the most proof of work. This
	// also handles validation of the transaction scripts.
	isMainChain, err := b.connectBestChain(newNode, block, flags) // (6)
	if err != nil {
		return false, err
	}

	// Notify the caller that the new block was accepted into the block
	// chain. The caller would typically want to react by relaying the
	// inventory to other peers.
	if !dryRun {
		b.chainLock.Unlock()
		b.sendNotification(NTBlockAccepted, block) // (7)
		b.chainLock.Lock()
	}

	return isMainChain, nil
}
```

Its main steps are:

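- Call blockIndex's PrevNodeFromBlock() method to find the parent block, as shown at (1). As introduced earlier, index indexes the instantiated blocks held in memory. PrevNodeFromBlock() first searches memory; if the parent is not found there, it looks it up in the database, constructs the block's instantiated type blockNode, and returns it;

- Once the parent is found, set the current block's height to the parent's height plus 1, as shown at (2). Note that the block structure itself has no height field; the helper type btcutil.Block records the height, which the subsequent checks need. BIP34 specifies that for blocks with version 2 or higher, the unlocking script (scriptSig) of the coinbase transaction must begin with the block height;

- Call checkBlockContext() to check the block in the context of the chain, as shown at (3); we analyze it below;

- After the block passes validation, write it (the wire.MsgBlock) to the database (the block files), as shown at (4). Note that at this point the block has not yet been added to the blockchain;

- Create a blockNode instance for the current block and compute its cumulative work, as shown at (5);

- With the context checks passed, call connectBestChain() to write the block into the blockchain, as shown at (6); we analyze it below;

- Once the block has been written to the main chain or a side chain, send an NTBlockAccepted notification to blockManager, as shown at (7); blockManager then sends an inv or headers message to all peers to announce the new block;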
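Step (5) sets newNode.workSum to the parent's workSum plus the new block's own work. To make "cumulative work" concrete, here is a minimal sketch under the assumption that a block's own work is derived from its bits the way Bitcoin nodes usually do it (btcd exposes this as CalcWork(bits)): the expected number of hashes is 2^256 / (target + 1). The helper names compactToBig, calcWork and childWorkSum below are illustrative, not btcd's API.

```go
package main

import "math/big"

// compactToBig decodes the compact "bits" encoding used in block headers:
// the high byte is a base-256 exponent, bit 23 is a sign bit and the low
// 23 bits are the mantissa. Illustrative re-implementation.
func compactToBig(compact uint32) *big.Int {
	mantissa := compact & 0x007fffff
	isNegative := compact&0x00800000 != 0
	exponent := uint(compact >> 24)

	var bn *big.Int
	if exponent <= 3 {
		mantissa >>= 8 * (3 - exponent)
		bn = big.NewInt(int64(mantissa))
	} else {
		bn = big.NewInt(int64(mantissa))
		bn.Lsh(bn, 8*(exponent-3))
	}
	if isNegative {
		bn.Neg(bn)
	}
	return bn
}

// calcWork returns the expected number of hashes needed to find a block
// with the given bits: 2^256 / (target + 1). A non-positive target yields 0.
func calcWork(bits uint32) *big.Int {
	target := compactToBig(bits)
	if target.Sign() <= 0 {
		return big.NewInt(0)
	}
	denominator := new(big.Int).Add(target, big.NewInt(1))
	numerator := new(big.Int).Lsh(big.NewInt(1), 256)
	return numerator.Div(numerator, denominator)
}

// childWorkSum mirrors newNode.workSum.Add(prevNode.workSum, newNode.workSum):
// a child's cumulative work is its parent's cumulative work plus its own.
func childWorkSum(parentWorkSum *big.Int, childBits uint32) *big.Int {
	return new(big.Int).Add(parentWorkSum, calcWork(childBits))
}
```

With the genesis bits 0x1d00ffff, calcWork gives 4295032833 (0x100010001), and a child with the same bits brings the cumulative work to twice that. Because workSum only ever grows along a chain, comparing two tips' workSum values is enough to pick the chain with the most proof of work.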

The processing in maybeAcceptBlock() involves the blockNode type, which is defined as follows:

```go
//btcd/blockchain/blockindex.go

// blockNode represents a block within the block chain and is primarily used to
// aid in selecting the best chain to be the main chain. The main chain is
// stored into the block database.
type blockNode struct {
	// parent is the parent block for this node.
	parent *blockNode

	// children contains the child nodes for this node. Typically there
	// will only be one, but sometimes there can be more than one and that
	// is when the best chain selection algorithm is used.
	children []*blockNode

	// hash is the double sha 256 of the block.
	hash chainhash.Hash

	// parentHash is the double sha 256 of the parent block. This is kept
	// here over simply relying on parent.hash directly since block nodes
	// are sparse and the parent node might not be in memory when its hash
	// is needed.
	parentHash chainhash.Hash

	// height is the position in the block chain.
	height int32

	// workSum is the total amount of work in the chain up to and including
	// this node.
	workSum *big.Int

	// inMainChain denotes whether the block node is currently on the
	// the main chain or not. This is used to help find the common
	// ancestor when switching chains.
	inMainChain bool

	// Some fields from block headers to aid in best chain selection and
	// reconstructing headers from memory. These must be treated as
	// immutable and are intentionally ordered to avoid padding on 64-bit
	// platforms.
	version    int32
	bits       uint32
	nonce      uint32
	timestamp  int64
	merkleRoot chainhash.Hash
}
```

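blockNode can be viewed as the in-memory instantiation of a block. Its main fields are:

- parent: points to the parent block;

- children: points to the child blocks;

- hash: the block hash, i.e. the double SHA-256 hash of the block header. Note that a block header contains only the hash of its parent's header, not its own hash;

- parentHash: the hash of the parent block;

- height: the block height;

- workSum: the total work from the genesis block up to and including this block;

- inMainChain: whether the block is on the main chain;

- version, bits, nonce, timestamp, merkleRoot: the corresponding fields of the block header;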
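The parent pointers turn the blockNodes into a tree, and switching chains requires the common ancestor (fork point) of two tips. The sketch below shows one generic way to find it with only parent and height: bring both nodes to the same height, then step back in lockstep until they meet. It uses a hypothetical stripped-down node type and does not use btcd's inMainChain flag, which the field comment says btcd relies on for this purpose; treat it as an illustration of the tree structure, not as btcd's algorithm.

```go
package main

// node is a hypothetical, stripped-down stand-in for blockNode that keeps
// only what is needed to walk the block tree.
type node struct {
	parent *node
	height int32
	name   string // illustrative label in place of a real block hash
}

// ancestor follows parent pointers until it reaches the requested height.
// It returns nil if the height is above n or the chain is broken.
func (n *node) ancestor(height int32) *node {
	for n != nil && n.height > height {
		n = n.parent
	}
	if n != nil && n.height != height {
		return nil
	}
	return n
}

// findFork returns the most recent common ancestor of a and b.
func findFork(a, b *node) *node {
	if a == nil || b == nil {
		return nil
	}
	// Level both nodes to the same height first.
	if a.height > b.height {
		a = a.ancestor(b.height)
	} else if b.height > a.height {
		b = b.ancestor(a.height)
	}
	// Then walk back together until both paths meet.
	for a != b {
		a, b = a.parent, b.parent
	}
	return a
}
```

For a tree g ← a1 ← a2 and a1 ← b2 ← b3, findFork(a2, b3) returns a1: the blocks after a1 on each branch are exactly the ones that would be detached from one chain and attached from the other.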

With the parent's blockNode in hand, checkBlockContext() performs contextual checks based on the current block and its parent:

```go
//btcd/blockchain/validate.go

// checkBlockContext peforms several validation checks on the block which depend
// on its position within the block chain.
//
// The flags modify the behavior of this function as follows:
//  - BFFastAdd: The transaction are not checked to see if they are finalized
//    and the somewhat expensive BIP0034 validation is not performed.
//
// The flags are also passed to checkBlockHeaderContext. See its documentation
// for how the flags modify its behavior.
//
// This function MUST be called with the chain state lock held (for writes).
func (b *BlockChain) checkBlockContext(block *btcutil.Block, prevNode *blockNode, flags BehaviorFlags) error {
	// The genesis block is valid by definition.
	if prevNode == nil {
		return nil
	}

	// Perform all block header related validation checks.
	header := &block.MsgBlock().Header
	err := b.checkBlockHeaderContext(header, prevNode, flags) // (1)
	if err != nil {
		return err
	}

	fastAdd := flags&BFFastAdd == BFFastAdd
	if !fastAdd {
		// Obtain the latest state of the deployed CSV soft-fork in
		// order to properly guard the new validation behavior based on
		// the current BIP 9 version bits state.
		csvState, err := b.deploymentState(prevNode, chaincfg.DeploymentCSV) // (2)
		if err != nil {
			return err
		}

		// Once the CSV soft-fork is fully active, we'll switch to
		// using the current median time past of the past block's
		// timestamps for all lock-time based checks.
		blockTime := header.Timestamp
		if csvState == ThresholdActive {
			medianTime, err := b.index.CalcPastMedianTime(prevNode)
			if err != nil {
				return err
			}
			blockTime = medianTime // (3)
		}

		// The height of this block is one more than the referenced
		// previous block.
		blockHeight := prevNode.height + 1

		// Ensure all transactions in the block are finalized.
		for _, tx := range block.Transactions() {
			if !IsFinalizedTransaction(tx, blockHeight, // (4)
				blockTime) {
				str := fmt.Sprintf("block contains unfinalized "+
					"transaction %v", tx.Hash())
				return ruleError(ErrUnfinalizedTx, str)
			}
		}

		// Ensure coinbase starts with serialized block heights for
		// blocks whose version is the serializedHeightVersion or newer
		// once a majority of the network has upgraded. This is part of
		// BIP0034.
		if ShouldHaveSerializedBlockHeight(header) && // (5)
			blockHeight >= b.chainParams.BIP0034Height {
			coinbaseTx := block.Transactions()[0]
			err := checkSerializedHeight(coinbaseTx, blockHeight) // (6)
			if err != nil {
				return err
			}
		}
	}

	return nil
}
```

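Its main steps are:

- Call checkBlockHeaderContext() to check the block header in context, as shown at (1). Unlike checkBlockHeaderSanity(), checkBlockHeaderContext() checks whether the difficulty, timestamp and other header fields satisfy the chain's requirements; we analyze it below;

- Call deploymentState() to compute the state of the CSV deployment (comprising BIP68, BIP112 and BIP113) for the child of the parent block, i.e. the CSV state the chain expects for this block, as shown at (2). If it is Active, then per BIP113 the transactions' LockTime is compared against the MTP (Median Time Past, the median of the timestamps of the previous 11 blocks) instead of the timestamp in the block header, as shown at (3). This prevents a "miner" from pushing the header timestamp forward in order to include transactions whose lock time has not actually been reached yet and collect their "tips" (fees). The thresholdState of a deployment and the transitions between states will be covered in a later article;

- Next, at (4), IsFinalizedTransaction() checks that every transaction in the block is finalized by comparing the transaction's LockTime with the block height or the block time (the MTP when CSV is Active, otherwise the header timestamp). When LockTime denotes a block height (LockTime < 5×10^8), the transaction is finalized only if LockTime is less than the block height; when LockTime denotes a time, it is finalized only if LockTime is less than the block time. In other words, a transaction can only be included in a block whose height or time exceeds its LockTime. In particular, if LockTime is zero, or the Sequence of every input is 0xFFFFFFFF, the transaction is also considered finalized and can be included in any block. Note that IsFinalizedTransaction() compares the transaction's absolute LockTime directly and does not use the relative lock time from BIP68. As we will see later, when the block is finally connected to the main chain and CSV is Active, the transaction's relative lock time is also computed according to BIP68 from the Sequence value of each input, taking the maximum as the transaction's lock time; we will cover this when we discuss how a block is connected to the main chain;

- At (5), check whether BIP34 applies to the block: if the header version is at least 2 (block 227835 is the last block with version 1) and the block height is at least 227931, then, as described in BIP34, check that the coinbase contains the correct block height;

- At (6), checkSerializedHeight() checks that the coinbase's unlocking script begins with the correct block height;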

Next, let us look at the implementation of checkBlockHeaderContext():

```go
//btcd/blockchain/validate.go

func (b *BlockChain) checkBlockHeaderContext(header *wire.BlockHeader, prevNode *blockNode, flags BehaviorFlags) error {
	// The genesis block is valid by definition.
	if prevNode == nil {
		return nil
	}

	fastAdd := flags&BFFastAdd == BFFastAdd
	if !fastAdd {
		// Ensure the difficulty specified in the block header matches
		// the calculated difficulty based on the previous block and
		// difficulty retarget rules.
		expectedDifficulty, err := b.calcNextRequiredDifficulty(prevNode,
			header.Timestamp) // (1)
		if err != nil {
			return err
		}
		blockDifficulty := header.Bits
		if blockDifficulty != expectedDifficulty { // (2)
			str := "block difficulty of %d is not the expected value of %d"
			str = fmt.Sprintf(str, blockDifficulty, expectedDifficulty)
			return ruleError(ErrUnexpectedDifficulty, str)
		}

		// Ensure the timestamp for the block header is after the
		// median time of the last several blocks (medianTimeBlocks).
		medianTime, err := b.index.CalcPastMedianTime(prevNode) // (3)
		if err != nil {
			log.Errorf("CalcPastMedianTime: %v", err)
			return err
		}
		if !header.Timestamp.After(medianTime) { // (4)
			str := "block timestamp of %v is not after expected %v"
			str = fmt.Sprintf(str, header.Timestamp, medianTime)
			return ruleError(ErrTimeTooOld, str)
		}
	}

	// The height of this block is one more than the referenced previous
	// block.
	blockHeight := prevNode.height + 1

	// Ensure chain matches up to predetermined checkpoints.
	blockHash := header.BlockHash()
	if !b.verifyCheckpoint(blockHeight, &blockHash) { // (5)
		str := fmt.Sprintf("block at height %d does not match "+
			"checkpoint hash", blockHeight)
		return ruleError(ErrBadCheckpoint, str)
	}

	// Find the previous checkpoint and prevent blocks which fork the main
	// chain before it. This prevents storage of new, otherwise valid,
	// blocks which build off of old blocks that are likely at a much easier
	// difficulty and therefore could be used to waste cache and disk space.
	checkpointBlock, err := b.findPreviousCheckpoint()
	if err != nil {
		return err
	}
	if checkpointBlock != nil && blockHeight < checkpointBlock.Height() { // (6)
		str := fmt.Sprintf("block at height %d forks the main chain "+
			"before the previous checkpoint at height %d",
			blockHeight, checkpointBlock.Height())
		return ruleError(ErrForkTooOld, str)
	}

	// Reject outdated block versions once a majority of the network
	// has upgraded. These were originally voted on by BIP0034,
	// BIP0065, and BIP0066.
	params := b.chainParams
	if header.Version < 2 && blockHeight >= params.BIP0034Height || // (7)
		header.Version < 3 && blockHeight >= params.BIP0066Height ||
		header.Version < 4 && blockHeight >= params.BIP0065Height {
		str := "new blocks with version %d are no longer valid"
		str = fmt.Sprintf(str, header.Version)
		return ruleError(ErrBlockVersionTooOld, str)
	}

	return nil
}
```

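Its main steps are:

- Call calcNextRequiredDifficulty() to compute, according to the difficulty adjustment algorithm, the target difficulty the chain expects for the next block, and compare it with the difficulty in the current block header, as shown at (1) and (2). If the header's value does not match the expected value, validation fails; this prevents a "miner" from deliberately choosing an "easier" value;

- At (3) and (4), call CalcPastMedianTime() to compute the MTP at the parent block (the last block on the chain) and compare it with the timestamp in the current block header. If the header timestamp is not later than the MTP, validation fails; this guarantees that the MTP increases monotonically;

- Next, at (5), check whether the block being added corresponds to a preset checkpoint. If it does, compare its hash with the preset hash and fail if they differ; this enforces the correctness of the checkpoints;

- Call findPreviousCheckpoint() to find the most recent checkpoint on the chain. Note that this is not the checkpoint preceding the new block's parent, but the highest checkpoint on the node's chain. If the new block's height is lower than that checkpoint's height, i.e. the block tries to fork the chain before the checkpoint, validation fails, as shown at (6): a side chain forked before a checkpoint will very likely have less cumulative work than the main chain and so has little chance of ever becoming the main chain;

- Finally, at (7), check that the block version meets the requirements tied to the BIP34, BIP66 and BIP65 activation heights. As mentioned earlier, BIP34 applies to blocks at height 227931 and above, which must have a version greater than 1; similarly, BIP66 applies from height 363725 and requires a version of at least 3, and BIP65 applies from height 388381 and requires a version of at least 4;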
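The timestamp rule at (3) and (4) depends on how the MTP is computed. Here is a minimal sketch, assuming the caller supplies the timestamps of the parent and its ancestors newest first as Unix seconds; the names pastMedianTime and timestampAcceptable are hypothetical, while the window of 11 blocks and taking the element at index n/2 of the sorted window follow the description above.

```go
package main

import (
	"sort"
	"time"
)

// medianTimeBlocks is the number of previous blocks used for the MTP.
const medianTimeBlocks = 11

// pastMedianTime returns the median of up to the 11 most recent timestamps.
// ancestors holds the parent's timestamp first, then older blocks.
func pastMedianTime(ancestors []int64) time.Time {
	n := len(ancestors)
	if n > medianTimeBlocks {
		n = medianTimeBlocks
	}
	if n == 0 {
		return time.Unix(0, 0)
	}
	// Sort a copy so the caller's slice is left untouched.
	window := make([]int64, n)
	copy(window, ancestors[:n])
	sort.Slice(window, func(i, j int) bool { return window[i] < window[j] })
	return time.Unix(window[n/2], 0)
}

// timestampAcceptable mirrors the check at (4): the new header's timestamp
// must be strictly after the MTP, not merely equal to it.
func timestampAcceptable(headerUnix int64, ancestors []int64) bool {
	return time.Unix(headerUnix, 0).After(pastMedianTime(ancestors))
}
```

Because the median ignores outliers, a single miner who sets one timestamp far in the past or future barely moves the MTP, which is why it is a safer reference than any one header's timestamp.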

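As we can see, compared with checkBlockHeaderSanity(), checkBlockHeaderContext() checks whether the difficulty, timestamp, height and version in the block header meet the chain's requirements. Of these, calcNextRequiredDifficulty() involves the difficulty adjustment algorithm; let us look at its implementation: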

```go
//btcd/blockchain/difficulty.go

// calcNextRequiredDifficulty calculates the required difficulty for the block
// after the passed previous block node based on the difficulty retarget rules.
// This function differs from the exported CalcNextRequiredDifficulty in that
// the exported version uses the current best chain as the previous block node
// while this function accepts any block node.
//
// This function MUST be called with the chain state lock held (for writes).
func (b *BlockChain) calcNextRequiredDifficulty(lastNode *blockNode, newBlockTime time.Time) (uint32, error) {
	// Genesis block.
	if lastNode == nil {
		return b.chainParams.PowLimitBits, nil
	}

	// Return the previous block's difficulty requirements if this block
	// is not at a difficulty retarget interval.
	if (lastNode.height+1)%b.blocksPerRetarget != 0 { // (1)
		// For networks that support it, allow special reduction of the
		// required difficulty once too much time has elapsed without
		// mining a block.
		if b.chainParams.ReduceMinDifficulty {
			// ......
		}

		// For the main network (or any unrecognized networks), simply
		// return the previous block's difficulty requirements.
		return lastNode.bits, nil // (2)
	}

	// Get the block node at the previous retarget (targetTimespan days
	// worth of blocks).
	firstNode := lastNode
	for i := int32(0); i < b.blocksPerRetarget-1 && firstNode != nil; i++ {
		// Get the previous block node. This function is used over
		// simply accessing firstNode.parent directly as it will
		// dynamically create previous block nodes as needed. This
		// helps allow only the pieces of the chain that are needed
		// to remain in memory.
		var err error
		firstNode, err = b.index.PrevNodeFromNode(firstNode) // (3)
		if err != nil {
			return 0, err
		}
	}

	if firstNode == nil {
		return 0, AssertError("unable to obtain previous retarget block")
	}

	// Limit the amount of adjustment that can occur to the previous
	// difficulty.
	actualTimespan := lastNode.timestamp - firstNode.timestamp
	adjustedTimespan := actualTimespan // (4)
	if actualTimespan < b.minRetargetTimespan {
		adjustedTimespan = b.minRetargetTimespan
	} else if actualTimespan > b.maxRetargetTimespan {
		adjustedTimespan = b.maxRetargetTimespan
	}

	// Calculate new target difficulty as:
	//  currentDifficulty * (adjustedTimespan / targetTimespan)
	// The result uses integer division which means it will be slightly
	// rounded down. Bitcoind also uses integer division to calculate this
	// result.
	oldTarget := CompactToBig(lastNode.bits)
	newTarget := new(big.Int).Mul(oldTarget, big.NewInt(adjustedTimespan))
	targetTimeSpan := int64(b.chainParams.TargetTimespan / time.Second)
	newTarget.Div(newTarget, big.NewInt(targetTimeSpan)) // (5)

	// Limit new value to the proof of work limit.
	if newTarget.Cmp(b.chainParams.PowLimit) > 0 {
		newTarget.Set(b.chainParams.PowLimit) // (6)
	}

	// Log new target difficulty and return it. The new target logging is
	// intentionally converting the bits back to a number instead of using
	// newTarget since conversion to the compact representation loses
	// precision.
	newTargetBits := BigToCompact(newTarget) // (7)
	// ......

	return newTargetBits, nil
}
```

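Its main steps are:

- If the height of the block being added is not a multiple of the retarget interval (2016 blocks), the expected difficulty is simply the parent's difficulty and no adjustment is needed, as shown at (1) and (2);

- If the height is a multiple of 2016, the difficulty must be retargeted. First find the first block of the previous retarget period, whose height is also a multiple of 2016, as shown at (3);

- At (4), compute the time elapsed during the previous period, i.e. the difference between the timestamps of the period's last block and its first block. The allowed range is 3.5 to 56 days (one quarter to four times the 14-day target); a value outside this range is clamped to the nearest bound;

- At (5), compute the new target as follows:

$$newTarget = oldTarget \times \frac{currentTimespan}{targetTimespan}$$

where currentTimespan is the (clamped) length of the previous period and targetTimespan is 14 days. When the previous period took longer than 14 days, blocks were being produced too slowly, so the target is raised, which makes mining easier; when currentTimespan is shorter than 14 days, blocks were produced too quickly, so the target is lowered, which makes mining harder. The difficulty adjustment algorithm thus exists to keep the block production rate stable;

- If the adjusted target exceeds the configured limit, it is set to that limit, 2^224 - 1, as shown at (6). Note that a larger target means mining is easier, so this limit effectively sets the minimum mining difficulty;

- Finally, BigToCompact() encodes the target into the compact Bits form, as shown at (7);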

Once checkBlockContext() has validated the block's context, maybeAcceptBlock() calls connectBestChain() to write the block into the blockchain:

```go
//btcd/blockchain/chain.go

// connectBestChain handles connecting the passed block to the chain while
// respecting proper chain selection according to the chain with the most
// proof of work. In the typical case, the new block simply extends the main
// chain. However, it may also be extending (or creating) a side chain (fork)
// which may or may not end up becoming the main chain depending on which fork
// cumulatively has the most proof of work. It returns whether or not the block
// ended up on the main chain (either due to extending the main chain or causing
// a reorganization to become the main chain).
//
// The flags modify the behavior of this function as follows:
//  - BFFastAdd: Avoids several expensive transaction validation operations.
//    This is useful when using checkpoints.
//  - BFDryRun: Prevents the block from being connected and avoids modifying the
//    state of the memory chain index. Also, any log messages related to
//    modifying the state are avoided.
//
// This function MUST be called with the chain state lock held (for writes).
func (b *BlockChain) connectBestChain(node *blockNode, block *btcutil.Block, flags BehaviorFlags) (bool, error) {
	fastAdd := flags&BFFastAdd == BFFastAdd
	dryRun := flags&BFDryRun == BFDryRun

	// We are extending the main (best) chain with a new block. This is the
	// most common case.
	if node.parentHash.IsEqual(&b.bestNode.hash) { // (1)
		// Perform several checks to verify the block can be connected
		// to the main chain without violating any rules and without
		// actually connecting the block.
		view := NewUtxoViewpoint()      // (2)
		view.SetBestHash(&node.parentHash) // (3)
		stxos := make([]spentTxOut, 0, countSpentOutputs(block))
		if !fastAdd {
			err := b.checkConnectBlock(node, block, view, &stxos) // (4)
			if err != nil {
				return false, err
			}
		}

		// ......

		// Connect the block to the main chain.
		err := b.connectBlock(node, block, view, stxos) // (5)
		if err != nil {
			return false, err
		}

		// Connect the parent node to this node.
		if node.parent != nil {
			node.parent.children = append(node.parent.children, node)
		}

		return true, nil
	}

	// ......

	// We're extending (or creating) a side chain which may or may not
	// become the main chain, but in either case the entry is needed in the
	// index for future processing.
	b.index.Lock()
	b.index.index[node.hash] = node // (6)
	b.index.Unlock()

	// Connect the parent node to this node.
	node.inMainChain = false
	node.parent.children = append(node.parent.children, node)

	// ......

	// We're extending (or creating) a side chain, but the cumulative
	// work for this new side chain is not enough to make it the new chain.
	if node.workSum.Cmp(b.bestNode.workSum) <= 0 { // (7)
		// ......
		return false, nil // (8)
	}

	// We're extending (or creating) a side chain and the cumulative work
	// for this new side chain is more than the old best chain, so this side
	// chain needs to become the main chain. In order to accomplish that,
	// find the common ancestor of both sides of the fork, disconnect the
	// blocks that form the (now) old fork from the main chain, and attach
	// the blocks that form the new chain to the main chain starting at the
	// common ancenstor (the point where the chain forked).
	detachNodes, attachNodes := b.getReorganizeNodes(node) // (9)

	// Reorganize the chain.
	if !dryRun {
		log.Infof("REORGANIZE: Block %v is causing a reorganize.",
			node.hash)
	}
	err := b.reorganizeChain(detachNodes, attachNodes, flags) // (10)
	if err != nil {
		return false, err
	}

	return true, nil
}
```

connectBestChain()的输入是待加入区块的blockNode对象和btcutil.Block辅助对象,输出的第一个参数指明是否添加到主链,其主要步骤为:

- 如果父区块的Hash就是主链是“尾”区块的Hash,则区块将被写入主链,如代码(1)处所示;

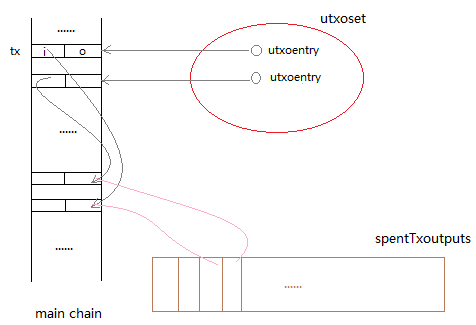

- 创建一个UtxoViewpoint对象,并将view的观察点设为主链的链尾,如代码(2)、(3)处所示。UtxoViewpoint表示区块链上从创世区块到观察点之间所有包含UTXO的交易及其中的UTXO(s)的集合,值得注意的是,UtxoViewpoint不仅仅记录了UTXO(s),而且记录所有包含未花费输出的交易,它将用来验证重复交易、双重支付等,它记录的utxoset是保证各节点上区块链一致性的重要对象,我们随后详细介绍它;

- 接下,通过countSpentOutputs()计算区块内所有交易花费的交易输出的个数,即所有交易的总的输入个数,并创建一个容量为相应大小的spentTxOut slice;

- 代码(4)处调用checkConnectBlock()对区块内的交易作验证,并更新utxoset,我们随后进一步分析它的实现;

- 交易验证通过后,代码(5)处调用connectBlock()将区块相关的信息写入数据库,真正实现将区块写入主链,我们随后详细分析它的实现;

- 如果父区块的Hash不是主链是“尾”区块的Hash,则区块将被写入侧链,根据侧链的工作量之各,侧链有可能被调整为主链。无论侧链是否被调整为主链,先将当前区块更新到内存索引器blockIndex中,如代码(6)处所示,因为后续对区块的处理需要通过blockIndex来查找区块,如我们前面提到的通过PrevNodeFromBlock()来查找父区块;如果区块被写入主链,connectBlock()也会将区块更新到blockIndex中,我们随后将会看到;

- 如果侧链的工作量之和小于主链的工作量之和,则直接返回,如代码(7)、(8)处所示,如果区块的父区块在主链上,则从当前区块开始分叉;如果区块的父区块不在主链上,则它扩展了侧链;

- 如果侧链的工作量之和大于主链的工作量之和,则需要将侧链调整为主链。首先,调用getReorganizeNodes()找到分叉点以及侧链和主链上的区块节点,如代码(9)处所示;

- 然后,调用reorganizeChain()实现侧链变主链;

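Both checkConnectBlock() and connectBlock() operate on a UtxoViewpoint when validating transactions or updating the utxoset. To make the later checks for duplicate transactions and double spends easier to follow, let us first introduce UtxoViewpoint, which is defined as follows: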

```go
//btcd/blockchain/utxoviewpoint.go

type UtxoViewpoint struct {
	entries  map[chainhash.Hash]*UtxoEntry
	bestHash chainhash.Hash
}

// ......

// UtxoEntry contains contextual information about an unspent transaction such
// as whether or not it is a coinbase transaction, which block it was found in,
// and the spent status of its outputs.
type UtxoEntry struct {
	modified      bool                   // Entry changed since load.
	version       int32                  // The version of this tx.
	isCoinBase    bool                   // Whether entry is a coinbase tx.
	blockHeight   int32                  // Height of block containing tx.
	sparseOutputs map[uint32]*utxoOutput // Sparse map of unspent outputs.
}

// ......

type utxoOutput struct {
	spent      bool   // Output is spent.
	compressed bool   // The amount and public key script are compressed.
	amount     int64  // The amount of the output.
	pkScript   []byte // The public key script for the output.
}
```

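As we can see, UtxoViewpoint mainly records a map from the hashes of main-chain transactions to their *UtxoEntry, so a UtxoEntry effectively represents a transaction that still contains UTXOs. Its fields are:

- modified: whether the UtxoEntry has been modified since it was loaded, mainly whether sparseOutputs has changed;

- version: the version of the transaction this UtxoEntry corresponds to;

- isCoinBase: whether that transaction is a coinbase transaction;

- blockHeight: the height of the block containing that transaction;

- sparseOutputs: the transaction's UTXOs, keyed by output index;

The fields of utxoOutput are:

- spent: whether the UTXO has been spent; spent outputs are removed from the UtxoEntry;

- compressed: whether the output amount and locking script are stored in compressed form;

- amount: the output amount;

- pkScript: the output's locking script;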
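To make the shape of this structure concrete, here is a minimal sketch of a view keyed by transaction hash whose entries hold a sparse map of outputs, with helpers to add a transaction's outputs, spend one output, and test whether an entry is fully spent. All names (view, entry, output, addTxOuts, spend, isFullySpent) are hypothetical simplifications of UtxoViewpoint, UtxoEntry and utxoOutput; compression, the version field and database loading are left out.

```go
package main

// txHash stands in for chainhash.Hash.
type txHash [32]byte

// output is a simplified utxoOutput.
type output struct {
	spent    bool
	amount   int64
	pkScript []byte
}

// entry is a simplified UtxoEntry: one transaction with unspent outputs.
type entry struct {
	modified    bool
	isCoinBase  bool
	blockHeight int32
	outputs     map[uint32]*output // sparse: keyed by output index
}

// view is a simplified UtxoViewpoint.
type view struct {
	entries map[txHash]*entry
}

func newView() *view { return &view{entries: make(map[txHash]*entry)} }

// addTxOuts records every output of a newly connected transaction as unspent.
func (v *view) addTxOuts(hash txHash, amounts []int64, height int32, coinbase bool) {
	e := &entry{isCoinBase: coinbase, blockHeight: height, modified: true,
		outputs: make(map[uint32]*output)}
	for i, amt := range amounts {
		e.outputs[uint32(i)] = &output{amount: amt}
	}
	v.entries[hash] = e
}

// spend marks hash:index as spent. It fails if the transaction is unknown,
// the output does not exist, or the output was already spent.
func (v *view) spend(hash txHash, index uint32) (*output, bool) {
	e, ok := v.entries[hash]
	if !ok {
		return nil, false
	}
	o, ok := e.outputs[index]
	if !ok || o.spent {
		return nil, false
	}
	o.spent = true
	e.modified = true
	return o, true
}

// isFullySpent reports whether every output of the entry has been spent.
func (e *entry) isFullySpent() bool {
	for _, o := range e.outputs {
		if !o.spent {
			return false
		}
	}
	return true
}
```

Keying by transaction hash rather than by individual output is what makes the BIP30 question ("does this transaction still have unspent outputs?") a single map lookup, and it is also why two transactions with the same hash would collide on one entry.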

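When a block is added to the main chain, the UTXOs created by its transactions (including the coinbase) are added to the utxo set tracked by the UtxoViewpoint, and the outputs spent by the transactions' inputs are removed from it. The state of the main chain is therefore tightly coupled to the state of the utxo set; consistency of the blockchain state essentially means consistency between the main chain and the utxo set. We will also see later that a side chain may become the main chain through a reorganize, in which case blocks are removed from the main chain, and all outputs spent by their transactions become UTXOs again and re-enter the utxo set. That is why, when a block is added to the main chain, every spentTxOut spent by its transactions is also recorded and written to the database in compressed form. The following analysis involves operations on the utxoset; the diagram below gives an intuitive picture of how these pieces relate: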

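Based on the diagram above, we can think ahead about how duplicate transactions and double spends are detected. When a new block is about to join the main chain, the main chain is in the state shown in the diagram: the outputs of some transactions have all been spent, while other transactions still have unspent outputs; the latter are recorded in the utxoset as utxoentries. To check whether any transaction in the new block duplicates a transaction on the main chain, we could search the main chain for each of the block's transactions, but that is clearly expensive: the number of transactions on the main chain keeps growing, and searching all of them every time a block is validated does not scale. Another approach is to check only whether the new block's transactions are already in the utxoset. Any transaction referenced by a utxoentry is certainly on the main chain, while a transaction absent from the utxoset has been fully spent (if the new block is written to the main chain, such transactions have at least one confirmation). Even if the new block contains a duplicate of such a transaction and the duplicate is later removed from the main chain by a fork, the utxoset is unaffected, because the original transaction was not in the utxoset anyway. This defeats the attack discussed in BIP30, in which a duplicate transaction is used to turn a spendable transaction into an unspendable one, and it is exactly BIP30's solution to duplicate transactions: only fully spent transactions may be duplicated.

Double spends are detected in the same way, by checking whether the inputs of the new block's transactions point to utxoentries in the utxoset. If an input points to no record in the utxoset, the transaction is trying to spend an output that has already been spent. If an input points to a utxoentry but that output has already been spent by an earlier transaction in the same block, or by a transaction in the block the "miner" is currently "mining", the transaction is also a double spend. A transaction passes the double-spend check only if each input points to a utxoentry whose output is still unspent. With this approach in mind, the code that follows becomes easier to understand.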
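Both ideas can be tried on a toy UTXO set. The sketch below, under heavily simplified assumptions, processes a block's transactions in order against a map of unspent outpoints: a transaction is rejected as a duplicate if its id still has unspent outputs (the BIP30 rule), and an input is rejected if its outpoint is not currently unspent, which also catches an output already spent earlier in the same block. The types and connectTxs are hypothetical; btcd runs the BIP30 check for the whole block up front (checkBIP0030) and applies spends to a UtxoViewpoint rather than mutating the set in place as this sketch does.

```go
package main

import "errors"

// outPoint identifies one transaction output. txID is a plain string purely
// for illustration; btcd uses chainhash.Hash.
type outPoint struct {
	txID  string
	index uint32
}

// transaction is a hypothetical minimal transaction: the outputs it spends
// and how many outputs it creates. A coinbase is modelled with no inputs.
type transaction struct {
	id      string
	inputs  []outPoint
	numOuts uint32
}

var (
	errDuplicateTx    = errors.New("duplicates a transaction that still has unspent outputs")
	errMissingOrSpent = errors.New("input references a missing or already spent output")
)

// utxoSet holds the currently unspent outputs; an absent key means the
// output was spent or never existed.
type utxoSet map[outPoint]bool

// connectTxs checks each transaction in block order and applies it to u.
// Note: u is modified in place even when an error is returned.
func connectTxs(u utxoSet, block []transaction) error {
	for _, tx := range block {
		// BIP30-style rule: a tx id may only repeat once fully spent.
		for i := uint32(0); i < tx.numOuts; i++ {
			if u[outPoint{tx.id, i}] {
				return errDuplicateTx
			}
		}
		// Double-spend rule: every input must reference an unspent output.
		for _, in := range tx.inputs {
			if !u[in] {
				return errMissingOrSpent
			}
			delete(u, in) // later txs in this block can no longer spend it
		}
		// The new outputs become spendable by later transactions.
		for i := uint32(0); i < tx.numOuts; i++ {
			u[outPoint{tx.id, i}] = true
		}
	}
	return nil
}
```

Both checks are map lookups whose cost depends only on the block being validated, not on the total number of transactions on the chain, which is the point of checking against the utxoset instead of searching the main chain.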

Next, let us continue with the implementation of checkConnectBlock():

```go
//btcd/blockchain/validate.go

func (b *BlockChain) checkConnectBlock(node *blockNode, block *btcutil.Block, view *UtxoViewpoint, stxos *[]spentTxOut) error {
	// ......

	// BIP0030 added a rule to prevent blocks which contain duplicate
	// transactions that 'overwrite' older transactions which are not fully
	// spent. See the documentation for checkBIP0030 for more details.
	//
	// There are two blocks in the chain which violate this rule, so the
	// check must be skipped for those blocks. The isBIP0030Node function
	// is used to determine if this block is one of the two blocks that must
	// be skipped.
	//
	// In addition, as of BIP0034, duplicate coinbases are no longer
	// possible due to its requirement for including the block height in the
	// coinbase and thus it is no longer possible to create transactions
	// that 'overwrite' older ones. Therefore, only enforce the rule if
	// BIP0034 is not yet active. This is a useful optimization because the
	// BIP0030 check is expensive since it involves a ton of cache misses in
	// the utxoset.
	if !isBIP0030Node(node) && (node.height < b.chainParams.BIP0034Height) { // (1)
		err := b.checkBIP0030(node, block, view) // (2)
		if err != nil {
			return err
		}
	}

	// Load all of the utxos referenced by the inputs for all transactions
	// in the block don't already exist in the utxo view from the database.
	//
	// These utxo entries are needed for verification of things such as
	// transaction inputs, counting pay-to-script-hashes, and scripts.
	err := view.fetchInputUtxos(b.db, block) // (3)
	if err != nil {
		return err
	}

	// BIP0016 describes a pay-to-script-hash type that is considered a
	// "standard" type. The rules for this BIP only apply to transactions
	// after the timestamp defined by txscript.Bip16Activation. See
	// https://en.bitcoin.it/wiki/BIP_0016 for more details.
	enforceBIP0016 := node.timestamp >= txscript.Bip16Activation.Unix() // (4)

	// The number of signature operations must be less than the maximum
	// allowed per block. Note that the preliminary sanity checks on a
	// block also include a check similar to this one, but this check
	// expands the count to include a precise count of pay-to-script-hash
	// signature operations in each of the input transaction public key
	// scripts.
	transactions := block.Transactions()
	totalSigOps := 0
	for i, tx := range transactions { // (5)
		numsigOps := CountSigOps(tx)
		if enforceBIP0016 {
			// Since the first (and only the first) transaction has
			// already been verified to be a coinbase transaction,
			// use i == 0 as an optimization for the flag to
			// countP2SHSigOps for whether or not the transaction is
			// a coinbase transaction rather than having to do a
			// full coinbase check again.
			numP2SHSigOps, err := CountP2SHSigOps(tx, i == 0, view) // (6)
			if err != nil {
				return err
			}
			numsigOps += numP2SHSigOps
		}

		// Check for overflow or going over the limits. We have to do
		// this on every loop iteration to avoid overflow.
		lastSigops := totalSigOps
		totalSigOps += numsigOps
		if totalSigOps < lastSigops || totalSigOps > MaxSigOpsPerBlock { // (7)
			str := fmt.Sprintf("block contains too many "+
				"signature operations - got %v, max %v",
				totalSigOps, MaxSigOpsPerBlock)
			return ruleError(ErrTooManySigOps, str)
		}
	}

	// Perform several checks on the inputs for each transaction. Also
	// accumulate the total fees. This could technically be combined with
	// the loop above instead of running another loop over the transactions,
	// but by separating it we can avoid running the more expensive (though
	// still relatively cheap as compared to running the scripts) checks
	// against all the inputs when the signature operations are out of
	// bounds.
	var totalFees int64
	for _, tx := range transactions {
		txFee, err := CheckTransactionInputs(tx, node.height, view, // (8)
			b.chainParams)
		if err != nil {
			return err
		}

		// Sum the total fees and ensure we don't overflow the
		// accumulator.
		lastTotalFees := totalFees
		totalFees += txFee
		if totalFees < lastTotalFees {
			return ruleError(ErrBadFees, "total fees for block "+
				"overflows accumulator")
		}

		// Add all of the outputs for this transaction which are not
		// provably unspendable as available utxos. Also, the passed
		// spent txos slice is updated to contain an entry for each
		// spent txout in the order each transaction spends them.
		err = view.connectTransaction(tx, node.height, stxos) // (9)
		if err != nil {
			return err
		}
	}

	// The total output values of the coinbase transaction must not exceed
	// the expected subsidy value plus total transaction fees gained from
	// mining the block. It is safe to ignore overflow and out of range
	// errors here because those error conditions would have already been
	// caught by checkTransactionSanity.
	var totalSatoshiOut int64
	for _, txOut := range transactions[0].MsgTx().TxOut {
		totalSatoshiOut += txOut.Value
	}
	expectedSatoshiOut := CalcBlockSubsidy(node.height, b.chainParams) + // (10)
		totalFees
	if totalSatoshiOut > expectedSatoshiOut {
		str := fmt.Sprintf("coinbase transaction for block pays %v "+
			"which is more than expected value of %v",
			totalSatoshiOut, expectedSatoshiOut)
		return ruleError(ErrBadCoinbaseValue, str)
	}

	// Don't run scripts if this node is before the latest known good
	// checkpoint since the validity is verified via the checkpoints (all
	// transactions are included in the merkle root hash and any changes
	// will therefore be detected by the next checkpoint). This is a huge
	// optimization because running the scripts is the most time consuming
	// portion of block handling.
	checkpoint := b.LatestCheckpoint()
	runScripts := !b.noVerify
	if checkpoint != nil && node.height <= checkpoint.Height {
		runScripts = false // (11)
	}

	// ......

	// Enforce CHECKSEQUENCEVERIFY during all block validation checks once
	// the soft-fork deployment is fully active.
	csvState, err := b.deploymentState(node.parent, chaincfg.DeploymentCSV) // (12)
	if err != nil {
		return err
	}
	if csvState == ThresholdActive {
		// If the CSV soft-fork is now active, then modify the
		// scriptFlags to ensure that the CSV op code is properly
		// validated during the script checks bleow.
		scriptFlags |= txscript.ScriptVerifyCheckSequenceVerify

		// We obtain the MTP of the *previous* block in order to
		// determine if transactions in the current block are final.
		medianTime, err := b.index.CalcPastMedianTime(node.parent)
		if err != nil {
			return err
		}

		// Additionally, if the CSV soft-fork package is now active,
		// then we also enforce the relative sequence number based
		// lock-times within the inputs of all transactions in this
		// candidate block.
		for _, tx := range block.Transactions() {
			// A transaction can only be included within a block
			// once the sequence locks of *all* its inputs are
			// active.
			sequenceLock, err := b.calcSequenceLock(node, tx, view, // (13)
				false)
			if err != nil {
				return err
			}
			if !SequenceLockActive(sequenceLock, node.height, // (14)
				medianTime) {
				str := fmt.Sprintf("block contains " +
					"transaction whose input sequence " +
					"locks are not met")
				return ruleError(ErrUnfinalizedTx, str)
			}
		}
	}

	// Now that the inexpensive checks are done and have passed, verify the
	// transactions are actually allowed to spend the coins by running the
	// expensive ECDSA signature check scripts. Doing this last helps
	// prevent CPU exhaustion attacks.
	if runScripts {
		err := checkBlockScripts(block, view, scriptFlags, b.sigCache) // (15)
		if err != nil {
			return err
		}
	}

	// Update the best hash for view to include this block since all of its
	// transactions have been connected.
	view.SetBestHash(&node.hash) // (16)
	return nil
}
```

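checkConnectBlock() is the last, and the most complex, check before a block joins the main chain. Its main steps are:

- At (1) and (2), following BIP30, check whether any transaction in the block duplicates a transaction on the main chain. Transactions are identified by their hashes, so transactions with the same hash are duplicates. As explained in our article "Parsing Btcd Blockchain Protocol Messages", a Tx hash is the double SHA-256 of the entire serialized transaction, i.e. of its version, inputs, outputs and LockTime. Because a coinbase transaction has no real input (its single input references no previous output), identical hashes are easier to produce for coinbase transactions than for other transactions; in other words, an attacker can more easily construct an identical coinbase transaction. By following every transaction in the chain of transactions that starts from some coinbase and copying their locking or unlocking scripts, an attacker may be able to replicate those transactions as well. The attacker can pack the copies into a block that is later removed from the main chain by a fork. As we will see later, the utxos created by the transactions of a block removed from the main chain are removed from the utxoset. Since the utxoset indexes utxoentries by transaction hash, a duplicate points to the same utxoentry as the original, so removing the forged duplicate also removes the utxos created by the original transaction; if the original's outputs were still unspent, they would afterwards be treated as spent. As mentioned earlier, BIP30 allows only fully spent transactions to be duplicated. At (1), the BIP30 check is performed for blocks below height 227931, except for the blocks at heights 91842 and 91880. From height 227931 onward BIP34 is in force: the coinbase's unlocking script contains the block height, which makes coinbase hash collisions practically impossible, so the BIP30 check is no longer performed. The implementation of checkBIP0030() at (2) is simple: the check passes only if every transaction in the block is either absent from the utxoset or has a utxoentry whose outputs are all spent;

- At (3), UtxoViewpoint's fetchInputUtxos() loads the utxos referenced by the inputs of all transactions in the block from the database into memory; we analyze its implementation later;

- At (4), determine whether the block enforces P2SH (Pay to Script Hash). BIP16 defines P2SH, a transaction script type whose unlocking and locking scripts take the following form: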

scriptSig: [signature] {[pubkey] OP_CHECKSIG}

scriptPubKey: OP_HASH160 [20-byte-hash of {[pubkey] OP_CHECKSIG} ] OP_EQUAL

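The script form introduced in our article "Cryptocurrency on Curves (Part 1)" is the one used by P2PKH (Pay to Public Key Hash). In a P2SH script, the 160-bit hash in the locking script (scriptPubKey) is no longer the HASH160 of a public key but the HASH160 of a more complex script, the redeem script, for example:

{2 [pubkey1] [pubkey2] [pubkey3] 3 OP_CHECKMULTISIG} or {OP_CHECKSIG OP_IF OP_CHECKSIGVERIFY OP_ELSE OP_CHECKMULTISIGVERIFY OP_ENDIF}

For multisignature or conditional payments, a P2SH locking script takes less space than placing the full script directly in the output, so the utxoset also uses less memory. When a P2SH output is spent, the unlocking script supplies the signatures and the serialized redeem script; in other words, P2SH shifts the burden of providing the redeem script from the payer to the payee. As mentioned in "Building the Btcd Blockchain (Part 2)", the total number of signature operations (sigops) in a block may not exceed 20000. checkBlockSanity() only counts the sigops in the locking and unlocking scripts of the block's transactions; for P2SH, the unlocking script also carries the serialized redeem script, whose sigops must be counted as well. That is why we need to know here whether P2SH is enforced: BIP16 specifies that for blocks whose timestamp is at or after Apr 1 00:00:00 UTC 2012, the sigops in redeem scripts are counted too;

- At (5)-(7), count the sigops in all transaction scripts in the block, including those in P2SH redeem scripts (6), and check that the total does not exceed 20000 (guarding against integer overflow along the way);

- At (8), call CheckTransactionInputs() to check for double spends, whether a transaction's outputs exceed its inputs, whether any coinbase outputs being spent have matured enough to be spent, and so on, and compute the transaction's fee; we analyze it further later;

- At (9), call UtxoViewpoint's connectTransaction() to mark the utxos consumed by the transaction's inputs as spent, append them to the passed-in slice stxos, and add the transaction's outputs to the utxoset; we analyze its implementation later. Note that at this point the spentTxOutputs and the spent utxoentries in the utxoset have not been finalized; they are updated and persisted to the database only when connectBlock() finally connects the block to the main chain;

- After checking the inputs and summing the fees of all transactions, check that the total output value of the coinbase transaction does not exceed the expected value, which prevents a "miner" from awarding itself an arbitrary reward. The coinbase outputs are the reward for "mining"; as shown at (10), the expected value is the mining "subsidy" plus the sum of the "fees" of all transactions in the block. The subsidy started at 50 BTC and halves every 210000 blocks (roughly every four years); at the time of writing it was 12.5 BTC;

- Once all the above checks pass, the scripts in the transactions are validated. This is a relatively expensive operation, so if the block is at or below the latest preset checkpoint, script validation is skipped, as shown at (11), and any error is left to be caught at the next checkpoint: any change to a transaction would change the block's hash (through the Merkle root), which in turn would change the hash of the next checkpoint block and make the checkpoint verification in checkBlockHeaderContext() described earlier fail. It follows that a block needs enough confirmations before it can become a checkpoint; in the current version of btcd, a block needs at least 2016 confirmations to be eligible;

- At (12), call deploymentState() to get the CSV deployment state. If CSV is active, calcSequenceLock() computes each transaction's relative lock time from the Sequence values of its inputs according to BIP68, as shown at (13), and SequenceLockActive() checks whether the block's height and MTP have passed the transaction's lock height and lock time, as shown at (14). If the block height is below the transaction's lock height, or the block's MTP is below its lock time, the transaction must not be included in this block;

- If the block is above the latest checkpoint, script validation proceeds in checkBlockScripts(), as shown at (15); we cover it in a later article;

- After all of the above checks pass, the UtxoViewpoint's observation point is moved to the current block, as shown at (16), since the block's state will subsequently be written to the blockchain;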
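The coinbase value check at (10) is simple arithmetic once the subsidy schedule is known. Below is a minimal sketch assuming mainnet parameters (50 BTC initial subsidy, halving every 210000 blocks); calcBlockSubsidy and coinbaseValueOK are illustrative names, not btcd's exported CalcBlockSubsidy, which takes chain parameters instead of using hard-coded constants.

```go
package main

const (
	satoshiPerBitcoin      = 100000000
	baseSubsidy            = 50 * satoshiPerBitcoin // initial subsidy, in satoshi
	subsidyHalvingInterval = 210000                 // blocks between halvings (assumed mainnet)
)

// calcBlockSubsidy halves the base subsidy once per completed interval.
// In Go, shifting an int64 right by 64 or more yields 0 for positive values,
// so the subsidy naturally reaches zero after enough halvings.
func calcBlockSubsidy(height int32) int64 {
	halvings := uint(height / subsidyHalvingInterval)
	return int64(baseSubsidy) >> halvings
}

// coinbaseValueOK mirrors check (10): the coinbase may pay out at most the
// subsidy plus the fees collected from the block's other transactions.
func coinbaseValueOK(coinbaseOuts []int64, height int32, totalFees int64) bool {
	var total int64
	for _, v := range coinbaseOuts {
		total += v
	}
	return total <= calcBlockSubsidy(height)+totalFees
}
```

At heights 420000 through 629999 this gives 1250000000 satoshi, the 12.5 BTC mentioned above. Paying less than the allowed amount is permitted by the check; the unclaimed value is simply never created.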

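From the implementation of connectBestChain() we know that once checkConnectBlock() passes, connectBlock() is called to connect the block to the main chain for good and persist the related state to the database. checkConnectBlock() involves many steps; the details of checking transaction inputs, updating the utxoset and checking relative lock times will be analyzed in the next article. The details of turning a side chain into the main chain through getReorganizeNodes() and reorganizeChain() will also be covered in that article, "Building the Btcd Blockchain (Part 4)".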