Hadoop Deployment Guide

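Contents

- 1. Provision three pay-as-you-go hosts on Alibaba Cloud

- 2. Software versions

- 3. Cluster plan

- 4. Cluster directory structure

- 5. Environment preparation

- 6. Install ZooKeeper

- 7. Install Hadoop (NameNode HA + ResourceManager HA)

- 8. Start the cluster (the first start requires initialization)

- Problems encountered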

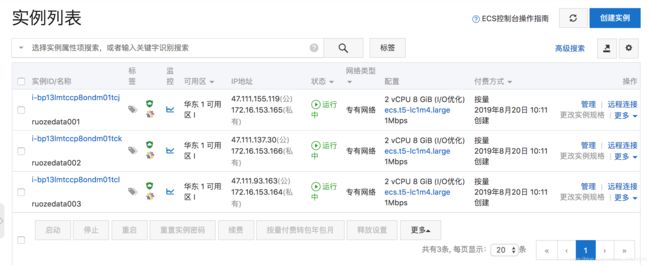

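1. Provision three pay-as-you-go hosts on Alibaba Cloud

These three Alibaba Cloud hosts serve as the cluster nodes. Each has 2 CPU cores and 8 GB of memory and runs CentOS 7.2.

2. Software versions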

| Component | Version |
|---|---|
| hadoop | hadoop-2.6.0-cdh5.15.1.tar.gz |
| jre | jdk-8u45-linux-x64.gz |
| zookeeper | zookeeper-3.4.6.tar.gz |

Download link: https://pan.baidu.com/s/1HAK9mNVrRaKQwhg0eSkj2A

3. Cluster plan

| IP | Host | Software | Processes |
|---|---|---|---|
| 47.111.155.119 | ruozedata001 | hadoop, jdk, zookeeper | QuorumPeerMain, NameNode, DataNode, ResourceManager, NodeManager, JournalNode, JobHistoryServer, DFSZKFailoverController |
| 47.111.137.30 | ruozedata002 | hadoop, jdk, zookeeper | QuorumPeerMain, NameNode, DataNode, ResourceManager, NodeManager, JournalNode, DFSZKFailoverController |
| 47.111.93.163 | ruozedata003 | hadoop, jdk, zookeeper | QuorumPeerMain, DataNode, NodeManager, JournalNode |

4. Cluster directory structure

drwxrwxr-x 4 hadoop hadoop 4096 Aug 20 16:07 app

drwxrwxr-x 4 hadoop hadoop 4096 Aug 20 18:51 data

drwxrwxr-x 2 hadoop hadoop 4096 Aug 20 10:32 lib

drwxrwxr-x 2 hadoop hadoop 4096 Aug 20 10:32 maven_reps

drwxrwxr-x 2 hadoop hadoop 4096 Aug 20 10:32 script

drwxrwxr-x 2 hadoop hadoop 4096 Aug 20 10:52 software

drwxrwxr-x 2 hadoop hadoop 4096 Aug 20 10:32 source

drwxrwxr-x 3 hadoop hadoop 4096 Aug 20 18:25 tmp

5. Environment preparation

- Bind IPs to hostnames (on all 3 hosts)

[hadoop@zuozedata001 dfs]$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.153.165 ruozedata001

172.16.153.166 ruozedata002

172.16.153.164 ruozedata003
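Because the same three entries have to be added on every host, it can help to make the append idempotent. The following is a minimal sketch, not the author's method: the helper name add_host_entry is hypothetical, and the demo writes to a scratch file rather than the real /etc/hosts (which would need root).

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if the
# hostname is not already mapped there.
add_host_entry() {
  file="$1"; ip="$2"; name="$3"
  # -w matches the hostname as a whole word, -F treats it as a fixed string
  if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo against a scratch file, not the real /etc/hosts.
hosts_file="$(mktemp)"
add_host_entry "$hosts_file" 172.16.153.165 ruozedata001
add_host_entry "$hosts_file" 172.16.153.166 ruozedata002
add_host_entry "$hosts_file" 172.16.153.164 ruozedata003
add_host_entry "$hosts_file" 172.16.153.165 ruozedata001   # no-op: already present
cat "$hosts_file"
```

Running the helper a second time leaves the file unchanged, so the same script can be reused on all three hosts.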

- Set up passwordless SSH

1. Run on all 3 machines

[root@hadoop001 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

74:78:50:05:7e:c8:bb:2a:f1:45:c4:0a:9c:38:90:dc root@hadoop001

The key's randomart image is:

+--[ RSA 2048]----+

| ..+ o ..ooo. |

| o E + =o. |

| . .oo* . |

| ..o.o |

| S.. |

| . .. |

| o .. |

| . .. |

| .. |

+-----------------+

[root@hadoop001 ~]# cat /root/.ssh/id_rsa.pub>> /root/.ssh/authorized_keys

2. Copy the id_rsa.pub files from hadoop002 and hadoop003 to hadoop001

[root@hadoop002 ~]# cd .ssh

[root@hadoop002 .ssh]# ll

total 12

-rw-r--r--. 1 root root 396 Sep 2 21:37 authorized_keys

-rw-------. 1 root root 1675 Sep 2 21:37 id_rsa

-rw-r--r--. 1 root root 396 Sep 2 21:37 id_rsa.pub

[root@hadoop002 .ssh]# scp id_rsa.pub 192.168.137.130:/root/.ssh/id_rsa.pub2

root@192.168.137.130's password:

id_rsa.pub 100% 396 0.4KB/s 00:00

[root@hadoop002 .ssh]#

[root@hadoop003 ~]# cd .ssh

[root@hadoop003 .ssh]# ll

total 12

-rw-r--r--. 1 root root 396 Sep 2 21:37 authorized_keys

-rw-------. 1 root root 1675 Sep 2 21:37 id_rsa

-rw-r--r--. 1 root root 396 Sep 2 21:37 id_rsa.pub

[root@hadoop003 .ssh]# scp id_rsa.pub 192.168.137.130:/root/.ssh/id_rsa.pub3

root@192.168.137.130's password:

id_rsa.pub 100% 396 0.4KB/s 00:00

3. On hadoop001, merge id_rsa.pub2 and id_rsa.pub3 into authorized_keys

[root@hadoop001 ~]# cd .ssh

[root@hadoop001 .ssh]# ll

total 20

-rw-r--r--. 1 root root 396 Sep 2 21:37 authorized_keys

-rw-------. 1 root root 1675 Sep 2 21:37 id_rsa

-rw-r--r--. 1 root root 396 Sep 2 21:37 id_rsa.pub

-rw-r--r--. 1 root root 396 Sep 2 21:42 id_rsa.pub2

-rw-r--r--. 1 root root 396 Sep 2 21:42 id_rsa.pub3

[root@hadoop001 .ssh]# cat id_rsa.pub2 >> authorized_keys

[root@hadoop001 .ssh]# cat id_rsa.pub3 >> authorized_keys

[root@hadoop001 .ssh]# cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA2dWIp5gGKuTkH7v0hj6IdldGkK0liMEzwXNnBD1iV9e0T12D2W9B4GnkMkCR3EZCKwfK593KPAr2cC3YADyMPaJn9x83pqOStvOBVUEEUYr9N/RUvkDq+JhmlGiTutSsqYNlu9LpCwNMWc+doANzwoM8xpyVVpl1l4LJdc0ShA8UCl2rJYMJgSal49weD58iSNMHB4tEEbAWzojbdkjfsFgtZTRsbckdV0gzDdW/9FoWYWlhqA4aw/SkxglssJ8B8XLSPZX45IdwhD65sTJUCQWkZYSiEq2MQOVLdB517KY4m0bHPid7NhM20g7oYL3H6271EQJ9tat7sFnpbuYdew== root@hadoop001

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAx+tMmk4tEQon/VZZMkfpmPkHGZ7IJg3wyLMpddAGcluWiT0ldzCBZIBY/qkPzwg9TukIuFQ4uqV9R14xLQjdkte2QKRTpp1NLfmVBkCb6Q/ucOlayrU1mXXXiHqbRhPNLK/7++fL+5iMbqzjyM35OuOAVwX+G8rQ7ALx6AgVOnM1bscI5xM4bpKX/uzDQ6Mo9YAalvrC0PF/jlUvyE9lEDIwGwLtxR+UDkhWSw6ucbAt8LxHXhVabg4mpPBA5M1vKujxDJBXK58QcLlUxy+b3gVTI7Ojrurw7KjHLynC439B8NXY9dcWyztIu3tPtopPg8/N3w/5VrifsQIvnpDEcw== root@hadoop002

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAqIZyHmKtxOarZyIcuYU0phVQUAHRvsB4jFffuW3X5G7+7RLApv3KsTNe0niTp6TH6B9/lENVKaZT9ut65mo5gQYIeoZqAlE0yA6NpymUkybfyS3bFS7kx2oO0pszQuOAQwFZZaGV1pdEAPWNFAwtUgsngo9x5wcVPdpSgpnVo/gU6smdbaAK2RWQOpZ8qoBmW5eMxEYuihRVetYlJ+erWxboAVW0O2tvdFBChejY7mt0BRIksahNqUhvQvoYRZbMOKiuBRpgxohI/Fz/FOKNYcRwzEHpZKrijttf62rxRt+YfuVETsZrXvWINPTzp9Dbw8qtt/kBvBFgSZYeWP8IDQ== root@hadoop003
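Appending with cat works, but running it twice leaves duplicate keys in authorized_keys. A minimal sketch of a merge that removes exact duplicates is shown here; the helper name merge_pubkeys is hypothetical, the key strings are placeholders, and the demo runs in a scratch directory instead of /root/.ssh. Note that sort -u also reorders the keys, which sshd does not care about.

```shell
#!/bin/sh
# Hypothetical helper: merge public-key files into an authorized_keys file,
# dropping exact duplicate lines and restricting the file to its owner.
merge_pubkeys() {
  target="$1"; shift
  tmp="$(mktemp)"
  touch "$target"
  # sort -u removes duplicate lines across the target and all inputs
  cat "$target" "$@" | sort -u > "$tmp"
  mv "$tmp" "$target"
  chmod 600 "$target"   # owner read/write only, a common safe choice for sshd
}

# Demo with placeholder keys in a scratch directory.
demo_ssh="$(mktemp -d)"
printf 'ssh-rsa KEY1 root@hadoop001\n' > "$demo_ssh/authorized_keys"
printf 'ssh-rsa KEY2 root@hadoop002\n' > "$demo_ssh/id_rsa.pub2"
printf 'ssh-rsa KEY3 root@hadoop003\n' > "$demo_ssh/id_rsa.pub3"
merge_pubkeys "$demo_ssh/authorized_keys" "$demo_ssh/id_rsa.pub2" "$demo_ssh/id_rsa.pub3"
merge_pubkeys "$demo_ssh/authorized_keys" "$demo_ssh/id_rsa.pub2"   # re-run adds nothing
cat "$demo_ssh/authorized_keys"
```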

4. Distribute authorized_keys to hadoop002 and hadoop003

[root@hadoop001 .ssh]# scp authorized_keys 192.168.137.131:/root/.ssh/

The authenticity of host '192.168.137.131 (192.168.137.131)' can't be established.

RSA key fingerprint is 76:c7:31:b6:20:56:4b:3e:29:c1:99:9f:fb:c0:9e:b8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.137.131' (RSA) to the list of known hosts.

root@192.168.137.131's password:

authorized_keys 100% 1188 1.2KB/s 00:00

[root@hadoop001 .ssh]# scp authorized_keys 192.168.137.132:/root/.ssh/

The authenticity of host '192.168.137.132 (192.168.137.132)' can't be established.

RSA key fingerprint is 09:f6:4a:f1:a0:bd:79:fd:34:e7:75:94:0b:3c:83:5a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.137.132' (RSA) to the list of known hosts.

root@192.168.137.132's password:

authorized_keys

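5. Verify (run the following 3 commands on each machine; if you only have to type yes and are never asked for a password, the 3 machines can reach each other over SSH)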

ssh root@hadoop001 date

ssh root@hadoop002 date

ssh root@hadoop003 date

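- Install the JDK and set environment variables (on all 3 hosts)

- Switch to the root user and extract the JDK under /usr/java; create the directory first if it does not exist

Note: after extraction the jdk directory is not owned by root, so remember to change its owner and group:

chown -R root:root jdk1.8.0_45

- Run vi /etc/profile and add the following:

#env

export JAVA_HOME=/usr/java/jdk1.8.0_45

export PATH=$JAVA_HOME/bin:$PATH

- Run source /etc/profile

- Verify: java -version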

6. Install ZooKeeper

- As the hadoop user, extract ZooKeeper into ~/app and rename the directory to zookeeper.

- Copy conf/zoo_sample.cfg to conf/zoo.cfg and edit the configuration

[hadoop@zuozedata001 conf]$ cd /home/hadoop/app/zookeeper/conf

[hadoop@zuozedata001 conf]$ ll

total 16

-rw-rw-r-- 1 hadoop hadoop 535 Feb 20 2014 configuration.xsl

-rw-rw-r-- 1 hadoop hadoop 2161 Feb 20 2014 log4j.properties

-rw-rw-r-- 1 hadoop hadoop 1033 Aug 20 15:13 zoo.cfg

-rw-rw-r-- 1 hadoop hadoop 922 Feb 20 2014 zoo_sample.cfg

[hadoop@zuozedata001 conf]$

[hadoop@zuozedata001 conf]$ vi zoo.cfg

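Change the data directory and append the ensemble members at the end of the file. The id in server.N must match that host's myid; 2888 and 3888 are the conventional ZooKeeper peer and election ports and are assumed here:

dataDir=/home/hadoop/data/zookeeper

server.1=ruozedata001:2888:3888

server.2=ruozedata002:2888:3888

server.3=ruozedata003:2888:3888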

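- Write the myid file; create the data/zookeeper/ directory first

echo 1 > /home/hadoop/data/zookeeper/myid

Each of the three machines writes its own myid: 1, 2, and 3 respectively.

Do not write echo 3>data/myid. Keep the spaces around >, otherwise the shell reads 3> as a redirection of file descriptor 3 and the 3 is never written to the myid file.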
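The redirection pitfall is easy to reproduce. The sketch below runs both forms in a scratch directory (the directory layout under demo_zk is only for illustration) and shows that the form without spaces creates an empty file.

```shell
#!/bin/sh
demo_zk="$(mktemp -d)"
mkdir -p "$demo_zk/data/zookeeper"   # the directory must exist before writing myid

# Pitfall: "3>" redirects file descriptor 3, so echo receives no argument.
# The file is created but stays empty, and a blank line goes to stdout.
echo 3>"$demo_zk/data/zookeeper/myid_wrong"

# Correct: with spaces, 3 is an argument and ">" redirects stdout.
echo 3 > "$demo_zk/data/zookeeper/myid"

wc -c < "$demo_zk/data/zookeeper/myid_wrong"   # 0 bytes
cat "$demo_zk/data/zookeeper/myid"             # 3
```

The problem only affects single digits that touch the > sign, which is exactly the case for myid values.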

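7. Install Hadoop (NameNode HA + ResourceManager HA)

- As the hadoop user, extract Hadoop into ~/app and rename the directory to hadoop.

- Edit the environment variables and source the file. The ZooKeeper variables were not set earlier, so set them here as well.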

[hadoop@zuozedata001 ~]$ vi .bash_profile

export ZOOKEEPER_HOME=/home/hadoop/app/zookeeper

export HADOOP_HOME=/home/hadoop/app/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

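- Edit the Hadoop configuration files

- Set JAVA_HOME in hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_45

- Configure the remaining files and upload them

https://pan.baidu.com/s/1JGkkFQj4kRBzXsyMtQEtgg

The slaves file needs its line endings converted, because a file saved on Windows uses CRLF line endings that Linux does not interpret correctly. Install dos2unix first, then use it to convert the file.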
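To see what the conversion changes, or when dos2unix cannot be installed, deleting the carriage returns with tr gives the same result for a simple host list. This is a sketch on a scratch copy, not a replacement for the step above; the file contents mirror the three hostnames from the cluster plan.

```shell
#!/bin/sh
demo_conf="$(mktemp -d)"
cr="$(printf '\r')"   # a literal carriage-return character

# Simulate a slaves file saved on Windows (CRLF line endings).
printf 'ruozedata001\r\nruozedata002\r\nruozedata003\r\n' > "$demo_conf/slaves"

# Count the lines that still carry a carriage return.
grep -c "$cr" "$demo_conf/slaves"            # prints 3

# Delete every carriage return, then replace the original file.
tr -d '\r' < "$demo_conf/slaves" > "$demo_conf/slaves.unix"
mv "$demo_conf/slaves.unix" "$demo_conf/slaves"

# grep exits non-zero when nothing matches, hence the "|| true".
grep -c "$cr" "$demo_conf/slaves" || true    # prints 0
```

With the carriage returns left in place, each hostname read from the file would end in an invisible extra character, so the start scripts could not resolve the hosts.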

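8. Start the cluster (the first start requires initialization)

- Start ZooKeeper on all three hosts

zkServer.sh start

Check the ZooKeeper status. It may take a moment at first. Once all ZooKeeper nodes are up and report a normal status, it is best to wait another minute to make sure none of them dies.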

[hadoop@zuozedata003 dfs]$ zkServer.sh status

JMX enabled by default

Using config: /home/hadoop/app/zookeeper/bin/../conf/zoo.cfg

Mode: follower
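A healthy three-node ensemble reports one leader and two followers. The sketch below pulls the mode out of the status text so it can be compared across hosts; the helper name zk_mode is hypothetical, and the demo feeds it the sample output shown above instead of calling zkServer.sh.

```shell
#!/bin/sh
# Hypothetical helper: extract the mode (leader, follower, standalone)
# from the text printed by "zkServer.sh status", read on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

sample_status='JMX enabled by default
Using config: /home/hadoop/app/zookeeper/bin/../conf/zoo.cfg
Mode: follower'

printf '%s\n' "$sample_status" | zk_mode   # prints: follower

# On a real node (assumes zkServer.sh is on PATH):
#   zkServer.sh status 2>&1 | zk_mode
```

If the helper prints nothing on a node, the status output contained no Mode line, which usually means that node has not joined the ensemble yet.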

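- Start Hadoop (HDFS + YARN)

a. Format the NameNode on the first host

The JournalNodes must already be running on all three hosts before formatting (hadoop-daemon.sh start journalnode on each host); the start-dfs.sh output in step d shows them already running.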

[hadoop@zuozedata001 dfs]$ hadoop namenode -format

19/08/20 23:15:23 INFO namenode.FSNamesystem: Maximum size of an xattr: 16384

19/08/20 23:15:24 INFO namenode.FSImage: Allocated new BlockPoolId: BP-194598049-172.16.153.165-1566314124127

19/08/20 23:15:24 INFO common.Storage: Storage directory /home/hadoop/data/dfs/name has been successfully formatted.

19/08/20 23:15:24 INFO namenode.FSImageFormatProtobuf: Saving image file /home/hadoop/data/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

19/08/20 23:15:24 INFO namenode.FSImageFormatProtobuf: Image file /home/hadoop/data/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 308 bytes saved in 0 seconds .

19/08/20 23:15:24 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

19/08/20 23:15:24 INFO util.ExitUtil: Exiting with status 0

19/08/20 23:15:24 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at ruozedata001/172.16.153.165

************************************************************/

The following message indicates that the NameNode was formatted successfully:

INFO common.Storage: Storage directory /home/hadoop/data/dfs/name has been successfully formatted.

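b. Synchronize the NameNode metadata

Copy the metadata from ruozedata001 to ruozedata002.

This mainly concerns the directories set by dfs.namenode.name.dir and dfs.namenode.edits.dir. Also make sure that the shared edits directory (dfs.namenode.shared.edits.dir) contains all of the NameNode metadata.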

[hadoop@zuozedata001 ~]$ cd data/dfs/

[hadoop@zuozedata001 dfs]$ ll

total 28

drwxrwxr-x 3 hadoop hadoop 4096 Aug 20 23:15 jn

drwxrwxr-x 3 hadoop hadoop 4096 Aug 20 23:15 name

-rw-rw-r-- 1 hadoop hadoop 18326 Aug 20 23:13 zookeeper.out

[hadoop@zuozedata001 dfs]$

[hadoop@zuozedata001 dfs]$ scp -r name/ ruozedata002:/home/hadoop/data/dfs/

fsimage_0000000000000000000.md5 100% 62 0.1KB/s 00:00

VERSION 100% 206 0.2KB/s 00:00

fsimage_0000000000000000000 100% 308 0.3KB/s 00:00

seen_txid 100% 2 0.0KB/s 00:00

c. Initialize ZKFC

[hadoop@zuozedata001 dfs]$ hdfs zkfc -formatZK

19/08/20 23:35:27 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/ruozeclusterg7 in ZK.

19/08/20 23:35:27 INFO zookeeper.ZooKeeper: Session: 0x16caf974b4b0000 closed

19/08/20 23:35:27 INFO zookeeper.ClientCnxn: EventThread shut down

19/08/20 23:35:27 INFO tools.DFSZKFailoverController: SHUTDOWN_MSG:

d. Start HDFS

[hadoop@zuozedata001 dfs]$ start-dfs.sh

Starting namenodes on [ruozedata001 ruozedata002]

ruozedata001: starting namenode, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-namenode-zuozedata001.out

ruozedata002: starting namenode, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-namenode-zuozedata002.out

ruozedata003: starting datanode, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-datanode-zuozedata003.out

ruozedata001: starting datanode, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-datanode-zuozedata001.out

ruozedata002: starting datanode, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-datanode-zuozedata002.out

Starting journal nodes [ruozedata001 ruozedata002 ruozedata003]

ruozedata003: journalnode running as process 21238. Stop it first.

ruozedata002: journalnode running as process 23191. Stop it first.

ruozedata001: journalnode running as process 27837. Stop it first.

Starting ZK Failover Controllers on NN hosts [ruozedata001 ruozedata002]

ruozedata001: starting zkfc, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-zkfc-zuozedata001.out

ruozedata002: starting zkfc, logging to /home/hadoop/app/hadoop/logs/hadoop-hadoop-zkfc-zuozedata002.out

e. Verify the processes on the three machines

ruozedata001

28240 DataNode

27793 QuorumPeerMain

28114 NameNode

28626 Jps

28520 DFSZKFailoverController

27837 JournalNode

ruozedata002

23617 Jps

23523 DFSZKFailoverController

23141 QuorumPeerMain

23191 JournalNode

23290 NameNode

23387 DataNode

ruozedata003

21333 DataNode

21158 QuorumPeerMain

21238 JournalNode

21464 Jps

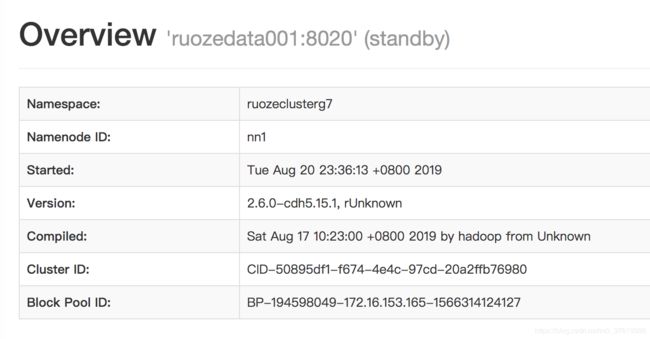

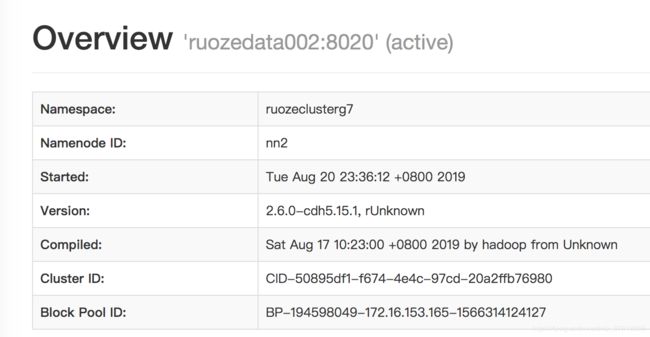

- Web UI

ruozedata001

http://47.111.155.119:50070/dfshealth.html#tab-overview

ruozedata002

http://47.111.137.30:50070/dfshealth.html#tab-overview

f. Start YARN

- Start YARN on ruozedata001

[hadoop@zuozedata001 dfs]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/app/hadoop/logs/yarn-hadoop-resourcemanager-zuozedata001.out

ruozedata003: starting nodemanager, logging to /home/hadoop/app/hadoop/logs/yarn-hadoop-nodemanager-zuozedata003.out

ruozedata002: starting nodemanager, logging to /home/hadoop/app/hadoop/logs/yarn-hadoop-nodemanager-zuozedata002.out

ruozedata001: starting nodemanager, logging to /home/hadoop/app/hadoop/logs/yarn-hadoop-nodemanager-zuozedata001.out

- Start the standby ResourceManager on ruozedata002

[hadoop@zuozedata002 dfs]$ yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/hadoop/app/hadoop/logs/yarn-hadoop-resourcemanager-zuozedata002.out

g. Verify ResourceManager and NodeManager

- Processes

[hadoop@zuozedata001 dfs]$ jps

28240 DataNode

27793 QuorumPeerMain

28721 ResourceManager

28114 NameNode

28819 NodeManager

28520 DFSZKFailoverController

29145 Jps

27837 JournalNode

[hadoop@zuozedata002 dfs]$ jps

23523 DFSZKFailoverController

23141 QuorumPeerMain

23191 JournalNode

23927 Jps

23290 NameNode

23387 DataNode

23678 NodeManager

23855 ResourceManager

[hadoop@zuozedata003 dfs]$ jps

21507 NodeManager

21333 DataNode

21158 QuorumPeerMain

21238 JournalNode

21662 Jps

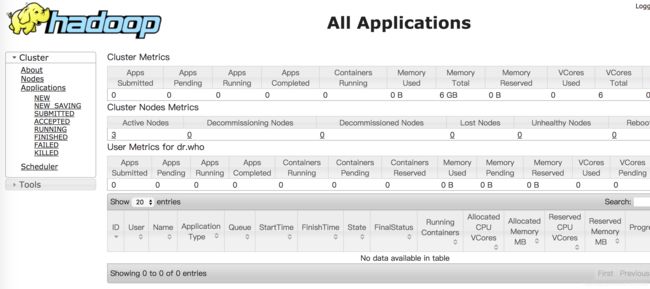

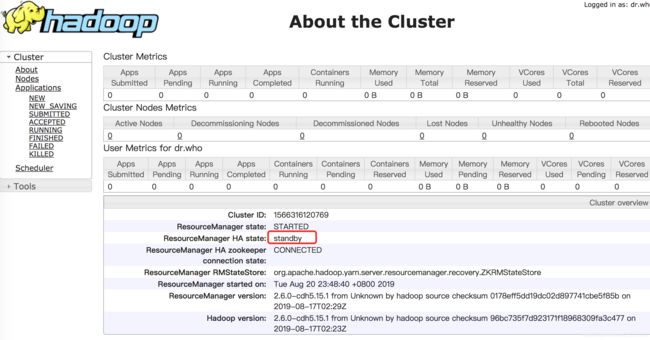
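Comparing jps output by eye across three hosts is error-prone. A minimal sketch of an automated check follows; the helper name missing_daemons is hypothetical, and the demo reads the ruozedata003 listing from above as text rather than calling jps.

```shell
#!/bin/sh
# Hypothetical helper: read jps output on stdin and print every expected
# daemon (given as arguments) that does not appear in it.
missing_daemons() {
  jps_out="$(cat)"
  for daemon in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the class name in column 2
    if ! printf '%s\n' "$jps_out" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$daemon"
    fi
  done
}

sample_jps='21507 NodeManager
21333 DataNode
21158 QuorumPeerMain
21238 JournalNode
21662 Jps'

# All daemons planned for ruozedata003 are present: prints nothing.
printf '%s\n' "$sample_jps" | missing_daemons QuorumPeerMain DataNode NodeManager JournalNode

# Simulate a crashed NodeManager: prints NodeManager.
printf '%s\n' "$sample_jps" | grep -v NodeManager | missing_daemons DataNode NodeManager

# On a real node: jps | missing_daemons QuorumPeerMain DataNode NodeManager JournalNode
```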

- Web UI

ResourceManager (Active):

http://47.111.155.119:8088/cluster

ResourceManager (Standby): (note that the path after 8088 must be /cluster/cluster, otherwise the page cannot be reached)

http://47.111.137.30:8088/cluster/cluster

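Problems encountered

- Re-initializing the NameNode

- Stop all processes

- Delete the name and data directories under data/dfs/ together with their contents, and delete the contents of jn

- Clear the contents of the log files under hadoop

- Repeat the procedure starting from the ZooKeeper startup

- Commands used

- Install as root; afterwards rz and sz can be used to upload and download files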

yum install -y lrzsz
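The cleanup part of the NameNode re-initialization steps above can be sketched as a small function. This is an illustration under assumptions, not the author's script: the helper name reset_hdfs_state is hypothetical, the demo runs on a scratch directory tree, and on the cluster the arguments would be /home/hadoop/data/dfs and /home/hadoop/app/hadoop/logs on every host, after all daemons have been stopped.

```shell
#!/bin/sh
# Hypothetical helper: wipe HDFS state so the NameNode can be formatted again.
# $1 = data/dfs directory, $2 = Hadoop logs directory.
reset_hdfs_state() {
  dfs_dir="$1"; logs_dir="$2"
  # Refuse empty arguments so a path like "/name" is never removed by accident
  [ -n "$dfs_dir" ] && [ -n "$logs_dir" ] || return 1
  rm -rf "$dfs_dir/name" "$dfs_dir/data"     # remove both directories entirely
  if [ -d "$dfs_dir/jn" ]; then
    find "$dfs_dir/jn" -mindepth 1 -delete   # keep jn itself, drop its contents
  fi
  if [ -d "$logs_dir" ]; then
    # Truncate each log file instead of deleting it
    find "$logs_dir" -type f -exec sh -c ': > "$1"' _ {} \;
  fi
}

# Demo on a scratch tree that mimics the layout.
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/dfs/name/current" "$demo_root/dfs/data" \
         "$demo_root/dfs/jn/cluster" "$demo_root/logs"
echo "old" > "$demo_root/dfs/name/current/VERSION"
echo "old" > "$demo_root/dfs/jn/cluster/edits"
echo "old" > "$demo_root/logs/namenode.log"

reset_hdfs_state "$demo_root/dfs" "$demo_root/logs"
ls -A "$demo_root/dfs"   # only jn remains
```

After the cleanup, the procedure restarts from the ZooKeeper startup as described in the list.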