[Deep Learning] Generating Anime Avatars with DCGAN: A Detailed Walkthrough

- A brief summary of DCGAN

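DCGAN stands for Deep Convolutional Generative Adversarial Networks. It was proposed by Alec Radford et al. in the paper Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. As the name suggests, it adds a deep convolutional network structure on top of GAN and is designed specifically for generating image samples.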

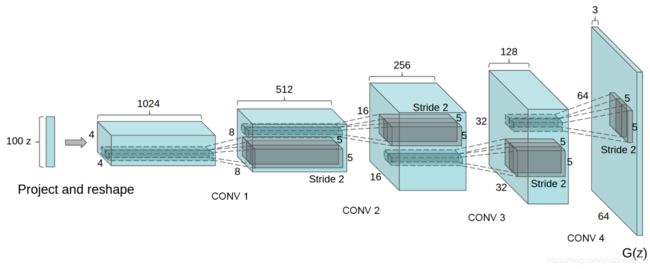

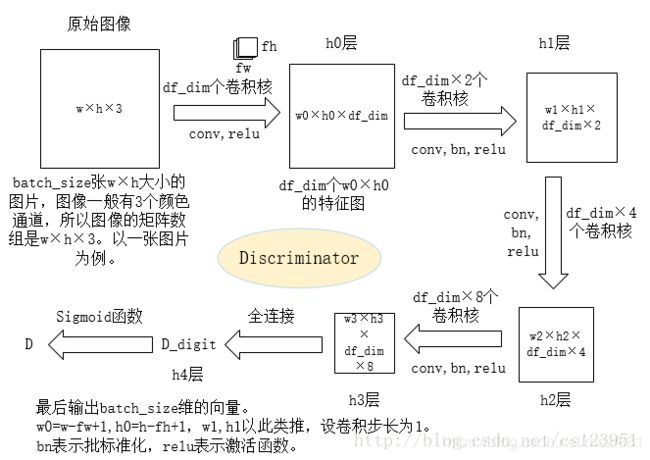

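The original GAN defines the inputs, outputs, and losses of D and G, but it places no restrictions on their concrete architectures. In DCGAN, the meaning of D and G and their losses are exactly the same as in the original GAN, but D and G use special structures so that images can be modeled effectively. The discriminator D takes an image as input and outputs the probability that this image is real. In DCGAN, D is a convolutional neural network: the input image passes through several convolutional layers to produce a convolutional feature, which is fed into the logistic function so that the output can be read as a probability.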

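Architecture guidelines for stable deep convolutional GANs:

- Replace all pooling layers with strided convolutions (discriminator) and fractional-strided convolutions (generator).

- Use batch normalization in both the generator and the discriminator.

- Remove fully connected hidden layers for deeper architectures.

- Use ReLU activation in all generator layers except the output layer, which uses Tanh.

- Use LeakyReLU activation in all discriminator layers.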

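- Collecting the raw dataset

First we need a crawler to download a large number of anime images. We crawl the well-known anime image board http://konachan.net/

The source code is as follows: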

    import requests  # HTTP library
    from bs4 import BeautifulSoup  # HTML parsing library
    import os  # file-system operations
    import traceback  # print stack traces on errors


    def download(url, filename):
        """Download url to filename, skipping files that already exist."""
        if os.path.exists(filename):
            print('file exists!')
            return
        try:
            r = requests.get(url, stream=True, timeout=60)
            r.raise_for_status()
            with open(filename, 'wb') as f:
                for chunk in r.iter_content(chunk_size=1024):
                    if chunk:  # filter out keep-alive new chunks
                        f.write(chunk)
                        f.flush()
            return filename
        except KeyboardInterrupt:
            # remove the partial file, then re-raise so Ctrl+C stops the crawl
            if os.path.exists(filename):
                os.remove(filename)
            raise
        except Exception:
            traceback.print_exc()
            if os.path.exists(filename):
                os.remove(filename)


    if os.path.exists('imgs') is False:
        os.makedirs('imgs')

    start = 1
    end = 8000
    for i in range(start, end + 1):
        url = 'http://konachan.net/post?page=%d&tags=' % i
        html = requests.get(url).text  # fetch the page's HTML
        soup = BeautifulSoup(html, 'html.parser')  # the document string and the parser to use
        for img in soup.find_all('img', class_="preview"):  # iterate over all img tags with class "preview"
            target_url = img['src']
            filename = os.path.join('imgs', target_url.split('/')[-1])
            download(target_url, filename)
        print('%d / %d' % (i, end))

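We now have the base images, but the goal is to generate anime avatars. We do not need whole images, and the extra content would interfere with training, so we run face detection and crop out the faces.

- Detecting and cropping faces

Detection uses an OpenCV-based cascade classifier, following lbpcascade_animeface.

To use this classifier, first download it: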

wget https://raw.githubusercontent.com/nagadomi/lbpcascade_animeface/master/lbpcascade_animeface.xml

Once the download finishes, run the following code to crop the faces out of the images.

    import cv2
    import sys
    import os.path
    from glob import glob


    def detect(filename, cascade_file="lbpcascade_animeface.xml"):
        if not os.path.isfile(cascade_file):
            raise RuntimeError("%s: not found" % cascade_file)

        cascade = cv2.CascadeClassifier(cascade_file)
        image = cv2.imread(filename)
        if image is None:  # skip files that OpenCV cannot read
            return
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        gray = cv2.equalizeHist(gray)

        faces = cascade.detectMultiScale(
            gray,
            # detector options
            scaleFactor=1.1,
            minNeighbors=5,
            minSize=(48, 48)
        )
        for i, (x, y, w, h) in enumerate(faces):
            face = image[y: y + h, x:x + w, :]
            face = cv2.resize(face, (96, 96))
            # include the face index so several faces from one image do not overwrite each other
            save_filename = '%s-%d.jpg' % (os.path.basename(filename).split('.')[0], i)
            cv2.imwrite("faces/" + save_filename, face)


    if __name__ == '__main__':
        if os.path.exists('faces') is False:
            os.makedirs('faces')
        file_list = glob('imgs/*.jpg')
        for filename in file_list:
            detect(filename)

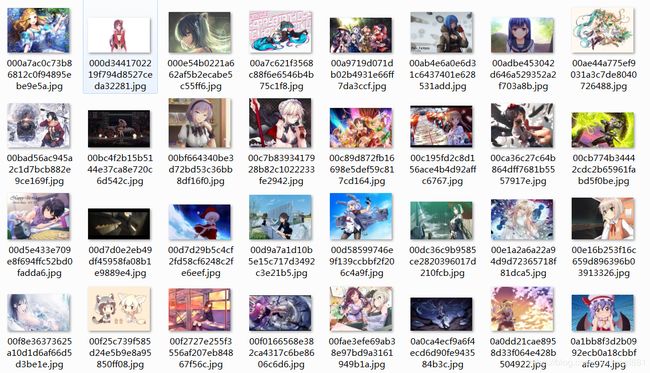

处理后的图像如下所示:

- 源代码分析

参照于DCGAN-tensorflow

总共获取18,466张图像,人脸检测后得到5,238张。

需要安装tensorflow,共4个文件,分别是main.py、model.py、ops.py、utils.py。

- model.py

    from __future__ import division
    import os
    import time
    import math
    from glob import glob
    import tensorflow as tf
    import numpy as np
    from six.moves import xrange

    from ops import *
    from utils import *

    def conv_out_size_same(size, stride):
      return int(math.ceil(float(size) / float(stride)))

    class DCGAN(object):
      def __init__(self, sess, input_height=108, input_width=108, crop=True,
             batch_size=64, sample_num = 64, output_height=64, output_width=64,
             y_dim=None, z_dim=100, gf_dim=64, df_dim=64,
             gfc_dim=1024, dfc_dim=1024, c_dim=3, dataset_name='default',
             input_fname_pattern='*.jpg', checkpoint_dir=None, sample_dir=None):
        """

        Args:
          sess: TensorFlow session
          batch_size: The size of batch. Should be specified before training.
          y_dim: (optional) Dimension of dim for y. [None]
          z_dim: (optional) Dimension of dim for Z. [100]
          gf_dim: (optional) Dimension of gen filters in first conv layer. [64]
          df_dim: (optional) Dimension of discrim filters in first conv layer. [64]
          gfc_dim: (optional) Dimension of gen units for fully connected layer. [1024]
          dfc_dim: (optional) Dimension of discrim units for fully connected layer. [1024]
          c_dim: (optional) Dimension of image color. For grayscale input, set to 1. [3]
        """
        self.sess = sess
        self.crop = crop

        self.batch_size = batch_size
        self.sample_num = sample_num

        self.input_height = input_height
        self.input_width = input_width
        self.output_height = output_height
        self.output_width = output_width

        self.y_dim = y_dim
        self.z_dim = z_dim

        self.gf_dim = gf_dim
        self.df_dim = df_dim

        self.gfc_dim = gfc_dim
        self.dfc_dim = dfc_dim

        # batch normalization : deals with poor initialization helps gradient flow
        self.d_bn1 = batch_norm(name='d_bn1')
        self.d_bn2 = batch_norm(name='d_bn2')

        if not self.y_dim:
          self.d_bn3 = batch_norm(name='d_bn3')

        self.g_bn0 = batch_norm(name='g_bn0')
        self.g_bn1 = batch_norm(name='g_bn1')
        self.g_bn2 = batch_norm(name='g_bn2')

        if not self.y_dim:
          self.g_bn3 = batch_norm(name='g_bn3')

        self.dataset_name = dataset_name
        self.input_fname_pattern = input_fname_pattern
        self.checkpoint_dir = checkpoint_dir

        if self.dataset_name == 'mnist':
          self.data_X, self.data_y = self.load_mnist()
          self.c_dim = self.data_X[0].shape[-1]
        else:
          self.data = glob(os.path.join("./data", self.dataset_name, self.input_fname_pattern))
          imreadImg = imread(self.data[0])
          if len(imreadImg.shape) >= 3: # check if image is a non-grayscale image by checking channel number
            self.c_dim = imread(self.data[0]).shape[-1]
          else:
            self.c_dim = 1

        self.grayscale = (self.c_dim == 1)

        self.build_model()

      def build_model(self):
        if self.y_dim:
          self.y= tf.placeholder(tf.float32, [self.batch_size, self.y_dim], name='y')

        if self.crop:
          image_dims = [self.output_height, self.output_width, self.c_dim]
        else:
          image_dims = [self.input_height, self.input_width, self.c_dim]

        self.inputs = tf.placeholder(
          tf.float32, [self.batch_size] + image_dims, name='real_images')

        inputs = self.inputs

        self.z = tf.placeholder(
          tf.float32, [None, self.z_dim], name='z')
        self.z_sum = histogram_summary("z", self.z)

        if self.y_dim:
          self.G = self.generator(self.z, self.y)
          self.D, self.D_logits = \
              self.discriminator(inputs, self.y, reuse=False)

          self.sampler = self.sampler(self.z, self.y)
          self.D_, self.D_logits_ = \
              self.discriminator(self.G, self.y, reuse=True)
        else:
          self.G = self.generator(self.z)
          self.D, self.D_logits = self.discriminator(inputs)

          self.sampler = self.sampler(self.z)
          self.D_, self.D_logits_ = self.discriminator(self.G, reuse=True)

        self.d_sum = histogram_summary("d", self.D)
        self.d__sum = histogram_summary("d_", self.D_)
        self.G_sum = image_summary("G", self.G)

        def sigmoid_cross_entropy_with_logits(x, y):
          try:
            return tf.nn.sigmoid_cross_entropy_with_logits(logits=x, labels=y)
          except:
            return tf.nn.sigmoid_cross_entropy_with_logits(logits=x, targets=y)

        self.d_loss_real = tf.reduce_mean(
          sigmoid_cross_entropy_with_logits(self.D_logits, tf.ones_like(self.D)))
        self.d_loss_fake = tf.reduce_mean(
          sigmoid_cross_entropy_with_logits(self.D_logits_, tf.zeros_like(self.D_)))
        self.g_loss = tf.reduce_mean(
          sigmoid_cross_entropy_with_logits(self.D_logits_, tf.ones_like(self.D_)))

        self.d_loss_real_sum = scalar_summary("d_loss_real", self.d_loss_real)
        self.d_loss_fake_sum = scalar_summary("d_loss_fake", self.d_loss_fake)

        self.d_loss = self.d_loss_real + self.d_loss_fake

        self.g_loss_sum = scalar_summary("g_loss", self.g_loss)
        self.d_loss_sum = scalar_summary("d_loss", self.d_loss)

        t_vars = tf.trainable_variables()

        self.d_vars = [var for var in t_vars if 'd_' in var.name]
        self.g_vars = [var for var in t_vars if 'g_' in var.name]

        self.saver = tf.train.Saver()

      def train(self, config):
        d_optim = tf.train.AdamOptimizer(config.learning_rate, beta1=config.beta1) \
                  .minimize(self.d_loss, var_list=self.d_vars)
        g_optim = tf.train.AdamOptimizer(config.learning_rate, beta1=config.beta1) \
                  .minimize(self.g_loss, var_list=self.g_vars)
        try:
          tf.global_variables_initializer().run()
        except:
          tf.initialize_all_variables().run()

        self.g_sum = merge_summary([self.z_sum, self.d__sum,
          self.G_sum, self.d_loss_fake_sum, self.g_loss_sum])
        self.d_sum = merge_summary(
            [self.z_sum, self.d_sum, self.d_loss_real_sum, self.d_loss_sum])
        self.writer = SummaryWriter("./logs", self.sess.graph)

        sample_z = np.random.uniform(-1, 1, size=(self.sample_num , self.z_dim))

        if config.dataset == 'mnist':
          sample_inputs = self.data_X[0:self.sample_num]
          sample_labels = self.data_y[0:self.sample_num]
        else:
          sample_files = self.data[0:self.sample_num]
          sample = [
              get_image(sample_file,
                        input_height=self.input_height,
                        input_width=self.input_width,
                        resize_height=self.output_height,
                        resize_width=self.output_width,
                        crop=self.crop,
                        grayscale=self.grayscale) for sample_file in sample_files]
          if (self.grayscale):
            sample_inputs = np.array(sample).astype(np.float32)[:, :, :, None]
          else:
            sample_inputs = np.array(sample).astype(np.float32)

        counter = 1
        start_time = time.time()
        could_load, checkpoint_counter = self.load(self.checkpoint_dir)
        if could_load:
          counter = checkpoint_counter
          print(" [*] Load SUCCESS")
        else:
          print(" [!] Load failed...")

        for epoch in xrange(config.epoch):
          if config.dataset == 'mnist':
            batch_idxs = min(len(self.data_X), config.train_size) // config.batch_size
          else:
            self.data = glob(os.path.join(
              "./data", config.dataset, self.input_fname_pattern))
            batch_idxs = min(len(self.data), config.train_size) // config.batch_size

          for idx in xrange(0, batch_idxs):
            if config.dataset == 'mnist':
              batch_images = self.data_X[idx*config.batch_size:(idx+1)*config.batch_size]
              batch_labels = self.data_y[idx*config.batch_size:(idx+1)*config.batch_size]
            else:
              batch_files = self.data[idx*config.batch_size:(idx+1)*config.batch_size]
              batch = [
                  get_image(batch_file,
                            input_height=self.input_height,
                            input_width=self.input_width,
                            resize_height=self.output_height,
                            resize_width=self.output_width,
                            crop=self.crop,
                            grayscale=self.grayscale) for batch_file in batch_files]
              if self.grayscale:
                batch_images = np.array(batch).astype(np.float32)[:, :, :, None]
              else:
                batch_images = np.array(batch).astype(np.float32)

            batch_z = np.random.uniform(-1, 1, [config.batch_size, self.z_dim]) \
                  .astype(np.float32)

            if config.dataset == 'mnist':
              # Update D network
              _, summary_str = self.sess.run([d_optim, self.d_sum],
                feed_dict={
                  self.inputs: batch_images,
                  self.z: batch_z,
                  self.y:batch_labels,
                })
              self.writer.add_summary(summary_str, counter)

              # Update G network
              _, summary_str = self.sess.run([g_optim, self.g_sum],
                feed_dict={
                  self.z: batch_z,
                  self.y:batch_labels,
                })
              self.writer.add_summary(summary_str, counter)

              # Run g_optim twice to make sure that d_loss does not go to zero (different from paper)
              _, summary_str = self.sess.run([g_optim, self.g_sum],
                feed_dict={ self.z: batch_z, self.y:batch_labels })
              self.writer.add_summary(summary_str, counter)

              errD_fake = self.d_loss_fake.eval({
                  self.z: batch_z,
                  self.y:batch_labels
              })
              errD_real = self.d_loss_real.eval({
                  self.inputs: batch_images,
                  self.y:batch_labels
              })
              errG = self.g_loss.eval({
                  self.z: batch_z,
                  self.y: batch_labels
              })
            else:
              # Update D network
              _, summary_str = self.sess.run([d_optim, self.d_sum],
                feed_dict={ self.inputs: batch_images, self.z: batch_z })
              self.writer.add_summary(summary_str, counter)

              # Update G network
              _, summary_str = self.sess.run([g_optim, self.g_sum],
                feed_dict={ self.z: batch_z })
              self.writer.add_summary(summary_str, counter)

              # Run g_optim twice to make sure that d_loss does not go to zero (different from paper)
              _, summary_str = self.sess.run([g_optim, self.g_sum],
                feed_dict={ self.z: batch_z })
              self.writer.add_summary(summary_str, counter)

              errD_fake = self.d_loss_fake.eval({ self.z: batch_z })
              errD_real = self.d_loss_real.eval({ self.inputs: batch_images })
              errG = self.g_loss.eval({self.z: batch_z})

            counter += 1
            print("Epoch: [%2d] [%4d/%4d] time: %4.4f, d_loss: %.8f, g_loss: %.8f" \
              % (epoch, idx, batch_idxs,
                time.time() - start_time, errD_fake+errD_real, errG))

            if np.mod(counter, 100) == 1:
              if config.dataset == 'mnist':
                samples, d_loss, g_loss = self.sess.run(
                  [self.sampler, self.d_loss, self.g_loss],
                  feed_dict={
                      self.z: sample_z,
                      self.inputs: sample_inputs,
                      self.y:sample_labels,
                  }
                )
                save_images(samples, image_manifold_size(samples.shape[0]),
                      './{}/train_{:02d}_{:04d}.png'.format(config.sample_dir, epoch, idx))
                print("[Sample] d_loss: %.8f, g_loss: %.8f" % (d_loss, g_loss))
              else:
                try:
                  samples, d_loss, g_loss = self.sess.run(
                    [self.sampler, self.d_loss, self.g_loss],
                    feed_dict={
                        self.z: sample_z,
                        self.inputs: sample_inputs,
                    },
                  )
                  save_images(samples, image_manifold_size(samples.shape[0]),
                        './{}/train_{:02d}_{:04d}.png'.format(config.sample_dir, epoch, idx))
                  print("[Sample] d_loss: %.8f, g_loss: %.8f" % (d_loss, g_loss))
                except:
                  print("one pic error!...")

            if np.mod(counter, 500) == 2:
              self.save(config.checkpoint_dir, counter)

      def discriminator(self, image, y=None, reuse=False):
        with tf.variable_scope("discriminator") as scope:
          if reuse:
            scope.reuse_variables()

          if not self.y_dim:
            h0 = lrelu(conv2d(image, self.df_dim, name='d_h0_conv'))
            h1 = lrelu(self.d_bn1(conv2d(h0, self.df_dim*2, name='d_h1_conv')))
            h2 = lrelu(self.d_bn2(conv2d(h1, self.df_dim*4, name='d_h2_conv')))
            h3 = lrelu(self.d_bn3(conv2d(h2, self.df_dim*8, name='d_h3_conv')))
            h4 = linear(tf.reshape(h3, [self.batch_size, -1]), 1, 'd_h4_lin')

            return tf.nn.sigmoid(h4), h4
          else:
            yb = tf.reshape(y, [self.batch_size, 1, 1, self.y_dim])
            x = conv_cond_concat(image, yb)

            h0 = lrelu(conv2d(x, self.c_dim + self.y_dim, name='d_h0_conv'))
            h0 = conv_cond_concat(h0, yb)

            h1 = lrelu(self.d_bn1(conv2d(h0, self.df_dim + self.y_dim, name='d_h1_conv')))
            h1 = tf.reshape(h1, [self.batch_size, -1])
            h1 = concat([h1, y], 1)

            h2 = lrelu(self.d_bn2(linear(h1, self.dfc_dim, 'd_h2_lin')))
            h2 = concat([h2, y], 1)

            h3 = linear(h2, 1, 'd_h3_lin')

            return tf.nn.sigmoid(h3), h3

      def generator(self, z, y=None):
        with tf.variable_scope("generator") as scope:
          if not self.y_dim:
            s_h, s_w = self.output_height, self.output_width
            s_h2, s_w2 = conv_out_size_same(s_h, 2), conv_out_size_same(s_w, 2)
            s_h4, s_w4 = conv_out_size_same(s_h2, 2), conv_out_size_same(s_w2, 2)
            s_h8, s_w8 = conv_out_size_same(s_h4, 2), conv_out_size_same(s_w4, 2)
            s_h16, s_w16 = conv_out_size_same(s_h8, 2), conv_out_size_same(s_w8, 2)

            # project `z` and reshape
            self.z_, self.h0_w, self.h0_b = linear(
                z, self.gf_dim*8*s_h16*s_w16, 'g_h0_lin', with_w=True)

            self.h0 = tf.reshape(
                self.z_, [-1, s_h16, s_w16, self.gf_dim * 8])
            h0 = tf.nn.relu(self.g_bn0(self.h0))

            self.h1, self.h1_w, self.h1_b = deconv2d(
                h0, [self.batch_size, s_h8, s_w8, self.gf_dim*4], name='g_h1', with_w=True)
            h1 = tf.nn.relu(self.g_bn1(self.h1))

            h2, self.h2_w, self.h2_b = deconv2d(
                h1, [self.batch_size, s_h4, s_w4, self.gf_dim*2], name='g_h2', with_w=True)
            h2 = tf.nn.relu(self.g_bn2(h2))

            h3, self.h3_w, self.h3_b = deconv2d(
                h2, [self.batch_size, s_h2, s_w2, self.gf_dim*1], name='g_h3', with_w=True)
            h3 = tf.nn.relu(self.g_bn3(h3))

            h4, self.h4_w, self.h4_b = deconv2d(
                h3, [self.batch_size, s_h, s_w, self.c_dim], name='g_h4', with_w=True)

            return tf.nn.tanh(h4)
          else:
            s_h, s_w = self.output_height, self.output_width
            s_h2, s_h4 = int(s_h/2), int(s_h/4)
            s_w2, s_w4 = int(s_w/2), int(s_w/4)

            # yb = tf.expand_dims(tf.expand_dims(y, 1),2)
            yb = tf.reshape(y, [self.batch_size, 1, 1, self.y_dim])
            z = concat([z, y], 1)

            h0 = tf.nn.relu(
                self.g_bn0(linear(z, self.gfc_dim, 'g_h0_lin')))
            h0 = concat([h0, y], 1)

            h1 = tf.nn.relu(self.g_bn1(
                linear(h0, self.gf_dim*2*s_h4*s_w4, 'g_h1_lin')))
            h1 = tf.reshape(h1, [self.batch_size, s_h4, s_w4, self.gf_dim * 2])

            h1 = conv_cond_concat(h1, yb)

            h2 = tf.nn.relu(self.g_bn2(deconv2d(h1,
                [self.batch_size, s_h2, s_w2, self.gf_dim * 2], name='g_h2')))
            h2 = conv_cond_concat(h2, yb)

            return tf.nn.sigmoid(
                deconv2d(h2, [self.batch_size, s_h, s_w, self.c_dim], name='g_h3'))

      def sampler(self, z, y=None):
        with tf.variable_scope("generator") as scope:
          scope.reuse_variables()

          if not self.y_dim:
            s_h, s_w = self.output_height, self.output_width
            s_h2, s_w2 = conv_out_size_same(s_h, 2), conv_out_size_same(s_w, 2)
            s_h4, s_w4 = conv_out_size_same(s_h2, 2), conv_out_size_same(s_w2, 2)
            s_h8, s_w8 = conv_out_size_same(s_h4, 2), conv_out_size_same(s_w4, 2)
            s_h16, s_w16 = conv_out_size_same(s_h8, 2), conv_out_size_same(s_w8, 2)

            # project `z` and reshape
            h0 = tf.reshape(
                linear(z, self.gf_dim*8*s_h16*s_w16, 'g_h0_lin'),
                [-1, s_h16, s_w16, self.gf_dim * 8])
            h0 = tf.nn.relu(self.g_bn0(h0, train=False))

            h1 = deconv2d(h0, [self.batch_size, s_h8, s_w8, self.gf_dim*4], name='g_h1')
            h1 = tf.nn.relu(self.g_bn1(h1, train=False))

            h2 = deconv2d(h1, [self.batch_size, s_h4, s_w4, self.gf_dim*2], name='g_h2')
            h2 = tf.nn.relu(self.g_bn2(h2, train=False))

            h3 = deconv2d(h2, [self.batch_size, s_h2, s_w2, self.gf_dim*1], name='g_h3')
            h3 = tf.nn.relu(self.g_bn3(h3, train=False))

            h4 = deconv2d(h3, [self.batch_size, s_h, s_w, self.c_dim], name='g_h4')

            return tf.nn.tanh(h4)
          else:
            s_h, s_w = self.output_height, self.output_width
            s_h2, s_h4 = int(s_h/2), int(s_h/4)
            s_w2, s_w4 = int(s_w/2), int(s_w/4)

            # yb = tf.reshape(y, [-1, 1, 1, self.y_dim])
            yb = tf.reshape(y, [self.batch_size, 1, 1, self.y_dim])
            z = concat([z, y], 1)

            h0 = tf.nn.relu(self.g_bn0(linear(z, self.gfc_dim, 'g_h0_lin'), train=False))
            h0 = concat([h0, y], 1)

            h1 = tf.nn.relu(self.g_bn1(
                linear(h0, self.gf_dim*2*s_h4*s_w4, 'g_h1_lin'), train=False))
            h1 = tf.reshape(h1, [self.batch_size, s_h4, s_w4, self.gf_dim * 2])
            h1 = conv_cond_concat(h1, yb)

            h2 = tf.nn.relu(self.g_bn2(
                deconv2d(h1, [self.batch_size, s_h2, s_w2, self.gf_dim * 2], name='g_h2'), train=False))
            h2 = conv_cond_concat(h2, yb)

            return tf.nn.sigmoid(deconv2d(h2, [self.batch_size, s_h, s_w, self.c_dim], name='g_h3'))

      def load_mnist(self):
        data_dir = os.path.join("./data", self.dataset_name)

        # np.float / np.int were plain aliases of the builtins (removed in recent NumPy),
        # so the builtin float / int are used below
        fd = open(os.path.join(data_dir,'train-images-idx3-ubyte'))
        loaded = np.fromfile(file=fd,dtype=np.uint8)
        trX = loaded[16:].reshape((60000,28,28,1)).astype(float)

        fd = open(os.path.join(data_dir,'train-labels-idx1-ubyte'))
        loaded = np.fromfile(file=fd,dtype=np.uint8)
        trY = loaded[8:].reshape((60000)).astype(float)

        fd = open(os.path.join(data_dir,'t10k-images-idx3-ubyte'))
        loaded = np.fromfile(file=fd,dtype=np.uint8)
        teX = loaded[16:].reshape((10000,28,28,1)).astype(float)

        fd = open(os.path.join(data_dir,'t10k-labels-idx1-ubyte'))
        loaded = np.fromfile(file=fd,dtype=np.uint8)
        teY = loaded[8:].reshape((10000)).astype(float)

        trY = np.asarray(trY)
        teY = np.asarray(teY)

        X = np.concatenate((trX, teX), axis=0)
        y = np.concatenate((trY, teY), axis=0).astype(int)

        # shuffle images and labels with the same seed so they stay aligned
        seed = 547
        np.random.seed(seed)
        np.random.shuffle(X)
        np.random.seed(seed)
        np.random.shuffle(y)

        # one-hot encode the labels
        y_vec = np.zeros((len(y), self.y_dim), dtype=float)
        for i, label in enumerate(y):
          y_vec[i,y[i]] = 1.0

        return X/255.,y_vec

      @property
      def model_dir(self):
        return "{}_{}_{}_{}".format(
            self.dataset_name, self.batch_size,
            self.output_height, self.output_width)

      def save(self, checkpoint_dir, step):
        model_name = "DCGAN.model"
        checkpoint_dir = os.path.join(checkpoint_dir, self.model_dir)

        if not os.path.exists(checkpoint_dir):
          os.makedirs(checkpoint_dir)

        self.saver.save(self.sess,
                os.path.join(checkpoint_dir, model_name),
                global_step=step)

      def load(self, checkpoint_dir):
        import re
        print(" [*] Reading checkpoints...")
        checkpoint_dir = os.path.join(checkpoint_dir, self.model_dir)

        ckpt = tf.train.get_checkpoint_state(checkpoint_dir)
        if ckpt and ckpt.model_checkpoint_path:
          ckpt_name = os.path.basename(ckpt.model_checkpoint_path)
          self.saver.restore(self.sess, os.path.join(checkpoint_dir, ckpt_name))
          counter = int(next(re.finditer(r"(\d+)(?!.*\d)",ckpt_name)).group(0))
          print(" [*] Success to read {}".format(ckpt_name))
          return True, counter
        else:
          print(" [*] Failed to find a checkpoint")
          return False, 0

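model.py defines the DCGAN class, which contains nine methods plus the model_dir property.

__init__(): initializes parameters

This method initializes the parameters. input_height, input_width, crop, batch_size, output_height, output_width, dataset_name, input_fname_pattern, checkpoint_dir, and sample_dir correspond to the flags described in the main.py section, so they are not repeated here.

sample_num: same size as batch_size

y_dim: the dimension of the label vector y. When training on MNIST, y_dim=10, because MNIST images are digits that fall into 10 classes. For any other dataset it defaults to None.

z_dim: the dimension of the noise z, default 100

gf_dim: number of filters in the first convolutional layer of G, default 64

df_dim: number of filters in the first convolutional layer of D, default 64

gfc_dim: number of units in the fully connected layer of G, default 1024

dfc_dim: number of units in the fully connected layer of D, default 1024

c_dim: number of color channels, 1 for grayscale images and 3 for color images, default 3

self.d_bn1, self.d_bn2, self.g_bn0, self.g_bn1, and self.g_bn2 are batch normalization layers; see the batch_norm(object) class in ops.py.

When y_dim is not set (that is, for any dataset other than MNIST), d_bn3 and g_bn3 are created as batch_norm layers as well.

self.data holds the list of image files in the dataset.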

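build_model(): builds the model

inputs has shape [batch_size, input_height, input_width, c_dim].

If crop=True, inputs has shape [batch_size, output_height, output_width, c_dim].

There are two kinds of input: the training input inputs and the sample input sample_inputs.

The noise z has shape [None, z_dim]; the leading None is the batch size.

Next, the networks are wired together: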

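    # returns a tensor of shape [batch_size, output_height, output_width, c_dim], i.e. batch_size images
    self.G = self.generator(self.z)
    # D is the sigmoid probability that the input is a real sample; D_logits is the value before the sigmoid
    self.D, self.D_logits = self.discriminator(inputs)
    # the test-time path: samples through the G network; the code closely mirrors G, minus G's training-mode behavior
    self.sampler = self.sampler(self.z)
    self.D_, self.D_logits_ = self.discriminator(self.G, reuse=True)
    # D is the output for real data, D_ is the output for fake data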

The losses are computed with cross-entropy. There are three: d_loss_real, d_loss_fake, and g_loss.

    self.d_loss_real = tf.reduce_mean(
      sigmoid_cross_entropy_with_logits(self.D_logits, tf.ones_like(self.D)))
    self.d_loss_fake = tf.reduce_mean(
      sigmoid_cross_entropy_with_logits(self.D_logits_, tf.zeros_like(self.D_)))
    self.g_loss = tf.reduce_mean(
      sigmoid_cross_entropy_with_logits(self.D_logits_, tf.ones_like(self.D_)))

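tf.ones_like: creates a new tensor with the same shape as the given tensor, with every element set to 1.

d_loss_real is the loss on real inputs. Minimizing it pushes D (the sigmoid of D_logits) toward 1, so that D recognizes real samples as real.

d_loss_fake is the loss on fake inputs. Minimizing it pushes D_ (the sigmoid of D_logits_) toward 0, so that D recognizes fake samples as fake.

d_loss = d_loss_real + d_loss_fake is the objective of D, which minimizes this loss.

g_loss measures how well fake samples pass as real ones in the eyes of D. G tries to lower this loss, while D tries to raise it.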
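To make the three losses concrete, here is a small NumPy sketch. It is not part of the repository: the helper name sigmoid_xent and the example logits are made up for illustration. It uses the numerically stable form max(x, 0) - x*z + log(1 + exp(-|x|)), which is the formula documented for tf.nn.sigmoid_cross_entropy_with_logits.

```python
import numpy as np


def sigmoid_xent(logits, labels):
    """Element-wise sigmoid cross-entropy, computed in a numerically stable way."""
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))


# Hypothetical discriminator logits for 4 real and 4 generated images.
d_logits_real = np.array([2.0, 1.5, 3.0, 0.5])
d_logits_fake = np.array([-1.0, -2.5, 0.3, -0.7])

# D wants real images labeled 1 and generated images labeled 0.
d_loss_real = sigmoid_xent(d_logits_real, np.ones_like(d_logits_real)).mean()
d_loss_fake = sigmoid_xent(d_logits_fake, np.zeros_like(d_logits_fake)).mean()
d_loss = d_loss_real + d_loss_fake

# G wants D to label the same generated images as real (label 1).
g_loss = sigmoid_xent(d_logits_fake, np.ones_like(d_logits_fake)).mean()

print("d_loss_real=%.4f d_loss_fake=%.4f d_loss=%.4f g_loss=%.4f"
      % (d_loss_real, d_loss_fake, d_loss, g_loss))
```

With these example logits D is mostly right about the fake images, so d_loss_fake is small while g_loss is large: the two terms pull on the same logits in opposite directions.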

The summary steps are only for visualization, so they are skipped here.

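train()

d_loss and g_loss are minimized with the Adam optimizer.

sample_z is drawn from a uniform distribution between -1 and 1, with shape [sample_num, z_dim].

The original samples sample are read from disk, with shape [sample_num, output_height, output_width, c_dim].

Training then runs for epoch epochs:

The data is split into batch_idxs iterations, each training on batch_size samples. The images loaded for one iteration are batch_images.

batch_z is the training noise, with shape [batch_size, z_dim].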
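As a quick sanity check on these quantities, the sketch below works out how many iterations one epoch would contain. It assumes that all 5,238 detected faces end up in the dataset folder and that the default flag values are used; neither is guaranteed for a particular run.

```python
import numpy as np

num_images = 5238      # faces reported after detection; assumed to all be in the dataset folder
train_size = np.inf    # default of the train_size flag
batch_size = 64        # default of the batch_size flag
z_dim = 100            # default noise dimension

# Same expression train() uses for non-MNIST datasets.
batch_idxs = int(min(num_images, train_size) // batch_size)
leftover = num_images - batch_idxs * batch_size   # images that do not fill a whole batch

# One batch of training noise, drawn the same way as batch_z.
batch_z = np.random.uniform(-1, 1, [batch_size, z_dim]).astype(np.float32)

print(batch_idxs, leftover, batch_z.shape)
```

Because of the integer division, the images that do not fill a complete batch are simply not used in that epoch.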

    d_optim = tf.train.AdamOptimizer(config.learning_rate, beta1=config.beta1) \
              .minimize(self.d_loss, var_list=self.d_vars)
    g_optim = tf.train.AdamOptimizer(config.learning_rate, beta1=config.beta1) \
              .minimize(self.g_loss, var_list=self.g_vars)

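First, the noise z and batch_images are fed in, and d_optim is run to update the D network.

Then the noise z is fed in, and g_optim is run to update the G network. G is updated twice so that d_loss does not drop to zero. This differs from the paper.

During training, a grid of generated images is saved every 100 iterations so that progress can be checked.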

if np.mod(counter, 100) == 1
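The bookkeeping in train() can be replayed without TensorFlow. The sketch below is only an illustration: run_schedule is a made-up helper that counts updates and records at which counter values a sample grid or a checkpoint would be written, using the same counter logic as the code above (counter starts at 1 when no checkpoint is loaded).

```python
def run_schedule(num_steps):
    """Replay train()'s counter logic without any TensorFlow calls."""
    counter = 1
    d_updates = 0
    g_updates = 0
    sample_steps = []
    save_steps = []
    for _ in range(num_steps):
        d_updates += 1          # one d_optim run per batch
        g_updates += 2          # g_optim is run twice per batch
        counter += 1
        if counter % 100 == 1:
            sample_steps.append(counter)   # a sample grid would be saved here
        if counter % 500 == 2:
            save_steps.append(counter)     # a checkpoint would be saved here
    return d_updates, g_updates, sample_steps, save_steps


d_updates, g_updates, sample_steps, save_steps = run_schedule(600)
print(d_updates, g_updates)
print(sample_steps)
print(save_steps)
```

Note that the first checkpoint is written right after the very first batch (counter equals 2), and then once every 500 iterations.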

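discriminator()

The code defines its own conv2d, a slightly modified wrapper around tf.nn.conv2d. tf.nn.conv2d works as follows:

    tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, name=None)

Apart from name, which sets the name of the operation, there are five parameters:

input: the input image to convolve. It must be a Tensor of shape [batch, in_height, in_width, in_channels], meaning [number of images in a training batch, image height, image width, number of image channels]. Note that this is a 4-D Tensor, and its type must be float32 or float64.

filter: the convolution kernel of the CNN. It must be a Tensor of shape [filter_height, filter_width, in_channels, out_channels], meaning [kernel height, kernel width, number of image channels, number of kernels]. Its type must match input. Note that the third dimension, in_channels, is the fourth dimension of input.

strides: the stride of the convolution along each dimension of the image, a 1-D vector of length 4.

padding: a string, which must be either "SAME" or "VALID". This value selects between the two convolution modes.

use_cudnn_on_gpu: a bool that controls whether cuDNN acceleration is used, default true.

The result is a Tensor, which is what we usually call the feature map.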
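The practical difference between "SAME" and "VALID" is the size of the output feature map. The sketch below applies the output-size rules documented for TensorFlow convolutions along one spatial axis. The 5x5 kernel and stride 2 are assumptions about the conv2d wrapper in ops.py, and conv_out_size is a made-up helper name.

```python
import math


def conv_out_size(size, kernel, stride, padding):
    """Output length along one spatial axis for TensorFlow-style padding."""
    if padding == "SAME":
        # the input is zero-padded so that only the stride shrinks the output
        return math.ceil(size / stride)
    if padding == "VALID":
        # no padding: the kernel must fit entirely inside the input
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


# A 48x48 input passed through four stride-2 convolutions with "SAME" padding.
size = 48
same_sizes = []
for _ in range(4):
    size = conv_out_size(size, kernel=5, stride=2, padding="SAME")
    same_sizes.append(size)

valid_first = conv_out_size(48, kernel=5, stride=2, padding="VALID")

print(same_sizes)    # [24, 12, 6, 3]
print(valid_first)   # 22
```

With "SAME" padding each stride-2 layer simply halves the spatial size, which matches what conv_out_size_same computes in model.py.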

batch_norm(object)

The code of tf.contrib.layers.batch_norm is at https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/layers/python/layers/layers.py

Batch normalization comes from http://arxiv.org/abs/1502.03167

It speeds up training.
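For intuition, the training-time computation of batch normalization can be written in a few lines of NumPy. This is a minimal sketch, not the implementation in ops.py: it leaves out the moving averages used at test time, and the function name, epsilon value, and input shape are assumptions chosen for illustration.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Normalize each channel over the batch and spatial axes (NHWC layout)."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta   # learnable scale and shift


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(64, 6, 6, 8))   # hypothetical feature map
y = batch_norm_train(x, gamma=np.ones(8), beta=np.zeros(8))

print(y.mean(axis=(0, 1, 2)).round(3))   # per-channel means, close to 0
print(y.std(axis=(0, 1, 2)).round(3))    # per-channel standard deviations, close to 1
```

Whatever the scale of the incoming activations, each channel leaves the layer with roughly zero mean and unit variance before gamma and beta are applied.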

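The activation function lrelu is defined in ops.py. The image goes through four convolutions, each followed by the activation; in three of them, batch normalization is applied between the convolution and the activation. The result is then flattened and passed through a linear layer, and the function returns the sigmoid of that output together with the raw logits. The fully connected step from h3 to h4 is this linear layer: a weight matrix maps the flattened h3 to h4, which holds one value per image in the batch (shape [batch_size, 1]).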
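A leaky ReLU keeps a small slope for negative inputs instead of zeroing them out. The NumPy sketch below illustrates the idea; the leak value of 0.2 is an assumption about the default in ops.py.

```python
import numpy as np


def lrelu(x, leak=0.2):
    """Leaky ReLU: identity for positive inputs, slope `leak` for negative ones."""
    return np.maximum(x, leak * x)


x = np.array([-2.0, -0.5, 0.0, 1.5])
out = lrelu(x)
print(out)
```

Negative inputs are scaled by the leak factor rather than clipped to zero, so gradients still flow through them.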

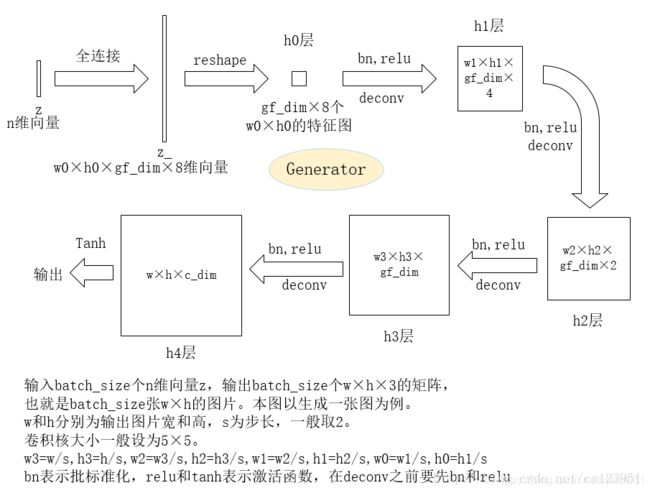

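generator()

self.h0 = tf.reshape(self.z_, [-1, s_h16, s_w16, self.gf_dim * 8]) changes the shape of z_. The -1 means that we do not specify the size of that dimension ourselves; the function works it out automatically. Only one -1 may appear in the list (with more than one, the equation would have multiple solutions).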
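NumPy's reshape follows the same -1 rule, so the behavior can be tried without TensorFlow. The sizes below are assumptions for illustration: a batch of 64, gf_dim of 64, and a 3x3 starting feature map (a 48x48 output divided by 16).

```python
import numpy as np

batch, s_h16, s_w16, gf_dim = 64, 3, 3, 64

# Stand-in for the output of the linear projection of z.
z_ = np.zeros((batch, gf_dim * 8 * s_h16 * s_w16), dtype=np.float32)

# The -1 is inferred from the total number of elements: here it becomes 64.
h0 = z_.reshape(-1, s_h16, s_w16, gf_dim * 8)
print(h0.shape)

# Two unknown dimensions cannot be solved for, so this is rejected.
try:
    z_.reshape(-1, -1, s_w16, gf_dim * 8)
    two_unknowns_rejected = False
except ValueError as err:
    two_unknowns_rejected = True
    print("rejected:", err)
```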

deconv2d()

It wraps TensorFlow's transposed convolution, tf.nn.conv2d_transpose or tf.nn.deconv2d. Taking tf.nn.conv2d_transpose as an example:

    def conv2d_transpose(value, filter, output_shape, strides, padding="SAME", data_format="NHWC", name=None):

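- value: a 4-D tensor of shape [batch, height, width, in_channels] or [batch, in_channels, height, width].

- filter: a 4-D tensor of shape [height, width, output_channels, in_channels]. The in_channels dimension of the filter must match that of value.

- output_shape: a 1-D tensor giving the output shape of the transposed convolution.

- strides: the stride of the sliding window for each dimension of the input tensor.

- padding: "VALID" or "SAME", the padding algorithm.

- data_format: "NHWC" or "NCHW", the data format of value.

- name: optional, the name of the returned tensor.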

deconv= tf.nn.conv2d_transpose(input_, w, output_shape=output_shape,strides=[1,d_h, d_w, 1])

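The first argument is the input, that is, the output of the previous layer.

The second argument, w, is the filter. output_shape is the shape of the output feature map, given as four values.

strides is the stride of the kernel, [1, d_h, d_w, 1]. The first value is how many images in the batch to skip at a time, the second (d_h) is how many rows of the image to skip, the third (d_w) is how many columns to skip, and the fourth is how many channels to skip. Here it is simply set to [1, 2, 2, 1]. So every transposed convolution uses a stride of 2, and the area of the feature map grows by a factor of 2*2=4.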
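The doubling can be traced layer by layer. The sketch below reuses conv_out_size_same from model.py to list the feature-map shapes of the unconditional generator; generator_shapes is a made-up helper, and the 48x48 output size is taken from the training command used in this article.

```python
import math


def conv_out_size_same(size, stride):
    return int(math.ceil(float(size) / float(stride)))


def generator_shapes(output_height, output_width, gf_dim=64, c_dim=3, batch_size=64):
    """Shapes of h0..h4 in the unconditional generator, smallest first."""
    s_h, s_w = output_height, output_width
    sizes = [(s_h, s_w)]
    for _ in range(4):
        # work backwards from the output: each layer halves the size
        s_h, s_w = conv_out_size_same(s_h, 2), conv_out_size_same(s_w, 2)
        sizes.append((s_h, s_w))
    sizes.reverse()
    channels = [gf_dim * 8, gf_dim * 4, gf_dim * 2, gf_dim, c_dim]
    return [(batch_size, h, w, c) for (h, w), c in zip(sizes, channels)]


shapes = generator_shapes(48, 48)
for name, shape in zip(["h0", "h1", "h2", "h3", "h4"], shapes):
    print(name, shape)
```

Each step doubles the height and width while the number of channels is halved, until the last layer produces the c_dim image channels.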

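sampler()

It has the same structure as generator and reuses the generator's variables (scope.reuse_variables()), with batch normalization called with train=False. Perhaps its purpose is to share parameters?

Replacing self.sampler = self.sampler(self.z, self.y) with self.sampler = self.generator(self.z, self.y) raises an error, so sampler does serve a purpose.

load_mnist(), save(), load()

These three loading and saving helpers are not covered in detail.

download.py and ops.py do not seem to need much explanation either.

utils.py contains visualization and other helper functions.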

- main.py

    import os
    import scipy.misc
    import numpy as np

    from model import DCGAN
    from utils import pp, visualize, to_json, show_all_variables

    import tensorflow as tf

    flags = tf.app.flags
    # number of training epochs
    flags.DEFINE_integer("epoch", 25, "Epoch to train [25]")
    flags.DEFINE_float("learning_rate", 0.0002, "Learning rate of for adam [0.0002]")
    flags.DEFINE_float("beta1", 0.5, "Momentum term of adam [0.5]")
    flags.DEFINE_float("train_size", np.inf, "The size of train images [np.inf]")
    flags.DEFINE_integer("batch_size", 64, "The size of batch images [64]")
    flags.DEFINE_integer("input_height", 108, "The size of image to use (will be center cropped). [108]")
    flags.DEFINE_integer("input_width", None, "The size of image to use (will be center cropped). If None, same value as input_height [None]")
    flags.DEFINE_integer("output_height", 64, "The size of the output images to produce [64]")
    flags.DEFINE_integer("output_width", None, "The size of the output images to produce. If None, same value as output_height [None]")
    flags.DEFINE_string("dataset", "celebA", "The name of dataset [celebA, mnist, lsun]")
    flags.DEFINE_string("input_fname_pattern", "*.jpg", "Glob pattern of filename of input images [*]")
    flags.DEFINE_string("checkpoint_dir", "checkpoint", "Directory name to save the checkpoints [checkpoint]")
    flags.DEFINE_string("sample_dir", "samples", "Directory name to save the image samples [samples]")
    flags.DEFINE_boolean("train", False, "True for training, False for testing [False]")
    flags.DEFINE_boolean("crop", False, "True for training, False for testing [False]")
    flags.DEFINE_boolean("visualize", False, "True for visualizing, False for nothing [False]")
    FLAGS = flags.FLAGS

    def main(_):
      # print the parameters
      pp.pprint(flags.FLAGS.__flags)

      if FLAGS.input_width is None:
        FLAGS.input_width = FLAGS.input_height
      if FLAGS.output_width is None:
        FLAGS.output_width = FLAGS.output_height

      # create the checkpoint and sample directories if they do not exist
      if not os.path.exists(FLAGS.checkpoint_dir):
        os.makedirs(FLAGS.checkpoint_dir)
      if not os.path.exists(FLAGS.sample_dir):
        os.makedirs(FLAGS.sample_dir)

      # session settings: tf.ConfigProto() is typically used to configure a session when creating it
      # see https://blog.csdn.net/u012436149/article/details/53837651
      #gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)
      run_config = tf.ConfigProto()
      run_config.gpu_options.allow_growth=True

      with tf.Session(config=run_config) as sess:
        # MNIST dataset
        if FLAGS.dataset == 'mnist':
          dcgan = DCGAN(
              sess,
              input_width=FLAGS.input_width,
              input_height=FLAGS.input_height,
              output_width=FLAGS.output_width,
              output_height=FLAGS.output_height,
              batch_size=FLAGS.batch_size,
              sample_num=FLAGS.batch_size,
              y_dim=10,
              dataset_name=FLAGS.dataset,
              input_fname_pattern=FLAGS.input_fname_pattern,
              crop=FLAGS.crop,
              checkpoint_dir=FLAGS.checkpoint_dir,
              sample_dir=FLAGS.sample_dir)
        else:
          # any other dataset
          dcgan = DCGAN(
              sess,
              input_width=FLAGS.input_width,
              input_height=FLAGS.input_height,
              output_width=FLAGS.output_width,
              output_height=FLAGS.output_height,
              batch_size=FLAGS.batch_size,
              sample_num=FLAGS.batch_size,
              dataset_name=FLAGS.dataset,
              input_fname_pattern=FLAGS.input_fname_pattern,
              crop=FLAGS.crop,
              checkpoint_dir=FLAGS.checkpoint_dir,
              sample_dir=FLAGS.sample_dir)

        show_all_variables()

        if FLAGS.train:
          dcgan.train(FLAGS)
        else:
          if not dcgan.load(FLAGS.checkpoint_dir)[0]:
            raise Exception("[!] Train a model first, then run test mode")

        # to_json("./web/js/layers.js", [dcgan.h0_w, dcgan.h0_b, dcgan.g_bn0],
        #                 [dcgan.h1_w, dcgan.h1_b, dcgan.g_bn1],
        #                 [dcgan.h2_w, dcgan.h2_b, dcgan.g_bn2],
        #                 [dcgan.h3_w, dcgan.h3_b, dcgan.g_bn3],
        #                 [dcgan.h4_w, dcgan.h4_b, None])

        # Below is codes for visualization
        OPTION = 2
        visualize(sess, dcgan, FLAGS, OPTION)

    if __name__ == '__main__':
      tf.app.run()

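Notes on main.py

This is the entry point. It defines the values of the required parameters up front.

FLAGS parameters

epoch: number of training epochs, default 25

learning_rate: learning rate for Adam, default 0.0002

beta1: momentum term of Adam, default 0.5

train_size: number of training images, default np.inf

batch_size: number of images per batch, default 64. The generated images are later tiled into a single image, so batch_size is best chosen as a perfect square, such as 64 or 36.

input_height: height of the images used as input (they will be center cropped), default 108

input_width: width of the images used as input (they will be center cropped); if not specified, it defaults to the value of input_height

output_height: height of the generated images, default 64

output_width: width of the generated images; if not specified, it defaults to the value of output_height

dataset: name of the dataset, located inside the data folder. The choices are celebA, mnist, and lsun. You can also download your own images and put the folder inside data.

input_fname_pattern: glob pattern for the input image file names, default *.jpg

checkpoint_dir: name of the directory where checkpoints are stored, default checkpoint

sample_dir: name of the directory where generated images are stored, default samples

train: True for training, False for testing, default False

crop: whether the input images are center cropped (the flag's help text reads "True for training, False for testing"), default False

visualize: True to visualize, False to skip visualization, default False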
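The advice to pick a perfect square for batch_size comes from how the samples are tiled into a grid. The sketch below shows one plausible way to choose the grid; grid_size is a hypothetical helper, and the actual image_manifold_size in utils.py may be implemented differently.

```python
import math


def grid_size(num_images):
    """Pick a near-square grid (rows, cols) that holds exactly num_images tiles."""
    rows = int(math.floor(math.sqrt(num_images)))
    cols = int(math.ceil(math.sqrt(num_images)))
    if rows * cols != num_images:
        raise ValueError("%d images do not fill a %dx%d grid" % (num_images, rows, cols))
    return rows, cols


print(grid_size(64))   # (8, 8)
print(grid_size(36))   # (6, 6)

try:
    grid_size(50)
    fifty_fits = True
except ValueError as err:
    fifty_fits = False
    print(err)
```

Under this rule a batch size such as 50 cannot be tiled, which is why values like 64 or 36 are the safe choice.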

- Training

Create the data/faces directory, put the avatar images into it, and run:

python main.py --dataset faces --input_height 96 --input_width 96 --output_height 48 --output_width 48 --crop --train --epoch 20 --input_fname_pattern "*.jpg"