CUDA Programming Series: From Getting Started to Giving Up

This post learns CUDA mainly through code. I won't go over the basics one by one, since plenty of experts have already explained them in detail.

Bong bong bong, let's get started!

1. Integer addition

To have the GPU add two integers, a single block with a single thread is enough.
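The demo code in this section ignores the return values of the CUDA calls, and a kernel launch reports nothing by itself. As a hedged sketch, the same one-block, one-thread addition with basic error checking might look like this. `CHECK_CUDA`, `addKernel` and `addOnGpu` are hypothetical names introduced for illustration; `cudaGetLastError` and `cudaGetErrorString` are standard CUDA runtime functions.

```cuda
#include <cassert>   // assert(), used in the usage check
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper macro (not part of the CUDA API): print the error and
// exit if a CUDA runtime call does not return cudaSuccess.
#define CHECK_CUDA(call)                                                  \
    do {                                                                  \
        cudaError_t err_ = (call);                                        \
        if (err_ != cudaSuccess) {                                        \
            fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,            \
                    cudaGetErrorString(err_));                            \
            exit(EXIT_FAILURE);                                           \
        }                                                                 \
    } while (0)

// A single thread computes *c = *a + *b.
__global__ void addKernel(const int *a, const int *b, int *c) {
    *c = *a + *b;
}

// Hypothetical host wrapper: adds two ints on the GPU with <<<1, 1>>>.
int addOnGpu(int a, int b) {
    int *devA = nullptr, *devB = nullptr, *devC = nullptr;
    // The templated cudaMalloc overload in cuda_runtime.h avoids the (void**) cast.
    CHECK_CUDA(cudaMalloc(&devA, sizeof(int)));
    CHECK_CUDA(cudaMalloc(&devB, sizeof(int)));
    CHECK_CUDA(cudaMalloc(&devC, sizeof(int)));
    CHECK_CUDA(cudaMemcpy(devA, &a, sizeof(int), cudaMemcpyHostToDevice));
    CHECK_CUDA(cudaMemcpy(devB, &b, sizeof(int), cudaMemcpyHostToDevice));

    addKernel<<<1, 1>>>(devA, devB, devC);  // one block, one thread
    CHECK_CUDA(cudaGetLastError());         // reports launch-configuration errors

    int c = 0;
    // This copy waits for the kernel to finish; errors raised while the
    // kernel ran are reported here.
    CHECK_CUDA(cudaMemcpy(&c, devC, sizeof(int), cudaMemcpyDeviceToHost));

    CHECK_CUDA(cudaFree(devA));
    CHECK_CUDA(cudaFree(devB));
    CHECK_CUDA(cudaFree(devC));
    return c;
}
```

For example, `addOnGpu(10, 20)` should return 30, the same value `firstDemo()` prints. Checking `cudaGetLastError()` right after the launch catches configuration problems such as an invalid block size; errors that occur while the kernel runs only surface at the next synchronizing call, here the device-to-host `cudaMemcpy`.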

The code is as follows:

```cuda
#include <cstdio>
#include <cuda_runtime.h>

__global__ void add(int *a, int *b, int *c) {
    *c = *a + *b;
}

void firstDemo() {
    // host values of a, b, c
    int hA = 10, hB = 20, hC = 0;

    // device pointers for a, b, c
    int *devA, *devB, *devC;

    // allocate device memory for a, b, c (cudaMalloc takes a void**)
    cudaMalloc((void**)&devA, sizeof(int));
    cudaMalloc((void**)&devB, sizeof(int));
    cudaMalloc((void**)&devC, sizeof(int));

    // copy host values to the device (pass the address of each scalar, e.g. &hA)
    cudaMemcpy(devA, &hA, sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(devB, &hB, sizeof(int), cudaMemcpyHostToDevice);

    // launch the add() kernel on the GPU: 1 block, 1 thread
    add<<<1, 1>>>(devA, devB, devC);

    // copy the result back from the device
    cudaMemcpy(&hC, devC, sizeof(int), cudaMemcpyDeviceToHost);
    printf("C=%d\n", hC);

    // free the device memory
    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devC);
}
```

2. One-dimensional array addition

Building on the example above, we switch from integers to arrays and put only one thread in each block (coarse-grained parallelism at the block level), launching N blocks. The kernel therefore indexes the arrays with blockIdx.x.

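Note how the function arguments change when moving from integers to arrays, in particular whether the address-of operator is needed: for a scalar, cudaMemcpy needs `&hA`, whereas an array allocated with malloc is already a pointer, so `hA` is passed directly.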
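Launching N blocks of one thread each leaves most of each block idle, because threads are scheduled in groups of 32 (warps). A more common layout combines both indices into one global index and guards against running past the end of the arrays. The sketch below assumes a block size of 256; `vectorAddGeneral` and `vectorAddOnGpu` are hypothetical names, and error checking is left out for brevity.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Each thread handles one element. The global index combines the block index
// and the thread index inside the block:
//   <<<N, 1>>> (blockDim.x == 1) -> i == blockIdx.x   (the layout used above)
//   <<<1, N>>> (a single block)  -> i == threadIdx.x
__global__ void vectorAddGeneral(const int *a, const int *b, int *c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {  // the last block may contain threads past the end of the arrays
        c[i] = a[i] + b[i];
    }
}

// Hypothetical host wrapper: returns a + b element-wise (no error checking).
std::vector<int> vectorAddOnGpu(const std::vector<int> &a, const std::vector<int> &b) {
    assert(a.size() == b.size());
    const int n = static_cast<int>(a.size());
    std::vector<int> c(n);
    if (n == 0) return c;  // launching 0 blocks would be an invalid configuration
    const size_t bytes = n * sizeof(int);

    int *devA, *devB, *devC;
    cudaMalloc(&devA, bytes);
    cudaMalloc(&devB, bytes);
    cudaMalloc(&devC, bytes);
    cudaMemcpy(devA, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(devB, b.data(), bytes, cudaMemcpyHostToDevice);

    const int threadsPerBlock = 256;  // assumed block size (must not exceed 1024)
    const int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
    vectorAddGeneral<<<blocks, threadsPerBlock>>>(devA, devB, devC, n);

    cudaMemcpy(c.data(), devC, bytes, cudaMemcpyDeviceToHost);
    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devC);
    return c;
}
```

With 1000 elements, for instance, this launches 4 blocks of 256 threads; the 24 surplus threads in the last block fail the `i < n` test and do nothing.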

The code is as follows:

__global__ void vectorAdd(int *a, int *b, int *c) {

//use blockidx.x to access block's index

c[blockIdx.x] = a[blockIdx.x] + b[blockIdx.x];

}

#define N 100

void secondDemo() {

//host value of a b c

int *hA, *hB, *hC;

size_t size = N * sizeof(int);

hA = (int*)malloc(size);

hB = (int*)malloc(size);

hC = (int*)malloc(size);

//srand( (unsigned) time(NULL) );

for (int i = 0; i < N; i++) {

hA[i] = rand()%20;

hB[i] = rand() % 20;

}

printf("A:\n");

for (int i = 0; i < N; i++) {

printf("%5d", hA[i]);

}

printf("\nB:\n");

for (int i = 0; i < N; i++) {

printf("%5d", hB[i]);

}

printf("\n");

//device value of a b c

int *devA, *devB, *devC;

//allocate device memery of a b c (use void**)

cudaMalloc((void**)&devA, size);

cudaMalloc((void**)&devB, size);

cudaMalloc((void**)&devC, size);

//copy host value to device (use hA address &hA)

cudaMemcpy(devA, hA, size, cudaMemcpyHostToDevice);

cudaMemcpy(devB, hB, size, cudaMemcpyHostToDevice);

//launch add() kernel on GPU

vectorAdd << > > (devA, devB, devC);

//return the answer from the device

cudaMemcpy(hC, devC, size, cudaMemcpyDeviceToHost);

printf("C:\n");

for (int i = 0; i < N; i++) {

printf("%5d", hC[i]);

}

printf("\n");

//Free the device memory

cudaFree(devA);

cudaFree(devB);

cudaFree(devC);

free(hA);

free(hB);

free(hC);

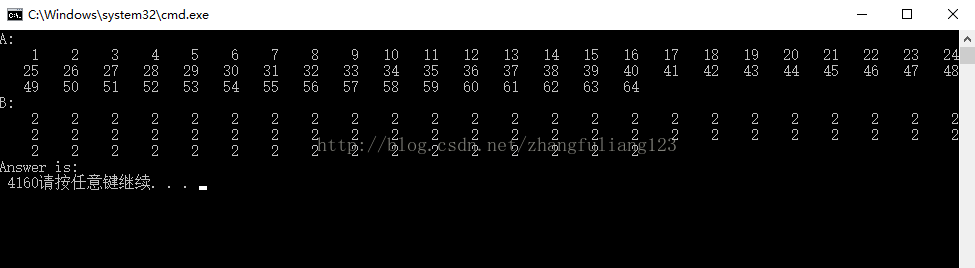

} 三、一维数组点乘求和-使用共享内存

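This example uses a single block. Inside that block it declares a shared-memory array; each thread writes its product a[i] * b[i] into the element selected by its thread index, the barrier `__syncthreads()` makes every thread wait until all products have been stored, and thread 0 then adds them up. Because only one block is used, N cannot exceed the per-block limit of 1024 threads.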

kernel:

```cuda
__global__ void dotProduct(int *a, int *b, int *c) {
    // one shared-memory slot per thread of the (single) block
    __shared__ int shareArray[N];
    shareArray[threadIdx.x] = a[threadIdx.x] * b[threadIdx.x];

    // barrier: wait until every thread has stored its product
    __syncthreads();

    // thread 0 adds up all the products
    if (0 == threadIdx.x) {
        int sum = 0;
        for (int i = 0; i < N; i++) {
            sum += shareArray[i];
        }
        *c = sum;
    }
}

void thirdDemo_SharMem() {
    // host arrays a, b and the scalar result
    int *hA, *hB, *hCone;
    size_t size = N * sizeof(int);
    hA = (int*)malloc(size);
    hB = (int*)malloc(size);
    hCone = (int*)malloc(sizeof(int));

    for (int i = 0; i < N; i++) {
        hA[i] = i + 1;
        hB[i] = 2;
    }

    printf("A:\n");
    for (int i = 0; i < N; i++) {
        printf("%5d", hA[i]);
    }
    printf("\nB:\n");
    for (int i = 0; i < N; i++) {
        printf("%5d", hB[i]);
    }
    printf("\n");

    // device arrays a, b and the device result
    int *devA, *devB, *devC;

    // allocate device memory (cudaMalloc takes a void**)
    cudaMalloc((void**)&devA, size);
    cudaMalloc((void**)&devB, size);
    cudaMalloc((void**)&devC, sizeof(int));

    // copy host arrays to the device
    cudaMemcpy(devA, hA, size, cudaMemcpyHostToDevice);
    cudaMemcpy(devB, hB, size, cudaMemcpyHostToDevice);

    // launch dotProduct() with one block of N threads (N must not exceed 1024)
    dotProduct<<<1, N>>>(devA, devB, devC);

    // copy the result back from the device
    cudaMemcpy(hCone, devC, sizeof(int), cudaMemcpyDeviceToHost);

    printf("C:\n");
    printf("%5d\n", *hCone);  // expected: 10100 = 2 * (1 + 2 + ... + 100)

    // free device and host memory
    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devC);
    free(hA);
    free(hB);
    free(hCone);
}
```
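The demo above has two limits: thread 0 adds the N products one after another, and with a single block N cannot exceed 1024. A common alternative, swapped in here rather than taken from the original, is a tree reduction in shared memory followed by one `atomicAdd` per block into the global result. The sketch assumes a power-of-two block size of 256; `dotProductReduce` and `dotProductOnGpu` are hypothetical names, and error checking is omitted.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

constexpr int kThreads = 256;  // assumed block size
static_assert((kThreads & (kThreads - 1)) == 0, "the tree reduction needs a power of two");

__global__ void dotProductReduce(const int *a, const int *b, int *result, int n) {
    __shared__ int partial[kThreads];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;

    // Each thread stores its product; threads past the end contribute 0.
    partial[tid] = (i < n) ? a[i] * b[i] : 0;
    __syncthreads();

    // Tree reduction: each step halves the number of active threads.
    for (int stride = kThreads / 2; stride > 0; stride /= 2) {
        if (tid < stride) {
            partial[tid] += partial[tid + stride];
        }
        __syncthreads();  // outside the if, so every thread reaches the barrier
    }

    // Thread 0 adds this block's partial sum to the global result.
    if (tid == 0) {
        atomicAdd(result, partial[0]);
    }
}

// Hypothetical host wrapper: returns sum(a[i] * b[i]) computed on the GPU.
int dotProductOnGpu(const std::vector<int> &a, const std::vector<int> &b) {
    assert(a.size() == b.size());
    const int n = static_cast<int>(a.size());
    if (n == 0) return 0;
    const size_t bytes = n * sizeof(int);

    int *devA, *devB, *devResult;
    cudaMalloc(&devA, bytes);
    cudaMalloc(&devB, bytes);
    cudaMalloc(&devResult, sizeof(int));
    cudaMemcpy(devA, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(devB, b.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemset(devResult, 0, sizeof(int));  // atomicAdd accumulates, so start from 0

    const int blocks = (n + kThreads - 1) / kThreads;  // round up
    dotProductReduce<<<blocks, kThreads>>>(devA, devB, devResult, n);

    int result = 0;
    cudaMemcpy(&result, devResult, sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devResult);
    return result;
}
```

With a = 1..100 and every b equal to 2, as in `thirdDemo_SharMem()`, the result is again 10100. Within a block of 256 threads the reduction takes 8 halving steps instead of one thread performing 256 additions, and because each block adds its own partial sum, N is no longer limited by the size of one block.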

4: