PyTorch Basics: GoogLeNet (InceptionNet)

The code in this post has not been debugged.

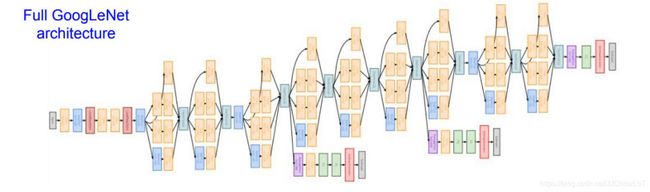

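Key point 1: the structure of GoogLeNet

Key point 2: techniques for writing a large network

Key point 3: batchnorm

Key point 4: s, k, p (stride, kernel size, padding) combinations that leave the image height and width unchanged

Key point 5: torch.cat((), dim=1) for building parallel branches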

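Key point 1

The structure of GoogLeNet resembles an electrical circuit: several groups of parallel branches connected in series.

Key point 2

A large network (GoogLeNet) can first be split into several small networks (inception). Write the small network (inception) first, then assemble the large network (GoogLeNet) out of it. To make the small network (inception) easy to write, first write an even more basic building block (conv_relu), so that the small network (inception) can be configured and called flexibly.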

import torch

import numpy as np

from torch.autograd import Variable

from torchvision.datasets import CIFAR10

from torch import nn

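Key point 3

nn.BatchNorm2d performs batch normalization. It can be understood as a per-channel operation: each channel is normalized separately, using the mean and variance of that channel computed over the batch and the spatial dimensions.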
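To make the per-channel behaviour concrete, here is a small sketch that compares nn.BatchNorm2d in training mode with a manual normalization. The tensor sizes and the random input are assumptions chosen for illustration; the comparison relies on a freshly created layer having scale 1 and shift 0.

```python
import torch
from torch import nn

torch.manual_seed(0)
# Illustrative input: batch of 8, 3 channels, 4x4 feature maps.
x = torch.randn(8, 3, 4, 4) * 5 + 2

bn = nn.BatchNorm2d(3, eps=1e-3)  # one mean/variance pair per channel
bn.train()                        # training mode: use the current batch statistics
y = bn(x)

# Manual version: statistics over batch and spatial dims (0, 2, 3), separately per channel.
mean = x.mean(dim=(0, 2, 3), keepdim=True)
var = x.var(dim=(0, 2, 3), unbiased=False, keepdim=True)
y_manual = (x - mean) / torch.sqrt(var + 1e-3)

max_diff = (y - y_manual).abs().max().item()
print(max_diff)  # expected to be tiny (floating-point noise)
```

The number passed to nn.BatchNorm2d is the channel count, which is why conv_relu passes out_channel to it.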

def conv_relu(in_channel, out_channel, kernel, stride=1, padding=0):
    # Basic building block: convolution -> batch normalization -> ReLU
    layer = nn.Sequential(
        nn.Conv2d(in_channel, out_channel, kernel, stride, padding),
        nn.BatchNorm2d(out_channel, eps=1e-3),
        nn.ReLU(True)
    )
    return layer

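Key point 4

With s = 1, the combinations k = 1 (no padding), k = 3 with padding=1, and k = 5 with padding=2 leave the image height and width unchanged, for both convolution and pooling.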
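These combinations follow from the usual output-size formula for convolution and pooling (with dilation 1 and floor rounding): out = (in + 2p - k) // s + 1. The helper name conv_out_size below is made up for this sketch and is not part of PyTorch.

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

# The three size-preserving combinations from key point 4, on a 96-pixel side.
sizes = []
for kernel, padding in [(1, 0), (3, 1), (5, 2)]:
    out = conv_out_size(96, kernel, stride=1, padding=padding)
    sizes.append(out)
    print('k={}, p={} -> {}'.format(kernel, padding, out))  # 96 each time
```

In general, with s = 1 and an odd kernel size k, padding = (k - 1) / 2 keeps the size unchanged.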

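Key point 5

Use out = torch.cat((f1, f2, f3, f4), dim=1) to build a "parallel" network: the branch outputs are concatenated along the channel dimension. Remember this pattern.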
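A minimal sketch of what the concatenation does to tensor shapes. The channel counts (64, 64, 96, 32) match the verification example in this post; the batch size and the 8x8 spatial size are assumptions chosen to keep the tensors small.

```python
import torch

# Four branch outputs with the same batch size and spatial size,
# but different channel counts (dim=1 is the channel dimension in N x C x H x W).
f1 = torch.zeros(2, 64, 8, 8)
f2 = torch.zeros(2, 64, 8, 8)
f3 = torch.zeros(2, 96, 8, 8)
f4 = torch.zeros(2, 32, 8, 8)

out = torch.cat((f1, f2, f3, f4), dim=1)
print(out.shape)  # torch.Size([2, 256, 8, 8]), since 64 + 64 + 96 + 32 = 256
```

This only works when every branch keeps the same height and width, which is why the branches use the size-preserving settings from key point 4.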

class inception(nn.Module):
    def __init__(self, in_channels, out1_1, out2_1, out2_3, out3_1, out3_5, out4_1):
        super(inception, self).__init__()
        # Branch 1: 1x1 convolution
        self.branch1x1 = conv_relu(in_channels, out1_1, 1)
        # Branch 2: 1x1 convolution followed by 3x3 convolution
        self.branch3x3 = nn.Sequential(
            conv_relu(in_channels, out2_1, 1),
            conv_relu(out2_1, out2_3, 3, padding=1)
        )
        # Branch 3: 1x1 convolution followed by 5x5 convolution
        self.branch5x5 = nn.Sequential(
            conv_relu(in_channels, out3_1, 1),
            conv_relu(out3_1, out3_5, 5, padding=2)
        )
        # Branch 4: 3x3 max pooling followed by 1x1 convolution
        self.branch_pool = nn.Sequential(
            nn.MaxPool2d(3, stride=1, padding=1),
            conv_relu(in_channels, out4_1, 1)
        )

    def forward(self, x):
        f1 = self.branch1x1(x)
        f2 = self.branch3x3(x)
        f3 = self.branch5x5(x)
        f4 = self.branch_pool(x)
        output = torch.cat((f1, f2, f3, f4), dim=1)  # concatenate along channels
        return output

Verification

test_net = inception(3, 64, 48, 64, 64, 96, 32)

test_x = Variable(torch.zeros(5, 3, 96, 96))

print('input shape: {} x {} x {} x {}'.format(test_x.shape[0], test_x.shape[1], test_x.shape[2], test_x.shape[3]))

test_y = test_net(test_x)

print('output shape: {} x {} x {} x {}'.format(test_y.shape[0], test_y.shape[1], test_y.shape[2], test_y.shape[3]))

class googlenet(nn.Module):
    def __init__(self, in_channel, num_classes, verbose=False):
        super(googlenet, self).__init__()
        self.verbose = verbose

        self.block1 = nn.Sequential(
            conv_relu(in_channel, out_channel=64, kernel=7, stride=2, padding=3),
            nn.MaxPool2d(3, 2)
        )

        self.block2 = nn.Sequential(
            conv_relu(64, 64, kernel=1),
            conv_relu(64, 192, kernel=3, padding=1),
            nn.MaxPool2d(3, 2)
        )

        self.block3 = nn.Sequential(
            inception(192, 64, 96, 128, 16, 32, 32),
            inception(256, 128, 128, 192, 32, 96, 64),
            nn.MaxPool2d(3, 2)
        )

        self.block4 = nn.Sequential(
            inception(480, 192, 96, 208, 16, 48, 64),
            inception(512, 160, 112, 224, 24, 64, 64),
            inception(512, 128, 128, 256, 24, 64, 64),
            inception(512, 112, 144, 288, 32, 64, 64),
            inception(528, 256, 160, 320, 32, 128, 128),
            nn.MaxPool2d(3, 2)
        )

        self.block5 = nn.Sequential(
            inception(832, 256, 160, 320, 32, 128, 128),
            inception(832, 384, 192, 384, 48, 128, 128),
            nn.AvgPool2d(2)
        )

        self.classifier = nn.Linear(1024, num_classes)

    def forward(self, x):
        x = self.block1(x)
        if self.verbose:
            print('block 1 output: {}'.format(x.shape))
        x = self.block2(x)
        if self.verbose:
            print('block 2 output: {}'.format(x.shape))
        x = self.block3(x)
        if self.verbose:
            print('block 3 output: {}'.format(x.shape))
        x = self.block4(x)
        if self.verbose:
            print('block 4 output: {}'.format(x.shape))
        x = self.block5(x)
        if self.verbose:
            print('block 5 output: {}'.format(x.shape))
        x = x.view(x.shape[0], -1)  # flatten to (batch, 1024)
        x = self.classifier(x)
        return x

test_net = googlenet(3, 10, True)

test_x = Variable(torch.zeros(1, 3, 96, 96))

test_y = test_net(test_x)

print('output: {}'.format(test_y.shape))
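The classifier is nn.Linear(1024, num_classes), so block 5 has to produce 1024 features per image. The sketch below traces the spatial size of a 96x96 input through the strided layers using the standard output-size formula; the helper name out_size is made up for this illustration, and the inception modules are skipped because they keep height and width unchanged.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 96
trace = []

size = out_size(size, 7, stride=2, padding=3)  # block1: 7x7 conv, stride 2 -> 48
size = out_size(size, 3, stride=2)             # block1: MaxPool2d(3, 2)   -> 23
trace.append(size)
size = out_size(size, 3, stride=2)             # block2: MaxPool2d(3, 2)   -> 11
trace.append(size)
size = out_size(size, 3, stride=2)             # block3: MaxPool2d(3, 2)   -> 5
trace.append(size)
size = out_size(size, 3, stride=2)             # block4: MaxPool2d(3, 2)   -> 2
trace.append(size)
size = out_size(size, 2, stride=2)             # block5: AvgPool2d(2)      -> 1
trace.append(size)

# Channels after the last inception module: 1x1 + 3x3 + 5x5 + pool branches.
final_channels = 384 + 384 + 128 + 128
final_features = final_channels * size * size

print(trace)           # [23, 11, 5, 2, 1]
print(final_features)  # 1024
```

With a different input resolution the final spatial size may no longer be 1x1, and the input size of the linear layer would have to change accordingly.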

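def data_tf(x):
    x = x.resize((96, 96), 2)  # enlarge the PIL image to 96x96 (2 = bilinear resampling)
    x = np.array(x, dtype='float32') / 255  # scale pixel values to [0, 1]
    x = (x - 0.5) / 0.5  # normalize to [-1, 1]
    x = x.transpose((2, 0, 1))  # H x W x C -> C x H x W, the layout PyTorch expects
    x = torch.from_numpy(x)
    return x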
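The arithmetic inside data_tf can be checked without downloading CIFAR10. The sketch below applies the same scaling and transpose to a random uint8 array that stands in for an already resized image; the random array is an assumption for illustration only, and the resize step is left out.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for a 96x96 RGB image in H x W x C layout with values 0..255.
img = rng.integers(0, 256, size=(96, 96, 3), dtype=np.uint8)

x = img.astype('float32') / 255  # [0, 255] -> [0, 1]
x = (x - 0.5) / 0.5              # [0, 1]   -> [-1, 1]
x = x.transpose((2, 0, 1))       # H x W x C -> C x H x W

print(x.shape)           # (3, 96, 96)
print(x.min(), x.max())  # both within [-1, 1]
```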

from torch.utils.data import DataLoader

from jc_utils import train

train_set = CIFAR10('./data', train=True, transform=data_tf)

train_data = DataLoader(train_set, batch_size=64, shuffle=True)

test_set = CIFAR10('./data', train=False, transform=data_tf)

test_data = DataLoader(test_set, batch_size=128, shuffle=False)

net = googlenet(3, 10)

optimizer = torch.optim.SGD(net.parameters(), lr=0.01)

criterion = nn.CrossEntropyLoss()

train(net, train_data, test_data, 20, optimizer, criterion)
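The train function comes from the author's own jc_utils module, which is not shown in this post. As a rough idea of what such a helper usually does, here is a hypothetical stand-in called train_sketch. Its name, signature, and return value are assumptions, not the real jc_utils.train, and it leaves out evaluation on the test set. It is run on a tiny random dataset and a small linear model so that the example stays fast.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_sketch(net, train_data, num_epochs, optimizer, criterion):
    """Hypothetical minimal training loop; NOT the author's jc_utils.train."""
    history = []
    for epoch in range(num_epochs):
        net.train()
        total_loss, correct, seen = 0.0, 0, 0
        for images, labels in train_data:
            optimizer.zero_grad()             # clear gradients from the previous step
            logits = net(images)              # forward pass
            loss = criterion(logits, labels)
            loss.backward()                   # backward pass
            optimizer.step()                  # parameter update
            total_loss += loss.item() * labels.size(0)
            correct += (logits.argmax(dim=1) == labels).sum().item()
            seen += labels.size(0)
        history.append((total_loss / seen, correct / seen))
        print('epoch {}: loss {:.4f}, acc {:.4f}'.format(epoch, *history[-1]))
    return history


# Tiny random stand-in data and model (assumptions for illustration only).
torch.manual_seed(0)
demo_set = TensorDataset(torch.randn(32, 3, 8, 8), torch.randint(0, 10, (32,)))
demo_data = DataLoader(demo_set, batch_size=8, shuffle=True)
demo_net = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
demo_opt = torch.optim.SGD(demo_net.parameters(), lr=0.01)

history = train_sketch(demo_net, demo_data, 2, demo_opt, nn.CrossEntropyLoss())
```

The same loop should also accept the googlenet model and the CIFAR10 loaders defined above, although training that on a CPU would be slow.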

Complete code. If you need the code, please use this version.

import torch

import numpy as np

from torch import nn

from torch.autograd import Variable

from torchvision.datasets import CIFAR10

def conv_relu(in_channel, out_channel, kernel, stride=1, padding=0):
    # Basic building block: convolution -> batch normalization -> ReLU
    layer = nn.Sequential(
        nn.Conv2d(in_channel, out_channel, kernel, stride, padding),
        nn.BatchNorm2d(out_channel, eps=1e-3),
        nn.ReLU(True)
    )
    return layer

class inception(nn.Module):
    def __init__(self, in_channels, out1_1, out2_1, out2_3, out3_1, out3_5, out4_1):
        super(inception, self).__init__()
        # Branch 1: 1x1 convolution
        self.branch1x1 = conv_relu(in_channels, out1_1, 1)
        # Branch 2: 1x1 convolution followed by 3x3 convolution
        self.branch3x3 = nn.Sequential(
            conv_relu(in_channels, out2_1, 1),
            conv_relu(out2_1, out2_3, 3, padding=1)
        )
        # Branch 3: 1x1 convolution followed by 5x5 convolution
        self.branch5x5 = nn.Sequential(
            conv_relu(in_channels, out3_1, 1),
            conv_relu(out3_1, out3_5, 5, padding=2)
        )
        # Branch 4: 3x3 max pooling followed by 1x1 convolution
        self.branch_pool = nn.Sequential(
            nn.MaxPool2d(3, stride=1, padding=1),
            conv_relu(in_channels, out4_1, 1)
        )

    def forward(self, x):
        f1 = self.branch1x1(x)
        f2 = self.branch3x3(x)
        f3 = self.branch5x5(x)
        f4 = self.branch_pool(x)
        output = torch.cat((f1, f2, f3, f4), dim=1)  # concatenate along channels
        return output

test_net = inception(3, 64, 48, 64, 64, 96, 32)

test_x = Variable(torch.zeros(5, 3, 96, 96))

print('input shape: {} x {} x {} x {}'.format(test_x.shape[0], test_x.shape[1], test_x.shape[2], test_x.shape[3]))

test_y = test_net(test_x)

print('output shape: {} x {} x {} x {}'.format(test_y.shape[0], test_y.shape[1], test_y.shape[2], test_y.shape[3]))

class googlenet(nn.Module):
    def __init__(self, in_channel, num_classes, verbose=False):
        super(googlenet, self).__init__()
        self.verbose = verbose

        self.block1 = nn.Sequential(
            conv_relu(in_channel, out_channel=64, kernel=7, stride=2, padding=3),
            nn.MaxPool2d(3, 2)
        )

        self.block2 = nn.Sequential(
            conv_relu(64, 64, kernel=1),
            conv_relu(64, 192, kernel=3, padding=1),
            nn.MaxPool2d(3, 2)
        )

        self.block3 = nn.Sequential(
            inception(192, 64, 96, 128, 16, 32, 32),
            inception(256, 128, 128, 192, 32, 96, 64),
            nn.MaxPool2d(3, 2)
        )

        self.block4 = nn.Sequential(
            inception(480, 192, 96, 208, 16, 48, 64),
            inception(512, 160, 112, 224, 24, 64, 64),
            inception(512, 128, 128, 256, 24, 64, 64),
            inception(512, 112, 144, 288, 32, 64, 64),
            inception(528, 256, 160, 320, 32, 128, 128),
            nn.MaxPool2d(3, 2)
        )

        self.block5 = nn.Sequential(
            inception(832, 256, 160, 320, 32, 128, 128),
            inception(832, 384, 192, 384, 48, 128, 128),
            nn.AvgPool2d(2)
        )

        self.classifier = nn.Linear(1024, num_classes)

    def forward(self, x):
        x = self.block1(x)
        if self.verbose:
            print('block 1 output: {}'.format(x.shape))
        x = self.block2(x)
        if self.verbose:
            print('block 2 output: {}'.format(x.shape))
        x = self.block3(x)
        if self.verbose:
            print('block 3 output: {}'.format(x.shape))
        x = self.block4(x)
        if self.verbose:
            print('block 4 output: {}'.format(x.shape))
        x = self.block5(x)
        if self.verbose:
            print('block 5 output: {}'.format(x.shape))
        x = x.view(x.shape[0], -1)  # flatten to (batch, 1024)
        x = self.classifier(x)
        return x

test_net = googlenet(3, 10, True)

test_x = Variable(torch.zeros(1, 3, 96, 96))

test_y = test_net(test_x)

print('output: {}'.format(test_y.shape))

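def data_tf(x):
    x = x.resize((96, 96), 2)  # enlarge the PIL image to 96x96 (2 = bilinear resampling)
    x = np.array(x, dtype='float32') / 255  # scale pixel values to [0, 1]
    x = (x - 0.5) / 0.5  # normalize to [-1, 1]
    x = x.transpose((2, 0, 1))  # H x W x C -> C x H x W, the layout PyTorch expects
    x = torch.from_numpy(x)
    return x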

from torch.utils.data import DataLoader

from jc_utils import train

train_set = CIFAR10('./data', train=True, transform=data_tf)

train_data = DataLoader(train_set, batch_size=64, shuffle=True)

test_set = CIFAR10('./data', train=False, transform=data_tf)

test_data = DataLoader(test_set, batch_size=128, shuffle=False)

net = googlenet(3, 10)

optimizer = torch.optim.SGD(net.parameters(), lr=0.01)

criterion = nn.CrossEntropyLoss()

train(net, train_data, test_data, 20, optimizer, criterion)