TensorFlow from Getting Started to Giving Up (1): Implementing the Classic LeNet5 Neural Network Model

1. The MNIST handwritten digit dataset

Download: http://yann.lecun.com/exdb/mnist/

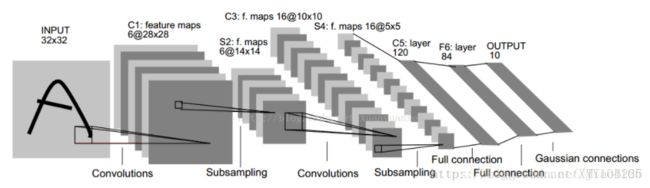

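2. The LeNet5 network model

Layer 1: convolutional layer

The input to this layer is the raw image, 32*32*1 pixels. The first convolutional layer uses 5*5 filters with a depth of 6 and 6 biases, no zero padding, and a stride of 1 in both height and width. Its output is therefore 28*28*6 (since 32-5+1=28), and the layer has 5*5*1*6+6=156 parameters.

Layer 2: pooling layer

The input to this layer is the output of layer 1, a 28*28*6 node matrix. The filter size is 2*2 with a stride of 2 in both height and width, so the output matrix is 14*14*6.

Layer 3: convolutional layer

The input matrix is 14*14*6. The filters are 5*5 with a depth of 16 and 16 biases. This layer uses no zero padding and a stride of 1 in both height and width. The output matrix is 10*10*16, and the layer has 5*5*6*16+16=2416 parameters.

Layer 4: pooling layer

The input matrix is 10*10*16. The filter size is 2*2 with a stride of 2 in both height and width, so the output matrix is 5*5*16.

Layer 5: fully connected layer

The input matrix is 5*5*16. The LeNet-5 paper calls this a convolutional layer, but because the filter is 5*5, the same size as the input, it is no different from a fully connected layer: flatten the nodes of the 5*5*16 matrix into a vector and the two are identical. The layer has 120 output nodes and 5*5*16*120+120=48120 parameters in total.

Layer 6: fully connected layer

This layer has 120 input nodes, 84 output nodes, and 84 biases, for a total of 120*84+84=10164 parameters.

Layer 7: fully connected layer

This layer has 84 input nodes, 10 output nodes, and 10 biases, for a total of 84*10+10=850 parameters.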
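The shape and parameter arithmetic for the seven layers can be checked mechanically. The following is a minimal sketch in plain Python (no TensorFlow required). The helper names `conv_layer`, `pool_layer`, `fc_layer`, and `lenet5_summary` are hypothetical and exist only for this illustration, and the sketch assumes unpadded convolutions on a 32*32*1 input, as described in this section.

```python
def conv_layer(size, in_depth, kernel, out_depth, stride=1):
    """Return (output size, output depth, parameter count) for an unpadded convolution."""
    out_size = (size - kernel) // stride + 1
    params = kernel * kernel * in_depth * out_depth + out_depth  # weights + biases
    return out_size, out_depth, params


def pool_layer(size, depth, window=2, stride=2):
    """Return (output size, output depth, parameter count); pooling has no parameters."""
    return (size - window) // stride + 1, depth, 0


def fc_layer(in_nodes, out_nodes):
    """Return the parameter count of a fully connected layer (weights + biases)."""
    return in_nodes * out_nodes + out_nodes


def lenet5_summary(input_size=32):
    """Return a list of (layer name, output shape, parameter count)."""
    rows = []
    size, depth, params = conv_layer(input_size, 1, 5, 6)
    rows.append(("conv1", (size, size, depth), params))
    size, depth, params = pool_layer(size, depth)
    rows.append(("pool1", (size, size, depth), params))
    size, depth, params = conv_layer(size, depth, 5, 16)
    rows.append(("conv2", (size, size, depth), params))
    size, depth, params = pool_layer(size, depth)
    rows.append(("pool2", (size, size, depth), params))

    nodes = size * size * depth  # flatten 5*5*16 into a vector
    for name, out_nodes in (("fc1", 120), ("fc2", 84), ("fc3", 10)):
        rows.append((name, (out_nodes,), fc_layer(nodes, out_nodes)))
        nodes = out_nodes
    return rows


if __name__ == "__main__":
    for name, shape, params in lenet5_summary():
        print(name, shape, params)
```

Summing the per-layer counts gives the total number of trainable parameters for the network as described here.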

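3. Implementing the LeNet5 network with TensorFlow

3.1 A beginner's understanding of TensorFlow

A complete network model implemented in TensorFlow consists of three files:

- inference.py: defines the forward pass of the network and the initialization of its parameters.

- train.py: defines the training process. It contains the loss function, the learning rate, the moving-average operation, the training loop, and the code that saves the trained model.

- eval.py: defines the test process. It rebuilds the network from the trained model.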

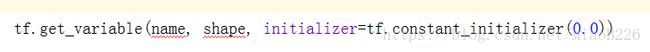

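3.2 Common functions used in mnist_inference.py

Function 1: tf.get_variable

name: the name of the variable

shape: the dimensions of the variable

tf.constant_initializer(0.0): initializes every element to the constant 0

tf.truncated_normal_initializer(stddev=0.1): initializes the variable with values drawn from a truncated normal distribution with a standard deviation of 0.1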
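To make "truncated" concrete: a truncated normal discards any sample that lies more than two standard deviations from the mean and draws again. The sketch below imitates that behaviour using only the standard library. `truncated_normal` is a hypothetical helper written for this illustration, not TensorFlow's implementation.

```python
import random


def truncated_normal(n, mean=0.0, stddev=0.1, seed=None):
    """Draw n samples from a normal distribution, re-drawing any sample
    that falls more than 2 standard deviations from the mean."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2.0 * stddev:
            samples.append(value)
    return samples


if __name__ == "__main__":
    # With stddev=0.1 every initial weight lies in [-0.2, 0.2].
    print(truncated_normal(5, stddev=0.1, seed=0))
```

Bounding the initial weights this way avoids the occasional very large starting value that an ordinary normal distribution can produce.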

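Function 2: tf.nn.relu(tf.nn.bias_add(input, biases))

Applies the ReLU activation function.

input: the input

biases: the biases

Function 3: tf.nn.bias_add

Adds the biases to the data.

Function 4: tf.nn.conv2d and tf.nn.max_pool

tf.nn.conv2d implements the forward pass of a convolutional layer.

tf.nn.max_pool implements max pooling.

input [batch_size, height, width, channels]: the input to the layer

weights: the weights of the layer

strides=[1,1,1,1]: the first and fourth entries must be 1; the second and third entries are the strides along the height and width.

padding: 'SAME' pads with zeros, 'VALID' applies no padding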
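The effect of the padding mode on the output size can be sketched in a few lines of plain Python. The formulas are the usual ones for these two modes: 'SAME' gives ceil(in / stride), and 'VALID' gives ceil((in - filter + 1) / stride). The helpers `output_size` and `trace` are hypothetical names used only here. The second call traces the listing in section 3.3, which feeds 28*28 images through 'SAME' convolutions and therefore ends with 7*7 feature maps rather than the 5*5 described in section 2.

```python
import math


def output_size(in_size, filter_size, stride, padding):
    """Spatial output size of a convolution or pooling op for one dimension."""
    if padding == "SAME":
        return math.ceil(in_size / stride)
    if padding == "VALID":
        return math.ceil((in_size - filter_size + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


def trace(in_size, conv_padding):
    """Sizes after each of conv1, pool1, conv2, pool2 (5*5 convs, 2*2 pools)."""
    sizes = [in_size]
    for _ in range(2):  # two conv + pool pairs
        in_size = output_size(in_size, 5, 1, conv_padding)
        sizes.append(in_size)
        in_size = output_size(in_size, 2, 2, "VALID")
        sizes.append(in_size)
    return sizes


if __name__ == "__main__":
    print(trace(32, "VALID"))  # the architecture described in section 2
    print(trace(28, "SAME"))   # the settings used in the section 3.3 listing
```

With the section 3.3 settings the flattened vector fed to the first fully connected layer has 7*7*16 = 784 nodes instead of 5*5*16 = 400.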

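Function 5: tf.variable_scope

Creates a namespace for variables.

Function 6: tf.add_to_collection("losses", regularizer(weights))

Computes the regularization term of the weights and adds it to the "losses" collection, which is later summed into the loss.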
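As a rough picture of what ends up in the loss: the L2 regularizer used in section 3.4, `tf.contrib.layers.l2_regularizer(scale)`, computes scale * sum(w^2) / 2 for a weight tensor. The plain-Python sketch below mirrors that arithmetic. `l2_penalty`, `total_loss`, and the list standing in for the "losses" collection are hypothetical, and the weight and cross-entropy values are made up for illustration.

```python
def l2_penalty(weights, scale):
    """scale * sum(w^2) / 2 over a 2-D list of weights."""
    total = sum(w * w for row in weights for w in row)
    return scale * total / 2.0


def total_loss(cross_entropy_mean, penalties):
    """Data loss plus the sum of all collected regularization terms."""
    return cross_entropy_mean + sum(penalties)


if __name__ == "__main__":
    losses = []  # stand-in for the "losses" collection
    fc_weights = [[1.0, -2.0], [0.5, 0.0]]  # made-up weights
    losses.append(l2_penalty(fc_weights, scale=0.0001))
    print(total_loss(0.3, losses))  # 0.3 is a made-up cross-entropy value
```

Because the penalty only enters the loss, it influences the gradients during training but does not change the values computed in the forward pass.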

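Function 7: tf.matmul

Implements the forward pass of a fully connected layer.

Function 8: tf.nn.dropout

In tf.nn.dropout(fc1, 0.5), the 0.5 is the probability of keeping a unit (keep_prob), not the probability of dropping it.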
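A small sketch of what the keep probability means, using only the standard library: each element is kept with probability keep_prob and scaled up by 1 / keep_prob, and otherwise set to 0, so the expected sum stays the same. The `dropout` function below is a hypothetical stand-in for illustration, not TensorFlow's implementation.

```python
import random


def dropout(values, keep_prob, seed=None):
    """Keep each value with probability keep_prob (scaled by 1 / keep_prob),
    otherwise replace it with 0."""
    rng = random.Random(seed)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


if __name__ == "__main__":
    activations = [1.0] * 10
    # Roughly half of the entries become 0.0 and the rest become 2.0.
    print(dropout(activations, keep_prob=0.5, seed=0))
    # keep_prob=1.0 leaves the input unchanged.
    print(dropout(activations, keep_prob=1.0))
```

Passing 0.9 would therefore drop about 10% of the units, not 90%.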

3.3 mnist_inference.py

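import tensorflow as tf

# Dimensions of the input and output data.
# Each MNIST image is stored as a 1*784 feature vector.
INPUT_NODE = 784
OUT_NODE = 10

# Image size, number of channels, and number of labels
IMAGE_SIZE = 28
NUM_CHANNELS = 1
NUM_LABELS = 10

# Filter size and depth of the first convolutional layer
CONV1_DEEP = 6
CONV1_SIZE = 5

# Filter size and depth of the second convolutional layer
CONV2_DEEP = 16
CONV2_SIZE = 5

# Number of nodes in the fully connected layers
FC1_SIZE = 120
FC2_SIZE = 84

# Forward pass of the convolutional network. The extra argument `train`
# distinguishes training from testing.
# Dropout is used here: it further improves the model's robustness and helps
# prevent overfitting, and it is applied only during training.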

def inference(input_tensor, train, regularizer):
    # Note: the input here is 28*28 and both convolutions use 'SAME' padding,
    # so the feature maps go 28 -> 28 -> 14 -> 14 -> 7 rather than the
    # 32 -> 28 -> 14 -> 10 -> 5 described in section 2.
    with tf.variable_scope('layer1-conv1'):
        conv1_weights = tf.get_variable(
            "weight", [CONV1_SIZE, CONV1_SIZE, NUM_CHANNELS, CONV1_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        conv1_biases = tf.get_variable("bias", [CONV1_DEEP], initializer=tf.constant_initializer(0.0))
        conv1 = tf.nn.conv2d(input_tensor, conv1_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1, conv1_biases))

    with tf.variable_scope('layer2-pool1'):
        pool1 = tf.nn.max_pool(relu1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

    with tf.variable_scope('layer3-conv2'):
        conv2_weights = tf.get_variable(
            "weight", [CONV2_SIZE, CONV2_SIZE, CONV1_DEEP, CONV2_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        conv2_biases = tf.get_variable("bias", [CONV2_DEEP], initializer=tf.constant_initializer(0.0))
        conv2 = tf.nn.conv2d(pool1, conv2_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2, conv2_biases))

    with tf.variable_scope('layer4-pool2'):
        pool2 = tf.nn.max_pool(relu2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

    # Flatten each image's feature maps into a one-dimensional vector.
    # pool_shape[0] is the batch size, pool_shape[1] and pool_shape[2] are the
    # height and width, and pool_shape[3] is the number of channels.
    pool_shape = pool2.get_shape().as_list()
    nodes = pool_shape[1] * pool_shape[2] * pool_shape[3]
    reshaped = tf.reshape(pool2, [pool_shape[0], nodes])

    with tf.variable_scope("layer5-fc1"):
        fc1_weights = tf.get_variable(
            "weight", [nodes, FC1_SIZE],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc1_weights))
        fc1_biases = tf.get_variable("bias", [FC1_SIZE], initializer=tf.constant_initializer(0.1))
        fc1 = tf.nn.relu(tf.matmul(reshaped, fc1_weights) + fc1_biases)
        # Dropout (training only): keep each unit with probability 0.5
        if train:
            fc1 = tf.nn.dropout(fc1, 0.5)

    with tf.variable_scope("layer6-fc2"):
        fc2_weights = tf.get_variable(
            "weight", [FC1_SIZE, FC2_SIZE],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        # As I understand it, the regularizer only contributes a term to the
        # loss and does not change the values computed by the network, so it
        # can be omitted at test time.
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc2_weights))
        fc2_biases = tf.get_variable("bias", [FC2_SIZE], initializer=tf.constant_initializer(0.1))
        fc2 = tf.nn.relu(tf.matmul(fc1, fc2_weights) + fc2_biases)
        if train:
            fc2 = tf.nn.dropout(fc2, 0.5)

    with tf.variable_scope("layer7-fc3"):
        # The last layer does not apply the ReLU activation function
        fc3_weights = tf.get_variable(
            "weight", [FC2_SIZE, NUM_LABELS],
            initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection("losses", regularizer(fc3_weights))
        fc3_biases = tf.get_variable("bias", [NUM_LABELS], initializer=tf.constant_initializer(0.1))
        logit = tf.matmul(fc2, fc3_weights) + fc3_biases

    return logit

3.4 mnist_train.py

import os
import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference

# Number of images per training batch
BATCH_SIZE = 100

# Base learning rate
LEARNING_RATE_BASE = 0.01

# Decay rate of the learning rate
LEARNING_RATE_DECAY = 0.99

# Coefficient of the L2 regularization term
REGULARIZATION_RATE = 0.0001

# Number of training steps
TRAINING_STEPS = 30000

# Decay used for the moving average
MOVING_AVERAGE_DECAY = 0.99

# Directory and file name under which the trained model is saved
MODEL_SAVE_PATH = 'E:\\PythonSpace\\LeNet5\\model'
MODEL_NAME = 'model.ckpt'

def train(mnist):
    # Placeholders for the input images and their labels
    x = tf.placeholder(
        tf.float32,
        [BATCH_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE,
         mnist_inference.NUM_CHANNELS],
        name='x-input')
    y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE], name='y-input')

    # L2 regularizer
    regularizer = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)

    # Build the forward pass of the network in training mode
    y = mnist_inference.inference(x, True, regularizer)

    # Training step counter; marked as non-trainable
    global_step = tf.Variable(0, trainable=False)

    # Create the moving-average object
    variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)

    # Maintain a moving average of every trainable variable
    variable_averages_op = variable_averages.apply(tf.trainable_variables())

    # Cross-entropy loss, averaged over the batch
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
    cross_entropy_mean = tf.reduce_mean(cross_entropy)

    # loss = mean cross-entropy + L2 regularization terms of the weights
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))

    # Learning-rate schedule:
    # learning_rate = LEARNING_RATE_BASE * LEARNING_RATE_DECAY ** (global_step / decay_steps),
    # with decay_steps = mnist.train.num_examples / BATCH_SIZE, so the rate is
    # multiplied by LEARNING_RATE_DECAY once per pass over the training set.
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE, global_step,
        mnist.train.num_examples / BATCH_SIZE, LEARNING_RATE_DECAY)

    # Optimizer that minimizes the loss
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

    # Group both ops so that one run updates the weights and biases as well as
    # their moving averages
    with tf.control_dependencies([train_step, variable_averages_op]):
        train_op = tf.no_op(name='train')

    # The saver persists the model to disk
    saver = tf.train.Saver()

    with tf.Session() as sess:
        # Initialize all variables
        tf.global_variables_initializer().run()

        for i in range(TRAINING_STEPS):
            # Read a batch of images and labels
            xs, ys = mnist.train.next_batch(BATCH_SIZE)

            # Reshape the 784-dimensional feature vectors to 28*28 images
            reshaped_xs = np.reshape(
                xs,
                (BATCH_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE,
                 mnist_inference.NUM_CHANNELS))

            # Run one training step
            _, loss_value, step = sess.run([train_op, loss, global_step],
                                           feed_dict={x: reshaped_xs, y_: ys})

            # Report the loss and save the model every 1000 steps
            if i % 1000 == 0:
                print("After %d training step(s), loss on training "
                      "batch is %g." % (step, loss_value))
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)

# 定义主函数

def main(argv=None):

# 读取mnist数据集数据

mnist = input_data.read_data_sets(r"E:\PythonSpace\mnist_data\\", one_hot=True)

train(mnist)

if __name__=='__main__':

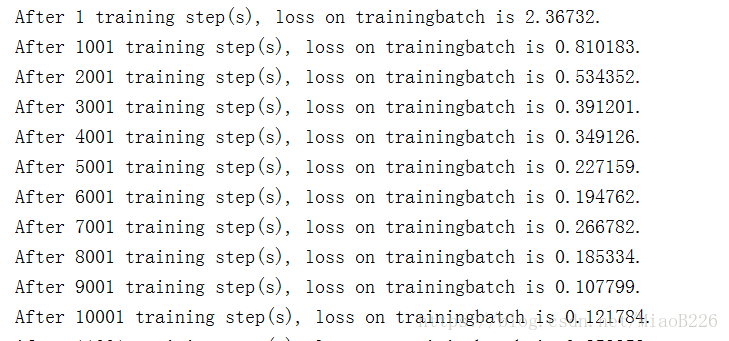

tf.app.run()3.5 代码运行截图

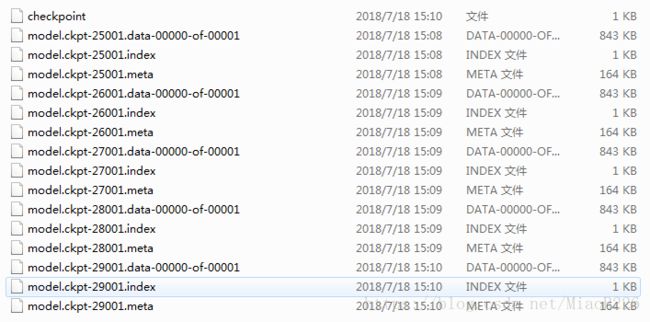

The saved model files
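The learning-rate schedule in mnist_train.py can be reproduced by hand. The sketch below implements the formula behind `tf.train.exponential_decay`, namely base_rate * decay_rate ** (global_step / decay_steps), in plain Python. The function here is a stand-alone re-implementation for illustration, and the figure of 55,000 training images is an assumption about the default split returned by `input_data.read_data_sets`.

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate, staircase=False):
    """Learning rate after global_step steps.

    With staircase=False the rate decays smoothly at every step; with
    staircase=True it drops only once every decay_steps steps.
    """
    if staircase:
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return base_rate * decay_rate ** exponent


if __name__ == "__main__":
    decay_steps = 55000 / 100  # assumed training-set size / BATCH_SIZE
    for step in (0, 550, 10000, 30000):
        print(step, exponential_decay(0.01, step, decay_steps, 0.99))
```

Since the listing does not pass `staircase=True`, the rate shrinks a little on every step and has been multiplied by 0.99 exactly once after each full pass over the training data.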

3.6 mnist_eval.py

import time
import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference
import mnist_train

# Reload the latest model every 10 seconds
EVAL_INTERVAL_SECS = 10

def evaluate(mnist):
    with tf.Graph().as_default() as g:
        # Placeholders for the input images and their labels
        x = tf.placeholder(
            tf.float32,
            [mnist.validation.num_examples, mnist_inference.IMAGE_SIZE,
             mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS],
            name='x-input')
        y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE], name='y-input')

        # Take the validation images and labels, and reshape the images to 28*28
        xs = mnist.validation.images
        reshaped_xs = np.reshape(
            xs,
            (mnist.validation.num_examples, mnist_inference.IMAGE_SIZE,
             mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS))
        validate_feed = {x: reshaped_xs, y_: mnist.validation.labels}

        # At test time the regularization loss is not needed and dropout is disabled
        y = mnist_inference.inference(x, False, None)

        # Accuracy is the mean of the per-example correctness
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        # Restore the moving-average values computed during training
        variable_averages = tf.train.ExponentialMovingAverage(mnist_train.MOVING_AVERAGE_DECAY)
        variables_to_restore = variable_averages.variables_to_restore()
        saver = tf.train.Saver(variables_to_restore)

        while True:
            with tf.Session() as sess:
                # Find the most recently saved model file
                ckpt = tf.train.get_checkpoint_state(mnist_train.MODEL_SAVE_PATH)
                if ckpt and ckpt.model_checkpoint_path:
                    print(ckpt.model_checkpoint_path)
                    # Restore the network from the saved model
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    # The step number is the suffix after the last '-' in the file name
                    global_step = ckpt.model_checkpoint_path.split('\\')[-1].split('-')[-1]
                    # Compute the accuracy
                    accuracy_score = sess.run(accuracy, feed_dict=validate_feed)
                    print("After %s training step(s), validation "
                          "accuracy = %g" % (global_step, accuracy_score))
                else:
                    print('No checkpoint file found')
                    return
            time.sleep(EVAL_INTERVAL_SECS)

def main(argv=None):

mnist = input_data.read_data_sets(r"E:\PythonSpace\mnist_data\\", one_hot=True)

evaluate(mnist)

if __name__ == '__main__':

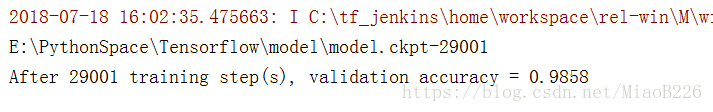

tf.app.run()3.7 运行结果
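The evaluation script restores the moving-average ("shadow") value of each variable instead of its raw trained value. The following plain-Python sketch shows the update rule that `tf.train.ExponentialMovingAverage` applies: shadow = decay * shadow + (1 - decay) * value, where supplying a step counter replaces decay with min(decay, (1 + num_updates) / (10 + num_updates)). The helpers `ema_update` and `track` are hypothetical names, and the input values are made up.

```python
def ema_update(shadow, value, decay, num_updates=None):
    """One moving-average step: shadow = decay * shadow + (1 - decay) * value."""
    if num_updates is not None:
        # With a step counter, a smaller decay is used early in training so
        # the average follows the variable more quickly at the start.
        decay = min(decay, (1.0 + num_updates) / (10.0 + num_updates))
    return decay * shadow + (1.0 - decay) * value


def track(values, decay, initial):
    """Apply ema_update to a sequence of values, counting updates from 0."""
    shadow = initial
    history = []
    for step, value in enumerate(values):
        shadow = ema_update(shadow, value, decay, num_updates=step)
        history.append(shadow)
    return history


if __name__ == "__main__":
    # A variable that jumps from 0 to 5 and then to 10 (made-up values)
    print(track([5.0, 10.0, 10.0], decay=0.99, initial=0.0))
```

The shadow value changes more smoothly than the variable itself, which is why the averaged weights are often evaluated instead of the latest raw ones.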

This is a small example from my first attempt at learning TensorFlow. Some of my understanding may be wrong, and corrections are welcome.