TensorFlow from Getting Started to Giving Up (Part 2): Transfer Learning with InceptionV3 and Image Feature Extraction

1. The flower dataset

Download: http://download.tensorflow.org/example_images/flower_photos.tgz

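The dataset contains photos of five kinds of flowers (daisy, dandelion, roses, sunflowers, tulips), with one subfolder per class.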
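The preprocessing script in the next step assigns each class folder a label in the order os.walk happens to visit it. That order depends on the file system and is not guaranteed to be alphabetical. If you want the same folder-to-label mapping on every machine, one option is to sort the folder names first.

Below is a minimal stdlib sketch, assuming the layout described above. class_labels is a hypothetical helper and is not part of the original scripts.

```python
import os
import tempfile


def class_labels(dataset_dir):
    """Map each class subfolder of dataset_dir to an integer label.

    Folder names are sorted, so the mapping does not depend on the order
    in which the file system happens to list directories.
    """
    names = sorted(
        entry for entry in os.listdir(dataset_dir)
        if os.path.isdir(os.path.join(dataset_dir, entry))
    )
    return {name: index for index, name in enumerate(names)}


if __name__ == "__main__":
    # Build a throwaway folder tree with the same layout as flower_photos.
    with tempfile.TemporaryDirectory() as root:
        for name in ["tulips", "daisy", "roses", "dandelion", "sunflowers"]:
            os.mkdir(os.path.join(root, name))
        # Plain files at the top level are not classes and are skipped.
        open(os.path.join(root, "LICENSE.txt"), "w").close()
        print(class_labels(root))
        # {'daisy': 0, 'dandelion': 1, 'roses': 2, 'sunflowers': 3, 'tulips': 4}
```

To use it with the original script, you would look up the label by dir_name instead of incrementing current_label.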

2. Image preprocessing

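Split the images into training, validation, and test subsets. Each image is decoded and resized to a fixed size, and the result is saved to a .npy file. This step takes quite a while, so please be patient...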
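create_image_lists below decides each image's subset independently. It draws an integer in [0, 100) and compares it with the two percentages. As a result, the subsets come out only approximately 80/10/10, and the split changes on every run unless the random seed is fixed.

The same rule can be sketched with the standard library alone. split_dataset and its seed argument are hypothetical, added for illustration.

```python
import random


def split_dataset(items, validation_percentage=10, testing_percentage=10, seed=None):
    """Randomly assign each item to the train/validation/test subsets.

    Mirrors the rule in create_image_lists: draw an integer in [0, 100)
    per item; values below validation_percentage go to validation, the
    next testing_percentage values go to test, and the rest to training.
    """
    rng = random.Random(seed)
    train, validation, test = [], [], []
    for item in items:
        chance = rng.randrange(100)  # same range as np.random.randint(100)
        if chance < validation_percentage:
            validation.append(item)
        elif chance < validation_percentage + testing_percentage:
            test.append(item)
        else:
            train.append(item)
    return train, validation, test


if __name__ == "__main__":
    train, validation, test = split_dataset(range(10000), seed=0)
    # The sizes are random, but close to 80% / 10% / 10%.
    print(len(train), len(validation), len(test))
```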

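import glob
import os

import numpy as np
import tensorflow as tf
from tensorflow.python.platform import gfile

# Path of the raw dataset
INPUT_DATA = r'E:\PythonSpace\finetune_NET\flower_photos'

# Output file holding the split dataset
OUT_FILE = r'E:\PythonSpace\finetune_NET\flower_processed_data.npy'

# Validation and test data each make up 10% of the images
VALIDATION_PERCENTAGE = 10
TEST_PERCENTAGE = 10


def create_image_lists(sess, testing_percentage, validation_percentage):
    # All directories under the dataset folder; os.walk yields the root itself first
    sub_dirs = [x[0] for x in os.walk(INPUT_DATA)]
    is_root_dir = True

    # Initialize the subsets
    training_images = []
    training_labels = []
    testing_images = []
    testing_labels = []
    validation_images = []
    validation_labels = []
    current_label = 0
    current_image = 0

    # Build the decode/resize ops once and reuse them for every image.
    # Creating new ops inside the loop keeps growing the graph, which makes
    # each iteration slower than the previous one.
    image_raw_placeholder = tf.placeholder(tf.string)
    image = tf.image.decode_jpeg(image_raw_placeholder, channels=3)
    # decode_jpeg returns uint8; convert to float32 in [0, 1]
    image = tf.image.convert_image_dtype(image, dtype=tf.float32)
    # Resize the image. The standard Inception-v3 input size is 299x299; this
    # post uses 229x229, and the same size must be used in all three scripts.
    image = tf.image.resize_images(image, [229, 229])

    # Go through every subdirectory (one per class)
    for sub_dir in sub_dirs:
        if is_root_dir:
            is_root_dir = False
            continue

        extension = 'jpg'
        file_list = []
        # Name of the class folder the images belong to
        dir_name = os.path.basename(sub_dir)
        # Pattern for the *.jpg files in this folder
        file_glob = os.path.join(INPUT_DATA, dir_name, '*.' + extension)
        # Collect the names of all matching files
        file_list.extend(glob.glob(file_glob))

        for file_name in file_list:
            current_image += 1
            print(current_image)
            # Read the image as raw bytes with TensorFlow's file API
            with gfile.FastGFile(file_name, 'rb') as f:
                image_raw_data = f.read()
            # Decode and resize the image
            image_value = sess.run(image, feed_dict={image_raw_placeholder: image_raw_data})

            # Randomly assign the image to a subset:
            # draw a random integer in [0, 100) and compare it with the percentages
            chance = np.random.randint(100)
            if chance < validation_percentage:
                validation_images.append(image_value)
                validation_labels.append(current_label)
            elif chance < (validation_percentage + testing_percentage):
                testing_images.append(image_value)
                testing_labels.append(current_label)
            else:
                training_images.append(image_value)
                training_labels.append(current_label)

        # Each subdirectory is a new class
        current_label += 1

    # Shuffle the training set; restoring the RNG state makes the labels
    # receive the same permutation as the images
    state = np.random.get_state()
    np.random.shuffle(training_images)
    np.random.set_state(state)
    np.random.shuffle(training_labels)

    # The six lists have different lengths, so store them in an object array
    # (newer NumPy versions refuse to build ragged arrays without dtype=object)
    return np.asarray([training_images, training_labels,
                       validation_images, validation_labels,
                       testing_images, testing_labels], dtype=object)


# Main function
def main():
    with tf.Session() as sess:
        processed_data = create_image_lists(sess, TEST_PERCENTAGE, VALIDATION_PERCENTAGE)
        np.save(OUT_FILE, processed_data)


if __name__ == '__main__':
    main()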
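The get_state / set_state trick at the end of create_image_lists works for a simple reason. np.random.shuffle draws the same permutation when it starts from the same RNG state and is given a sequence of the same length, so each image keeps its label. A more explicit alternative is to shuffle a list of indices once and apply it to both lists.

Here is a minimal stdlib sketch; shuffle_together is a hypothetical name.

```python
import random


def shuffle_together(images, labels, seed=None):
    """Shuffle two equal-length lists with one shared permutation.

    Instead of replaying an RNG state, shuffle a list of indices once
    and use it to reorder both lists; the inputs are left unchanged.
    """
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    order = list(range(len(images)))
    random.Random(seed).shuffle(order)
    return [images[i] for i in order], [labels[i] for i in order]


if __name__ == "__main__":
    images = ["img_a", "img_b", "img_c", "img_d"]
    labels = [0, 1, 2, 3]
    shuffled_images, shuffled_labels = shuffle_together(images, labels, seed=1)
    # Every (image, label) pair is still intact after shuffling.
    print(list(zip(shuffled_images, shuffled_labels)))
```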

3. Download the pretrained Inception-v3 weights

Download: http://download.tensorflow.org/models/inception_v3_2016_08_28.tar.gz

Unpack the archive to get inception_v3.ckpt, which finetune_NET.py loads as CKPT_FILE.
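Both downloads are gzip-compressed tar archives. Below is a minimal sketch of unpacking one with Python's standard tarfile module. extract_archive is a hypothetical helper, and the demo builds a throwaway archive instead of downloading anything.

```python
import os
import tarfile
import tempfile


def extract_archive(archive_path, dest_dir):
    """Extract a .tar.gz / .tgz archive into dest_dir and return the member names."""
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:*") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe paths while extracting.
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    return names


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Stand-in for the real checkpoint file.
        src = os.path.join(tmp, "inception_v3.ckpt")
        with open(src, "w") as fh:
            fh.write("dummy")
        archive = os.path.join(tmp, "model.tar.gz")
        with tarfile.open(archive, "w:gz") as tar:
            tar.add(src, arcname="inception_v3.ckpt")
        print(extract_archive(archive, os.path.join(tmp, "out")))
```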

4. finetune_NET.py

import os

import numpy as np
import tensorflow as tf
import tensorflow.contrib.slim as slim

# Load the Inception-v3 model definition shipped with TF-Slim
import tensorflow.contrib.slim.python.slim.nets.inception_v3 as inception_v3

# Preprocessed data file produced in step 2
INPUT_DATA = r'E:\PythonSpace\finetune_NET\flower_processed_data.npy'

# Directory where the fine-tuned variables are saved
TRAIN_FILE = r'E:\PythonSpace\finetune_NET\model'

# Pretrained checkpoint from step 3
CKPT_FILE = r'E:\PythonSpace\finetune_NET\inception_v3.ckpt'

# Training hyperparameters
LEARNING_RATE = 0.0001

# Number of training steps; each step processes one batch, not the whole training set
STEPS = 300

# Number of images fed to the network in each step
BATCH = 30

# Number of classes
N_CLASSES = 5

# Only the final classification layers are fine-tuned: they are not restored
# from the checkpoint, and they are the only variables the optimizer updates
CHECKPOINT_EXCLUDE_SCOPES = 'InceptionV3/Logits, InceptionV3/AuxLogits'
TRAINABLE_SCOPES = 'InceptionV3/Logits, InceptionV3/AuxLogits'


# Collect all variables that should be restored from the pretrained model
def get_tuned_variables():
    exclusions = [scope.strip() for scope in CHECKPOINT_EXCLUDE_SCOPES.split(',')]
    # Variables whose values will be loaded from the checkpoint
    variables_to_restore = []
    for var in slim.get_model_variables():
        excluded = False
        for exclusion in exclusions:
            if var.op.name.startswith(exclusion):
                excluded = True
                break
        if not excluded:
            variables_to_restore.append(var)
    return variables_to_restore


# Collect the variables of the two layers that will be trained
def get_trainable_variables():
    scopes = [scope.strip() for scope in TRAINABLE_SCOPES.split(',')]
    variables_to_train = []
    for scope in scopes:
        variables = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope)
        variables_to_train.extend(variables)
    return variables_to_train


def main(argv=None):
    # Load the preprocessed data (newer NumPy needs allow_pickle for object arrays)
    processed_data = np.load(INPUT_DATA, allow_pickle=True)
    training_images = processed_data[0]
    n_training_example = len(training_images)
    training_labels = processed_data[1]
    validation_images = processed_data[2]
    validation_labels = processed_data[3]
    testing_images = processed_data[4]
    testing_labels = processed_data[5]
    print("%d training examples, %d validation examples and %d "
          "testing examples." % (n_training_example, len(validation_labels),
                                 len(testing_labels)))

    # Network inputs (229 must match the image size used in step 2)
    images = tf.placeholder(tf.float32, [None, 229, 229, 3], name="input_images")
    labels = tf.placeholder(tf.int64, [None], name="labels")

    # Forward pass
    with slim.arg_scope(inception_v3.inception_v3_arg_scope()):
        logits, _ = inception_v3.inception_v3(images, num_classes=N_CLASSES)

    # Variables to be trained
    trainable_variables = get_trainable_variables()

    # Cross-entropy loss
    tf.losses.softmax_cross_entropy(tf.one_hot(labels, N_CLASSES), logits, weights=1.0)

    # Minimize the total loss, updating only the fine-tuned layers
    train_step = tf.train.RMSPropOptimizer(LEARNING_RATE).minimize(
        tf.losses.get_total_loss(), var_list=trainable_variables)

    # Accuracy
    with tf.name_scope("evaluation"):
        correct_prediction = tf.equal(tf.argmax(logits, 1), labels)
        evaluation_step = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # Function that loads the pretrained weights
    load_fn = slim.assign_from_checkpoint_fn(CKPT_FILE, get_tuned_variables(), ignore_missing_vars=True)

    # Saver for the fine-tuned weights; the target directory must exist
    saver = tf.train.Saver()
    if not os.path.exists(TRAIN_FILE):
        os.makedirs(TRAIN_FILE)

    with tf.Session() as sess:
        # Initialize all variables, including those not loaded from the checkpoint
        init = tf.global_variables_initializer()
        sess.run(init)
        print("loading tuned variables from %s" % CKPT_FILE)
        load_fn(sess)

        start = 0
        end = BATCH
        for i in range(STEPS):
            # One training step on the current batch
            sess.run(train_step, feed_dict={
                images: training_images[start:end],
                labels: training_labels[start:end]})

            if i % 30 == 0 or i + 1 == STEPS:
                # Be sure to keep the .ckpt suffix in the file name here
                model_path = os.path.join(TRAIN_FILE, 'model_step' + str(i + 1) + '.ckpt')
                # Save the weights
                saver.save(sess, model_path)
                validation_accuracy = sess.run(evaluation_step, feed_dict={
                    images: validation_images, labels: validation_labels})
                print('Step %d: Validation accuracy = %.1f%%' % (i, validation_accuracy * 100.0))

            # Move to the next batch, wrapping around at the end of the training set
            start = end
            if start == n_training_example:
                start = 0
            end = start + BATCH
            if end > n_training_example:
                end = n_training_example

        # Evaluate on the test set after training
        test_accuracy = sess.run(evaluation_step, feed_dict={
            images: testing_images, labels: testing_labels})
        print("final test accuracy = %.1f%%" % (test_accuracy * 100))


if __name__ == '__main__':
    tf.app.run()
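For reference, the quantities behind the loss and evaluation ops above can be written out for plain Python lists:

- tf.losses.softmax_cross_entropy compares softmax(logits) with the one-hot target tf.one_hot(labels, N_CLASSES). By default it averages the per-example values over the batch.
- tf.losses.get_total_loss() also adds the weight-decay terms that the slim arg_scope registers.
- evaluation_step is the fraction of examples whose argmax matches the label.

The sketch below covers only the per-example cross-entropy and the accuracy. The function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label, num_classes):
    """Cross-entropy between softmax(logits) and a one-hot target for one example."""
    one_hot = [1.0 if k == label else 0.0 for k in range(num_classes)]
    m = max(logits)  # subtract the max before exp() for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    log_probs = [z - log_sum_exp for z in logits]
    return -sum(t * lp for t, lp in zip(one_hot, log_probs))


def accuracy(batch_logits, labels):
    """Fraction of rows whose argmax equals the label, like evaluation_step."""
    correct = 0
    for row, label in zip(batch_logits, labels):
        predicted = max(range(len(row)), key=row.__getitem__)
        correct += int(predicted == label)
    return correct / len(labels)


if __name__ == "__main__":
    # Five classes, true class 0: a confident, correct prediction has a small loss.
    print(softmax_cross_entropy([2.0, 1.0, 0.1, 0.0, -1.0], 0, 5))
    print(accuracy([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]], [1, 0, 0]))
```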

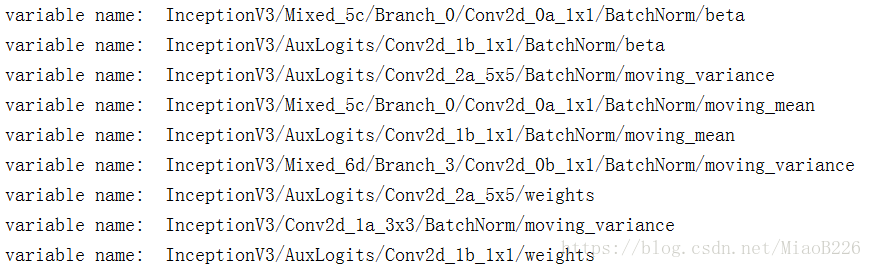

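Why these two particular strings are used puzzled me for a long time. After looking at the network definition file and at the variable names stored in the .ckpt file, I think I understand it a little better.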

CHECKPOINT_EXCLUDE_SCOPES = 'InceptionV3/Logits, InceptionV3/AuxLogits'
TRAINABLE_SCOPES = 'InceptionV3/Logits, InceptionV3/AuxLogits'

The code for reading the variable names comes from blogger AManFromEarth (thanks!): https://blog.csdn.net/AManFromEarth/article/details/81057577

import tensorflow as tf
from tensorflow.python import pywrap_tensorflow

# First, read the checkpoint with TensorFlow's bundled Python wrapper
model_reader = pywrap_tensorflow.NewCheckpointReader(r"E:\PythonSpace\finetune_NET\inception_v3.ckpt")

# Then get a dict-like mapping from variable names to shapes
var_dict = model_reader.get_variable_to_shape_map()

# Finally, print every variable name
for key in var_dict:
    print("variable name: ", key)

The output is:

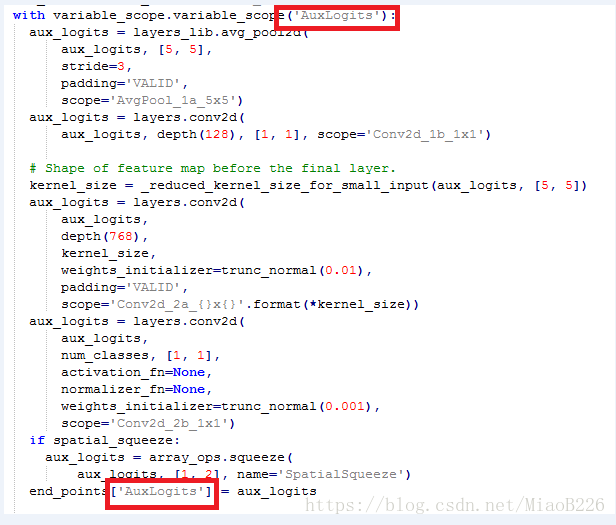

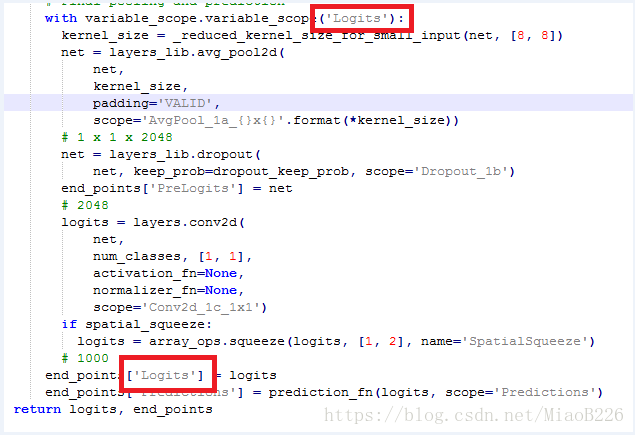

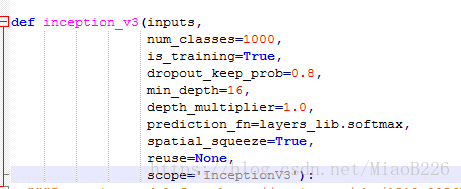
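With hundreds of variables in the output, the top-level structure is easier to see if you count names per scope. Below is a small sketch, assuming you pass it the keys of var_dict from the snippet above. count_by_scope is hypothetical, and the sample names in the demo only imitate the checkpoint's naming format.

```python
from collections import Counter


def count_by_scope(variable_names, depth=2):
    """Count variables by their first `depth` name-scope components.

    With depth=2, 'InceptionV3/Logits/Conv2d_1c_1x1/weights' is counted
    under 'InceptionV3/Logits'. Iterating a dict yields its keys, so
    var_dict can be passed in directly.
    """
    return Counter("/".join(name.split("/")[:depth]) for name in variable_names)


if __name__ == "__main__":
    # Hypothetical names in the same format as the checkpoint's variables.
    names = [
        "InceptionV3/Conv2d_1a_3x3/weights",
        "InceptionV3/Conv2d_1a_3x3/BatchNorm/beta",
        "InceptionV3/Logits/Conv2d_1c_1x1/weights",
        "InceptionV3/Logits/Conv2d_1c_1x1/biases",
        "InceptionV3/AuxLogits/Conv2d_2b_1x1/weights",
    ]
    for scope, count in sorted(count_by_scope(names).items()):
        print(scope, count)
```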

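I also looked at the network definition files in D:\Anaconda2\envs\Anaconda3\Lib\site-packages\tensorflow\contrib\slim\python\slim\nets (the exact path depends on your installation). This folder contains many classic network models.

In inception_v3.py, the last two blocks of the network are the AuxLogits layer (an auxiliary classifier) and the Logits layer.

The checkpoint output above shows that every variable name starts with InceptionV3/, and the network file shows that the last two layers are AuxLogits and Logits. Hence the scopes InceptionV3/Logits and InceptionV3/AuxLogits. These two heads contain the only variables whose shapes depend on the number of classes. In the pretrained checkpoint they output 1001 classes, while here num_classes is 5. They therefore have to be excluded from restoring and trained anew.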

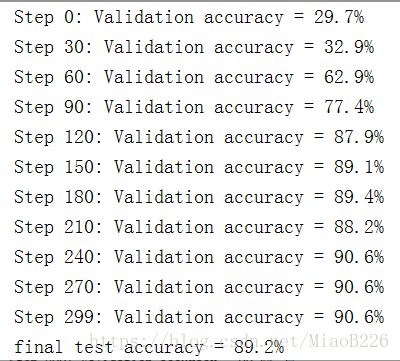
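get_tuned_variables in finetune_NET.py decides what to restore with a plain string-prefix test on each variable name. Here is the same logic on plain strings, as a sketch. split_restore is a hypothetical name, and the sample names only imitate the checkpoint format.

```python
CHECKPOINT_EXCLUDE_SCOPES = 'InceptionV3/Logits, InceptionV3/AuxLogits'


def split_restore(variable_names, exclude_scopes=CHECKPOINT_EXCLUDE_SCOPES):
    """Split names into (restore, skip) using the comma-separated prefixes."""
    exclusions = [scope.strip() for scope in exclude_scopes.split(',')]
    restore, skip = [], []
    for name in variable_names:
        if any(name.startswith(prefix) for prefix in exclusions):
            skip.append(name)      # retrained for the new number of classes
        else:
            restore.append(name)   # loaded from the pretrained checkpoint
    return restore, skip


if __name__ == "__main__":
    names = [
        "InceptionV3/Conv2d_1a_3x3/weights",
        "InceptionV3/Logits/Conv2d_1c_1x1/weights",
        "InceptionV3/AuxLogits/Conv2d_2b_1x1/biases",
    ]
    restore, skip = split_restore(names)
    print("restore:", restore)
    print("skip:", skip)
```

Because this is a raw prefix match, 'InceptionV3/Logits' would also match a hypothetical scope such as 'InceptionV3/LogitsExtra'. Appending '/' to each prefix avoids that if a model ever contains such names.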

Screenshot of finetune_NET.py running:

5. Extracting image features (deploy.py)

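In experiments we sometimes want more than the network's final classification result. The output of the layer just before the classifier is often used as a feature vector for each image. Here, just to walk through the workflow, we extract features for the training-set images.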

import numpy as np
import tensorflow as tf
import tensorflow.contrib.slim as slim
import scipy.io as scio

# Load the Inception-v3 model definition
import tensorflow.contrib.slim.python.slim.nets.inception_v3 as inception_v3

# Preprocessed data file
INPUT_DATA = r'E:\PythonSpace\finetune_NET\flower_processed_data.npy'
TRAIN_FILE = r'E:\PythonSpace\finetune_NET\model'

# Parameters used for deployment
BATCH = 20
N_CLASSES = 5

# Network weights saved after fine-tuning
model_path = r"E:\PythonSpace\finetune_NET\model\model_step31.ckpt"


def main(argv=None):
    # Load the preprocessed data (newer NumPy needs allow_pickle for object arrays)
    processed_data = np.load(INPUT_DATA, allow_pickle=True)
    training_images = processed_data[0]
    n_training_example = len(training_images)

    # Network input (229 must match the image size used in step 2)
    images = tf.placeholder(tf.float32, [None, 229, 229, 3], name="input_images")

    with slim.arg_scope(inception_v3.inception_v3_arg_scope()):
        # num_classes: must be set, otherwise restoring the fine-tuned weights fails
        # is_training: in my runs it made no visible difference, but it switches batch
        #   normalization and dropout to inference mode, so set it to False here
        # dropout_keep_prob: keep probability of the dropout applied to PreLogits;
        #   set it to 1 for feature extraction
        # end_points holds the output of every layer of the network
        logits, end_points = inception_v3.inception_v3(
            images, num_classes=N_CLASSES, is_training=False, dropout_keep_prob=1.)
    # Only fetch the layer we need instead of every entry in end_points
    feat = end_points['PreLogits']

    saver = tf.train.Saver()
    feat_train = []
    with tf.Session() as sess:
        # Initialize the network parameters from the fine-tuned model
        saver.restore(sess, model_path)

        # Exactly one pass over the training set; the last batch may be smaller
        for start in range(0, n_training_example, BATCH):
            end = min(start + BATCH, n_training_example)
            f = sess.run(feat, feed_dict={images: training_images[start:end]})
            # The extracted features are batch*1*1*2048; convert them to batch*2048
            f = f[:, 0, 0, :]
            feat_train.extend(f)

    # Save the training-set features as a .mat file
    scio.savemat(r"E:\PythonSpace\finetune_NET\feat_train.mat",
                 {"feat_train": np.asarray(feat_train)})


if __name__ == '__main__':
    tf.app.run()
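The two scripts walk through the training set differently. finetune_NET.py keeps wrapping around for a fixed number of steps. Feature extraction, by contrast, should visit every training image exactly once, and its last batch may be smaller than BATCH.

Here is a stdlib sketch of both (start, end) windowing schemes; the function names are hypothetical.

```python
def cyclic_batches(n_examples, batch_size, steps):
    """(start, end) windows produced by the training loop in finetune_NET.py."""
    start, end = 0, min(batch_size, n_examples)
    windows = []
    for _ in range(steps):
        windows.append((start, end))
        start = end
        if start == n_examples:  # wrap around to the beginning
            start = 0
        end = min(start + batch_size, n_examples)
    return windows


def one_pass_batches(n_examples, batch_size):
    """(start, end) windows that cover every example exactly once."""
    return [(start, min(start + batch_size, n_examples))
            for start in range(0, n_examples, batch_size)]


if __name__ == "__main__":
    print(cyclic_batches(7, 3, 5))   # wraps around after the short batch
    print(one_pass_batches(7, 3))    # stops after a single pass
```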

The parameters of the inception_v3() function can be seen here:

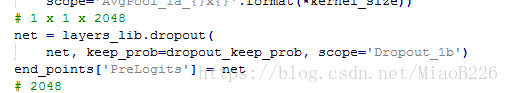

And here we can see that the features we want are stored in the end_points dictionary under the key 'PreLogits'.

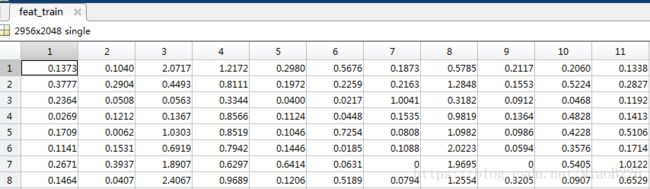
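PreLogits is the output of the final average pooling, passed through a dropout layer that does nothing at inference time. Its shape is [batch, 1, 1, 2048], which is why deploy.py indexes it with f[:, 0, 0, :].

Here is the same reshaping on nested Python lists, as a sketch. squeeze_prelogits is hypothetical, and the demo uses a depth of 3 instead of 2048 to stay short.

```python
def squeeze_prelogits(end_points, key="PreLogits"):
    """Turn a [batch, 1, 1, depth] nested list into [batch, depth].

    Equivalent to f[:, 0, 0, :] on a NumPy array of that shape; raises
    ValueError if the spatial size is not 1x1.
    """
    features = []
    for example in end_points[key]:
        if len(example) != 1 or len(example[0]) != 1:
            raise ValueError("expected spatial size 1x1, got %dx%d"
                             % (len(example), len(example[0])))
        features.append(list(example[0][0]))
    return features


if __name__ == "__main__":
    # A fake end_points dict with a batch of two examples.
    fake_end_points = {
        "PreLogits": [[[[0.1, 0.2, 0.3]]], [[[0.4, 0.5, 0.6]]]],
        "Logits": [[1.0], [2.0]],
    }
    print(squeeze_prelogits(fake_end_points))
```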

Screenshot of the extracted features:

This post collects notes from my own TensorFlow learning. As a beginner I may have misunderstood some points, so corrections are very welcome.