Sqoop1 从Oracle往Hive迁移数据

本篇幅讲述如何利用CDH Hadoop版本上面的Sqoop1从Oracle往Hive迁移数据,例子比较简单,仅供参考。

1 列出CDH目录下面的sqoop所有相关命令

[root@n12 bin]# pwd

/opt/cloudera/parcels/CDH/bin

[root@n12 bin]# ll sqoop*

-rwxr-xr-x 1 root root 918 Jul 23 2016 sqoop

-rwxr-xr-x 1 root root 1486 Jul 23 2016 sqoop2

-rwxr-xr-x 1 root root 1795 Jul 23 2016 sqoop2-server

-rwxr-xr-x 1 root root 1842 Jul 23 2016 sqoop2-tool

-rwxr-xr-x 1 root root 926 Jul 23 2016 sqoop-codegen

-rwxr-xr-x 1 root root 936 Jul 23 2016 sqoop-create-hive-table

-rwxr-xr-x 1 root root 923 Jul 23 2016 sqoop-eval

-rwxr-xr-x 1 root root 925 Jul 23 2016 sqoop-export

-rwxr-xr-x 1 root root 923 Jul 23 2016 sqoop-help

-rwxr-xr-x 1 root root 925 Jul 23 2016 sqoop-import

-rwxr-xr-x 1 root root 936 Jul 23 2016 sqoop-import-all-tables

-rwxr-xr-x 1 root root 922 Jul 23 2016 sqoop-job

-rwxr-xr-x 1 root root 933 Jul 23 2016 sqoop-list-databases

-rwxr-xr-x 1 root root 930 Jul 23 2016 sqoop-list-tables

-rwxr-xr-x 1 root root 924 Jul 23 2016 sqoop-merge

-rwxr-xr-x 1 root root 928 Jul 23 2016 sqoop-metastore

-rwxr-xr-x 1 root root 926 Jul 23 2016 sqoop-version

[root@n12 bin]# sqoop help

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 14:29:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.2 根据上述结果可知,运行sqoop命令,可以使用”sqoop tool-name [tool-arguments]”运行,也可以使用对应的Aliaes,如”sqoop import …”可以直接运行”sqoop-import”命令

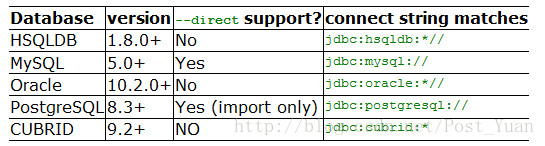

3 sqoop支持从多种RDBMS与Hadoop平台的数据导入导出,典型的有Oracle,Mysql,Postgresql,具体请参考官网:http://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html#_supported_databases

4 这里以从Oracle往Hive抽取数据为例,首先需要准备Oracle JDBC驱动ojdbc6.jar,Jar请自行从网上下载,这里不再赘述;将下载的ojdbc6.jar放到$SQOOP_HOME/lib目录下,如下

/opt/cloudera/parcels/CDH/lib/sqoop/lib

[root@n12 lib]# ll ojdbc6.jar

-rwxrwxrwx 1 root root 2739670 Feb 24 14:50 ojdbc6.jar5 执行”sqoop list-tables”命令列出Oracle中的表,

[root@n12 bin]# sqoop-list-tables --driver oracle.jdbc.OracleDriver --connect jdbc:oracle:thin:@10.10.11.16:1521/esgyn --username system --password 12345

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 14:58:53 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2

17/02/24 14:58:53 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

17/02/24 14:58:53 WARN sqoop.ConnFactory: Parameter --driver is set to an explicit driver however appropriate connection manager is not being set (via --connection-manager). Sqoop is going to fall back to org.apache.sqoop.manager.GenericJdbcManager. Please specify explicitly which connection manager should be used next time.

17/02/24 14:58:53 INFO manager.SqlManager: Using default fetchSize of 1000

SYS_IOT_OVER_70800

WWV_COLUMN_EXCEPTIONS

WWV_FLOWS

WWV_FLOWS_RESERVED

WWV_FLOW_ACTIVITY_LOG1$

WWV_FLOW_ACTIVITY_LOG2$

WWV_FLOW_ACTIVITY_LOG_NUMBER$

WWV_FLOW_ALTERNATE_CONFIG

WWV_FLOW_ALT_CONFIG_DETAIL

WWV_FLOW_ALT_CONFIG_PICK

WWV_FLOW_APPLICATION_GROUPS

WWV_FLOW_APP_BUILD_PREF

WWV_FLOW_APP_COMMENTS

WWV_FLOW_BANNER

WWV_FLOW_BUILDER_AUDIT_TRAIL

WWV_FLOW_BUTTON_TEMPLATES

WWV_FLOW_CALS

...6 执行”sqoop import”命令抽取Oracle中的一个表到Hive,如下

[root@n12 bin]# sqoop-import --connect jdbc:oracle:thin:@10.10.11.16:1521/esgyn --username system --password 12345 --table TEST_GROUPBY --split-by A --hive-import --create-hive-table --hive-table test_groupby --hive-overwrite --null-string '\\N' --null-non-string '\\N' --hive-drop-import-delims --verbose

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 15:08:46 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2

17/02/24 15:08:46 DEBUG tool.BaseSqoopTool: Enabled debug logging.

...

Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/jars/hive-common-1.1.0-cdh5.7.2.jar!/hive-log4j.properties

OK

Time taken: 2.409 seconds

Loading data to table default.test_groupby

chgrp: changing ownership of 'hdfs://n11.trafodion.local:8020/user/hive/warehouse/test_groupby/part-m-00000': User does not belong to hive

chgrp: changing ownership of 'hdfs://n11.trafodion.local:8020/user/hive/warehouse/test_groupby/part-m-00001': User does not belong to hive

chgrp: changing ownership of 'hdfs://n11.trafodion.local:8020/user/hive/warehouse/test_groupby/part-m-00002': User does not belong to hive

chgrp: changing ownership of 'hdfs://n11.trafodion.local:8020/user/hive/warehouse/test_groupby/part-m-00003': User does not belong to hive

Table default.test_groupby stats: [numFiles=4, numRows=0, totalSize=60, rawDataSize=0]

OK

Time taken: 0.886 seconds7 在Hive CLI中检查是否导入成功

hive> show tables;

test_groupby

Time taken: 1.726 seconds, Fetched: 11 row(s)

hive> select * from test_groupby;

OK

1.0 A B NULL NULL

2.0 A C NULL NULL

Time taken: 0.755 seconds, Fetched: 2 row(s)8 除了可以直接使用”sqoop-import”这种命令直接运行sqoop命令,也可以使用”sqoop-job”将一个sqoop命令保存为一个job,这样可以在需要的时间再调用它,而且可以重复执行。使用–create创建job,–list查看job,–delete删除job,–exec执行job

[root@n12 bin]# sqoop-job --create job1 -- import --connect jdbc:oracle:thin:@10.10.11.16:1521/esgyn --username system --password 12345 --table TEST_GROUPBY --split-by A --hive-import --create-hive-table --hive-table test_groupby --hive-overwrite --null-string '\\N' --null-non-string '\\N' --hive-drop-import-delims --verbose

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 17:00:43 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2

17/02/24 17:00:44 DEBUG tool.BaseSqoopTool: Enabled debug logging.

...

[root@n12 bin]# sqoop-job --list

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 17:02:42 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2

Available jobs:

job1

[root@n12 bin]# sqoop-job --delete job1

Warning: /opt/cloudera/parcels/CDH-5.7.2-1.cdh5.7.2.p0.18/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

17/02/24 17:03:12 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.2