Python's read(), readline(), and readlines(), and how to read large files

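The read() method:

1. read() reads the entire file into a single string. It is a poor fit when the file needs to be processed line by line.

2. If the file is larger than the available memory (several GB, say), read() cannot be used: Python raises MemoryError.

Two ways to write it: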

    f = open(r'C:\Users\DELL\Desktop\python\words.txt', 'r')  # create a file object, which is also iterable
    try:
        content = f.read()  # the result is a str
        print(type(content))
        print(content)
    finally:
        f.close()

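    # Equivalent form: the with statement closes the file automatically.
    with open(r'C:\Users\DELL\Desktop\python\words.txt', 'r') as f:  # create a file object, which is also iterable
        content = f.read()  # the result is a str
        print(type(content))
        print(content)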

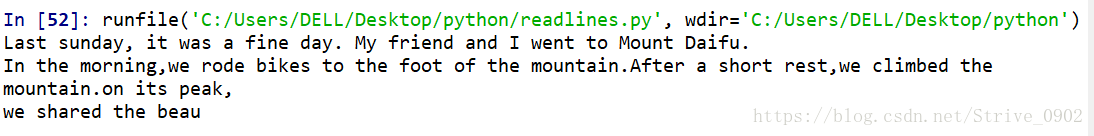

Output:

Last sunday, it was a fine day. My friend and I went to Mount Daifu.

In the morning,we rode bikes to the foot of the mountain.After a short rest,we climbed the mountain.on its peak,

we shared the beautiful scenery in our eyes.

There were lots of light foggy clouds around us.What's more, varieties of birds flying around us were pretty.

What a harmony situation! At noon,we had lunch in a restaurant. With a happy emotion, we finished our climbing.

Although tired, we still felt happy and relaxed.we hope our country will become more and more beautiful.

The readline() method:

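1. readline() reads one line per call. Looping over it is usually much slower than a single readlines() call, but only one line is held in memory at a time.

2. readline() returns a string holding the current line, including its trailing newline, and returns an empty string at end of file.

Two ways to write it: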

    f = open(r'C:\Users\DELL\Desktop\python\words.txt', 'r')
    try:
        while True:
            line = f.readline()
            if line:
                print(line)
            else:
                break
    finally:
        f.close()

    # Equivalent form using the with statement.
    with open(r'C:\Users\DELL\Desktop\python\words.txt', 'r') as f:
        while True:
            line = f.readline()
            if line:
                print(line)
            else:
                break  # leave the loop; without this end-of-file check the loop would never terminate

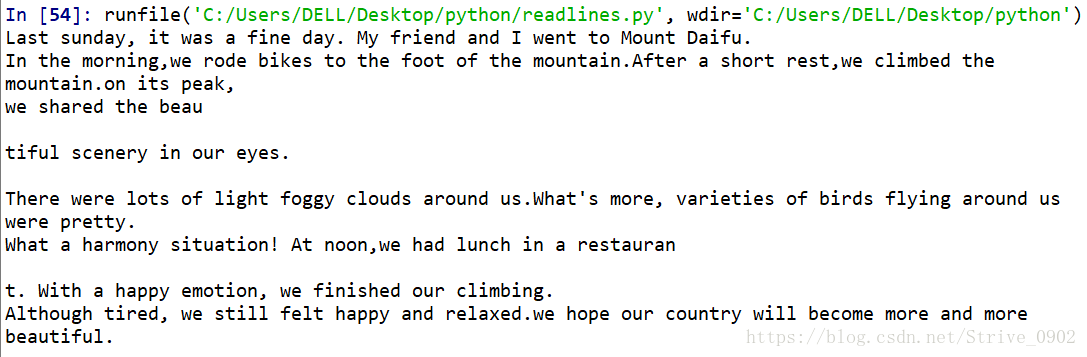

readline() returns each line with its trailing newline, and print() appends another newline by default, so a blank line appears after every printed line.
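One way to avoid the doubled line breaks is to tell print() not to add its own newline. The following is a minimal sketch of that idea, not the author's code: the helper name `print_lines_once` and the file `demo_words.txt` are made up for this illustration, and the sample file is created on the spot so the snippet runs anywhere.

```python
import os
import tempfile


def print_lines_once(path):
    """Print a text file line by line without doubling the line breaks."""
    with open(path, "r") as f:
        while True:
            line = f.readline()
            if not line:  # an empty string means end of file
                break
            # The line already ends with "\n", so suppress print's own newline.
            print(line, end="")


# Hypothetical sample file, created only for this demonstration.
demo_path = os.path.join(tempfile.mkdtemp(), "demo_words.txt")
with open(demo_path, "w") as f:
    f.write("first line\nsecond line\n")

print_lines_once(demo_path)
```

Calling `line.rstrip("\n")` before printing would work as well. For comparison, the unmodified loops above print the sample file with a blank line after every line: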

Last sunday, it was a fine day. My friend and I went to Mount Daifu.

In the morning,we rode bikes to the foot of the mountain.After a short rest,we climbed the mountain.on its peak,

we shared the beautiful scenery in our eyes.

There were lots of light foggy clouds around us.What's more, varieties of birds flying around us were pretty.

What a harmony situation! At noon,we had lunch in a restaurant. With a happy emotion, we finished our climbing.

Although tired, we still felt happy and relaxed.we hope our country will become more and more beautiful.

The readlines() method:

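1. readlines() reads the entire file in one call.

2. It automatically splits the content into a list of lines, each keeping its trailing newline.

Two ways to write it: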

    f = open(r'C:\Users\DELL\Desktop\python\words.txt', 'r')
    try:
        lines = f.readlines()
        print("type(lines)=", type(lines))  # type(lines)= <class 'list'>
        print(lines)
    finally:
        f.close()

    # Equivalent form using the with statement, printing one line at a time.
    with open(r'C:\Users\DELL\Desktop\python\words.txt', 'r') as f:
        lines = f.readlines()
        print("type(lines)=", type(lines))  # type(lines)= <class 'list'>
        for line in lines:
            print(line)
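Each element returned by readlines() keeps its trailing newline, which is often not wanted when the lines are processed further. A small sketch of stripping it, using a made-up sample file (`demo_lines.txt` is hypothetical and created here only so the snippet is self-contained):

```python
import os
import tempfile

# Hypothetical sample file, created only for this demonstration.
demo_path = os.path.join(tempfile.mkdtemp(), "demo_lines.txt")
with open(demo_path, "w") as f:
    f.write("alpha\nbeta\ngamma")  # note: no newline after the last line

with open(demo_path, "r") as f:
    raw_lines = f.readlines()  # elements keep their "\n" where the file had one

# Strip only the trailing newline, leaving other whitespace untouched.
clean_lines = [line.rstrip("\n") for line in raw_lines]

for line in clean_lines:
    print(line)
```

Stripping with `rstrip("\n")` rather than a bare `strip()` is a deliberate choice here: it leaves leading and trailing spaces inside the line alone.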

Output of the two readlines() examples that read words.txt:

type(lines)= <class 'list'>

['Last sunday, it was a fine day. My friend and I went to Mount Daifu.\n', 'In the morning,we rode bikes to the foot of the mountain.After a short rest,we climbed the mountain.on its peak,\n', 'we shared the beautiful scenery in our eyes.\n', ' \n', "There were lots of light foggy clouds around us.What's more, varieties of birds flying around us were pretty.\n", 'What a harmony situation! At noon,we had lunch in a restaurant. With a happy emotion, we finished our climbing.\n', 'Although tired, we still felt happy and relaxed.we hope our country will become more and more beautiful.']

type(lines)= <class 'list'>

Last sunday, it was a fine day. My friend and I went to Mount Daifu.

In the morning,we rode bikes to the foot of the mountain.After a short rest,we climbed the mountain.on its peak,

we shared the beautiful scenery in our eyes.

There were lots of light foggy clouds around us.What's more, varieties of birds flying around us were pretty.

What a harmony situation! At noon,we had lunch in a restaurant. With a happy emotion, we finished our climbing.

Although tired, we still felt happy and relaxed.we hope our country will become more and more beautiful.

Reading data from large files

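An obvious way to handle a large file is to split it into smaller pieces, process one piece at a time, and release that piece's memory before moving on. The approach here uses iter & yield:

- Reading in chunks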

A generator that reads the file one chunk at a time:

    def read_in_chunks(filePath, chunk_size=1024*1024):
        """Lazy function (generator) to read a file piece by piece.

        Default chunk size: 1M. You can set your own chunk size.
        """
        # The with block closes the file once the generator is exhausted.
        with open(filePath) as file_object:
            while True:
                chunk_data = file_object.read(chunk_size)
                if not chunk_data:
                    break
                yield chunk_data

    if __name__ == "__main__":
        filePath = './path/filename'
        for chunk in read_in_chunks(filePath):
            process(chunk)  # process() stands for whatever handles each chunk
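The text mentions iter as well as yield, while the generator above uses only yield. The same loop can also be written with the two-argument form of the built-in iter(), which keeps calling a function until it returns a sentinel value. This is a sketch under stated assumptions: `read_in_chunks_iter` and `demo_chunks.txt` are hypothetical names, and the tiny chunk size is chosen only to make the behavior visible.

```python
import os
import tempfile
from functools import partial


def read_in_chunks_iter(file_path, chunk_size=1024 * 1024):
    """Yield successive chunks of a text file using iter(callable, sentinel)."""
    with open(file_path, "r") as file_object:
        # iter() keeps calling file_object.read(chunk_size) until it returns
        # the sentinel "", which is what read() returns at end of file.
        for chunk in iter(partial(file_object.read, chunk_size), ""):
            yield chunk


# Hypothetical sample file, created only for this demonstration.
demo_path = os.path.join(tempfile.mkdtemp(), "demo_chunks.txt")
with open(demo_path, "w") as f:
    f.write("abcdefghij")

chunks = list(read_in_chunks_iter(demo_path, chunk_size=4))
print(chunks)  # ['abcd', 'efgh', 'ij']
```

For a file opened in binary mode the sentinel would be `b""` instead of `""`.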

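Notes:

1. Setting the size to 1024*1024 means 1 byte * 1024 * 1024 = 1 MB (in text mode the unit is characters rather than bytes), so each read() call fetches at most 1 MB of data.

2. Note the use of yield here. For why yield is used rather than return, see: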

https://blog.csdn.net/Strive_0902/article/details/82924728
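Regarding the chunk size in note 1: the argument to read() counts characters when the file is opened in text mode and bytes when it is opened in binary mode ('rb'). The difference only shows up with multi-byte text. A small sketch, assuming a UTF-8 encoded sample file (`demo_units.txt` is a made-up name, created here for the demonstration):

```python
import os
import tempfile

# Hypothetical sample file containing multi-byte UTF-8 characters.
demo_path = os.path.join(tempfile.mkdtemp(), "demo_units.txt")
with open(demo_path, "w", encoding="utf-8") as f:
    f.write("你好世界")  # 4 characters, 12 bytes in UTF-8

with open(demo_path, "r", encoding="utf-8") as f:
    text_chunk = f.read(2)  # text mode: 2 characters

with open(demo_path, "rb") as f:
    byte_chunk = f.read(2)  # binary mode: 2 bytes

print(repr(text_chunk), len(text_chunk))
print(repr(byte_chunk), len(byte_chunk))
```

In binary mode a chunk boundary can fall in the middle of a character, so chunks read that way should not be decoded one by one without care.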

The earlier read() example can be adapted in the same way: passing an argument to read() sets how much is read per call. The modified code:

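    def read_trunk(file_name, trunk_size):
        with open(file_name, 'r') as f:  # create a file object, which is also iterable
            while True:
                content = f.read(trunk_size)  # the result is a str
                if not content:
                    break
                # print(type(content))
                # print()
                # print(content)
                yield content
                # return content

    if __name__ == '__main__':
        file_name = r'C:\Users\DELL\Desktop\python\words.txt'
        # print(read_trunk(file_name, 200))
        for i in read_trunk(file_name, 200):
            print(i)
            print()

Running it shows that the file is read 200 characters at a time. The reason for using yield rather than return is that return runs once and leaves the function with its value, so the rest of the file can never be read. If the commented-out return statement is used in place of yield in the code above, only the first 200 characters are ever returned.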
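The difference between return and yield can be seen side by side in a small sketch. The function names `first_chunk_only` and `all_chunks` and the file `demo_yield.txt` are hypothetical, introduced only for this comparison; the loop bodies mirror read_trunk.

```python
import os
import tempfile


def first_chunk_only(file_name, trunk_size):
    """Variant using return: the function exits after the first read."""
    with open(file_name, "r") as f:
        while True:
            content = f.read(trunk_size)
            if not content:
                break
            return content  # leaves the function on the first pass


def all_chunks(file_name, trunk_size):
    """Variant using yield: execution resumes after each chunk is consumed."""
    with open(file_name, "r") as f:
        while True:
            content = f.read(trunk_size)
            if not content:
                break
            yield content


# Hypothetical sample file, created only for this demonstration.
demo_path = os.path.join(tempfile.mkdtemp(), "demo_yield.txt")
with open(demo_path, "w") as f:
    f.write("0123456789")

returned = first_chunk_only(demo_path, 4)
yielded = list(all_chunks(demo_path, 4))
print(returned)  # 0123
print(yielded)   # ['0123', '4567', '89']
```

The return variant hands back a plain string, so looping over its result would iterate over single characters rather than chunks.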

- Using with open()

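Iterating over the file object f with for line in f automatically uses buffered I/O and memory management, so there is no need to worry about the size of the file, as long as individual lines are of reasonable length.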

    with open(filename, 'rb') as f:
        for line in f: