sofasofa竞赛:一 公共自行车使用量预测

一 简介

背景介绍:

公共自行车低碳、环保、健康,并且解决了交通中“最后一公里”的痛点,在全国各个城市越来越受欢迎。本练习赛的数据取自于两个城市某街道上的几处公共自行车停车桩。我们希望根据时间、天气等信息,预测出该街区在一小时内的被借取的公共自行车的数量。

数据下载 地址:http://sofasofa.io/competition.php?id=1

数据文件(三个):

train.csv 训练集,文件大小 273kb

test.csv 预测集, 文件大小 179kb

sample_submit.csv 提交示例 文件大小 97kb

训练集中共有10000条样本,预测集中有7000条样本。

变量说明:

| 变量名 | 解释 |

|---|---|

| id | 行编号,没有实际意义 |

| y | 一小时内自行车被借取的数量。在test.csv中,这是需要被预测的数值。 |

| city | 表示该行记录所发生的城市,一共两个城市 |

| hour | 当时的时间,精确到小时,24小时计时法 |

| is_workday | 1表示工作日,0表示节假日或者周末 |

| temp_1 | 当时的气温,单位为摄氏度 |

| temp_2 | 当时的体感温度,单位为摄氏度 |

| weather | 当时的天气状况,1为晴朗,2为多云、阴天,3为轻度降水天气,4为强降水天气 |

| wind | 当时的风速,数值越大表示风速越大 |

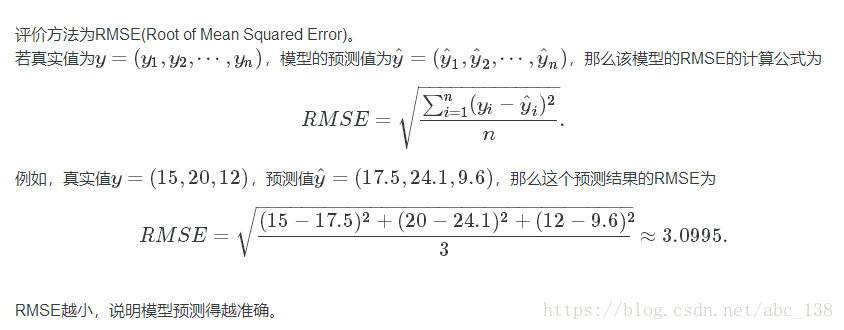

评价方法

二 解决方法

1 简单线性回归模型(Python)

# 标杆模型

import pandas as pd

from sklearn.linear_model import LinearRegression

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id',axis = 1,inplace = True)

# 取出训练集的y

y_train = train.pop('y')

# 建立回归模型

reg = LinearRegression()

reg.fit(train,y_train)

y_pred = reg.predict(test)

# 若预测值是负数,则取0

y_pred = list(map(lambda x: x if x >= 0 else 0, y_pred))

# 输出预测结果到my_LR_prediction.csv

submit['y'] = y_pred

submit.to_csv("my_LR_prediction3.csv",index = False)

该模型预测结果的RMSE为:39.132

2 决策树回归模型(Python)

# 决策树

from sklearn.tree import DecisionTreeRegressor

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

# 建立最大深度为5的决策树回归模型

reg = DecisionTreeRegressor(max_depth=5)

reg.fit(train, y_train)

y_pred = reg.predict(test)

# 输出预测结果至my_DT_prediction.csv

submit['y'] = y_pred

submit.to_csv('my_DT_prediction.csv', index=False)

该模型预测结果的RMSE为:28.818

3 xgboost回归模型(Python)

from xgboost import XGBRegressor

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

# 建立一个默认的xgboost回归模型

reg = XGBRegressor(learning_rate = 0.05,max_depth = 6,n_estimators = 500)

reg.fit(train, y_train)

y_pred = reg.predict(test)

# 输出预测结果至my_XGB_prediction.csv

submit['y'] = y_pred

submit.to_csv('my_XGB_tc_prediction.csv', index=False)该模型预测结果的RMSE为:18.947

4 随机森林(Python)

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestRegressor

from sklearn import cross_validation, metrics

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

rf = RandomForestRegressor(n_estimators=70)

rf.fit(train,y_train)

y_pred = rf.predict(test)

submit['y'] = y_pred

submit.to_csv('my_rf_prediction.csv',index=False)

该模型预测结果的RMSE为:16.245

二 调参

参数调优的一般方法。

需要进行如下步骤:

1. 选择较高的学习速率(learning rate)。一般情况下,学习速率的值为0.1。但是,对于不同的问题,理想的学习速率有时候会在0.05到0.3之间波动。选择对应于此学习速率的理想决策树数量。XGBoost有一个很有用的函数“cv”,这个函数可以在每一次迭代中使用交叉验证,并返回理想的决策树数量。

2. 对于给定的学习速率和决策树数量,进行决策树特定参数调优(max_depth, min_child_weight, gamma, subsample, colsample_bytree)。在确定一棵树的过程中,我们可以选择不同的参数,待会儿我会举例说明。

3. xgboost的正则化参数的调优。(lambda, alpha)。这些参数可以降低模型的复杂度,从而提高模型的表现。

4. 降低学习速率,确定理想参数

1 xgboost使用 GridSearchCV调参

(1)首先调 n_estimators

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test1 = {

'n_estimators':range(100,1000,100)

}

gsearch1 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, max_depth=5,

min_child_weight=1, gamma=0, subsample=0.8, colsample_bytree=0.8,

nthread=4, scale_pos_weight=1, seed=27),

param_grid = param_test1, iid=False, cv=5)

gsearch1.fit(train, y_train)

print(gsearch1.best_params_, gsearch1.best_score_)

得到结果为 {'n_estimators': 200} 0.902471795911

(2)参数:max_depth 和min_child_weight

我们先对这两个参数调优,是因为它们对最终结果有很大的影响。首先,我们先大范围地粗调参数,然后再小范围地微调

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test2 = {

'max_depth':range(3,10,2),

'min_child_weight':range(1,6,2)

}

gsearch2 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, n_estimators=200 ),

param_grid = param_test2)

gsearch2.fit(train, y_train)

print(gsearch2.best_params_, gsearch2.best_score_)得到结果为:{'min_child_weight': 5, 'max_depth': 5} 0.903728688825

我们对于数值进行了较大跨度的12中不同的排列组合,可以看出理想的max_depth值为5,理想的min_child_weight值为5。在这个值附近我们可以再进一步调整,来找出理想值。我们把上下范围各拓展1,因为之前我们进行组合的时候,参数调整的步长是2。

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test3 = {

'max_depth':[4,5,6],

'min_child_weight':[4,5,6]

}

gsearch3 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, n_estimators=200 ),

param_grid = param_test3)

gsearch3.fit(train, y_train)

print(gsearch3.best_params_, gsearch3.best_score_)得到的结果:{'min_child_weight': 5, 'max_depth': 5} 0.903728688825

(3)gamma参数调优

在已经调整好其它参数的基础上,我们可以进行gamma参数的调优了。Gamma参数取值范围可以很大,我这里把取值范围设置为5了。你其实也可以取更精确的gamma值。

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test4 = {

'gamma': [i / 10.0 for i in range(0, 5)]

}

gsearch4 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, n_estimators=200,max_depth=5,min_child_weight=5 ),

param_grid = param_test4)

gsearch4.fit(train, y_train)

print(gsearch4.best_params_, gsearch4.best_score_)得到的结果为:{'gamma': 0.0} 0.903728688825

(4)调整subsample 和 colsample_bytree 参数

下一步是尝试不同的subsample 和 colsample_bytree 参数。我们分两个阶段来进行这个步骤。这两个步骤都取0.6,0.7,0.8,0.9作为起始值。

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test5 = {

'subsample': [i / 10.0 for i in range(6, 10)],

'colsample_bytree': [i / 10.0 for i in range(6, 10)]

}

gsearch5 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, n_estimators=200,max_depth=5,min_child_weight=5,gamma=0.0 ),

param_grid = param_test5)

gsearch5.fit(train, y_train)

print(gsearch5.best_params_, gsearch5.best_score_)得到的结果为:{'colsample_bytree': 0.9, 'subsample': 0.7} 0.903596572763

(5)正则化参数调优

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

param_test6 = {

'reg_alpha': [0, 0.001, 0.005, 0.01, 0.05]

}

gsearch6 = GridSearchCV(estimator = XGBRegressor( learning_rate =0.1, n_estimators=200,max_depth=5,min_child_weight=5,gamma=0.0,colsample_bytree= 0.9, subsample=0.7),

param_grid = param_test6)

gsearch6.fit(train, y_train)

print(gsearch6.best_params_, gsearch6.best_score_)得到的结果为 {'reg_alpha': 0.001} 0.903596599584汇总最后提交得到的结果为15.02,比直接用xgboost提高3.9

from xgboost import XGBRegressor

from sklearn.model_selection import GridSearchCV

import pandas as pd

# 读取数据

train = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\train.csv")

test = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\test.csv")

submit = pd.read_csv(r"D:\PythonWenjian\sofasofa\bike_pre\data\sample_submit.csv")

# 删除id

train.drop('id', axis=1, inplace=True)

test.drop('id', axis=1, inplace=True)

# 取出训练集的y

y_train = train.pop('y')

reg= XGBRegressor( learning_rate =0.1, n_estimators=200,max_depth=5,min_child_weight=5,gamma=0.0,colsample_bytree= 0.9, subsample=0.7,reg_alpha=0.001)

reg.fit(train, y_train)

y_pred = reg.predict(test)

# 输出预测结果至my_XGB_prediction.csv

submit['y'] = y_pred

submit.to_csv('my_XGB_tc2_prediction.csv', index=False)