Hands-on with a MongoDB replica set (Replication) under Docker

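In the earlier article 《Docker下,极速体验mongodb》 (a quick tour of MongoDB under Docker) we tried a standalone MongoDB instance. Production environments usually run a cluster to avoid a single point of failure, so in this article we set up a MongoDB replica set (Replication) cluster under Docker.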

Introduction to replica sets

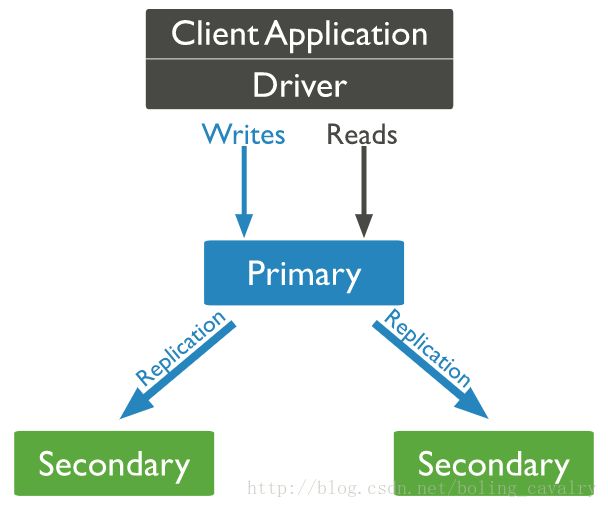

下图来自mongodb官网,说明了副本集的部署和用法:

如上图,一共有三个mongodb server,分别是Primary、Secondary1、Secondary2,应用服务器的读写都在Primary完成,但是数据都会同步到Secondary1和Secondary2上,当Primary不可用时,会通过重新选举得到新的Primary机器,以保证整个服务是可用的,如下图:

Environment

This exercise starts three containers. Their roles and IPs are listed in the following table:

| Container | IP | Role |
|---|---|---|
| m0 | 172.18.0.3 | Primary |
| m1 | 172.18.0.4 | Secondary1 |
| m2 | 172.18.0.2 | Secondary2 |

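The image used

The image used here is bolingcavalry/ubuntu16-mongodb349:0.0.1, a MongoDB image that I built myself and pushed to hub.docker.com. You can download it with `docker pull bolingcavalry/ubuntu16-mongodb349:0.0.1`. For details about this image, see 《制作mongodb的Docker镜像文件》 (building a Docker image for MongoDB).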

docker-compose.yml

To manage all the containers in one place, we use a docker-compose.yml file for the three servers. Its content is:

    version: '2'
    services:
      m0:
        image: bolingcavalry/ubuntu16-mongodb349:0.0.1
        container_name: m0
        ports:
          - "28017:28017"
        command: /bin/sh -c 'mongod --replSet replset0'
        restart: always
      m1:
        image: bolingcavalry/ubuntu16-mongodb349:0.0.1
        container_name: m1
        command: /bin/sh -c 'mongod --replSet replset0'
        restart: always
      m2:
        image: bolingcavalry/ubuntu16-mongodb349:0.0.1
        container_name: m2
        command: /bin/sh -c 'mongod --replSet replset0'
        restart: always

As shown above, the three containers use the same image and the same start command, `/bin/sh -c 'mongod --replSet replset0'`. The `--replSet replset0` option starts mongod in replica set mode, with replset0 as the name of the set.

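I hit a small problem when starting the containers with `docker-compose up -d`: afterwards, `docker ps` showed all three containers in the "Restarting" state. A web search suggested that insufficient memory might be the cause, so I rebooted my computer and ran `docker-compose up -d` again with no other memory-hungry processes open. This time the containers started successfully (my machine is a Mac Pro with 8 GB of memory).

The best fix would be to limit the memory that MongoDB uses, but I did not find a parameter for that. The next best fix is to limit the memory of the Docker container: `docker run` accepts the `-m` option for this, but I did not find a memory-limit parameter for the docker-compose command. So if you run into the same problem, do not start the containers with docker-compose. The three `docker run` commands below achieve the same result, and you can add the `-m` option to them to limit memory. (Two options that I did not try here may also help: mongod's `--wiredTigerCacheSizeGB` option caps the WiredTiger cache, and version 2 compose files accept a `mem_limit` key on each service.)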

    docker run --name m0 -idt -p 28017:28017 bolingcavalry/ubuntu16-mongodb349:0.0.1 /bin/bash -c 'mongod --replSet replset0'
    docker run --name m1 -idt bolingcavalry/ubuntu16-mongodb349:0.0.1 /bin/bash -c 'mongod --replSet replset0'
    docker run --name m2 -idt bolingcavalry/ubuntu16-mongodb349:0.0.1 /bin/bash -c 'mongod --replSet replset0'

Getting the IPs of the three containers

Run the following command to print a container's hosts file, which contains the container's IP:

    docker exec m1 cat /etc/hosts

In the output, 172.18.0.4 is the container's IP (it is marked with a red box in the original screenshot).

The same method gives the IPs of all three containers:

| Container | IP | Role |
|---|---|---|
| m0 | 172.18.0.3 | Primary |
| m1 | 172.18.0.4 | Secondary1 |
| m2 | 172.18.0.2 | Secondary2 |
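If you capture the output of `docker exec m1 cat /etc/hosts` as text, the IP can also be picked out programmatically. The sketch below is plain JavaScript that runs in Node.js. The function name `containerIpFromHosts` and the sample text (including the container id `0123456789ab`) are made up for illustration, and it assumes that the container's own entry is the only non-loopback IPv4 line in the file.

```javascript
// Hypothetical helper: return the first non-loopback IPv4 address found in
// the text of a container's /etc/hosts file, or null if there is none.
function containerIpFromHosts(hostsText) {
  var lines = hostsText.split("\n");
  for (var i = 0; i < lines.length; i++) {
    var line = lines[i].trim();
    if (line === "" || line.charAt(0) === "#") {
      continue; // skip blank lines and comments
    }
    var ip = line.split(/\s+/)[0]; // the address is the first field
    var isIpv4 = /^\d{1,3}(\.\d{1,3}){3}$/.test(ip);
    if (isIpv4 && ip.indexOf("127.") !== 0) {
      return ip;
    }
  }
  return null;
}

// Sample text shaped like a container's hosts file. The values are made up,
// except the IP, which is m1's address from the table above.
var sampleHosts = [
  "127.0.0.1\tlocalhost",
  "::1\tlocalhost ip6-localhost ip6-loopback",
  "172.18.0.4\t0123456789ab"
].join("\n");

console.log(containerIpFromHosts(sampleHosts)); // prints 172.18.0.4
```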

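Configuring the replica set

1. Run `docker exec -it m0 /bin/bash` to enter the m0 container, then run `mongo` to open the MongoDB shell.

2. Run `use admin` to switch to the admin database.

3. Run the following command to define the member configuration. Here replset0 is the value passed to `--replSet` when mongod was started; it is the id of the replica set:

       config = { _id:"replset0", members:[{_id:0,host:"172.18.0.3:27017"},{_id:1,host:"172.18.0.4:27017"},{_id:2,host:"172.18.0.2:27017"}]}

   The shell prints:

       > config = { _id:"replset0", members:[{_id:0,host:"172.18.0.3:27017"},{_id:1,host:"172.18.0.4:27017"},{_id:2,host:"172.18.0.2:27017"}]}
       {
           "_id" : "replset0",
           "members" : [
               {
                   "_id" : 0,
                   "host" : "172.18.0.3:27017"
               },
               {
                   "_id" : 1,
                   "host" : "172.18.0.4:27017"
               },
               {
                   "_id" : 2,
                   "host" : "172.18.0.2:27017"
               }
           ]
       }

   The config object now lists all three machines; they are not part of a running set until the configuration is applied.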

4. Run `rs.initiate(config)` to apply the configuration.

5. Run `rs.status()` to check the state. The output is:

       replset0:OTHER> rs.status()
       {
           "set" : "replset0",
           "date" : ISODate("2017-10-08T02:36:19.839Z"),
           "myState" : 1,
           "term" : NumberLong(1),
           "heartbeatIntervalMillis" : NumberLong(2000),
           "optimes" : {
               "lastCommittedOpTime" : {
                   "ts" : Timestamp(1507430170, 1),
                   "t" : NumberLong(1)
               },
               "appliedOpTime" : {
                   "ts" : Timestamp(1507430170, 1),
                   "t" : NumberLong(1)
               },
               "durableOpTime" : {
                   "ts" : Timestamp(1507430170, 1),
                   "t" : NumberLong(1)
               }
           },
           "members" : [
               {
                   "_id" : 0,
                   "name" : "172.18.0.3:27017",
                   "health" : 1,
                   "state" : 1,
                   "stateStr" : "PRIMARY",
                   "uptime" : 666,
                   "optime" : {
                       "ts" : Timestamp(1507430170, 1),
                       "t" : NumberLong(1)
                   },
                   "optimeDate" : ISODate("2017-10-08T02:36:10Z"),
                   "electionTime" : Timestamp(1507429929, 1),
                   "electionDate" : ISODate("2017-10-08T02:32:09Z"),
                   "configVersion" : 1,
                   "self" : true
               },
               {
                   "_id" : 1,
                   "name" : "172.18.0.4:27017",
                   "health" : 1,
                   "state" : 2,
                   "stateStr" : "SECONDARY",
                   "uptime" : 262,
                   "optime" : {
                       "ts" : Timestamp(1507430170, 1),
                       "t" : NumberLong(1)
                   },
                   "optimeDurable" : {
                       "ts" : Timestamp(1507430170, 1),
                       "t" : NumberLong(1)
                   },
                   "optimeDate" : ISODate("2017-10-08T02:36:10Z"),
                   "optimeDurableDate" : ISODate("2017-10-08T02:36:10Z"),
                   "lastHeartbeat" : ISODate("2017-10-08T02:36:19.373Z"),
                   "lastHeartbeatRecv" : ISODate("2017-10-08T02:36:18.275Z"),
                   "pingMs" : NumberLong(0),
                   "syncingTo" : "172.18.0.3:27017",
                   "configVersion" : 1
               },
               {
                   "_id" : 2,
                   "name" : "172.18.0.2:27017",
                   "health" : 1,
                   "state" : 2,
                   "stateStr" : "SECONDARY",
                   "uptime" : 262,
                   "optime" : {
                       "ts" : Timestamp(1507430170, 1),
                       "t" : NumberLong(1)
                   },
                   "optimeDurable" : {
                       "ts" : Timestamp(1507430170, 1),
                       "t" : NumberLong(1)
                   },
                   "optimeDate" : ISODate("2017-10-08T02:36:10Z"),
                   "optimeDurableDate" : ISODate("2017-10-08T02:36:10Z"),
                   "lastHeartbeat" : ISODate("2017-10-08T02:36:19.373Z"),
                   "lastHeartbeatRecv" : ISODate("2017-10-08T02:36:18.274Z"),
                   "pingMs" : NumberLong(0),
                   "syncingTo" : "172.18.0.3:27017",
                   "configVersion" : 1
               }
           ],
           "ok" : 1
       }

   As you can see, all three containers have joined the set, and m0 is the Primary.
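The config document typed by hand earlier can also be generated from a list of addresses, which avoids mistakes in the `_id` numbering. The following is a minimal sketch, not part of MongoDB: the helper name `buildReplSetConfig` is an assumption made for illustration. The function uses only plain ES5 JavaScript, so its definition can also be pasted into the mongo shell; the `console.log` line is there for running the file with Node.js.

```javascript
// Hypothetical helper (not a MongoDB API): build a replica set configuration
// document from a set name and a list of "host:port" strings.
function buildReplSetConfig(setName, hosts) {
  var members = [];
  for (var i = 0; i < hosts.length; i++) {
    // Member _id values follow the order of the hosts array: 0, 1, 2, ...
    members.push({ _id: i, host: hosts[i] });
  }
  return { _id: setName, members: members };
}

var config = buildReplSetConfig("replset0", [
  "172.18.0.3:27017",
  "172.18.0.4:27017",
  "172.18.0.2:27017"
]);

// Print the generated document. In the mongo shell you would instead pass
// it to rs.initiate(config).
console.log(JSON.stringify(config, null, 2));
```

The generated document has the same `_id` and `members` entries as the one entered by hand, so the rest of the procedure is unchanged.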

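Verifying replication

1. In the MongoDB shell on m0, run the following commands to create the school database and insert three documents into the student collection:

       use school
       db.student.insert({name:"Tom", age:16})
       db.student.insert({name:"Jerry", age:15})
       db.student.insert({name:"Mary", age:9})

2. Enter the m1 container, run `mongo` to open the MongoDB shell, and run the following commands to query the school database:

       use school
       db.student.find()

   The shell immediately returns the following error:

       replset0:SECONDARY> db.student.find()
       Error: error: {
           "ok" : 0,
           "errmsg" : "not master and slaveOk=false",
           "code" : 13435,
           "codeName" : "NotMasterNoSlaveOk"
       }

   The error occurs because MongoDB by default performs both reads and writes on the Primary, and a secondary rejects reads unless they are explicitly allowed. The following command allows reads on the secondary for the current shell connection:

       db.getMongo().setSlaveOk()

   Now run the query again:

       replset0:SECONDARY> db.student.find()
       { "_id" : ObjectId("59d98fde9740291fac4998fb"), "name" : "Tom", "age" : 16 }
       { "_id" : ObjectId("59d98fe69740291fac4998fc"), "name" : "Jerry", "age" : 15 }
       { "_id" : ObjectId("59d98fed9740291fac4998fd"), "name" : "Mary", "age" : 9 }

   This time the read succeeds.

3. Try to insert a document on m1:

       replset0:SECONDARY> db.student.insert({name:"Mike", age:18})
       WriteResult({ "writeError" : { "code" : 10107, "errmsg" : "not master" } })

   The insert fails because m1 is not the Primary and does not accept writes.

4. Run `db.getMongo().setSlaveOk()` on m2 as well, so that m2 can also serve reads.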
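Instead of finding out from a failed insert, a script can first check which role the connected node currently has. In the mongo shell, `db.isMaster()` returns a document that includes `ismaster`, `secondary`, and `primary` fields. The sketch below interprets such a document. The helper name `describeRole` is hypothetical, and the two sample documents are hand-written stand-ins that contain only the fields the helper reads.

```javascript
// Hypothetical helper: interpret the document returned by db.isMaster().
function describeRole(isMasterDoc) {
  if (isMasterDoc.ismaster === true) {
    return "PRIMARY: reads and writes are accepted here";
  }
  if (isMasterDoc.secondary === true) {
    // The "primary" field holds the address of the current Primary, if known.
    return "SECONDARY: send writes to " + (isMasterDoc.primary || "(unknown)");
  }
  return "OTHER: not ready for reads or writes";
}

// Trimmed-down sample documents; real responses contain more fields.
var onM0 = { setName: "replset0", ismaster: true, secondary: false, primary: "172.18.0.3:27017" };
var onM1 = { setName: "replset0", ismaster: false, secondary: true, primary: "172.18.0.3:27017" };

console.log(describeRole(onM0));
console.log(describeRole(onM1));
```

In the mongo shell the equivalent call would be `print(describeRole(db.isMaster()))`, after pasting the function definition.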

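Verifying failover

In replica set mode, if the Primary becomes unavailable, the set elects a new Primary and continues to serve reads and writes. Let's verify what happens when m0 is unavailable:

1. Open a terminal and run `docker stop m0` to stop the m0 container.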

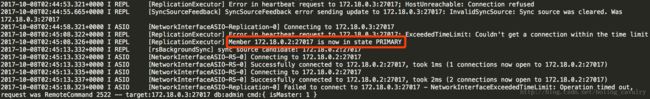

2. Run `docker logs -f m1` to view m1's standard output, as shown in the figure:

   The log shows that m2 is now the Primary.

3. Enter the m2 container, run `mongo` to open the MongoDB shell, and insert a document:

       replset0:SECONDARY> db.student.insert({name:"John", age:10})
       WriteResult({ "nInserted" : 1 })

   As shown above, the insert succeeds.

4. Enter the m1 container and run `mongo` to open the MongoDB shell. A query shows that the new document has been replicated, but inserting on m1 still fails:

       replset0:SECONDARY> db.student.find()
       { "_id" : ObjectId("59d98fde9740291fac4998fb"), "name" : "Tom", "age" : 16 }
       { "_id" : ObjectId("59d98fe69740291fac4998fc"), "name" : "Jerry", "age" : 15 }
       { "_id" : ObjectId("59d98fed9740291fac4998fd"), "name" : "Mary", "age" : 9 }
       { "_id" : ObjectId("59d991802c44ff654953dc24"), "name" : "John", "age" : 10 }
       replset0:SECONDARY> db.student.insert({name:"Mike", age:18})
       WriteResult({ "writeError" : { "code" : 10107, "errmsg" : "not master" } })

   The insert fails, which shows that m1 is still a secondary.

5. Run `rs.status()` in the MongoDB shell on m1 or m2. The output looks like this:

       replset0:SECONDARY> rs.status()
       {
           "set" : "replset0",
           "date" : ISODate("2017-10-08T08:26:11.219Z"),
           "myState" : 2,
           "term" : NumberLong(2),
           "syncingTo" : "172.18.0.2:27017",
           "heartbeatIntervalMillis" : NumberLong(2000),
           "optimes" : {
               "lastCommittedOpTime" : {
                   "ts" : Timestamp(1507451161, 1),
                   "t" : NumberLong(2)
               },
               "appliedOpTime" : {
                   "ts" : Timestamp(1507451161, 1),
                   "t" : NumberLong(2)
               },
               "durableOpTime" : {
                   "ts" : Timestamp(1507451161, 1),
                   "t" : NumberLong(2)
               }
           },
           "members" : [
               {
                   "_id" : 0,
                   "name" : "172.18.0.3:27017",
                   "health" : 0,
                   "state" : 8,
                   "stateStr" : "(not reachable/healthy)",
                   "uptime" : 0,
                   "optime" : {
                       "ts" : Timestamp(0, 0),
                       "t" : NumberLong(-1)
                   },
                   "optimeDurable" : {
                       "ts" : Timestamp(0, 0),
                       "t" : NumberLong(-1)
                   },
                   "optimeDate" : ISODate("1970-01-01T00:00:00Z"),
                   "optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),
                   "lastHeartbeat" : ISODate("2017-10-08T08:26:07.898Z"),
                   "lastHeartbeatRecv" : ISODate("2017-10-08T02:44:51.730Z"),
                   "pingMs" : NumberLong(0),
                   "lastHeartbeatMessage" : "No route to host",
                   "configVersion" : -1
               },
               {
                   "_id" : 1,
                   "name" : "172.18.0.4:27017",
                   "health" : 1,
                   "state" : 2,
                   "stateStr" : "SECONDARY",
                   "uptime" : 21658,
                   "optime" : {
                       "ts" : Timestamp(1507451161, 1),
                       "t" : NumberLong(2)
                   },
                   "optimeDate" : ISODate("2017-10-08T08:26:01Z"),
                   "syncingTo" : "172.18.0.2:27017",
                   "configVersion" : 1,
                   "self" : true
               },
               {
                   "_id" : 2,
                   "name" : "172.18.0.2:27017",
                   "health" : 1,
                   "state" : 1,
                   "stateStr" : "PRIMARY",
                   "uptime" : 21251,
                   "optime" : {
                       "ts" : Timestamp(1507451161, 1),
                       "t" : NumberLong(2)
                   },
                   "optimeDurable" : {
                       "ts" : Timestamp(1507451161, 1),
                       "t" : NumberLong(2)
                   },
                   "optimeDate" : ISODate("2017-10-08T08:26:01Z"),
                   "optimeDurableDate" : ISODate("2017-10-08T08:26:01Z"),
                   "lastHeartbeat" : ISODate("2017-10-08T08:26:10.063Z"),
                   "lastHeartbeatRecv" : ISODate("2017-10-08T08:26:09.725Z"),
                   "pingMs" : NumberLong(0),
                   "electionTime" : Timestamp(1507430703, 1),
                   "electionDate" : ISODate("2017-10-08T02:45:03Z"),
                   "configVersion" : 1
               }
           ],
           "ok" : 1
       }

   As shown above, the configuration entry for m0 is still present, but its stateStr has changed to (not reachable/healthy), and m2 has become the Primary.
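A long `rs.status()` document can be reduced to the two questions that matter after a failure: which member is the Primary, and is a majority of members still healthy? A new Primary can only be elected while a majority of voting members can reach each other, which is why a three-member set tolerates the loss of one member. The sketch below assumes that every member votes, as in this set. `summarizeStatus` is a hypothetical helper, and the sample object keeps only the `name`, `health`, and `stateStr` fields from the output above.

```javascript
// Hypothetical helper: summarize the document returned by rs.status().
// Assumes every member is a voting member.
function summarizeStatus(status) {
  var primary = null;
  var healthy = 0;
  for (var i = 0; i < status.members.length; i++) {
    var member = status.members[i];
    if (member.health === 1) {
      healthy++;
    }
    if (member.stateStr === "PRIMARY") {
      primary = member.name;
    }
  }
  // A majority is more than half of the members: 2 of 3, 3 of 5, ...
  var majority = Math.floor(status.members.length / 2) + 1;
  return {
    primary: primary,
    healthy: healthy,
    majority: majority,
    hasMajority: healthy >= majority
  };
}

// The members array from the output above, reduced to three fields.
var afterFailover = {
  set: "replset0",
  members: [
    { name: "172.18.0.3:27017", health: 0, stateStr: "(not reachable/healthy)" },
    { name: "172.18.0.4:27017", health: 1, stateStr: "SECONDARY" },
    { name: "172.18.0.2:27017", health: 1, stateStr: "PRIMARY" }
  ]
};

console.log(JSON.stringify(summarizeStatus(afterFailover)));
// prints {"primary":"172.18.0.2:27017","healthy":2,"majority":2,"hasMajority":true}
```

Note that `health` is reported from the point of view of the node on which `rs.status()` was run.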

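This completes the hands-on MongoDB replica set exercise under Docker. One small question remains: how should an application that uses the MongoDB service connect to it? Should it connect directly to m0 and be switched to m2 by hand when something goes wrong, or is there a way to switch automatically? We will work through that together in a later article.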
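As a pointer in the meantime, and not necessarily the approach the later article takes: MongoDB drivers accept a connection string that lists several members together with a `replicaSet` option, and they then locate the current Primary themselves. The sketch below only assembles such a string. The helper name `replicaSetUri` is hypothetical, the database name school comes from the replication test, and it assumes that the application runs somewhere from which the container IPs are reachable.

```javascript
// Hypothetical helper: assemble a MongoDB connection string that lists all
// members of a replica set and names the set.
function replicaSetUri(hosts, database, setName) {
  return "mongodb://" + hosts.join(",") + "/" + database +
    "?replicaSet=" + encodeURIComponent(setName);
}

var uri = replicaSetUri(
  ["172.18.0.3:27017", "172.18.0.4:27017", "172.18.0.2:27017"],
  "school",
  "replset0"
);

console.log(uri);
// prints mongodb://172.18.0.3:27017,172.18.0.4:27017,172.18.0.2:27017/school?replicaSet=replset0
```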