Spark2.x学习笔记:8、 Spark应用程打包与提交

8、 Spark应用程打包与提交

提示:基于Windows平台+Intellij IDEA的Spark开发环境,仅用于编写程序和代码以本地模式调试。

Windows+Intellij IDEA下的Spark程序不能直接连接到Linux集群。如果需要将Spark程序在Linux集群中运行,需要将Spark程序打包,并提交到集中运行,这就是本章的主要内容。

8.1 应用程序打包

(1)Maven打包

进入Maven项目根目录(比如前一章创建的simpleSpark项目,进入simpleSpark项目根目录可以看到1个pom.xml文件),执行mvn package命令进行自动化打包。

Maven根据pom文件里packaging的配置,决定是生成jar文件还是war文件,并放到target目录下。

这时Maven项目根目录下的target子目录中即可看到生成的对应Jar包

备注:此命令需要在项目的根目录(也就是pom.xml文件所在的目录)下运行,Maven才知道打包哪个项目。

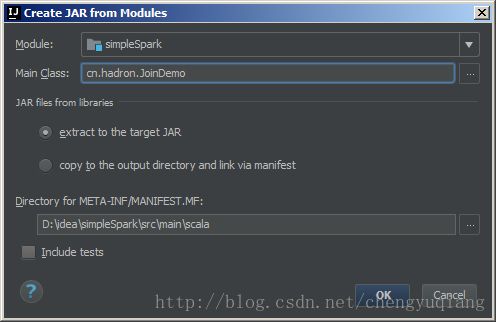

(2)IntelliJ IDEA打包

主要是图形界面操作。

- 菜单操作:File主菜单 –> Project Structure –> Artifacts –> Jar –>From modules whit dependencies…

- 菜单操作:Build主菜单 –>Build Artifacts –>快捷菜单 -> simpleSpark:jar -> build/rebuild

8.2 应用程序提交

从Spark1.0.0开始,Spark提供了一个容易上手的应用程序部署工具bin/spark-submit,可以完成Spark应用程序在local、Standalone、YARN、Mesos上的快捷部署。

(1)将jar包上传到集群

首先需要将我们开发测试好的程序打成Jar包,然后上传到集群的客户端。

(2)查看jar包结构

有时需要查看Jar包结构,可以通过jar命令:

jar tf simpleSpark-1.0-SNAPSHOT.jar

(2)spark-submit提交

执行下面命令:

spark-submit –class cn.hadron.JoinDemo –master local /root/simpleSpark-1.0-SNAPSHOT.jar

参数说明:

--master local表示本地运行--class cn.hadron.JoinDemo表示需要运行的主类/root/simpleSpark-1.0-SNAPSHOT.jar表示Jar所在位置

[root@node1 ~]# spark-submit - -class cn.hadron.JoinDemo - -master local /root/simpleSpark-1.0-SNAPSHOT.jar

17/09/16 10:23:23 INFO spark.SparkContext: Running Spark version 2.2.0

17/09/16 10:23:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/09/16 10:23:24 INFO spark.SparkContext: Submitted application: JoinDemo

17/09/16 10:23:24 INFO spark.SecurityManager: Changing view acls to: root

17/09/16 10:23:24 INFO spark.SecurityManager: Changing modify acls to: root

17/09/16 10:23:24 INFO spark.SecurityManager: Changing view acls groups to:

17/09/16 10:23:24 INFO spark.SecurityManager: Changing modify acls groups to:

17/09/16 10:23:24 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

17/09/16 10:23:25 INFO util.Utils: Successfully started service 'sparkDriver' on port 35808.

17/09/16 10:23:25 INFO spark.SparkEnv: Registering MapOutputTracker

17/09/16 10:23:25 INFO spark.SparkEnv: Registering BlockManagerMaster

17/09/16 10:23:25 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

17/09/16 10:23:25 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

17/09/16 10:23:25 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-7351d3bf-6741-4259-bc60-88ba3b5c5e6d

17/09/16 10:23:25 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

17/09/16 10:23:26 INFO spark.SparkEnv: Registering OutputCommitCoordinator

17/09/16 10:23:26 INFO util.log: Logging initialized @7009ms

17/09/16 10:23:26 INFO server.Server: jetty-9.3.z-SNAPSHOT

17/09/16 10:23:26 INFO server.Server: Started @7253ms

17/09/16 10:23:26 INFO server.AbstractConnector: Started ServerConnector@8ab78bc{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

17/09/16 10:23:26 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21a5fd96{/jobs,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6d511b5f{/jobs/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40f33492{/jobs/job,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6bc28a83{/jobs/job/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@13579834{/stages,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bd73d1a{/stages/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2555fff0{/stages/stage,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@702ed190{/stages/stage/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7c18432b{/stages/pool,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70e29e14{/stages/pool/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a4bef8{/storage,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2449cff7{/storage/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@62da83ed{/storage/rdd,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@37d80fe7{/storage/rdd/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@e3cee7b{/environment,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6b9267b{/environment/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@29ad44e3{/executors,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5af9926a{/executors/json,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@fac80{/executors/threadDump,null,AVAILABLE,@Spark}

17/09/16 10:23:26 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@649f2009{/executors/threadDump/json,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@69adf72c{/static,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@48e64352{/,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4362d7df{/api,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3e587920{/jobs/job/kill,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@24f43aa3{/stages/stage/kill,null,AVAILABLE,@Spark}

17/09/16 10:23:27 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.80.131:4040

17/09/16 10:23:27 INFO spark.SparkContext: Added JAR file:/root/simpleSpark-1.0-SNAPSHOT.jar at spark://192.168.80.131:35808/jars/simpleSpark-1.0-SNAPSHOT.jar with timestamp 1505571807177

17/09/16 10:23:27 INFO executor.Executor: Starting executor ID driver on host localhost

17/09/16 10:23:27 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 41590.

17/09/16 10:23:27 INFO netty.NettyBlockTransferService: Server created on 192.168.80.131:41590

17/09/16 10:23:27 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

17/09/16 10:23:27 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.80.131, 41590, None)

17/09/16 10:23:27 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.80.131:41590 with 413.9 MB RAM, BlockManagerId(driver, 192.168.80.131, 41590, None)

17/09/16 10:23:27 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.80.131, 41590, None)

17/09/16 10:23:27 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.80.131, 41590, None)

17/09/16 10:23:28 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5eccd3b9{/metrics/json,null,AVAILABLE,@Spark}

17/09/16 10:23:29 INFO spark.SparkContext: Starting job: take at JoinDemo.scala:19

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Registering RDD 0 (parallelize at JoinDemo.scala:12)

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Registering RDD 1 (parallelize at JoinDemo.scala:15)

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Got job 0 (take at JoinDemo.scala:19) with 1 output partitions

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Final stage: ResultStage 2 (take at JoinDemo.scala:19)

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Parents of final stage: List(ShuffleMapStage 0, ShuffleMapStage 1)

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Missing parents: List(ShuffleMapStage 0, ShuffleMapStage 1)

17/09/16 10:23:29 INFO scheduler.DAGScheduler: Submitting ShuffleMapStage 0 (ParallelCollectionRDD[0] at parallelize at JoinDemo.scala:12), which has no missing parents

17/09/16 10:23:30 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.0 KB, free 413.9 MB)

17/09/16 10:23:30 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1308.0 B, free 413.9 MB)

17/09/16 10:23:30 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.80.131:41590 (size: 1308.0 B, free: 413.9 MB)

17/09/16 10:23:30 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:30 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 0 (ParallelCollectionRDD[0] at parallelize at JoinDemo.scala:12) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:30 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

17/09/16 10:23:30 INFO scheduler.DAGScheduler: Submitting ShuffleMapStage 1 (ParallelCollectionRDD[1] at parallelize at JoinDemo.scala:15), which has no missing parents

17/09/16 10:23:30 INFO memory.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 2.0 KB, free 413.9 MB)

17/09/16 10:23:30 INFO memory.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 1305.0 B, free 413.9 MB)

17/09/16 10:23:30 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.80.131:41590 (size: 1305.0 B, free: 413.9 MB)

17/09/16 10:23:30 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:30 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 1 (ParallelCollectionRDD[1] at parallelize at JoinDemo.scala:15) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:30 INFO scheduler.TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

17/09/16 10:23:30 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 4950 bytes)

17/09/16 10:23:30 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

17/09/16 10:23:30 INFO executor.Executor: Fetching spark://192.168.80.131:35808/jars/simpleSpark-1.0-SNAPSHOT.jar with timestamp 1505571807177

17/09/16 10:23:31 INFO client.TransportClientFactory: Successfully created connection to /192.168.80.131:35808 after 107 ms (0 ms spent in bootstraps)

17/09/16 10:23:31 INFO util.Utils: Fetching spark://192.168.80.131:35808/jars/simpleSpark-1.0-SNAPSHOT.jar to /tmp/spark-1fe804d0-f8f4-459a-a2fc-cd128f4d3904/userFiles-db139484-b759-4251-8a82-c4cb9d310939/fetchFileTemp1136713366369070572.tmp

17/09/16 10:23:32 INFO executor.Executor: Adding file:/tmp/spark-1fe804d0-f8f4-459a-a2fc-cd128f4d3904/userFiles-db139484-b759-4251-8a82-c4cb9d310939/simpleSpark-1.0-SNAPSHOT.jar to class loader

17/09/16 10:23:32 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 983 bytes result sent to driver

17/09/16 10:23:32 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, localhost, executor driver, partition 0, PROCESS_LOCAL, 4906 bytes)

17/09/16 10:23:32 INFO executor.Executor: Running task 0.0 in stage 1.0 (TID 1)

17/09/16 10:23:32 INFO executor.Executor: Finished task 0.0 in stage 1.0 (TID 1). 897 bytes result sent to driver

17/09/16 10:23:32 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1901 ms on localhost (executor driver) (1/1)

17/09/16 10:23:32 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/09/16 10:23:32 INFO scheduler.DAGScheduler: ShuffleMapStage 0 (parallelize at JoinDemo.scala:12) finished in 2.101 s

17/09/16 10:23:32 INFO scheduler.DAGScheduler: looking for newly runnable stages

17/09/16 10:23:32 INFO scheduler.DAGScheduler: running: Set(ShuffleMapStage 1)

17/09/16 10:23:32 INFO scheduler.DAGScheduler: waiting: Set(ResultStage 2)

17/09/16 10:23:32 INFO scheduler.DAGScheduler: failed: Set()

17/09/16 10:23:32 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 199 ms on localhost (executor driver) (1/1)

17/09/16 10:23:32 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

17/09/16 10:23:32 INFO scheduler.DAGScheduler: ShuffleMapStage 1 (parallelize at JoinDemo.scala:15) finished in 1.940 s

17/09/16 10:23:32 INFO scheduler.DAGScheduler: looking for newly runnable stages

17/09/16 10:23:32 INFO scheduler.DAGScheduler: running: Set()

17/09/16 10:23:32 INFO scheduler.DAGScheduler: waiting: Set(ResultStage 2)

17/09/16 10:23:32 INFO scheduler.DAGScheduler: failed: Set()

17/09/16 10:23:32 INFO scheduler.DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[3] at cogroup at JoinDemo.scala:18), which has no missing parents

17/09/16 10:23:32 INFO memory.MemoryStore: Block broadcast_2 stored as values in memory (estimated size 3.0 KB, free 413.9 MB)

17/09/16 10:23:32 INFO memory.MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 1822.0 B, free 413.9 MB)

17/09/16 10:23:32 INFO storage.BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.80.131:41590 (size: 1822.0 B, free: 413.9 MB)

17/09/16 10:23:32 INFO spark.SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:32 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 2 (MapPartitionsRDD[3] at cogroup at JoinDemo.scala:18) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:32 INFO scheduler.TaskSchedulerImpl: Adding task set 2.0 with 1 tasks

17/09/16 10:23:32 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 2.0 (TID 2, localhost, executor driver, partition 0, PROCESS_LOCAL, 4684 bytes)

17/09/16 10:23:32 INFO executor.Executor: Running task 0.0 in stage 2.0 (TID 2)

17/09/16 10:23:32 INFO storage.ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

17/09/16 10:23:32 INFO storage.ShuffleBlockFetcherIterator: Started 0 remote fetches in 18 ms

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Started 0 remote fetches in 3 ms

17/09/16 10:23:33 INFO executor.Executor: Finished task 0.0 in stage 2.0 (TID 2). 2166 bytes result sent to driver

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 2.0 (TID 2) in 298 ms on localhost (executor driver) (1/1)

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

17/09/16 10:23:33 INFO scheduler.DAGScheduler: ResultStage 2 (take at JoinDemo.scala:19) finished in 0.308 s

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Job 0 finished: take at JoinDemo.scala:19, took 3.600765 s

(index.jsp,(CompactBuffer(192.168.1.100, 192.168.1.102),CompactBuffer(Home)))

(about.jsp,(CompactBuffer(192.168.1.101),CompactBuffer(About)))

--------------

17/09/16 10:23:33 INFO spark.SparkContext: Starting job: take at JoinDemo.scala:22

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Registering RDD 0 (parallelize at JoinDemo.scala:12)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Registering RDD 1 (parallelize at JoinDemo.scala:15)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Got job 1 (take at JoinDemo.scala:22) with 1 output partitions

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Final stage: ResultStage 5 (take at JoinDemo.scala:22)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Parents of final stage: List(ShuffleMapStage 3, ShuffleMapStage 4)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Missing parents: List(ShuffleMapStage 3, ShuffleMapStage 4)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting ShuffleMapStage 3 (ParallelCollectionRDD[0] at parallelize at JoinDemo.scala:12), which has no missing parents

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_3 stored as values in memory (estimated size 2.0 KB, free 413.9 MB)

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 1309.0 B, free 413.9 MB)

17/09/16 10:23:33 INFO storage.BlockManagerInfo: Added broadcast_3_piece0 in memory on 192.168.80.131:41590 (size: 1309.0 B, free: 413.9 MB)

17/09/16 10:23:33 INFO spark.SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 3 (ParallelCollectionRDD[0] at parallelize at JoinDemo.scala:12) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Adding task set 3.0 with 1 tasks

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting ShuffleMapStage 4 (ParallelCollectionRDD[1] at parallelize at JoinDemo.scala:15), which has no missing parents

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_4 stored as values in memory (estimated size 2.0 KB, free 413.9 MB)

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 3.0 (TID 3, localhost, executor driver, partition 0, PROCESS_LOCAL, 4950 bytes)

17/09/16 10:23:33 INFO executor.Executor: Running task 0.0 in stage 3.0 (TID 3)

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 1305.0 B, free 413.9 MB)

17/09/16 10:23:33 INFO storage.BlockManagerInfo: Added broadcast_4_piece0 in memory on 192.168.80.131:41590 (size: 1305.0 B, free: 413.9 MB)

17/09/16 10:23:33 INFO executor.Executor: Finished task 0.0 in stage 3.0 (TID 3). 897 bytes result sent to driver

17/09/16 10:23:33 INFO spark.SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 4 (ParallelCollectionRDD[1] at parallelize at JoinDemo.scala:15) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Adding task set 4.0 with 1 tasks

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 4.0 (TID 4, localhost, executor driver, partition 0, PROCESS_LOCAL, 4906 bytes)

17/09/16 10:23:33 INFO executor.Executor: Running task 0.0 in stage 4.0 (TID 4)

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 3.0 (TID 3) in 160 ms on localhost (executor driver) (1/1)

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

17/09/16 10:23:33 INFO scheduler.DAGScheduler: ShuffleMapStage 3 (parallelize at JoinDemo.scala:12) finished in 0.182 s

17/09/16 10:23:33 INFO scheduler.DAGScheduler: looking for newly runnable stages

17/09/16 10:23:33 INFO scheduler.DAGScheduler: running: Set(ShuffleMapStage 4)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: waiting: Set(ResultStage 5)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: failed: Set()

17/09/16 10:23:33 INFO executor.Executor: Finished task 0.0 in stage 4.0 (TID 4). 897 bytes result sent to driver

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 4.0 (TID 4) in 39 ms on localhost (executor driver) (1/1)

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 4.0, whose tasks have all completed, from pool

17/09/16 10:23:33 INFO scheduler.DAGScheduler: ShuffleMapStage 4 (parallelize at JoinDemo.scala:15) finished in 0.046 s

17/09/16 10:23:33 INFO scheduler.DAGScheduler: looking for newly runnable stages

17/09/16 10:23:33 INFO scheduler.DAGScheduler: running: Set()

17/09/16 10:23:33 INFO scheduler.DAGScheduler: waiting: Set(ResultStage 5)

17/09/16 10:23:33 INFO scheduler.DAGScheduler: failed: Set()

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting ResultStage 5 (MapPartitionsRDD[6] at join at JoinDemo.scala:21), which has no missing parents

17/09/16 10:23:33 INFO storage.BlockManagerInfo: Removed broadcast_2_piece0 on 192.168.80.131:41590 in memory (size: 1822.0 B, free: 413.9 MB)

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_5 stored as values in memory (estimated size 3.2 KB, free 413.9 MB)

17/09/16 10:23:33 INFO memory.MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 1902.0 B, free 413.9 MB)

17/09/16 10:23:33 INFO storage.BlockManagerInfo: Added broadcast_5_piece0 in memory on 192.168.80.131:41590 (size: 1902.0 B, free: 413.9 MB)

17/09/16 10:23:33 INFO spark.SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1006

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 5 (MapPartitionsRDD[6] at join at JoinDemo.scala:21) (first 15 tasks are for partitions Vector(0))

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Adding task set 5.0 with 1 tasks

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 5.0 (TID 5, localhost, executor driver, partition 0, PROCESS_LOCAL, 4684 bytes)

17/09/16 10:23:33 INFO executor.Executor: Running task 0.0 in stage 5.0 (TID 5)

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

17/09/16 10:23:33 INFO storage.ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

17/09/16 10:23:33 INFO storage.BlockManagerInfo: Removed broadcast_3_piece0 on 192.168.80.131:41590 in memory (size: 1309.0 B, free: 413.9 MB)

17/09/16 10:23:33 INFO executor.Executor: Finished task 0.0 in stage 5.0 (TID 5). 1283 bytes result sent to driver

17/09/16 10:23:33 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 5.0 (TID 5) in 98 ms on localhost (executor driver) (1/1)

17/09/16 10:23:33 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool

17/09/16 10:23:33 INFO scheduler.DAGScheduler: ResultStage 5 (take at JoinDemo.scala:22) finished in 0.102 s

17/09/16 10:23:33 INFO scheduler.DAGScheduler: Job 1 finished: take at JoinDemo.scala:22, took 0.503311 s

(index.jsp,(192.168.1.100,Home))

(index.jsp,(192.168.1.102,Home))

(about.jsp,(192.168.1.101,About))

17/09/16 10:23:33 INFO spark.SparkContext: Invoking stop() from shutdown hook

17/09/16 10:23:33 INFO server.AbstractConnector: Stopped Spark@8ab78bc{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

17/09/16 10:23:33 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.80.131:4040

17/09/16 10:23:33 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/09/16 10:23:34 INFO memory.MemoryStore: MemoryStore cleared

17/09/16 10:23:34 INFO storage.BlockManager: BlockManager stopped

17/09/16 10:23:34 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

17/09/16 10:23:34 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/09/16 10:23:34 INFO spark.SparkContext: Successfully stopped SparkContext

17/09/16 10:23:34 INFO util.ShutdownHookManager: Shutdown hook called

17/09/16 10:23:34 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-1fe804d0-f8f4-459a-a2fc-cd128f4d3904

[root@node1 ~]#

8.3 修改Spark日志级别

(1)永久修改

从上面Spark日志输出可以看到大量普通INFO级别的日志,淹没了我们需要运行输出结果。可以通过修改Spark配置文件来Spark日志级别(永久的)。

执行下面命令修改Spark日志级别:

sed -i ‘s/log4j.rootCategory=INFO/log4j.rootCategory=WARN/’ log4j.properties

[root@node1 ~]# cd /opt/spark-2.2.0/conf/

[root@node1 conf]# sed -i 's/log4j.rootCategory=INFO/log4j.rootCategory=WARN/' log4j.properties(2)临时修改

在spark-shell中可以通过下面两句

import org.apache.log4j.{Level.Logger}

Logger.geLogger("org.apache.spark").setLevel(Level.WARN)

8.4 提交到Spark on YARN

执行下面命令:

spark-submit –master yarn –deploy-mode client –class cn.hadron.JoinDemo /root/simpleSpark-1.0-SNAPSHOT.jar

[root@node1 ~]# spark-submit --master yarn --deploy-mode client --class cn.hadron.JoinDemo /root/simpleSpark-1.0-SNAPSHOT.jar

17/09/17 06:04:46 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

(index.jsp,(CompactBuffer(192.168.1.100, 192.168.1.102),CompactBuffer(Home)))

(about.jsp,(CompactBuffer(192.168.1.101),CompactBuffer(About)))

--------------

(index.jsp,(192.168.1.100,Home))

(index.jsp,(192.168.1.102,Home))

(about.jsp,(192.168.1.101,About))

[root@node1 ~]#当spark-submit命令太长时,可以通过\进行命令换行。主要参数之间保留空格,这里在\前加了一个空格。

[root@node1 ~]# spark-submit \

> --master yarn \

> --deploy-mode client \

> --class cn.hadron.JoinDemo \

> /root/simpleSpark-1.0-SNAPSHOT.jar

17/09/17 06:07:55 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

(index.jsp,(CompactBuffer(192.168.1.100, 192.168.1.102),CompactBuffer(Home)))

(about.jsp,(CompactBuffer(192.168.1.101),CompactBuffer(About)))

--------------

(index.jsp,(192.168.1.100,Home))

(index.jsp,(192.168.1.102,Home))

(about.jsp,(192.168.1.101,About))

[root@node1 ~]#

8.5 spark-submit

[root@node1 ~]# spark-submit --help

Usage: spark-submit [options] file> [app arguments]

Usage: spark-submit --kill [submission ID] --master [spark://...]

Usage: spark-submit --status [submission ID] --master [spark://...]

Usage: spark-submit run-example [options] example-class [example args]

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or

on one of the worker machines inside the cluster ("cluster")

(Default: client).

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver

and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include

on the driver and executor classpaths. Will search the local

maven repo, then maven central and any additional remote

repositories given by --repositories. The format for the

coordinates should be groupId:artifactId:version.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while

resolving the dependencies provided in --packages to avoid

dependency conflicts.

--repositories Comma-separated list of additional remote repositories to

search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place

on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working

directory of each executor. File paths of these files

in executors can be accessed via SparkFiles.get(fileName).

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not

specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that

jars added with --jars are automatically included in the

classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application.

This argument does not work with --principal / --keytab.

--help, -h Show this help message and exit.

--verbose, -v Print additional debug output.

--version, Print the version of current Spark.

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

Spark standalone or Mesos with cluster deploy mode only:

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

Spark standalone and YARN only:

--executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode,

or all available cores on the worker in standalone mode)

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode

(Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2).

If dynamic allocation is enabled, the initial number of

executors will be at least NUM.

--archives ARCHIVES Comma separated list of archives to be extracted into the

working directory of each executor.

--principal PRINCIPAL Principal to be used to login to KDC, while running on

secure HDFS.

--keytab KEYTAB The full path to the file that contains the keytab for the

principal specified above. This keytab will be copied to

the node running the Application Master via the Secure

Distributed Cache, for renewing the login tickets and the

delegation tokens periodically. 参数说明:

| 参数 | 可选值 | 说明 |

|---|---|---|

--master MASTER_URL` |

spark://host:port, mesos://host:port, yarn, or local | |

--deploy-mode DEPLOY_MODE |

cluster,client | Driver程序运行的地方,client或者cluster |

--class CLASS_NAME |

应用程序主类 | |

--name NAME |

应用程序名称 | |

--jars JARS |

Driver依赖的第三方jar包 | |

--driver-memory MEM |

Driver程序使用内存大小 | |

--executor-memory MEM |

默认1G | executor内存大小 |

--executor-cores NUM |

默认为1 | 每个executor使用的内核数,仅限于Spark on Yarn模式 |

--num-executors NUM |

默认是2个 | 启动的executor数量,仅限于Spark on Yarn模式 |

[root@node1 ~]# spark-submit \

> --master yarn \

> --deploy-mode cluster \

> --class cn.hadron.JoinDemo \

> --name sparkJoin \

> --driver-memory 2g \

> --executor-memory 2g \

> --executor-cores 2 \

> --num-executors 2 \

> /root/simpleSpark-1.0-SNAPSHOT.jar

17/09/17 09:13:15 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/09/17 09:13:21 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Exception in thread "main" org.apache.spark.SparkException: Application application_1505642385307_0002 finished with failed status

at org.apache.spark.deploy.yarn.Client.run(Client.scala:1104)

at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1150)

at org.apache.spark.deploy.yarn.Client.main(Client.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:755)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

[root@node1 ~]#