TensorFlow: Building an RNN (Part 1)

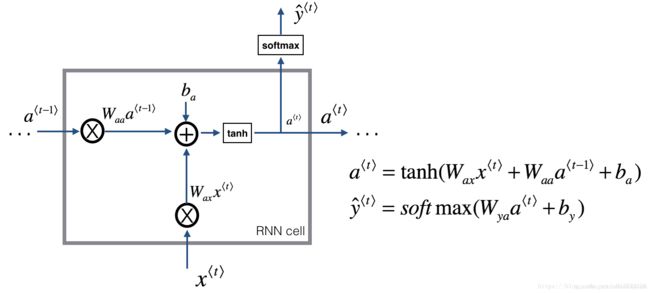

RNN schematic:

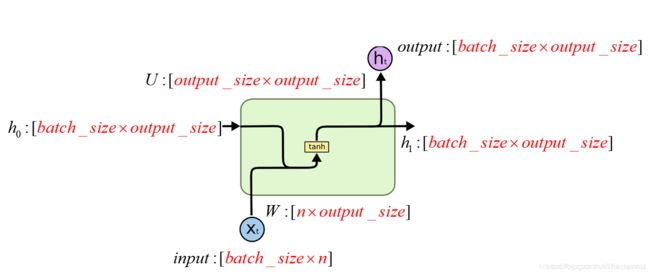

RNN weight layout:

Building a basic RNN in TensorFlow

class tf.contrib.rnn.BasicRNNCell(num_units, activation=None, reuse=None, name=None)

Arguments:

num_units: int, the number of units in the RNN cell.

activation: Nonlinearity to use. Default: tanh.

reuse: (optional) Python boolean describing whether to reuse variables in an existing scope. If not True, and the existing scope already has the given variables, an error is raised.

name: String, the name of the layer. Layers with the same name will share weights, but to avoid mistakes we require reuse=True in such cases.

Returns:

A basic RNN cell (an instantiated cell) with num_units hidden units.

Common attributes:

state_size: size(s) of state(s) used by this cell; equal to the number of hidden units (num_units)

output_size: size of outputs produced by this cell

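Note: for this cell, state_size always equals output_size.

Common methods:

call(inputs, state): returns the pair (output, new_state); for this cell the two are identical hidden-state values

zero_state(batch_size, dtype): returns an all-zero tensor of shape [batch_size, state_size]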
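To make the attributes and methods above concrete, here is a minimal NumPy sketch of a cell with the same interface. The class name TinyRNNCell, the input_dim constructor argument, the random weight initialization, and the seed are all assumptions made for illustration; this is not TensorFlow's implementation.

```python
import numpy as np


class TinyRNNCell:
    """Hypothetical NumPy stand-in that mimics the BasicRNNCell interface."""

    def __init__(self, num_units, input_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.num_units = num_units
        # One kernel applied to the concatenated [inputs, state], plus a zero bias.
        self.kernel = rng.normal(scale=0.1, size=(input_dim + num_units, num_units))
        self.bias = np.zeros(num_units)

    @property
    def state_size(self):
        return self.num_units

    @property
    def output_size(self):
        # For this cell the output is the hidden state itself.
        return self.num_units

    def zero_state(self, batch_size, dtype=np.float32):
        return np.zeros((batch_size, self.state_size), dtype=dtype)

    def call(self, inputs, state):
        stacked = np.concatenate([inputs, state], axis=1)
        new_state = np.tanh(stacked @ self.kernel + self.bias)
        # The same array is returned twice: once as output, once as new state.
        return new_state, new_state


cell = TinyRNNCell(num_units=10, input_dim=150)
h0 = cell.zero_state(batch_size=32)
inputs = np.ones((32, 150), dtype=np.float32)
output, h1 = cell.call(inputs, h0)
print(output.shape, h1.shape, output is h1)
```

Returning the same array twice mirrors the statement that call yields two identical hidden-state values, and it is also why state_size and output_size coincide for this cell.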

Code example

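import tensorflow as tf

output_size = 10  # number of hidden units, which is also the size of the cell's output

batch_size = 32  # number of samples in a batch

cell = tf.nn.rnn_cell.BasicRNNCell(num_units=output_size)  # a basic RNN cell with num_units hidden units

input = tf.placeholder(dtype=tf.float32, shape=[batch_size, 150])  # input of shape [batch_size, 150]

h0 = cell.zero_state(batch_size=batch_size, dtype=tf.float32)  # initial state h0 of shape [batch_size, output_size]

output, h1 = cell.call(input, h0)  # advance one step along the time sequence; on later TF 1.x releases invoke cell(input, h0) instead so that the cell's variables are built first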

From the code above we can infer the shape of each parameter: input: [32,150]; W: [150,10]; h0: [32,10]; U: [10,10]; B: [10]. The final output therefore has shape [32,10].
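The shape bookkeeping above can be checked with a few lines of NumPy. This is a sketch that assumes the usual update h1 = tanh(input·W + h0·U + B); the random values are placeholders, and only the shapes matter.

```python
import numpy as np

rng = np.random.default_rng(0)
batch_size, input_dim, output_size = 32, 150, 10

x = rng.normal(size=(batch_size, input_dim))     # input: [32, 150]
W = rng.normal(size=(input_dim, output_size))    # W: [150, 10]
h0 = np.zeros((batch_size, output_size))         # h0: [32, 10]
U = rng.normal(size=(output_size, output_size))  # U: [10, 10]
B = np.zeros(output_size)                        # B: [10], broadcast over the batch

h1 = np.tanh(x @ W + h0 @ U + B)
print(h1.shape)  # (32, 10)
```

Both matrix products yield a [32, 10] array, so the sum and the element-wise tanh keep that shape.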

import tensorflow as tf

import numpy as np

output_size = 4  # number of hidden units

batch_size = 3

dim = 5  # size of each input vector

cell = tf.nn.rnn_cell.BasicRNNCell(num_units=output_size)

input = tf.placeholder(dtype=tf.float32, shape=[batch_size, dim])

h0 = cell.zero_state(batch_size=batch_size, dtype=tf.float32)

output, h1 = cell.call(input, h0)

x = np.array([[1, 2, 1, 1, 1], [2, 0, 0, 1, 1], [2, 1, 0, 1, 0]])  # one batch of inputs, shape = (3, 5)

with tf.variable_scope(tf.get_variable_scope(), reuse=True):  # reuse=True returns the existing "kernel" variable that call() created rather than making a new one

    weights = tf.get_variable(

        "kernel", [dim + output_size, output_size],

        dtype=tf.float32,

        initializer=None)

with tf.Session() as sess:

    sess.run(tf.global_variables_initializer())

    a, b, w = sess.run([output, h1, weights], feed_dict={input: x})

    print('output:')

    print(a)

    print('h1:')

    print(b)

    print("weights:")

    print(w)  # shape = (9, 4)

state = np.zeros(shape=(3, 4))  # the initial state h0, shape = (3, 4)

all_input = np.concatenate((x, state), axis=1)  # x and h0 side by side, shape = (3, 9)

result = np.tanh(np.matmul(all_input, w))  # the bias is initialized to zeros, so it is left out here

print('result:')

print(result)  # should match output and h1 above, up to floating-point precision
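The hand computation above multiplies the concatenated [x, state] by a single (9, 4) kernel. A short NumPy sketch shows why this equals the two-matrix form with W and U: the first dim rows of the kernel act as W and the remaining output_size rows act as U. The kernel below is random stand-in data, not the weights TensorFlow would produce, and a non-zero state is assumed so that the U term actually contributes.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, output_size = 5, 4

x = np.array([[1, 2, 1, 1, 1], [2, 0, 0, 1, 1], [2, 1, 0, 1, 0]], dtype=np.float64)
state = rng.normal(size=(3, output_size))                   # stand-in for a previous hidden state
kernel = rng.normal(size=(dim + output_size, output_size))  # stand-in for the (9, 4) kernel

W = kernel[:dim]   # rows that multiply the input, shape (5, 4)
U = kernel[dim:]   # rows that multiply the state, shape (4, 4)

fused = np.tanh(np.concatenate((x, state), axis=1) @ kernel)
split = np.tanh(x @ W + state @ U)

print(np.allclose(fused, split))  # True
```

Storing W and U stacked in one kernel lets the step be computed with a single matrix multiplication instead of two.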