Machine Learning in Action: Logistic Regression (2), Predicting the Mortality of Horses from Colic Symptoms

=====================================================================

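The "Machine Learning in Action" blog series collects my notes from reading the book Machine Learning in Action, along with some other machine learning algorithms implemented in Python.

All algorithms are implemented in Python.

Source code on GitHub: https://github.com/Thinkgamer/Machine-Learning-With-Python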

======================================================================

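For an analysis of regression algorithms and a scikit-learn implementation, see: click to read

For the theory behind regression algorithms and a Python implementation, see: click to read

This post applies the model code built in "Machine Learning in Action: Logistic Regression (1)" to a practical case study.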

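Steps for estimating horse colic mortality with logistic regression

1. Collect the data: a data file is provided.

2. Prepare the data: parse the text file with Python and fill in the missing values.

3. Analyze the data: visualize and inspect the data.

4. Train the algorithm: use an optimization algorithm to find the best regression coefficients.

5. Test the algorithm: to quantify how well the regression works, measure the error rate. Based on the error rate, decide whether to go back to the training stage and adjust parameters such as the number of iterations and the step size to obtain better regression coefficients.

6. Use the algorithm: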
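Returning to steps 4 and 5 of the list above, the following is a minimal sketch of one possible optimization routine: stochastic gradient ascent with a decaying step size, plus an error-rate check. It is not the author's code from part 1. The names `stoc_grad_ascent` and `error_rate`, the step-size schedule, and the toy data are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def stoc_grad_ascent(data, labels, num_iter=150, seed=0):
    """Illustrative stochastic gradient ascent for logistic regression."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    labels = np.asarray(labels, dtype=float)
    m, n = data.shape
    weights = np.ones(n)
    for j in range(num_iter):
        # Visit every sample once per pass, in a fresh random order.
        for i, idx in enumerate(rng.permutation(m)):
            # Assumed step-size schedule: decays over time but stays above 0.01.
            alpha = 4.0 / (1.0 + j + i) + 0.01
            error = labels[idx] - sigmoid(np.dot(data[idx], weights))
            weights = weights + alpha * error * data[idx]
    return weights

def error_rate(data, labels, weights):
    """Fraction of samples whose predicted class differs from the label."""
    probs = sigmoid(np.asarray(data, dtype=float) @ weights)
    preds = (probs > 0.5).astype(float)
    return float(np.mean(preds != np.asarray(labels, dtype=float)))

# Toy data (made up for illustration): a bias column plus one feature.
toy_X = [[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]]
toy_y = [0.0, 0.0, 1.0, 1.0]
toy_w = stoc_grad_ascent(toy_X, toy_y, num_iter=20)
print(error_rate(toy_X, toy_y, toy_w))
```

If the measured error rate is too high, `num_iter` and the step-size schedule are the two knobs that step 5 suggests revisiting.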

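Common data preprocessing problems and how to handle them

Three strategies for automatically filling in missing values

1: Fill missing values with a global constant: every missing attribute value is replaced by the same constant.

2: Fill missing values with the mean or mode of all samples that belong to the same class as the given record. For example, if a record of class a in some data set is missing its value for attribute A, the missing value can be replaced by the mean of attribute A over all records of class a.

3: Replace the missing value with the most probable value, which can be determined by regression, an inference-based tool, or decision tree induction. For example, using the attributes of the other customers in the data set, a decision tree can be built to predict the missing value of the attribute in question.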
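The first two strategies above can be sketched with NumPy as follows. This is a minimal illustration, assuming missing values are encoded as NaN; the function names and the tiny data set are hypothetical. Strategy 3 needs a trained model and is not shown.

```python
import numpy as np

def fill_constant(X, value=0.0):
    """Strategy 1: replace every NaN with one global constant."""
    X = np.array(X, dtype=float)
    X[np.isnan(X)] = value
    return X

def fill_class_mean(X, y):
    """Strategy 2: replace NaN with the attribute mean within the same class."""
    X = np.array(X, dtype=float)
    y = np.asarray(y)
    for label in np.unique(y):
        rows = np.where(y == label)[0]
        for col in range(X.shape[1]):
            values = X[rows, col]
            missing = np.isnan(values)
            # Skip columns with nothing to fill or nothing to average.
            if missing.any() and not missing.all():
                X[rows[missing], col] = values[~missing].mean()
    return X

# Hypothetical data: two attributes, two classes, two missing entries.
X = [[1.0, np.nan], [3.0, 4.0], [np.nan, 10.0], [7.0, 20.0]]
y = ['a', 'a', 'b', 'b']
filled_zero = fill_constant(X)
filled_mean = fill_class_mean(X, y)
```

For a categorical attribute the mode would take the place of the mean in `fill_class_mean`.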

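Methods for smoothing noisy data

1: Binning: smooth a sorted data value by consulting its neighbors.

Variants: smoothing by bin means, by bin medians, or by bin boundaries.

2: Clustering: organize similar values into groups, or clusters. Outliers can then be detected, and removing them smooths the data.

3: Regression: smooth the data by fitting it to a function with a regression method (linear or nonlinear regression).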
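As a small illustration of the binning method above, the sketch below sorts the values, splits them into bins of equal size, and smooths each bin by its mean, median, or boundaries. The function name `smooth_by_bins` and the sample values are assumptions for illustration; note that the result is returned in sorted order, not in the original order.

```python
import numpy as np

def smooth_by_bins(values, bin_size, method="mean"):
    """Smooth sorted values bin by bin (bins hold bin_size values each)."""
    data = np.sort(np.asarray(values, dtype=float))
    out = data.copy()
    for start in range(0, len(data), bin_size):
        chunk = data[start:start + bin_size]
        if method == "mean":
            out[start:start + bin_size] = chunk.mean()
        elif method == "median":
            out[start:start + bin_size] = np.median(chunk)
        elif method == "boundary":
            lo, hi = chunk[0], chunk[-1]
            # Each value moves to the closer boundary; ties go to the lower one.
            out[start:start + bin_size] = np.where(chunk - lo <= hi - chunk, lo, hi)
        else:
            raise ValueError("unknown method: %s" % method)
    return out

# Hypothetical sample values, already easy to split into three bins of three.
prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]
by_mean = smooth_by_bins(prices, 3, "mean")
by_median = smooth_by_bins(prices, 3, "median")
by_boundary = smooth_by_bins(prices, 3, "boundary")
```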

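Three methods of data normalization

1: Min-max normalization

value = (current value - min) / (max - min)

2: z-score normalization

(1): Compute the mean avg and the standard deviation t

(2): Normalize: value = (current value - avg) / t

3: Decimal scaling normalization

Normalize by moving the decimal point of the values of attribute f. The number of places the decimal point moves depends on the maximum absolute value of f.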
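The three formulas above can be written as short NumPy functions. This is a sketch under a few assumptions: the attribute is not constant (otherwise min-max and z-score divide by zero), the standard deviation is the population one, and decimal scaling never shifts the point to the left of where it already is. The function names are made up for illustration.

```python
import numpy as np

def min_max(x):
    """Min-max normalization: rescale values into [0, 1]."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())

def z_score(x):
    """z-score normalization: zero mean, unit (population) standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()

def decimal_scaling(x):
    """Divide by 10**j, with j the smallest non-negative integer giving max|x| < 1."""
    x = np.asarray(x, dtype=float)
    max_abs = np.abs(x).max()
    j = int(np.floor(np.log10(max_abs))) + 1 if max_abs > 0 else 0
    return x / (10.0 ** max(j, 0))

incomes = [200, 300, 400, 600, 1000]
scaled = min_max(incomes)
standardized = z_score(incomes)
shifted = decimal_scaling([-986, 917])  # max |value| is 986, so divide by 1000
```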

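Data discretization

1: Supervised discretization: class information is used during discretization.

Entropy-based discretization uses a top-down splitting technique and makes use of the class distribution when computing and choosing split points.

2: Unsupervised discretization: class information is not used during discretization.

(1) Equal-width discretization: divide the range of the attribute into intervals of equal width, with the number of intervals specified by the user. Its drawback is that the instances may be distributed very unevenly across the intervals.

(2) Equal-frequency discretization: put the same number of objects into each interval, with the number of intervals specified by the user.

A variant of the equal-frequency method is approximate equal-frequency discretization. Its basic idea is to discretize continuous data under the assumption that the data approximately follow a normal distribution.

(3) Clustering-based discretization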
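To make the difference between the equal-width and equal-frequency methods above concrete, here is a minimal NumPy sketch. The function names and the sample data are assumptions for illustration. The single large value shows the uneven distribution that equal-width intervals can produce.

```python
import numpy as np

def equal_width(x, k):
    """Assign each value to one of k intervals of equal width (labels 0..k-1)."""
    x = np.asarray(x, dtype=float)
    edges = np.linspace(x.min(), x.max(), k + 1)
    # Use only the interior edges so that the maximum falls in the last interval.
    return np.digitize(x, edges[1:-1])

def equal_frequency(x, k):
    """Assign each value to one of k intervals holding about len(x) / k values."""
    x = np.asarray(x, dtype=float)
    # Rank of each value in sorted order (0 for the smallest).
    ranks = np.argsort(np.argsort(x, kind="stable"), kind="stable")
    return ranks * k // len(x)

values = [1, 2, 3, 4, 5, 6, 7, 8, 100]
width_bins = equal_width(values, 3)
freq_bins = equal_frequency(values, 3)
```

With this rank-based approach, equal values can end up in different intervals, so data with many ties may need an extra rule.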

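The approach to missing values used in this example is to filter out the records whose label is missing and to fill every other missing value with 0. The data used here have already been processed this way (click to download: training set, test set). The code follows.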

#coding:utf-8
'''
Created on 2016/4/25
@author: Gamer Think
'''
import LogisticRegession as lr
from numpy import *

# Classify one sample for the two-class problem
def classifyVector(inX, weights):
    prob = lr.sigmoid(sum(inX * weights))
    if prob > 0.5:
        return 1.0
    else:
        return 0.0

# Train and test
def colicTest():
    trainingSet = []; trainingLabels = []
    # Train the regression model: 21 features per line, label in column 21
    with open('horseColicTraining.txt') as frTrain:
        for line in frTrain.readlines():
            currLine = line.strip().split('\t')
            lineArr = []
            for i in range(21):
                lineArr.append(float(currLine[i]))
            trainingSet.append(lineArr)
            trainingLabels.append(float(currLine[21]))
    trainWeights = lr.stocGradAscent1(array(trainingSet), trainingLabels, 1000)
    errorCount = 0; numTestVec = 0.0
    # Test the regression model
    with open('horseColicTest.txt') as frTest:
        for line in frTest.readlines():
            numTestVec += 1.0
            currLine = line.strip().split('\t')
            lineArr = []
            for i in range(21):
                lineArr.append(float(currLine[i]))
            # int(float(...)) accepts labels written as "1" or "1.000000"
            if int(classifyVector(array(lineArr), trainWeights)) != int(float(currLine[21])):
                errorCount += 1
    errorRate = (float(errorCount)/numTestVec)
    print("the error rate of this test is: %f" % errorRate)
    return errorRate

def multiTest():

numTests = 10

errorSum = 0.0

for k in range(numTests):

errorSum += colicTest()

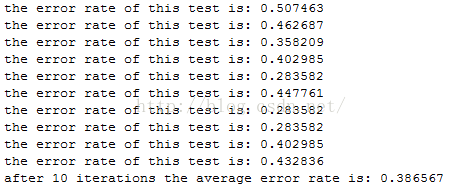

print "after %d iterations the average error rate is: %f" % (numTests,errorSum/float(numTests))

if __name__=="__main__":

multiTest()

代码解读:

colicTest():打开测试集和训练集,并对数据进行格式化处理,并调用stocGradAscent1()函数进行回归系数的计算,设置迭代次数为500,这里可以自行设定,在系数计算完之后,导入测试集并计算分类错误率。整体看来,colictest()函数具有完全独立的功能,多次运行的结果可能稍有不同,这是因为其中包含有随机的成分在里边,如果计算的回归系数是完全收敛,那么结果才是确定的

multiTest():调用 colicTest() 10次并求结果的平均值

运行结果如下图: