Deep Learning with Python 系列笔记(五):处理文本和序列数据

处理文本

文本是最广泛的序列数据形式之一。它可以被理解为一个字符序列,或者一个单词序列,尽管它在单词的级别上是最常见的。深度学习序列处理模型可以利用文本来生成一种基本的自然语言理解形式,充分适用于从文档分类、情绪分析、作者识别,甚至是问题回答(在受约束的上下文)中。

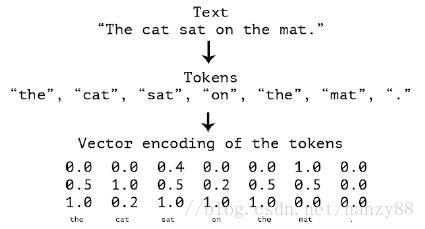

与所有其他的神经网络一样,深度学习模型不以输入原始文本作为输入:它们只处理数字张量。矢量化文本是将文本转换为数字张量的过程,可以通过多种方式实现:

- 通过将文本分割成单词,并将每个单词转换成一个向量。

- 通过将文本分割成字符,并将每个字符转换成一个矢量。

- 通过提取单词或字符的“N-grams”,并将每个N-gram转换成一个矢量。“N-gram”是多个连续单词或字符的重叠组。

总的来说,可以分解文本(单词、字符或N-gram)的不同单位称为“tokens”,将文本分解为这样的标记称为“标记化(tokenization)”。所有的文本矢量化过程包括应用一些标记化方案,然后将数字向量与生成的标记关联起来。这些矢量被压缩成序列张量,被送入深度神经网络。将向量与 tokens 关联的方法有多种。两种主要的方法:一种是 tokens 的热编码,另一种是 tokens 嵌入(通常只用于单词,并称为“单词嵌入”)。

理解 N-grams 和 bag-of-words

单词N-grams是一组N个(或更少)的连续单词,你可以从一个句子中提取出来,同样的概念也可以应用于字符而不是单词。

2 - grams:

{"The", "The cat", "cat", "cat sat", "sat",

"sat on", "on", "on the", "the", "the mat", "mat"}

3 - grams

{"The", "The cat", "cat", "cat sat", "The cat sat",

"sat", "sat on", "on", "cat sat on", "on the", "the",

"sat on the", "the mat", "mat", "on the mat"}

Such a set is called a “bag-of-3-grams” (resp. 2-grams). The term “bag” here refers to the fact that we are dealing with a set of tokens rather than a list or sequence: the tokens have no specific order. This family of tokenization method is called “bag-of-words.”

对单词或字符的One-hot encoding

one-hot编码是把标记转变为向量最常用和基础的方法,它包括将一个唯一的整数索引与每个单词联系起来,然后将这个整数索引 i 变成一个大小为N的二进制向量,即词汇表的大小,除了第 i 项之外为 1 外,其他全是0。

Word level one-hot encoding (toy example)

import numpy as np

# This is our initial data; one entry per "sample"

# (in this toy example, a "sample" is just a sentence, but

# it could be an entire document).

samples = ['The cat sat on the mat.', 'The dog ate my homework.']

# First, build an index of all tokens in the data.

token_index = {}

for sample in samples:

# We simply tokenize the samples via the `split` method.

# in real life, we would also strip punctuation and special characters

# from the samples.

for word in sample.split():

if word not in token_index:

# Assign a unique index to each unique word

token_index[word] = len(token_index) + 1

# Note that we don't attribute index 0 to anything.

# Next, we vectorize our samples.

# We will only consider the first `max_length` words in each sample.

max_length = 10

# This is where we store our results:

results = np.zeros((len(samples), max_length, max(token_index.values()) + 1))

for i, sample in enumerate(samples):

for j, word in list(enumerate(sample.split()))[:max_length]:

index = token_index.get(word)

results[i, j, index] = 1.

Character level one-hot encoding (toy example)

import string

samples = ['The cat sat on the mat.', 'The dog ate my homework.']

characters = string.printable # All printable ASCII characters.

token_index = dict(zip(range(1, len(characters) + 1), characters))

max_length = 50

results = np.zeros((len(samples), max_length, max(token_index.keys()) + 1))

for i, sample in enumerate(samples):

for j, character in enumerate(sample):

index = token_index.get(character)

results[i, j, index] = 1.

注意,Keras有内置的实用程序,可以从原始文本数据开始,在单词级别或字符级别执行一种one-hot编码文本。这是你实际上应该使用,因为它会照顾一些重要的特性,如剥离特殊字符的字符串,或只在前N个最常见的单词在你的数据集(一个常见的限制,以避免处理那些非常大的输入向量空间)。

Using Keras for word-level one-hot encoding

from keras.preprocessing.text import Tokenizer

samples = ['The cat sat on the mat.', 'The dog ate my homework.']

# We create a tokenizer, configured to only take

# into account the top-1000 most common on words

tokenizer = Tokenizer(num_words=1000)

# This builds the word index

tokenizer.fit_on_texts(samples)

# This turns strings into lists of integer indices.

sequences = tokenizer.texts_to_sequences(samples)

# You could also directly get the one-hot binary representations.

# Note that other vectorization modes than one-hot encoding are supported!

one_hot_results = tokenizer.texts_to_matrix(samples, mode='binary')

# This is how you can recover the word index that was computed

word_index = tokenizer.word_index

print('Found %s unique tokens.' % len(word_index))

one-hot 编码的一个变种就是所谓的“one - hot hashing trick”,当你的词库中独特的标记太多的时候常常时候这种方法。不是显式地为每个单词分配索引,并在字典中保存这些索引的引用,而是可以把单词哈希(散列)成固定大小的向量,这通常是使用非常轻量级的哈希函数完成的。

这种方法的主要优点是,它可以保留一个显式的单词索引,这可以节省内存,并允许数据的在线编码(在看到所有可用数据之前,开始生成 token 向量)。这种方法的一个缺点是,它容易受到“哈希冲突”的影响:两个不同的单词可能会以相同的散列结束,随后,任何查看这些哈希的机器学习模型都无法区分这两个单词之间的区别。当哈希空间的维数大于被散列的唯一令牌的总数时,哈希冲突的可能性就会降低。

Word-level one-hot encoding with hashing trick (toy example)

samples = ['The cat sat on the mat.', 'The dog ate my homework.']

# We will store our words as vectors of size 1000.

# Note that if you have close to 1000 words (or more)

# you will start seeing many hash collisions, which

# will decrease the accuracy of this encoding method.

dimensionality = 1000

max_length = 10

results = np.zeros((len(samples), max_length, dimensionality))

for i, sample in enumerate(samples):

for j, word in list(enumerate(sample.split()))[:max_length]:

# Hash the word into a "random" integer index

# that is between 0 and 1000

index = abs(hash(word)) % dimensionality

results[i, j, index] = 1.

word embeddings