离群点检测算法——LOF(Local Outlier Factor)

异常检测

异常检测的实质是寻找观测值和参照值之间有意义的偏差。数据库中的数据由于各种原因常常会包含一些异常记录,对这些异常记录的检测和解释有很重要的意义。异常检测目前在入侵检测、金融欺诈、股票分析等领域都有着比较好的实际应用效果。

离群点检测是异常检测中最常用的方法之一。离群点检测的主要目的是为了检测出那些与正常数据行为或特征属性差别较大的异常数据或行为,在一些文献中,这些数据和行为又被叫做孤立点、噪音、异常点或离群点,这些叫法中离群点的叫法较为普遍。

离群点检测算法分类

总的来说,目前主要的离群点检测技术包括:基于统计的离群检测方法、基于聚类的离群检测方法、基于分类的离群检测方法、基于距离的离群检测方法、基于密度的离群检测方法和基于信息熵的离群检测方法。

而LOF算法就是基于距离的离群检测方法之一。

LOF简介

LOF算法(Local Outlier Factor),全称又叫局部异常因子,是一种基于距离的异常点检测算法。

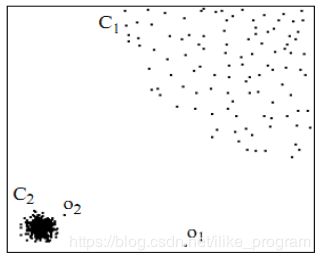

用视觉直观的感受一下,如下图,对于 C 1 C_1 C1集合的点,整体间距、密度、分散情况较为均匀一致,可以认为是同一簇;对于 C 2 C_2 C2集合的点,同样可认为是一簇。 o 1 、 o 2 o_1、o_2 o1、o2点相对孤立,可以认为是异常点或离散点。现在的问题是,如何实现算法的通用性,可以满足 C 1 C_1 C1和 C 2 C_2 C2这种密度分散情况迥异的集合的异常点识别。LOF可以实现我们的目标。

下面介绍LOF算法的重要概念:

1) d ( p , o ) d(p,o) d(p,o): p p p和 o o o两点之间的距离;

2) k − d i s t a n c e k-distance k−distance:第 k k k距离;

对于点 p p p的第 k k k距离 d k ( p ) d_k(p) dk(p)定义如下: d k ( p ) = d ( p , o ) d_k(p)=d(p,o) dk(p)=d(p,o),并且满足:

a)在集合中至少有不包括 p p p在内的 k k k个点 o ′ ∈ C { x ≠ p } , 满 足 d ( p , o ′ ) ≤ d ( p , o ) {o}'\in C\{x\ne p\},满足d(p,{o}')\le d(p,o) o′∈C{x̸=p},满足d(p,o′)≤d(p,o);

b)在集合中最多有不包括 p p p在内的 k − 1 k−1 k−1个点 o ′ ∈ C { x ≠ p } , 满 足 d ( p , o ′ ) < d ( p , o ) {o}'\in C\{x\ne p\},满足d(p,{o}')< d(p,o) o′∈C{x̸=p},满足d(p,o′)<d(p,o);

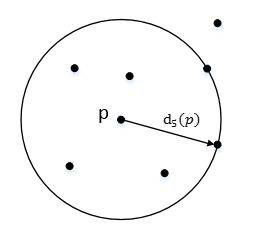

p p p的第 k k k距离,也就是距离 p p p第 k k k远的点的距离,不包括 p p p,如图

3) k − d i s t a n c e k-distance k−distance n e i g h b o r h o o d neighborhood neighborhood o f of of p p p:第 k k k距离邻域

点 p p p的第 k k k距离邻域 N k ( p ) N_k(p) Nk(p),就是 p p p的第 k k k距离即以内的所有点,包括第 k k k距离。因此 p p p的第 k k k邻域点的个数 ∣ N k ( p ) ∣ ≥ k |{{N}_{k}}(p)|\ge k ∣Nk(p)∣≥k。

4) r e a c h reach reach d i s t a n c e distance distance:可达距离

点 o o o到点 p p p的第 k k k可达距离定义为:

r e a c h − d i s t a n c e k ( p , o ) = m a x { k − d i s tan c e ( o ) , d ( p , o ) } reach-distance_k(p,o)=max \{k-dis\tan ce(o),d(p,o)\} reach−distancek(p,o)=max{k−distance(o),d(p,o)}

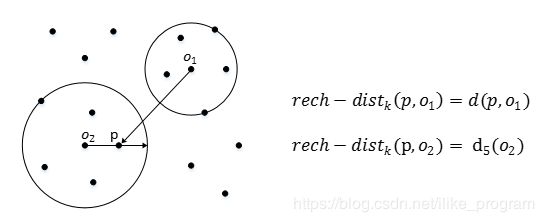

也就是,点 o o o到点 p p p的第 k k k可达距离,至少是 o o o的第 k k k距离,或者为 o 、 p o、p o、p间的真实距离。这也意味着,离点 o o o最近的 k k k个点, o o o到他们的可达距离被认为相等,且都等于 d k ( o ) d_k(o) dk(o)。如图, o 1 o_1 o1到 p p p的第5可达距离为 d ( p , o 1 ) d(p,o_1) d(p,o1), o 2 o_2 o2到 p p p的第5可达距离为 d 5 ( o 2 ) d_5(o_2) d5(o2)。

5) l o c a l local local r e a c h a b i l i t y reachability reachability d e n s i t y density density:局部可达密度

点 p p p的局部可达密度表示为:

l r d k ( p ) = 1 / ( ∑ o ∈ N k ( p ) r e a c h − d i s t k ( p , o ) ∣ N k ( p ) ∣ ) lr{{d}_{k}}(p)=1/(\frac{\sum\nolimits_{o\in {{N}_{k}}(p)}{reach-dis{{t}_{k}}(p,o)}}{|{{N}_{k}}(p)|}) lrdk(p)=1/(∣Nk(p)∣∑o∈Nk(p)reach−distk(p,o))

表示点 p p p的第 k k k邻域内点到 p p p的平均可达距离的倒数。

注意,是 p p p的邻域点 N k ( p ) N_k(p) Nk(p)到 p p p的可达距离,不是 p p p到 N k ( p ) N_k(p) Nk(p)的可达距离,一定要弄清楚关系。

这个值的含义可以这样理解,首先这代表一个密度,密度越高,我们认为越可能属于同一簇,密度越低,越可能是离群点。如果 p p p和周围邻域点是同一簇,那么可达距离越可能为较小的 d k ( o ) d_k(o) dk(o),导致可达距离之和较小,密度值较高;如果 p p p和周围邻居点较远,那么可达距离可能都会取较大值 d p ( o ) d_p(o) dp(o),导致密度较小,越可能是离群点。

6) l o c a l local local o u t l i e r outlier outlier o u t l i e r outlier outlier:局部离群因子

点 p p p的局部离群因子表示为:

L O F k ( p ) = ∑ o ∈ N k ( p ) l r d k ( o ) l r d k ( p ) ∣ N k ( p ) ∣ = ∑ o ∈ N k ( p ) l r d k ( o ) ∣ N k ( p ) ∣ / l r d k ( p ) LO{{F}_{k}}(p)=\frac{\sum\nolimits_{o\in {{N}_{k}}(p)}{\frac{lr{{d}_{k}}(o)}{lr{{d}_{k}}(p)}}}{|{{N}_{k}}(p)|}=\frac{\sum\nolimits_{o\in {{N}_{k}}(p)}{lr{{d}_{k}}(o)}}{|{{N}_{k}}(p)|}/lr{{d}_{k}}(p) LOFk(p)=∣Nk(p)∣∑o∈Nk(p)lrdk(p)lrdk(o)=∣Nk(p)∣∑o∈Nk(p)lrdk(o)/lrdk(p)

表示点 p p p的邻域点 N k ( p ) N_k(p) Nk(p)的局部可达密度与点 p p p的局部可达密度之比的平均数。

如果这个比值越接近1,说明 p p p的其邻域点密度差不多, p p p可能和邻域同属一簇;如果这个比值越小于1,说明 p p p的密度高于其邻域点密度, p p p为密集点;如果这个比值越大于1,说明 p p p的密度小于其邻域点密度, p p p越可能是异常点。

小结

现在概念定义已经介绍完了,现在再回过头来看一下LOF的思想,主要是通过比较每个点 p p p和其邻域点的密度来判断该点是否为异常点,如果点 p p p的密度越低,越可能被认定是异常点。至于密度,是通过点之间的距离来计算的,点之间距离越远,密度越低,距离越近,密度越高,完全符合我们的理解。而且,因为LOF对密度的是通过点的第 k k k邻域来计算,而不是全局计算,因此得名为“局部”异常因子,这样,对于图1的两种数据集 C 1 C_1 C1和 C 2 C_2 C2,LOF完全可以正确处理,而不会因为数据密度分散情况不同而错误的将正常点判定为异常点。

LOF算法的python实现

下述代码在python2.7中测试通过。

lof.py如下:

#!/usr/bin/python

# -*- coding: utf8 -*-

from __future__ import division

def distance_euclidean(instance1, instance2):

"""Computes the distance between two instances. Instances should be tuples of equal length.

Returns: Euclidean distance

Signature: ((attr_1_1, attr_1_2, ...), (attr_2_1, attr_2_2, ...)) -> float"""

def detect_value_type(attribute):

"""Detects the value type (number or non-number).

Returns: (value type, value casted as detected type)

Signature: value -> (str or float type, str or float value)"""

from numbers import Number

attribute_type = None

if isinstance(attribute, Number):

attribute_type = float

attribute = float(attribute)

else:

attribute_type = str

attribute = str(attribute)

return attribute_type, attribute

# check if instances are of same length

if len(instance1) != len(instance2):

raise AttributeError("Instances have different number of arguments.")

# init differences vector

differences = [0] * len(instance1)

# compute difference for each attribute and store it to differences vector

for i, (attr1, attr2) in enumerate(zip(instance1, instance2)):

type1, attr1 = detect_value_type(attr1)

type2, attr2 = detect_value_type(attr2)

# raise error is attributes are not of same data type.

if type1 != type2:

raise AttributeError("Instances have different data types.")

if type1 is float:

# compute difference for float

differences[i] = attr1 - attr2

else:

# compute difference for string

if attr1 == attr2:

differences[i] = 0

else:

differences[i] = 1

# compute RMSE (root mean squared error)

rmse = (sum(map(lambda x: x**2, differences)) / len(differences))**0.5

return rmse

class LOF:

"""Helper class for performing LOF computations and instances normalization."""

def __init__(self, instances, normalize=True, distance_function=distance_euclidean):

self.instances = instances

self.normalize = normalize

self.distance_function = distance_function

if normalize:

self.normalize_instances()

def compute_instance_attribute_bounds(self):

min_values = [float("inf")] * len(self.instances[0]) #n.ones(len(self.instances[0])) * n.inf

max_values = [float("-inf")] * len(self.instances[0]) #n.ones(len(self.instances[0])) * -1 * n.inf

for instance in self.instances:

min_values = tuple(map(lambda x,y: min(x,y), min_values,instance)) #n.minimum(min_values, instance)

max_values = tuple(map(lambda x,y: max(x,y), max_values,instance)) #n.maximum(max_values, instance)

self.max_attribute_values = max_values

self.min_attribute_values = min_values

def normalize_instances(self):

"""Normalizes the instances and stores the infromation for rescaling new instances."""

if not hasattr(self, "max_attribute_values"):

self.compute_instance_attribute_bounds()

new_instances = []

for instance in self.instances:

new_instances.append(self.normalize_instance(instance)) # (instance - min_values) / (max_values - min_values)

self.instances = new_instances

def normalize_instance(self, instance):

return tuple(map(lambda value,max,min: (value-min)/(max-min) if max-min > 0 else 0,

instance, self.max_attribute_values, self.min_attribute_values))

def local_outlier_factor(self, min_pts, instance):

"""The (local) outlier factor of instance captures the degree to which we call instance an outlier.

min_pts is a parameter that is specifying a minimum number of instances to consider for computing LOF value.

Returns: local outlier factor

Signature: (int, (attr1, attr2, ...), ((attr_1_1, ...),(attr_2_1, ...), ...)) -> float"""

if self.normalize:

instance = self.normalize_instance(instance)

return local_outlier_factor(min_pts, instance, self.instances, distance_function=self.distance_function)

def k_distance(k, instance, instances, distance_function=distance_euclidean):

#TODO: implement caching

"""Computes the k-distance of instance as defined in paper. It also gatheres the set of k-distance neighbours.

Returns: (k-distance, k-distance neighbours)

Signature: (int, (attr1, attr2, ...), ((attr_1_1, ...),(attr_2_1, ...), ...)) -> (float, ((attr_j_1, ...),(attr_k_1, ...), ...))"""

distances = {}

for instance2 in instances:

distance_value = distance_function(instance, instance2)

if distance_value in distances:

distances[distance_value].append(instance2)

else:

distances[distance_value] = [instance2]

distances = sorted(distances.items())

neighbours = []

k_sero = 0

k_dist = None

for dist in distances:

k_sero += len(dist[1])

neighbours.extend(dist[1])

k_dist = dist[0]

if k_sero >= k:

break

return k_dist, neighbours

def reachability_distance(k, instance1, instance2, instances, distance_function=distance_euclidean):

"""The reachability distance of instance1 with respect to instance2.

Returns: reachability distance

Signature: (int, (attr_1_1, ...),(attr_2_1, ...)) -> float"""

(k_distance_value, neighbours) = k_distance(k, instance2, instances, distance_function=distance_function)

return max([k_distance_value, distance_function(instance1, instance2)])

def local_reachability_density(min_pts, instance, instances, **kwargs):

"""Local reachability density of instance is the inverse of the average reachability

distance based on the min_pts-nearest neighbors of instance.

Returns: local reachability density

Signature: (int, (attr1, attr2, ...), ((attr_1_1, ...),(attr_2_1, ...), ...)) -> float"""

(k_distance_value, neighbours) = k_distance(min_pts, instance, instances, **kwargs)

reachability_distances_array = [0]*len(neighbours) #n.zeros(len(neighbours))

for i, neighbour in enumerate(neighbours):

reachability_distances_array[i] = reachability_distance(min_pts, instance, neighbour, instances, **kwargs)

sum_reach_dist = sum(reachability_distances_array)

if sum_reach_dist == 0:

return float('inf')

return len(neighbours) / sum_reach_dist

def local_outlier_factor(min_pts, instance, instances, **kwargs):

"""The (local) outlier factor of instance captures the degree to which we call instance an outlier.

min_pts is a parameter that is specifying a minimum number of instances to consider for computing LOF value.

Returns: local outlier factor

Signature: (int, (attr1, attr2, ...), ((attr_1_1, ...),(attr_2_1, ...), ...)) -> float"""

(k_distance_value, neighbours) = k_distance(min_pts, instance, instances, **kwargs)

instance_lrd = local_reachability_density(min_pts, instance, instances, **kwargs)

lrd_ratios_array = [0]* len(neighbours)

for i, neighbour in enumerate(neighbours):

instances_without_instance = set(instances)

instances_without_instance.discard(neighbour)

neighbour_lrd = local_reachability_density(min_pts, neighbour, instances_without_instance, **kwargs)

lrd_ratios_array[i] = neighbour_lrd / instance_lrd

return sum(lrd_ratios_array) / len(neighbours)

def outliers(k, instances, **kwargs):

"""Simple procedure to identify outliers in the dataset."""

instances_value_backup = instances

outliers = []

for i, instance in enumerate(instances_value_backup):

instances = list(instances_value_backup)

instances.remove(instance)

l = LOF(instances, **kwargs)

value = l.local_outlier_factor(k, instance)

if value > 1:

outliers.append({"lof": value, "instance": instance, "index": i})

outliers.sort(key=lambda o: o["lof"], reverse=True)

return outliers

测试上述代码,测试脚本test_lof.py:

# -*- coding: utf8 -*-

instances = [

(-4.8447532242074978, -5.6869538132901658),

(1.7265577109364076, -2.5446963280374302),

(-1.9885982441038819, 1.705719643962865),

(-1.999050026772494, -4.0367551415711844),

(-2.0550860126898964, -3.6247409893236426),

(-1.4456945632547327, -3.7669258809535102),

(-4.6676062022635554, 1.4925324371089148),

(-3.6526420667796877, -3.5582661345085662),

(6.4551493172954029, -0.45434966683144573),

(-0.56730591589443669, -5.5859532963153349),

(-5.1400897823762239, -1.3359248994019064),

(5.2586932439960243, 0.032431285797532586),

(6.3610915734502838, -0.99059648246991894),

(-0.31086913190231447, -2.8352818694180644),

(1.2288582719783967, -1.1362795178325829),

(-0.17986204466346614, -0.32813130288006365),

(2.2532002509929216, -0.5142311840491649),

(-0.75397166138399296, 2.2465141276038754),

(1.9382517648161239, -1.7276112460593251),

(1.6809250808549676, -2.3433636210337503),

(0.68466572523884783, 1.4374914487477481),

(2.0032364431791514, -2.9191062023123635),

(-1.7565895138024741, 0.96995712544043267),

(3.3809644295064505, 6.7497121359292684),

(-4.2764152718650896, 5.6551328734397766),

(-3.6347215445083019, -0.85149861984875741),

(-5.6249411288060385, -3.9251965527768755),

(4.6033708001912093, 1.3375110154658127),

(-0.685421751407983, -0.73115552984211407),

(-2.3744241805625044, 1.3443896265777866)]

from lof import outliers

lof = outliers(5, instances)

for outlier in lof:

print outlier["lof"],outlier["instance"]

from matplotlib import pyplot as p

x,y = zip(*instances)

p.scatter(x,y, 20, color="#0000FF")

for outlier in lof:

value = outlier["lof"]

instance = outlier["instance"]

color = "#FF0000" if value > 1 else "#00FF00"

p.scatter(instance[0], instance[1], color=color, s=(value-1)**2*10+20)

p.show()

输出的结果为:

2.20484969217 (3.3809644295064505, 6.749712135929268)

1.79484408482 (-4.27641527186509, 5.6551328734397766)

1.50121865848 (6.455149317295403, -0.45434966683144573)

1.47940253262 (6.361091573450284, -0.9905964824699189)

1.37216956549 (5.258693243996024, 0.032431285797532586)

1.29100195101 (4.603370800191209, 1.3375110154658127)

1.20274006333 (-4.844753224207498, -5.686953813290166)

1.18718018398 (-5.6249411288060385, -3.9251965527768755)

1.10898567816 (0.6846657252388478, 1.4374914487477481)

1.05728304007 (-4.667606202263555, 1.4925324371089148)

1.04216295935 (-5.140089782376224, -1.3359248994019064)

1.02801167935 (-0.5673059158944367, -5.585953296315335)

输出的结果为测试点集中的距离值以及离群点。

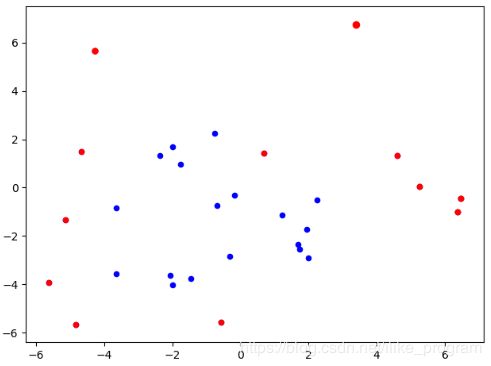

绘制Scatter图像为:

其中蓝点表示传递给LOF构造函数的实例,红点是异常值的实例(lof值> 1)。大小或红点代表lof值,这意味着更大的lof值会产生更大的点。

参考

[1]陈瑜. 离群点检测算法研究[D].兰州大学,2018.

[2]https://blog.csdn.net/wangyibo0201/article/details/51705966