Expanding udev-managed ASM disk groups on Oracle 12c

1. Environment

grid@r9ithzmedcc01[/home/grid]$cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

grid@r9ithzmedcc01[/home/grid]$

Oracle: 12.2.0.1.0, two-node RAC

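2. Add shared storage

This environment runs on ZTE's TECS virtualization platform:

Then manage the mount points for the newly added disk.

Select the two hosts that make up the RAC nodes:

Enter the VM name and click OK to attach the disk to the VM. Once attached, the disk is immediately visible with fdisk inside the VM; no reboot or other action is needed. As the screenshot shows, the new disk appears as /dev/sdg on both RAC nodes.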

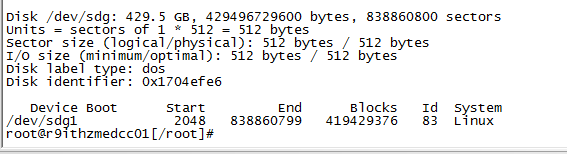

3. Partition the disk

Run:

fdisk /dev/sdg

to partition the disk. A single primary partition is enough here.
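
For repeatable runs, the interactive fdisk session could be scripted. The sketch below is an assumption, not what was done in this article: it uses parted instead of fdisk to write an MBR (msdos) label and one primary partition spanning the disk. The function name partition_single_primary and the DRY_RUN switch are made up for illustration. With DRY_RUN=1 (the default) it only prints the command.

```shell
#!/bin/sh
# Hypothetical helper: create one primary partition covering the whole disk.
# DRY_RUN=1 (the default here) prints the parted command instead of running it.
partition_single_primary() {
    dev="$1"
    cmd="parted -s $dev mklabel msdos mkpart primary 1MiB 100%"
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$cmd"
    else
        # Destructive: rewrites the partition table of $dev.
        $cmd
    fi
}

partition_single_primary /dev/sdg
```

Because the disk is shared, partitioning it on one node is enough. Only set DRY_RUN=0 after double-checking the device name, since the command overwrites the partition table.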

4. Get the UUID of the shared disk

root@r9ithzmedcc01[/root]#/usr/lib/udev/scsi_id -g -u -d /dev/sdg

0QEMU_QEMU_HARDDISK_47611dbd-d99f-42e9-9

root@r9ithzmedcc01[/root]#
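
When several disks are involved, it can help to print the ID of each one in a single pass and compare the output between the two nodes. This is a minimal sketch: the function name list_scsi_ids and the SCSI_ID override variable are illustrative assumptions, while the scsi_id path and flags are the ones used above.

```shell
#!/bin/sh
# Print "<device> <id>" for each device given, using scsi_id as in the step above.
# SCSI_ID can be overridden; the default path is the one used in this article.
SCSI_ID="${SCSI_ID:-/usr/lib/udev/scsi_id}"

list_scsi_ids() {
    for dev in "$@"; do
        # Fall back to a marker when scsi_id fails for a device.
        id=$("$SCSI_ID" -g -u -d "$dev") || id="(no id)"
        echo "$dev $id"
    done
}

# Example (run as root on each node and compare the output):
# list_scsi_ids /dev/sdf /dev/sdg
```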

5. Configure the udev rules for the ASM disks (the rules must be added on both nodes)

oracle@r9ithzmedcc02[/home/oracle]$cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_adb15f0f-c3cf-42bb-a",SYMLINK+="asmocrvote1",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_96d0d19f-5968-41ee-8",SYMLINK+="asmocrvote2",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_0f11ec8e-3d8d-4a0f-9",SYMLINK+="asmocrvote3",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_f8cd06fa-f7ee-45b8-a",SYMLINK+="asmrbdata01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_6accb175-9d48-4c1a-b",SYMLINK+="asmccdata01",OWNER="grid",GROUP="asmadmin",MODE="0660"

#KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_dd8e4e6e-f995-4df9-b",SYMLINK+="asmarchcc01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_22a6bd8c-2a7e-4801-a",SYMLINK+="asmarchcc01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="0QEMU_QEMU_HARDDISK_47611dbd-d99f-42e9-9",SYMLINK+="asmarchrb01",OWNER="grid",GROUP="asmadmin",MODE="0660"

oracle@r9ithzmedcc02[/home/oracle]$

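The entries highlighted in red are the newly added ones; the last rule (asmarchrb01) matches the ID obtained in step 4.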
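
Since every rule differs only in the RESULT value and the SYMLINK name, a new line can be generated rather than copied and edited by hand. The helper below is a sketch; make_asm_rule is a hypothetical name, and the owner, group, and mode are simply the values used in the rules above.

```shell
#!/bin/sh
# Hypothetical helper: print one udev rule in the same shape as the rules above.
# $1 = scsi_id result for the disk, $2 = symlink name to create under /dev.
make_asm_rule() {
    uuid="$1"
    link="$2"
    # The %s placeholders take the id and the link name; $parent stays literal
    # because the format string is single-quoted.
    printf 'KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="%s",SYMLINK+="%s",OWNER="grid",GROUP="asmadmin",MODE="0660"\n' "$uuid" "$link"
}

make_asm_rule "0QEMU_QEMU_HARDDISK_47611dbd-d99f-42e9-9" "asmarchrb01"
```

Run as root, the output could be appended to /etc/udev/rules.d/99-oracle-asmdevices.rules on each node.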

6. Have udev reload the rules with the following commands (run on both nodes)

udevadm control --reload

udevadm trigger --type=devices --action=change

partprobe

You can hold off on the last command, partprobe, and first check whether the ASM disks have been recognized.

oracle@r9ithzmedcc02[/home/oracle]$ll /dev/asm*

lrwxrwxrwx 1 root root 4 Oct 21 21:39 /dev/asmarchcc01 -> sdf1

lrwxrwxrwx 1 root root 4 Oct 21 21:39 /dev/asmarchrb01 -> sdg1

lrwxrwxrwx 1 root root 4 Oct 21 22:04 /dev/asmccdata01 -> sdb1

lrwxrwxrwx 1 root root 4 Oct 21 22:07 /dev/asmocrvote1 -> sda1

lrwxrwxrwx 1 root root 4 Oct 21 22:07 /dev/asmocrvote2 -> sde1

lrwxrwxrwx 1 root root 4 Oct 21 22:07 /dev/asmocrvote3 -> sdd1

lrwxrwxrwx 1 root root 4 Oct 21 22:04 /dev/asmrbdata01 -> sdc1

oracle@r9ithzmedcc02[/home/oracle]$

If the disks are recognized, partprobe is not needed. In my case it was required; without it the disks were not recognized.
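
The "check first, run partprobe only if needed" logic can be expressed as a small check. This is a sketch under assumptions: check_asm_links is a hypothetical helper that only tests whether the named symlinks exist in a directory; it does not verify ownership or permissions.

```shell
#!/bin/sh
# Hypothetical check: report which expected ASM symlinks are missing.
# $1 = directory to look in (normally /dev); remaining args = symlink names.
# Returns 0 when every name is a symlink, 1 otherwise.
check_asm_links() {
    dir="$1"; shift
    missing=0
    for name in "$@"; do
        if [ -L "$dir/$name" ]; then
            echo "ok $name -> $(readlink "$dir/$name")"
        else
            echo "MISSING $name"
            missing=1
        fi
    done
    return "$missing"
}

# Example: check_asm_links /dev asmarchcc01 asmarchrb01 || partprobe
```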

7. Query the disk groups and disks

-- Show disk groups

select group_number,name,state,type,total_mb,free_mb,usable_file_mb,allocation_unit_size/1024/1024 unit_mb from v$asm_diskgroup order by 1;

-- Show disks

set linesize 120

col group_number format 99

col NAME format a10

col PATH format a20

col mount_status format a10

col state format a10

col redundancy format a10

col TOTAL_MB format 99999

col FREE_MB format 999999

col FAILGROUP format a15

col CREATE_DATE format a15

select group_number,name,path,mount_status,state,redundancy,total_mb,free_mb,failgroup,create_date from v$asm_disk order by 1,2;
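
The total_mb and free_mb columns can be turned into a used percentage to judge whether a disk group needs more space. The snippet below is only a sketch: diskgroup_usage is a hypothetical helper that expects "NAME TOTAL_MB FREE_MB" lines on stdin (for example, spooled from the disk group query), and the sample figures are made up.

```shell
#!/bin/sh
# Hypothetical helper: read "NAME TOTAL_MB FREE_MB" lines on stdin and print
# the used percentage per disk group. Lines with a total of 0 are skipped.
diskgroup_usage() {
    awk '$2 > 0 { printf "%s %.1f%% used\n", $1, ($2 - $3) * 100 / $2 }'
}

# Made-up sample figures, not taken from this cluster:
printf 'DATA 102400 25600\nARCH 51200 51200\n' | diskgroup_usage
```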

8. Create the disk groups

create diskgroup CCARCH external redundancy disk '/dev/asmarchcc01' ;

create diskgroup RBARCH external redundancy disk '/dev/asmarchrb01' ;

9. Some commonly used commands

-- Show the databases using the ASM disk groups

col INSTANCE_NAME format a20

col SOFTWARE_VERSION format a20

select * from gv$asm_client order by 1,2;

2. Check the service status on each node (switch to the grid user)

-- List the database names

[grid@node2 ~]$ srvctl config database

RacNode

[grid@node2 ~]$

-- Instance status

[grid@node2 ~]$ srvctl status database -d RacNode

Instance RacNode1 is running on node node1

Instance RacNode2 is running on node node2

[grid@node2 ~]$
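
For scripted checks, the srvctl output can be reduced to a count of instances that are down. This is a sketch: count_not_running is a hypothetical helper, and it relies only on the "is not running" wording that srvctl prints for stopped instances.

```shell
#!/bin/sh
# Hypothetical helper: count instances reported as not running in the
# output of "srvctl status database -d <db>" read from stdin.
count_not_running() {
    # grep -c prints 0 and exits 1 when nothing matches, so ignore its status.
    grep -c 'is not running' || true
}

# Example: srvctl status database -d RacNode | count_not_running
```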

3. Check the ASM disk groups and disks

[grid@node1 ~]$ export ORACLE_SID=+ASM1

[grid@node1 ~]$ sqlplus /nolog

SQL> conn /as sysasm

2. Check database sessions and stop the listeners

-- Check the listener status on each node

[grid@node1 ~]$ srvctl status listener -n node1

Listener LISTENER is enabled on node(s): node1

Listener LISTENER is running on node(s): node1

[grid@node1 ~]$ srvctl status listener -n node2

Listener LISTENER is enabled on node(s): node2

Listener LISTENER is running on node(s): node2

[grid@node1 ~]$

-- Disable listener autostart

[grid@node1 ~]$ srvctl disable listener -n node1

[grid@node1 ~]$ srvctl disable listener -n node2

-- Stop the listeners

[grid@node1 ~]$ srvctl stop listener -n node1

[grid@node1 ~]$ srvctl stop listener -n node2

-- Check the listener status after stopping them and disabling autostart

[grid@node1 ~]$ srvctl status listener -n node1

Listener LISTENER is disabled on node(s): node1

Listener LISTENER is not running on node(s): node1

[grid@node1 ~]$ srvctl status listener -n node2

Listener LISTENER is disabled on node(s): node2

Listener LISTENER is not running on node(s): node2

[grid@node1 ~]$

3. Shut down the database

-- Check the database configuration

[grid@node1 ~]$ srvctl config database -d RacNode

Database unique name: RacNode

Database name: RacNode

Oracle home: /u01/app/oracle/11.2.0/dbhome_1

Oracle user: oracle

Spfile: +DATA/RacNode/spfileRacNode.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: RacNode

Database instances: RacNode1,RacNode2

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Database is administrator managed

[grid@node1 ~]$

-- Disable database autostart (switch to the root user)

[root@node2 ~]# cd /u01/app/11.2.0/grid/bin

[root@node2 bin]# ./srvctl disable database -d RacNode

[root@node2 bin]#

-- Shut down the database

[grid@node1 ~]$ srvctl stop database -d RacNode

[grid@node1 ~]$

-- Check the database status after shutdown

[grid@node1 ~]$ srvctl status database -d RacNode

Instance RacNode1 is not running on node node1

Instance RacNode2 is not running on node node2

[grid@node1 ~]$

4. Shut down the cluster software

-- Check whether autostart is enabled for the cluster on each node

[root@node1 bin]# ./crsctl config has

CRS-4622: Oracle High Availability Services autostart is enabled.

[root@node1 bin]#

[root@node2 bin]# ./crsctl config has

CRS-4622: Oracle High Availability Services autostart is enabled.

-- Disable autostart on each node

[root@node1 bin]# ./crsctl disable has

CRS-4621: Oracle High Availability Services autostart is disabled.

[root@node1 bin]#

[root@node2 bin]# ./crsctl disable has

CRS-4621: Oracle High Availability Services autostart is disabled.

[root@node2 bin]#

-- Verify on each node that autostart is now disabled

[root@node1 bin]# ./crsctl config has

CRS-4621: Oracle High Availability Services autostart is disabled.

[root@node1 bin]#

[root@node2 bin]# ./crsctl config has

CRS-4621: Oracle High Availability Services autostart is disabled.

[root@node2 bin]#
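
The two message codes above are enough to tell the autostart state apart in a script. The helper below is a sketch; has_autostart_state is a hypothetical name, and it only recognizes the two codes shown in this article (CRS-4622 for enabled, CRS-4621 for disabled).

```shell
#!/bin/sh
# Hypothetical helper: map the message code printed by "crsctl config has"
# (first line read from stdin) to a single word.
has_autostart_state() {
    read -r line || true
    case "$line" in
        CRS-4622:*) echo enabled ;;
        CRS-4621:*) echo disabled ;;
        *)          echo unknown ;;
    esac
}

# Example (as root, from the Grid home bin directory):
# ./crsctl config has | has_autostart_state
```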

-- Stop the cluster on each node

[root@node1 bin]# ./crsctl stop has

[root@node2 bin]# ./crsctl stop has

5. Add the disks at the OS level (done with help from the storage engineer)

7. Start the cluster

[root@node1 bin]# ./crsctl start has

CRS-4123: Oracle High Availability Services has been started.

[root@node2 bin]# ./crsctl start has

CRS-4123: Oracle High Availability Services has been started.

[root@node2 bin]#

-- Check that all cluster components have started properly

[grid@node2 ~]$ crsctl status res -t

At this point, the listeners and the database services are stopped.
