Building a CNN with Keras

I. Preparing to build a CNN

Preparing to build a CNN in Keras is not as tedious as in TensorFlow. You only need to import the relevant packages.

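```python
from keras.models import Sequential
```

This imports the sequential model. `Sequential` is the simplest Keras model: a linear stack of layers.

```python
from keras.layers import Dense, Activation, Convolution2D, MaxPooling2D, Flatten
```

This imports the layers for fully connected (dense) layers, activation functions, 2D convolution, max pooling, and flattening.

```python
from keras.optimizers import Adam
```

This imports Adam, the optimizer used to minimize the loss.

Create the model:

```python
model = Sequential()
```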

II. Building the CNN structure

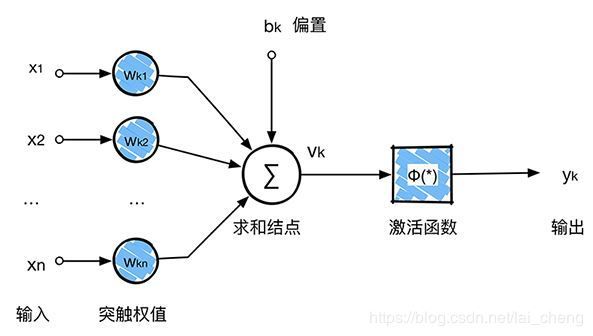

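The figure above illustrates a convolutional layer: it needs synaptic weights (the kernel), a bias (optional), and an activation function, which together produce the output.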
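To make the weights / bias / activation pipeline concrete, here is a minimal NumPy sketch (not how Keras implements it internally). The hypothetical helper `conv2d_single` computes one output channel from one input channel with stride 1 and no padding ('valid'). Like Keras's convolution layers it performs cross-correlation, so the kernel is not flipped. A real `Convolution2D(filters=32)` layer applies 32 such kernels and sums over all input channels.

```python
import numpy as np

def conv2d_single(image, kernel, bias=0.0):
    """'valid' 2D cross-correlation of one channel, plus bias, followed by ReLU."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # weighted sum of the patch under the kernel (the "synaptic weights") plus bias
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel) + bias
    return np.maximum(out, 0.0)  # ReLU activation

image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])
# every 2x2 patch gives x[i, j] - x[i+1, j+1] = -5, so with bias 10 the output is all 5s
print(conv2d_single(image, kernel, bias=10.0))
```

Without the bias the same patches give -5, which ReLU clips to 0. This is a small example of why the bias and activation matter.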

1. Create a convolutional layer with ReLU activation

Just add the corresponding layers to the model:

```python
# input: N x 465 x 128 x 1 (channels_last); patch 3x3, in size 1, out size 32
model.add(Convolution2D(
    filters=32,
    kernel_size=3,
    padding='same',
))  # output: N x 465 x 128 x 32
model.add(Activation('relu'))
```

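filters=32: the layer outputs 32 channels (feature maps).

kernel_size=3: the convolution kernel is 3x3.

padding='same': the input is zero-padded so that, with the default stride of 1, each output channel keeps the height and width of the input.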
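The effect of `padding` and `filters` can be checked with a little arithmetic. The sketch below uses two hypothetical helpers, assuming dilation 1. They follow the usual output-size rules ('same': ceil(n / stride); 'valid': floor((n - k) / stride) + 1), and the parameter count is one k x k x in_channels kernel plus one bias per filter.

```python
import math

def conv_output_length(n, kernel_size, strides=1, padding="valid"):
    """Output length along one spatial axis (dilation 1)."""
    if padding == "same":
        return math.ceil(n / strides)
    if padding == "valid":
        return math.floor((n - kernel_size) / strides) + 1
    raise ValueError("padding must be 'same' or 'valid'")

def conv2d_param_count(kernel_size, in_channels, filters, use_bias=True):
    """Trainable weights of a square-kernel 2D convolution layer."""
    return (kernel_size * kernel_size * in_channels + int(use_bias)) * filters

print(conv_output_length(465, 3, padding="same"))   # 465: size unchanged
print(conv_output_length(465, 3, padding="valid"))  # 463: shrinks by kernel_size - 1
print(conv2d_param_count(3, 1, 32))                  # 320 for the first layer above
```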

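2. Create a pooling layer

As I understand it, the pooling layer downsamples the result of the convolution. With padding='valid', each output channel has size N = floor((imgSize - kSize) / Strides) + 1 along each axis, where imgSize is the width or height of the input, kSize is the pooling window size, and Strides is the pooling stride (with padding='same' it is ceil(imgSize / Strides)). Again, just add the layer to the model. Pay attention to the data layout of the 4D tensors: they can be arranged as (batch_size, channels, rows, cols), called 'channels_first', or as (batch_size, rows, cols, channels), called 'channels_last'. Keras uses 'channels_last' by default (the `image_data_format` setting in `~/.keras/keras.json`), which is also TensorFlow's native layout; 'channels_first' can be selected per layer with the `data_format` argument. Whichever layout you choose, the input data and every convolution and pooling layer must use the same one. With the TensorFlow backend, some 'channels_first' operations may not be supported on CPU.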

```python
model.add(MaxPooling2D(
    pool_size=2,
    strides=2,
    padding='valid',
    data_format='channels_last',  # must match the input data and the conv layers
))  # N x 232 x 64 x 32
```
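Here is a minimal NumPy sketch of 'valid' max pooling on a single channel, assuming a square window. `max_pool2d` is a hypothetical helper, not a Keras function. It takes the maximum of each window and moves by `strides`, so the output size follows floor((n - k) / s) + 1. That is how 465 x 128 becomes 232 x 64 in the layer above.

```python
import numpy as np

def max_pool2d(x, pool_size=2, strides=2):
    """'valid' max pooling over a 2D array (one channel)."""
    out_h = (x.shape[0] - pool_size) // strides + 1
    out_w = (x.shape[1] - pool_size) // strides + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * strides, j * strides
            out[i, j] = x[r:r + pool_size, c:c + pool_size].max()
    return out

x = np.arange(16).reshape(4, 4)
print(max_pool2d(x))                            # maximum of each 2x2 block: [[5, 7], [13, 15]]
print(max_pool2d(np.zeros((465, 128))).shape)   # (232, 64); the last odd row is dropped
```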

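3. Flatten the pooled result for the fully connected layer

Flattening reshapes each sample's multidimensional feature maps into a one-dimensional vector. Its length is N = height × width × channels, i.e. the number of output channels times the width and height of the feature maps.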

```python
model.add(Flatten())  # e.g. N x 3 x 2 x 64 -> N x 384
```
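In NumPy terms, flattening is just a reshape that keeps the batch axis and merges all remaining axes. Here is a sketch with random data for the 3 x 2 x 64 case from the comment above. The batch size of 8 is arbitrary.

```python
import numpy as np

batch = np.random.rand(8, 3, 2, 64)        # N=8 samples, 3x2 feature maps, 64 channels
flat = batch.reshape(batch.shape[0], -1)   # keep N, merge height, width and channels
print(flat.shape)                          # (8, 384), since 3 * 2 * 64 = 384

# in the complete example below the pooled maps are 116 x 32 x 64
print(116 * 32 * 64)                       # 237568 inputs to the first Dense layer
```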

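4. Create a fully connected layer

The fully connected layer shrinks the flattened data further: a matrix multiplication (plus a bias) maps it to a new dimension, and an activation function is then applied.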

```python
# Dense layer 1 (units: 100, activation: ReLU)
model.add(Dense(100))
model.add(Activation('relu'))
```
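Here is a NumPy sketch of what `Dense(100)` followed by ReLU computes on a batch, assuming 384 flattened inputs as in the flatten example. The weights here are random placeholders. Keras stores the kernel with shape (input_dim, units) plus one bias per unit, which gives the parameter count printed at the end.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 384))            # a batch of 4 flattened vectors
W = rng.standard_normal((384, 100)) * 0.01   # kernel, shape (input_dim, units)
b = np.zeros(100)                            # bias, one per unit

h = np.maximum(x @ W + b, 0.0)               # matrix multiply + bias, then ReLU
print(h.shape)                               # (4, 100)
print(W.size + b.size)                       # 38500 trainable parameters
```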

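5. Prediction (output layer)

The prediction layer is also a matrix multiplication. It compresses the output down to one score per class (10 here), and softmax turns these scores into class probabilities.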

```python
# Output layer: one unit per class, softmax activation
model.add(Dense(10))
model.add(Activation('softmax'))
```
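Softmax exponentiates the scores and normalizes them so they sum to 1. Here is a minimal, numerically stable NumPy version. Subtracting the row maximum does not change the result but avoids overflow. The scores are made-up values for one sample with 10 classes.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

scores = np.array([[2.0, 1.0, 0.1, 0, 0, 0, 0, 0, 0, 0]])  # raw Dense(10) outputs
probs = softmax(scores)
print(probs.sum())      # 1.0, up to floating-point rounding
print(probs.argmax())   # 0, the predicted class
```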

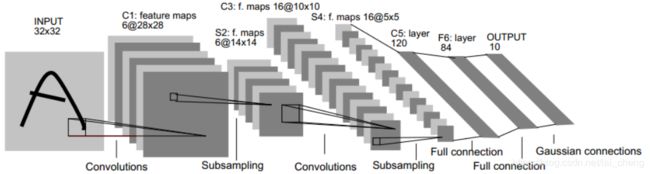

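At this point the CNN is complete. You can add or remove convolution, pooling, and fully connected layers, or change their sizes, to suit your task. The figure above gives a recap.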

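III. Training the model

Before training, we need to define a loss and an optimizer to minimize it. Because the training set is large, we split the data into batches and train on one small batch at a time.

1. Define the optimizer and compile the model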

```python
# Another way to define your optimizer (older Keras versions called learning_rate `lr`)
adam = Adam(learning_rate=1e-4)
# We add metrics to get more results you want to see
model.compile(optimizer=adam, loss='categorical_crossentropy', metrics=['accuracy'])
```
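`loss='categorical_crossentropy'` expects one-hot labels and averages -sum(y_true * log(y_pred)) over the batch. Below is a NumPy sketch of that formula with two hypothetical helpers. The clipping epsilon of 1e-7 mirrors Keras's default fuzz factor. This version is not guaranteed to match Keras's implementation bit for bit.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Integer class labels -> one-hot rows (the same idea as keras.utils.to_categorical)."""
    out = np.zeros((len(labels), num_classes))
    out[np.arange(len(labels)), labels] = 1.0
    return out

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Batch mean of -sum(y_true * log(y_pred)), with predictions clipped away from 0 and 1."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

y_true = one_hot(np.array([0, 2]), 3)
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.1, 0.8]])
print(categorical_crossentropy(y_true, y_pred))  # (-ln 0.7 - ln 0.8) / 2, about 0.290
```

Only the probability assigned to the true class contributes, so the loss falls as the model grows more confident in the correct class.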

2. Train the model

```python
# X_train: training inputs, y_train: one-hot training labels
model.fit(X_train, y_train, epochs=10, batch_size=64)
```

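epochs=10 means the whole training set is passed through the model 10 times. batch_size=64 means each gradient update is computed on 64 samples.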
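To show what the batch split means for one epoch, here is a sketch with a hypothetical generator, `iterate_minibatches`, not part of Keras. It shuffles the sample indices and yields consecutive slices of `batch_size`, and the last batch may be smaller. `model.fit` does something comparable internally (it shuffles by default), so the number of gradient updates is epochs × ceil(samples / batch_size).

```python
import math
import numpy as np

def iterate_minibatches(x, y, batch_size, shuffle=True, seed=None):
    """Yield (x_batch, y_batch) pairs that cover the data once, i.e. one epoch."""
    idx = np.arange(len(x))
    if shuffle:
        np.random.default_rng(seed).shuffle(idx)
    for start in range(0, len(x), batch_size):
        batch_idx = idx[start:start + batch_size]
        yield x[batch_idx], y[batch_idx]

x = np.zeros((1000, 5))   # 1000 dummy samples
y = np.zeros((1000, 10))
batches = list(iterate_minibatches(x, y, batch_size=64, seed=0))
print(len(batches), len(batches[-1][0]))   # 16 batches; the last one holds 40 samples
print(10 * math.ceil(len(x) / 64))         # 160 gradient updates for epochs=10
```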

IV. Complete example

```python
import datetime

import numpy as np

# The author's local helper modules (not shown in this post), assumed to provide
# load_hdf5(path) -> (feature, label) and read_data() -> (test_feature, test_label)
from ops import *
from read_hdf5 import *

from keras.layers import Activation, Convolution2D, Dense, Flatten, Input, MaxPooling2D
from keras.models import Sequential
from keras.optimizers import Adam
from keras.optimizers.schedules import ExponentialDecay
from keras.utils import to_categorical

feature_path = '/home/rainy/tlj/dcase/h5/train_fold1.h5'
max_epoch = 20
high = 465           # input height
wide = 128           # input width
max_batch_size = 50
num_classes = 10

starttime = datetime.datetime.now()

# Load and shuffle the training data
feature, label = load_hdf5(feature_path)
index_shuffle = np.random.permutation(feature.shape[0])
feature = feature[index_shuffle].astype('float32')
label = label[index_shuffle]

# Standardize each of the `wide` columns with its mean and std over all samples and rows
feature_mean = feature.mean(axis=(0, 1))
feature_std = feature.std(axis=(0, 1))
feature = (feature - feature_mean) / feature_std

# One-hot encode the integer labels
y_data = to_categorical(label, num_classes)

# channels_last layout: (samples, height, width, channels)
feature = feature.reshape([-1, high, wide, 1])

# Load testing data, assumed to have the same raw format as the training data,
# and normalize it with the training-set statistics
test_feature, test_label = read_data()
test_feature = ((test_feature - feature_mean) / feature_std).reshape([-1, high, wide, 1])

# Staircase exponential decay: lr = 0.001 * 0.1 ** (step // 300), step = optimizer updates
learning_rate = ExponentialDecay(
    initial_learning_rate=0.001, decay_steps=300, decay_rate=0.1, staircase=True)

model = Sequential()
model.add(Input(shape=(high, wide, 1)))
# 2D conv layer (filters: 32, kernel size: 3) + ReLU -> N x 465 x 128 x 32
model.add(Convolution2D(filters=32, kernel_size=3, padding='same'))
model.add(Activation('relu'))
# 2D max pooling (pool size: 2, stride: 2) -> N x 232 x 64 x 32
model.add(MaxPooling2D(pool_size=2, strides=2, padding='valid'))
# 2D conv layer (filters: 64, kernel size: 5) + ReLU -> N x 232 x 64 x 64
model.add(Convolution2D(filters=64, kernel_size=5, padding='same'))
model.add(Activation('relu'))
# 2D max pooling (pool size: 2, stride: 2) -> N x 116 x 32 x 64
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
# Flatten: N x 116 x 32 x 64 -> N x (116*32*64)
model.add(Flatten())
# Dense layer 1 (units: 100, activation: ReLU)
model.add(Dense(100))
model.add(Activation('relu'))
# Output layer (units: 10, activation: softmax)
model.add(Dense(num_classes))
model.add(Activation('softmax'))

adam = Adam(learning_rate=learning_rate)
model.compile(optimizer=adam, loss='categorical_crossentropy', metrics=['accuracy'])

print('Training--------------------------')
model.fit(feature, y_data, epochs=max_epoch, batch_size=max_batch_size)

print('Testing--------------------------')
# Evaluate on the held-out test set; test_label is assumed to hold integer class labels
test_loss, test_acc = model.evaluate(test_feature, to_categorical(test_label, num_classes))
print('test loss:', test_loss, 'test accuracy:', test_acc)

endtime = datetime.datetime.now()
print("code finish time is:", (endtime - starttime).seconds)
```
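The learning-rate schedule in the complete example (base 0.001, decay 0.1 every 300 steps, staircase) reduces to a one-line formula. The helper below is hypothetical and uses that formula as written; `step` counts optimizer updates (batches), not epochs. With `batch_size=50`, 300 steps correspond to 15,000 training samples.

```python
def staircase_exponential_decay(step, initial_lr=0.001, decay_rate=0.1, decay_steps=300):
    """lr = initial_lr * decay_rate ** floor(step / decay_steps)."""
    return initial_lr * decay_rate ** (step // decay_steps)

# the rate stays flat for 300 steps, then drops by a factor of 10
for step in (0, 299, 300, 600):
    print(step, staircase_exponential_decay(step))
```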

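References: all images above are taken from the internet for illustration only; contact the author for removal in case of infringement.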