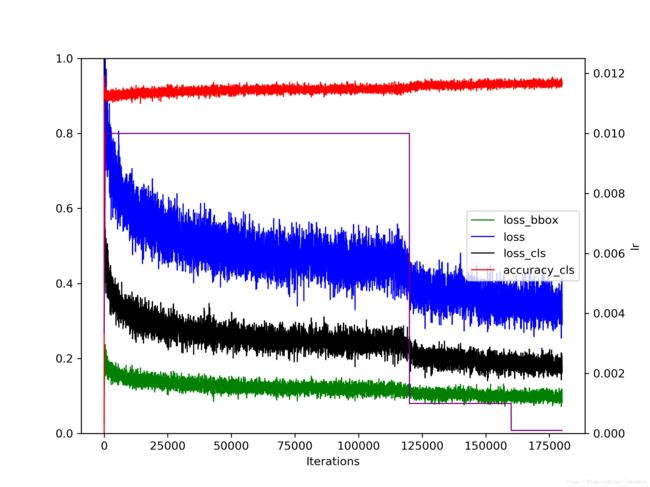

Visualizing Detectron Training Logs

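Detectron's training log looks like the following. A real log normally contains other output as well, which has been removed here for privacy reasons: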

json_stats: {"accuracy_cls": 0.000000, "eta": "29 days, 16:00:50", "iter": 0, "loss": 6.594362, "loss_bbox": 0.024408, "loss_cls": 5.633613, "loss_rpn_bbox_fpn2": 0.094387, "loss_rpn_bbox_fpn3": 0.054846, "loss_rpn_bbox_fpn4": 0.023384, "loss_rpn_bbox_fpn5": 0.041790, "loss_rpn_bbox_fpn6": 0.010158, "loss_rpn_cls_fpn2": 0.497471, "loss_rpn_cls_fpn3": 0.138108, "loss_rpn_cls_fpn4": 0.041028, "loss_rpn_cls_fpn5": 0.025832, "loss_rpn_cls_fpn6": 0.009337, "lr": 0.003333, "mb_qsize": 64, "mem": 9376, "time": 14.240279}

json_stats: {"accuracy_cls": 0.953979, "eta": "3 days, 20:23:34", "iter": 20, "loss": 1.091401, "loss_bbox": 0.094622, "loss_cls": 0.490638, "loss_rpn_bbox_fpn2": 0.050163, "loss_rpn_bbox_fpn3": 0.021536, "loss_rpn_bbox_fpn4": 0.018526, "loss_rpn_bbox_fpn5": 0.007447, "loss_rpn_bbox_fpn6": 0.019559, "loss_rpn_cls_fpn2": 0.223254, "loss_rpn_cls_fpn3": 0.089653, "loss_rpn_cls_fpn4": 0.044842, "loss_rpn_cls_fpn5": 0.013908, "loss_rpn_cls_fpn6": 0.018942, "lr": 0.003600, "mb_qsize": 64, "mem": 10136, "time": 1.848062}

json_stats: {"accuracy_cls": 0.949097, "eta": "1 day, 21:43:08", "iter": 40, "loss": 0.877637, "loss_bbox": 0.095891, "loss_cls": 0.387184, "loss_rpn_bbox_fpn2": 0.070995, "loss_rpn_bbox_fpn3": 0.014666, "loss_rpn_bbox_fpn4": 0.011028, "loss_rpn_bbox_fpn5": 0.009867, "loss_rpn_bbox_fpn6": 0.010003, "loss_rpn_cls_fpn2": 0.115113, "loss_rpn_cls_fpn3": 0.058147, "loss_rpn_cls_fpn4": 0.034393, "loss_rpn_cls_fpn5": 0.011855, "loss_rpn_cls_fpn6": 0.007660, "lr": 0.003867, "mb_qsize": 64, "mem": 10140, "time": 0.914581}

json_stats: {"accuracy_cls": 0.951416, "eta": "1 day, 20:44:22", "iter": 60, "loss": 0.745121, "loss_bbox": 0.101246, "loss_cls": 0.374634, "loss_rpn_bbox_fpn2": 0.035099, "loss_rpn_bbox_fpn3": 0.018243, "loss_rpn_bbox_fpn4": 0.010567, "loss_rpn_bbox_fpn5": 0.009695, "loss_rpn_bbox_fpn6": 0.015504, "loss_rpn_cls_fpn2": 0.061784, "loss_rpn_cls_fpn3": 0.047311, "loss_rpn_cls_fpn4": 0.031080, "loss_rpn_cls_fpn5": 0.012028, "loss_rpn_cls_fpn6": 0.003118, "lr": 0.004133, "mb_qsize": 64, "mem": 10145, "time": 0.895089}

json_stats: {"accuracy_cls": 0.945312, "eta": "1 day, 19:56:00", "iter": 80, "loss": 0.801967, "loss_bbox": 0.124918, "loss_cls": 0.390439, "loss_rpn_bbox_fpn2": 0.029391, "loss_rpn_bbox_fpn3": 0.013622, "loss_rpn_bbox_fpn4": 0.010590, "loss_rpn_bbox_fpn5": 0.008154, "loss_rpn_bbox_fpn6": 0.010013, "loss_rpn_cls_fpn2": 0.071442, "loss_rpn_cls_fpn3": 0.039746, "loss_rpn_cls_fpn4": 0.029353, "loss_rpn_cls_fpn5": 0.012935, "loss_rpn_cls_fpn6": 0.002855, "lr": 0.004400, "mb_qsize": 64, "mem": 10148, "time": 0.879061}

json_stats: {"accuracy_cls": 0.938436, "eta": "1 day, 19:38:56", "iter": 100, "loss": 0.852091, "loss_bbox": 0.130605, "loss_cls": 0.421313, "loss_rpn_bbox_fpn2": 0.030303, "loss_rpn_bbox_fpn3": 0.015375, "loss_rpn_bbox_fpn4": 0.016021, "loss_rpn_bbox_fpn5": 0.006687, "loss_rpn_bbox_fpn6": 0.013717, "loss_rpn_cls_fpn2": 0.086395, "loss_rpn_cls_fpn3": 0.052884, "loss_rpn_cls_fpn4": 0.026198, "loss_rpn_cls_fpn5": 0.012458, "loss_rpn_cls_fpn6": 0.004256, "lr": 0.004667, "mb_qsize": 64, "mem": 10148, "time": 0.873466}

json_stats: {"accuracy_cls": 0.935838, "eta": "1 day, 19:30:04", "iter": 120, "loss": 0.805401, "loss_bbox": 0.136671, "loss_cls": 0.421075, "loss_rpn_bbox_fpn2": 0.035047, "loss_rpn_bbox_fpn3": 0.010261, "loss_rpn_bbox_fpn4": 0.011568, "loss_rpn_bbox_fpn5": 0.009536, "loss_rpn_bbox_fpn6": 0.008578, "loss_rpn_cls_fpn2": 0.065820, "loss_rpn_cls_fpn3": 0.030599, "loss_rpn_cls_fpn4": 0.022531, "loss_rpn_cls_fpn5": 0.011994, "loss_rpn_cls_fpn6": 0.003227, "lr": 0.004933, "mb_qsize": 64, "mem": 10148, "time": 0.870607}

json_stats: {"accuracy_cls": 0.933575, "eta": "1 day, 19:18:09", "iter": 140, "loss": 0.797393, "loss_bbox": 0.145647, "loss_cls": 0.421425, "loss_rpn_bbox_fpn2": 0.028673, "loss_rpn_bbox_fpn3": 0.010867, "loss_rpn_bbox_fpn4": 0.008765, "loss_rpn_bbox_fpn5": 0.007707, "loss_rpn_bbox_fpn6": 0.012843, "loss_rpn_cls_fpn2": 0.058527, "loss_rpn_cls_fpn3": 0.029445, "loss_rpn_cls_fpn4": 0.020039, "loss_rpn_cls_fpn5": 0.010104, "loss_rpn_cls_fpn6": 0.003275, "lr": 0.005200, "mb_qsize": 64, "mem": 10149, "time": 0.866728}

json_stats: {"accuracy_cls": 0.921060, "eta": "1 day, 19:02:14", "iter": 160, "loss": 0.852583, "loss_bbox": 0.162178, "loss_cls": 0.454664, "loss_rpn_bbox_fpn2": 0.031978, "loss_rpn_bbox_fpn3": 0.011842, "loss_rpn_bbox_fpn4": 0.009797, "loss_rpn_bbox_fpn5": 0.010263, "loss_rpn_bbox_fpn6": 0.011376, "loss_rpn_cls_fpn2": 0.063614, "loss_rpn_cls_fpn3": 0.037496, "loss_rpn_cls_fpn4": 0.026917, "loss_rpn_cls_fpn5": 0.011700, "loss_rpn_cls_fpn6": 0.003257, "lr": 0.005467, "mb_qsize": 64, "mem": 10149, "time": 0.861511}

json_stats: {"accuracy_cls": 0.921783, "eta": "1 day, 18:51:04", "iter": 180, "loss": 0.945993, "loss_bbox": 0.180890, "loss_cls": 0.512756, "loss_rpn_bbox_fpn2": 0.034612, "loss_rpn_bbox_fpn3": 0.014450, "loss_rpn_bbox_fpn4": 0.011294, "loss_rpn_bbox_fpn5": 0.008607, "loss_rpn_bbox_fpn6": 0.008530, "loss_rpn_cls_fpn2": 0.069237, "loss_rpn_cls_fpn3": 0.035557, "loss_rpn_cls_fpn4": 0.019328, "loss_rpn_cls_fpn5": 0.010626, "loss_rpn_cls_fpn6": 0.002266, "lr": 0.005733, "mb_qsize": 64, "mem": 10149, "time": 0.857880}

json_stats: {"accuracy_cls": 0.903529, "eta": "1 day, 18:38:57", "iter": 200, "loss": 0.994964, "loss_bbox": 0.214039, "loss_cls": 0.536701, "loss_rpn_bbox_fpn2": 0.023562, "loss_rpn_bbox_fpn3": 0.013030, "loss_rpn_bbox_fpn4": 0.012633, "loss_rpn_bbox_fpn5": 0.014375, "loss_rpn_bbox_fpn6": 0.011610, "loss_rpn_cls_fpn2": 0.054858, "loss_rpn_cls_fpn3": 0.040812, "loss_rpn_cls_fpn4": 0.022550, "loss_rpn_cls_fpn5": 0.008525, "loss_rpn_cls_fpn6": 0.002074, "lr": 0.006000, "mb_qsize": 64, "mem": 10149, "time": 0.853933}

json_stats: {"accuracy_cls": 0.895940, "eta": "1 day, 18:30:52", "iter": 220, "loss": 1.151429, "loss_bbox": 0.235513, "loss_cls": 0.611490, "loss_rpn_bbox_fpn2": 0.050025, "loss_rpn_bbox_fpn3": 0.012086, "loss_rpn_bbox_fpn4": 0.013214, "loss_rpn_bbox_fpn5": 0.009534, "loss_rpn_bbox_fpn6": 0.007997, "loss_rpn_cls_fpn2": 0.058478, "loss_rpn_cls_fpn3": 0.027707, "loss_rpn_cls_fpn4": 0.019668, "loss_rpn_cls_fpn5": 0.008558, "loss_rpn_cls_fpn6": 0.001807, "lr": 0.006267, "mb_qsize": 64, "mem": 10149, "time": 0.851334}

json_stats: {"accuracy_cls": 0.902832, "eta": "1 day, 18:27:17", "iter": 240, "loss": 1.049950, "loss_bbox": 0.207919, "loss_cls": 0.635510, "loss_rpn_bbox_fpn2": 0.029180, "loss_rpn_bbox_fpn3": 0.012820, "loss_rpn_bbox_fpn4": 0.013130, "loss_rpn_bbox_fpn5": 0.007720, "loss_rpn_bbox_fpn6": 0.007256, "loss_rpn_cls_fpn2": 0.059993, "loss_rpn_cls_fpn3": 0.031574, "loss_rpn_cls_fpn4": 0.025615, "loss_rpn_cls_fpn5": 0.008021, "loss_rpn_cls_fpn6": 0.001899, "lr": 0.006533, "mb_qsize": 64, "mem": 10149, "time": 0.850229}

json_stats: {"accuracy_cls": 0.902193, "eta": "1 day, 18:26:37", "iter": 260, "loss": 0.982858, "loss_bbox": 0.213457, "loss_cls": 0.583732, "loss_rpn_bbox_fpn2": 0.041903, "loss_rpn_bbox_fpn3": 0.010444, "loss_rpn_bbox_fpn4": 0.014965, "loss_rpn_bbox_fpn5": 0.011974, "loss_rpn_bbox_fpn6": 0.009276, "loss_rpn_cls_fpn2": 0.058820, "loss_rpn_cls_fpn3": 0.025879, "loss_rpn_cls_fpn4": 0.020322, "loss_rpn_cls_fpn5": 0.009166, "loss_rpn_cls_fpn6": 0.002166, "lr": 0.006800, "mb_qsize": 64, "mem": 10149, "time": 0.850103}

json_stats: {"accuracy_cls": 0.915435, "eta": "1 day, 18:25:14", "iter": 280, "loss": 0.978791, "loss_bbox": 0.180303, "loss_cls": 0.477744, "loss_rpn_bbox_fpn2": 0.046597, "loss_rpn_bbox_fpn3": 0.009179, "loss_rpn_bbox_fpn4": 0.011615, "loss_rpn_bbox_fpn5": 0.010282, "loss_rpn_bbox_fpn6": 0.012041, "loss_rpn_cls_fpn2": 0.064443, "loss_rpn_cls_fpn3": 0.037864, "loss_rpn_cls_fpn4": 0.020618, "loss_rpn_cls_fpn5": 0.010627, "loss_rpn_cls_fpn6": 0.002763, "lr": 0.007067, "mb_qsize": 64, "mem": 10149, "time": 0.849738}

json_stats: {"accuracy_cls": 0.922067, "eta": "1 day, 18:29:11", "iter": 300, "loss": 0.829082, "loss_bbox": 0.169718, "loss_cls": 0.467146, "loss_rpn_bbox_fpn2": 0.013072, "loss_rpn_bbox_fpn3": 0.008168, "loss_rpn_bbox_fpn4": 0.011719, "loss_rpn_bbox_fpn5": 0.009481, "loss_rpn_bbox_fpn6": 0.014123, "loss_rpn_cls_fpn2": 0.037682, "loss_rpn_cls_fpn3": 0.027673, "loss_rpn_cls_fpn4": 0.016552, "loss_rpn_cls_fpn5": 0.010431, "loss_rpn_cls_fpn6": 0.003071, "lr": 0.007333, "mb_qsize": 64, "mem": 10149, "time": 0.851147}

json_stats: {"accuracy_cls": 0.899153, "eta": "1 day, 18:25:40", "iter": 320, "loss": 1.009500, "loss_bbox": 0.206610, "loss_cls": 0.548972, "loss_rpn_bbox_fpn2": 0.031082, "loss_rpn_bbox_fpn3": 0.014388, "loss_rpn_bbox_fpn4": 0.015104, "loss_rpn_bbox_fpn5": 0.009974, "loss_rpn_bbox_fpn6": 0.015146, "loss_rpn_cls_fpn2": 0.054975, "loss_rpn_cls_fpn3": 0.029925, "loss_rpn_cls_fpn4": 0.020506, "loss_rpn_cls_fpn5": 0.008476, "loss_rpn_cls_fpn6": 0.003429, "lr": 0.007600, "mb_qsize": 64, "mem": 10149, "time": 0.850070}

json_stats: {"accuracy_cls": 0.913325, "eta": "1 day, 18:21:39", "iter": 340, "loss": 0.925371, "loss_bbox": 0.193941, "loss_cls": 0.527709, "loss_rpn_bbox_fpn2": 0.028242, "loss_rpn_bbox_fpn3": 0.012744, "loss_rpn_bbox_fpn4": 0.015579, "loss_rpn_bbox_fpn5": 0.011000, "loss_rpn_bbox_fpn6": 0.013291, "loss_rpn_cls_fpn2": 0.050656, "loss_rpn_cls_fpn3": 0.024538, "loss_rpn_cls_fpn4": 0.019409, "loss_rpn_cls_fpn5": 0.008190, "loss_rpn_cls_fpn6": 0.002918, "lr": 0.007867, "mb_qsize": 64, "mem": 10149, "time": 0.848824}

The following script uses matplotlib to plot these values from the log and save the figure as an image. Because it extracts only the json_stats entries, it also works when the log file contains other output.

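```python
import json
import re
import sys

import matplotlib.pyplot as plt

LOG_PATH = './log.txt'  # replace with the path of your training log

fig = plt.figure(figsize=(8, 6), dpi=300)
y1 = fig.add_subplot(111)
y1.set_xlabel('Iterations')
y1.set_ylim(0, 1.0)  # values above 1.0 (e.g. the first iterations) are cut off
y2 = y1.twinx()      # second y-axis for the learning rate

with open(LOG_PATH) as f:
    whole = f.read()

# Keep only the json_stats entries; any other output in the log is ignored
pattern = re.compile(r'json_stats: (\{.*\})')
lis = pattern.findall(whole)
if not lis:
    print("no json_stats entries found in " + LOG_PATH)
    sys.exit(1)

try:
    parsed = [json.loads(j) for j in lis]
except ValueError:  # json.JSONDecodeError is a subclass of ValueError
    print("json format is not correct")
    sys.exit(1)
print(parsed[0])

_iter = [j['iter'] for j in parsed]
_loss = [j['loss'] for j in parsed]
_loss_bbox = [j['loss_bbox'] for j in parsed]
_loss_cls = [j['loss_cls'] for j in parsed]
_accuracy_cls = [j['accuracy_cls'] for j in parsed]
_lr = [j['lr'] for j in parsed]

# Mask R-CNN runs also log a mask loss, which Detectron names 'loss_mask'
if all('loss_mask' in j for j in parsed):
    _mask_loss = [j['loss_mask'] for j in parsed]
else:
    _mask_loss = None

y1.plot(_iter, _loss_bbox, color="green", linewidth=1.0, linestyle="-", label='loss_bbox')
y1.plot(_iter, _loss, color="blue", linewidth=1.0, linestyle="-", label='loss')
y1.plot(_iter, _loss_cls, color="black", linewidth=1.0, linestyle="-", label='loss_cls')
y1.plot(_iter, _accuracy_cls, color="red", linewidth=1.0, linestyle="-", label='accuracy_cls')
if _mask_loss is not None:
    y1.plot(_iter, _mask_loss, color="grey", linewidth=1.0, linestyle="-", label='loss_mask')

# Leave headroom: the largest lr is drawn at 80% of the axis height
y2.set_ylim(0, max(_lr) / 0.8)
y2.plot(_iter, _lr, color="purple", linewidth=1.0, linestyle="-", label='lr')
y2.set_ylabel('lr')

# Optionally turn on the grid
# y1.grid()

# Legend: collect handles from both axes so that lr is listed too
lines1, labels1 = y1.get_legend_handles_labels()
lines2, labels2 = y2.get_legend_handles_labels()
y1.legend(lines1 + lines2, labels1 + labels2)

plt.savefig('./fig.png')
plt.show()
```

How to save the training log to a file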

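While training a network, Detectron prints the training log to the terminal, but it does not save the log to a file automatically.

All you need to do is pipe the terminal output into the `tee` command.

The command below saves the terminal output to a file named log.txt. The file name and extension do not actually matter.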

```bash
python2 tools/train_net.py \
    --cfg configs/getting_started/tutorial_1gpu_e2e_faster_rcnn_R-50-FPN.yaml \
    OUTPUT_DIR /tmp/detectron-output | tee log.txt
```

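Using `tee` is more convenient than redirecting to a file, because the output is still shown in the terminal at the same time. Note that a pipe only carries standard output; add `2>&1` before the `|` if error messages should end up in the file as well.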
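A log saved with `tee` contains everything Detectron printed, not only the json_stats entries. If you want to reuse the parsing step outside the plotting script, a minimal sketch is a line-by-line parser that keeps only entries that decode as JSON. The function name `load_json_stats` is hypothetical, and skipping malformed entries (rather than aborting, as the plotting script does) is an assumption about how you want to treat damaged lines.

```python
import json
import re

# Same pattern as the plotting script: "json_stats: " followed by a one-line JSON object
JSON_STATS = re.compile(r'json_stats: (\{.*\})')


def load_json_stats(lines):
    """Decode every json_stats entry in an iterable of log lines.

    Lines without a json_stats entry are ignored, and entries that are not
    valid JSON are skipped instead of aborting the whole parse.
    """
    stats = []
    for line in lines:
        match = JSON_STATS.search(line)
        if match is None:
            continue  # other terminal output captured by tee
        try:
            stats.append(json.loads(match.group(1)))
        except ValueError:  # also covers json.JSONDecodeError on Python 3
            continue
    return stats


# Hypothetical sample lines, abbreviated from the log excerpt at the top
sample = [
    'INFO some other output\n',
    'json_stats: {"iter": 0, "loss": 6.594362, "lr": 0.003333}\n',
    'json_stats: {"iter": 20, "loss": }\n',  # malformed entry: skipped
    'json_stats: {"iter": 20, "loss": 1.091401, "lr": 0.003600}\n',
]
stats = load_json_stats(sample)
print([s['iter'] for s in stats])  # [0, 20]
```

Because iterating over a file object yields its lines, the saved log can be passed in directly: `with open('./log.txt') as f: stats = load_json_stats(f)`.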