Building an ELK Platform Based on Log Files

Overview:

ELK refers to the combination of three components: Elasticsearch + Logstash + Kibana. These three form the core of the stack, but they are not all of it.

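Elasticsearch is a real-time full-text search and analytics engine that provides three main functions: collecting, analyzing, and storing data. It is a scalable distributed system that exposes REST and Java APIs for efficient search, and it is built on top of the Apache Lucene search library.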

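Logstash is a tool for collecting, parsing, and filtering logs. It supports almost any type of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, including syslog, messaging systems (for example RabbitMQ), and JMX. It can output data in many ways, including email, websockets, and Elasticsearch.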

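Kibana is a web-based graphical interface for searching, analyzing, and visualizing log data stored in Elasticsearch indices. It retrieves data through the Elasticsearch REST interface. Users can build customized dashboards of their own data, and they can also query and filter the data in ad hoc ways.

Lab environment: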

server1: master 172.25.52.1

server2: worker 172.25.52.2

server3: worker 172.25.52.3

1. Elasticsearch configuration

## Install the JDK

[root@server1 ~]# ls

anaconda-ks.cfg elk install.log install.log.syslog jdk-8u121-linux-x64.rpm

[root@server1 ~]# yum install -y jdk-8u121-linux-x64.rpm

[root@server1 ~]# cd elk/

[root@server1 elk]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

## Install Elasticsearch

[root@server1 elk]# yum install -y elasticsearch-2.3.3.rpm

## Edit the configuration file

[root@server1 elk]# pwd

/root/elk

[root@server1 elk]# cd /etc/elasticsearch/

[root@server1 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server1 elasticsearch]# vim elasticsearch.yml

############################

cluster.name: my-es

node.name: server1

path.data: /var/lib/elasticsearch/

path.logs: /var/log/elasticsearch/

bootstrap.mlockall: true

network.host: 172.25.52.1

http.port: 9200
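server2 and server3 later get a copy of this file. In each copy only `node.name` and `network.host` change, plus the role keys (`node.master`/`node.data`) that are added further down. The per-node lines can be generated instead of hand-edited. The sketch below is minimal: `es_node_settings` is a hypothetical helper, the `master`/`data` roles mirror the settings used in this walkthrough, and it deliberately leaves out `discovery.zen.ping.unicast.hosts`.

```shell
# Hypothetical helper: print the per-node elasticsearch.yml lines used in
# this walkthrough. ROLE is optional: "master" (master only, no data) or
# "data" (data only, not master-eligible).
# Usage: es_node_settings NODE_NAME BIND_IP [ROLE]
es_node_settings() {
    name=$1
    ip=$2
    role=${3:-}
    printf '%s\n' \
        'cluster.name: my-es' \
        "node.name: ${name}" \
        'path.data: /var/lib/elasticsearch/' \
        'path.logs: /var/log/elasticsearch/' \
        'bootstrap.mlockall: true' \
        "network.host: ${ip}" \
        'http.port: 9200'
    case $role in
        master) printf '%s\n' 'node.master: true' 'node.data: false' ;;
        data)   printf '%s\n' 'node.master: false' 'node.data: true' ;;
        '')     ;;
        *)      echo "unknown role: $role" >&2; return 1 ;;
    esac
}
```

For example, `es_node_settings server2 172.25.52.2 data` prints the nine lines for server2's elasticsearch.yml.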

## Start the service

[root@server1 ~]# /etc/init.d/elasticsearch start

Starting elasticsearch: [ OK ]

## Ports 9200 (HTTP) and 9300 (node-to-node transport) are now listening

[root@server1 ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 908/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 984/master

tcp 0 0 172.25.52.1:22 172.25.52.250:35134 ESTABLISHED 1381/sshd

tcp 0 0 ::ffff:172.25.52.1:9200 :::* LISTEN 1656/java

tcp 0 0 ::ffff:172.25.52.1:9300 :::* LISTEN 1656/java

tcp 0 0 :::22 :::* LISTEN 908/sshd

tcp 0 0 ::1:25 :::* LISTEN 984/master

[root@server1 ~]# cd elk/

[root@server1 elk]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

[root@server1 elk]# pwd

/root/elk

## Install the head plugin

[root@server1 elk]# /usr/share/elasticsearch/bin/plugin install file:/root/elk/elasticsearch-head-master.zip

[root@server1 elk]# cd /usr/share/elasticsearch/bin

[root@server1 bin]# ./plugin list

Installed plugins in /usr/share/elasticsearch/plugins:

- head

[root@server1 bin]# cd /usr/share/elasticsearch/plugins

[root@server1 plugins]# ls

head

[root@server1 plugins]# cd head/

[root@server1 head]# ls

elasticsearch-head.sublime-project LICENCE _site

Gruntfile.js package.json src

grunt_fileSets.js plugin-descriptor.properties test

index.html README.textile
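The head page reports cluster health as a color. The `_cluster/health` REST endpoint returns the same value, and this walkthrough queries it with curl further down, so a script can check health without a browser. The sketch below is minimal and assumes only POSIX `sed` plus a response with a single `status` field. The function name `es_health_status` is hypothetical.

```shell
# Read a _cluster/health JSON response on stdin and print its status.
# Exit code: 0 = green, 1 = yellow, 2 = red, 3 = no status found.
es_health_status() {
    status=$(sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1)
    echo "$status"
    case $status in
        green)  return 0 ;;
        yellow) return 1 ;;
        red)    return 2 ;;
        *)      return 3 ;;
    esac
}
```

For example, `curl -s http://172.25.52.1:9200/_cluster/health | es_health_status` prints the status word. It returns 0 only when the cluster is green, so it can gate a script with `&&`.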

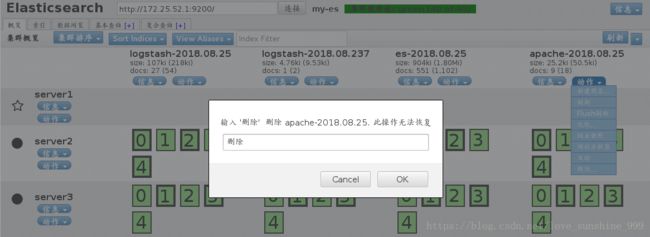

Open 172.25.52.1:9200/_plugin/head/ in a browser. Cluster health is shown as a color:

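Green: healthy; all primary and replica shards are allocated.

Yellow: all primary shards are allocated, but at least one replica shard is not.

Red: at least one primary shard is not allocated, so some data is unavailable.

[root@server1 ~]# scp jdk-8u121-linux-x64.rpm elk/elasticsearch-2.3.3.rpm server2: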

[root@server1 ~]# scp jdk-8u121-linux-x64.rpm elk/elasticsearch-2.3.3.rpm server3:

[root@server2 ~]# ls

anaconda-ks.cfg install.log jdk-8u121-linux-x64.rpm

elasticsearch-2.3.3.rpm install.log.syslog

[root@server2 ~]# yum install -y *.rpm

[root@server3 ~]# ls

anaconda-ks.cfg install.log jdk-8u121-linux-x64.rpm

elasticsearch-2.3.3.rpm install.log.syslog

[root@server3 ~]# yum install -y *.rpm

[root@server1 ~]# cd /etc/elasticsearch/

[root@server1 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server1 elasticsearch]# vim elasticsearch.yml

#############################

discovery.zen.ping.unicast.hosts: ["server1", "server2", "server3"]

[root@server1 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server1 elasticsearch]# scp elasticsearch.yml server2:/etc/elasticsearch/

root@server2's password:

elasticsearch.yml 100% 3199 3.1KB/s 00:00

[root@server1 elasticsearch]# scp elasticsearch.yml server3:/etc/elasticsearch/

root@server3's password:

elasticsearch.yml 100% 3199 3.1KB/s 00:00

[root@server2 ~]# cd /etc/elasticsearch/

[root@server2 elasticsearch]# vim elasticsearch.yml

#############################

node.name: server2

network.host: 172.25.52.2

[root@server2 elasticsearch]# /etc/init.d/elasticsearch start

Starting elasticsearch: [ OK ]

[root@server3 ~]# cd /etc/elasticsearch/

[root@server3 elasticsearch]# vim elasticsearch.yml

#############################

node.name: server3

network.host: 172.25.52.3

[root@server3 elasticsearch]# /etc/init.d/elasticsearch start

Starting elasticsearch: [ OK ]

[root@server1 elasticsearch]# vim elasticsearch.yml

#############################

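Add the following lines. server1 becomes a dedicated master node that stores no data. node.enabled is not a built-in Elasticsearch setting. Elasticsearch 2.x stores unknown node.* keys as custom node attributes, which is why "enabled" appears under "attributes" in the _nodes output below.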

node.master: true

node.data: false

node.enabled: true

[root@server1 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server2 elasticsearch]# vim elasticsearch.yml

############################

Add the following lines (server2 becomes a data-only node):

node.master: false

node.data: true

node.enabled: true

[root@server2 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server3 elasticsearch]# vim elasticsearch.yml

############################

Add the following lines (server3 becomes a data-only node):

node.master: false

node.data: true

node.enabled: true

[root@server3 elasticsearch]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server1 elasticsearch]# curl 172.25.52.1:9200/_nodes/stats/process?pretty

{

"cluster_name" : "my-es",

"nodes" : {

"aaw1B751Qge8tRZ1c9Q1aA" : {

"timestamp" : 1535164777880,

"name" : "server2",

"transport_address" : "172.25.52.2:9300",

"host" : "172.25.52.2",

"ip" : [ "172.25.52.2:9300", "NONE" ],

"attributes" : {

"enabled" : "true",

"master" : "false"

},

"process" : {

"timestamp" : 1535164777880,

"open_file_descriptors" : 159,

"max_file_descriptors" : 65535,

"cpu" : {

"percent" : 0,

"total_in_millis" : 11760

},

"mem" : {

"total_virtual_in_bytes" : 3248803840

}

}

},

"D3YWS-JKTV6jawTqVq3ufQ" : {

"timestamp" : 1535164777980,

"name" : "server1",

"transport_address" : "172.25.52.1:9300",

"host" : "172.25.52.1",

"ip" : [ "172.25.52.1:9300", "NONE" ],

"attributes" : {

"data" : "false",

"enabled" : "true",

"master" : "true"

},

"process" : {

"timestamp" : 1535164777980,

"open_file_descriptors" : 144,

"max_file_descriptors" : 65535,

"cpu" : {

"percent" : 0,

"total_in_millis" : 12910

},

"mem" : {

"total_virtual_in_bytes" : 3238096896

}

}

},

"ttOO8V5XQ064lF1NzjfkjQ" : {

"timestamp" : 1535164778294,

"name" : "server3",

"transport_address" : "172.25.52.3:9300",

"host" : "172.25.52.3",

"ip" : [ "172.25.52.3:9300", "NONE" ],

"attributes" : {

"enabled" : "true",

"master" : "false"

},

"process" : {

"timestamp" : 1535164778294,

"open_file_descriptors" : 154,

"max_file_descriptors" : 65535,

"cpu" : {

"percent" : 0,

"total_in_millis" : 14050

},

"mem" : {

"total_virtual_in_bytes" : 3255107584

}

}

}

}

}

[root@server1 elasticsearch]# curl -XGET 'http://172.25.52.1:9200/_cluster/health?pretty=true'

{

"cluster_name" : "my-es",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 2,

"active_primary_shards" : 5,

"active_shards" : 10,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

## Delete an index (here, the index named "index")

[root@server1 elasticsearch]# curl -XDELETE 'http://172.25.52.1:9200/index'

{"acknowledged":true}

2. Logstash configuration

[root@server1 elasticsearch]# cd

[root@server1 ~]# cd elk/

[root@server1 elk]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

[root@server1 elk]# rpm -ivh logstash-2.3.3-1.noarch.rpm

Preparing... ########################################### [100%]

1:logstash ########################################### [100%]

Data tests:

1. Output to the terminal:

[root@server1 bin]# pwd

/opt/logstash/bin

[root@server1 bin]# ls

logstash logstash.lib.sh logstash-plugin.bat plugin.bat rspec.bat

logstash.bat logstash-plugin plugin rspec setup.bat

## input plugin: stdin (read from the terminal); output plugin: stdout (print to the terminal)

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin{} } output{ stdout {} }'

Settings: Default pipeline workers: 1

Pipeline main started

hello

2018-08-25T02:49:23.432Z server1 hello

hi

2018-08-25T02:49:28.124Z server1 hi

westos

2018-08-25T02:49:37.269Z server1 westos

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

## codec => rubydebug prints each event as a structured hash:

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin{} } output{ stdout { codec => rubydebug } }'

Settings: Default pipeline workers: 1

Pipeline main started

hello word!

{

"message" => "hello word!",

"@version" => "1",

"@timestamp" => "2018-08-25T03:08:24.792Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

Received shutdown signal, but pipeline is still waiting for in-flight events

to be processed. Sending another ^C will force quit Logstash, but this may cause

data loss. {:level=>:warn}

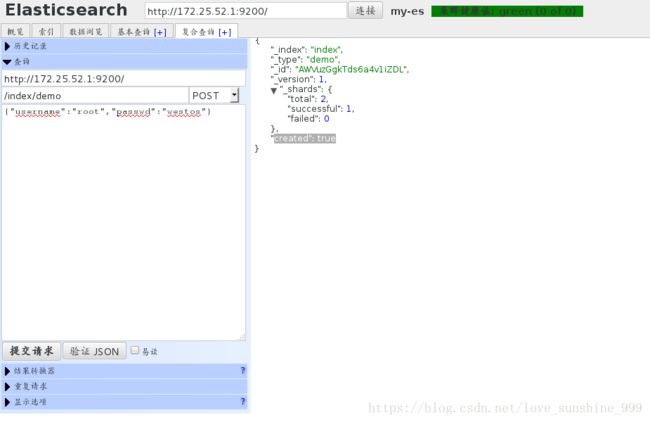

Pipeline main has been shutdown

2. Output to Elasticsearch:

## The elasticsearch output sends events to Elasticsearch: hosts sets the target host(s), index sets the index name

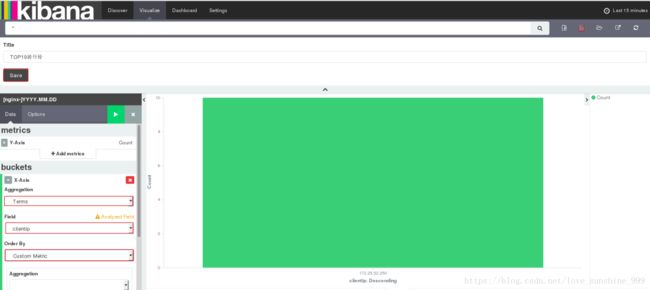

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin{} } output{ elasticsearch {hosts => ["172.25.52.1"] index => "logstash-%{+YYYY.MM.dd}" } }'

Settings: Default pipeline workers: 1

Pipeline main started

hello word

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

Pipeline main has been shutdown

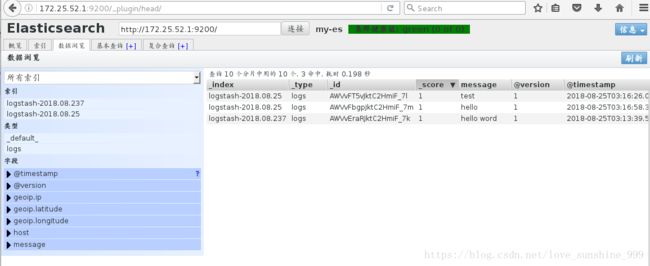
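The index setting is a date pattern in Joda-Time syntax, and Logstash applies it to each event's `@timestamp`, which it keeps in UTC. Letter case matters. `dd` is day of month, while `DD` is day of year, so `logstash-%{+YYY.MM.DD}` on 2018-08-25 yields `logstash-2018.08.237`. The conventional form is `%{+YYYY.MM.dd}`. Because the timestamp is UTC, events logged before 08:00 local time in a +0800 timezone land in the previous day's index. The sketch below computes the expected daily index name, which helps when looking for the index in head or Kibana. `daily_index` is a hypothetical helper.

```shell
# Print the daily index name that PREFIX-%{+YYYY.MM.dd} produces.
# The default date is today's date in UTC, matching Logstash's @timestamp.
# Usage: daily_index PREFIX [YYYY-MM-DD]
daily_index() {
    prefix=$1
    day=${2:-$(date -u +%Y-%m-%d)}
    printf '%s-%s\n' "$prefix" "$(printf '%s' "$day" | tr '-' '.')"
}
```

For example, `curl -s 'http://172.25.52.1:9200/_cat/indices?v' | grep "$(daily_index logstash)"` checks whether today's index exists.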

## Send events to Elasticsearch and also print them to the terminal in rubydebug format

[root@server1 bin]# /opt/logstash/bin/logstash -e 'input { stdin{} } output{ elasticsearch {hosts => ["172.25.52.1"] index => "logstash-%{+YYYY.MM.dd}"} stdout { codec => rubydebug } }'

Settings: Default pipeline workers: 1

Pipeline main started

test

{

"message" => "test",

"@version" => "1",

"@timestamp" => "2018-08-25T03:16:26.007Z",

"host" => "server1"

}

hello

{

"message" => "hello",

"@version" => "1",

"@timestamp" => "2018-08-25T03:16:58.357Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

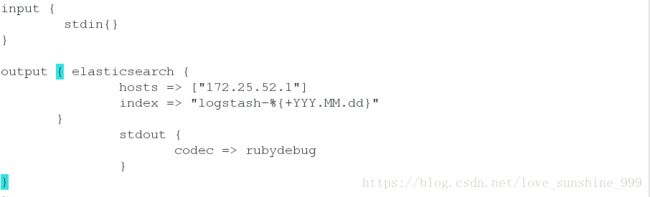

Pipeline main has been shutdown

3. Put the pipeline in a configuration file and send the output to Elasticsearch

[root@server1 bin]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# ls

[root@server1 conf.d]# vim es.conf

######################

input {

stdin{}

}

output { elasticsearch {

hosts => ["172.25.52.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

## -f specifies the configuration file

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

westos

{

"message" => "westos",

"@version" => "1",

"@timestamp" => "2018-08-25T03:24:26.479Z",

"host" => "server1"

}

linux

{

"message" => "linux",

"@version" => "1",

"@timestamp" => "2018-08-25T03:24:29.601Z",

"host" => "server1"

}

redhat

{

"message" => "redhat",

"@version" => "1",

"@timestamp" => "2018-08-25T03:24:32.021Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

Pipeline main has been shutdown

[root@server1 conf.d]# vim es.conf

######################

input {

stdin{}

}

output { elasticsearch {

hosts => ["172.25.52.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

file{

path => "/tmp/testfile"

codec => line { format => "custom format: %{message}"}

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

my love

{

"message" => "my love",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:12.426Z",

"host" => "server1"

}

wow

{

"message" => "wow",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:21.383Z",

"host" => "server1"

}

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

Pipeline main has been shutdown

Test:

[root@server1 conf.d]# cd /tmp

[root@server1 tmp]# ls

hsperfdata_elasticsearch hsperfdata_root jna--1985354563 testfile yum.log

[root@server1 tmp]# cat testfile

custom format: my love

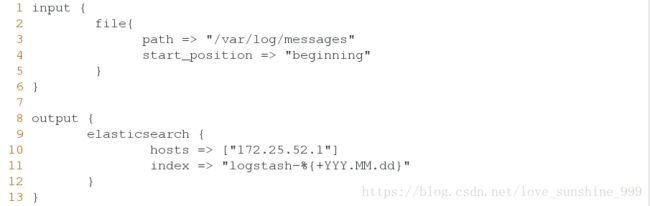

custom format: wow

## file input plugin: read events from a log file

[root@server1 log]# pwd

/var/log

[root@server1 log]# ll messages

-rw------- 1 root root 373 Aug 25 09:47 messages

[root@server1 log]# ll -d /var/log

drwxr-xr-x. 6 root root 4096 Aug 25 10:43 /var/log

[root@server1 log]# cd /etc/logstash/

[root@server1 logstash]# ls

conf.d

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# cp es.conf message.conf

[root@server1 conf.d]# vim message.conf

######################

input {

file{

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

## Open another shell:

[root@server1 ~]# logger test

[root@server1 ~]# logger hello

[root@server1 ~]# logger haha

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# cat /var/log/messages

Aug 25 09:47:01 server1 rsyslogd: [origin software="rsyslogd" swVersion="5.8.10" x-pid="866" x-info="http://www.rsyslog.com"] rsyslogd was HUPed

Aug 25 09:47:03 server1 rhsmd: In order for Subscription Manager to provide your system with updates, your system must be registered with the Customer Portal. Please enter your Red Hat login to ensure your system is up-to-date.

Aug 25 11:57:33 server1 root: test

Aug 25 11:57:38 server1 root: hello

Aug 25 11:57:47 server1 root: haha

Aug 25 12:01:47 server1 root: westos

Aug 25 12:01:49 server1 root: westos

Aug 25 12:01:49 server1 root: westos

Aug 25 12:01:50 server1 root: westos

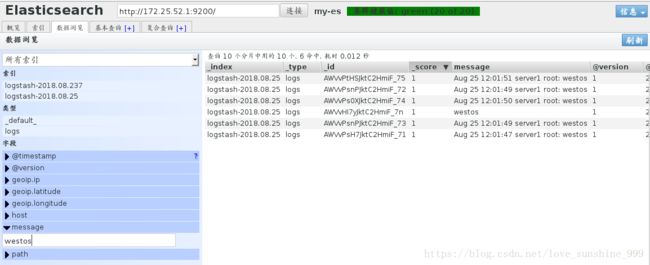

Aug 25 12:01:51 server1 root: westos

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

## Also print events to the terminal so they are easy to watch

[root@server1 conf.d]# vim message.conf

######################

input {

file{

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

## Note: leave this running; do not exit

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

[root@server1 ~]# logger linu

[root@server1 ~]# logger linu

[root@server1 ~]# logger linu

In shell 1:

[root@server1 ~]# l.

. .bash_logout .cshrc .ssh

.. .bash_profile .oracle_jre_usage .tcshrc

.bash_history .bashrc .sincedb_452905a167cf4509fd08acb964fdb20c .viminfo

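## Fields: inode of /var/log/messages (1045338, the same as ls -i below), major and minor device numbers of the filesystem (0 64768), and the byte offset read so far (769)

## Logstash identifies the file by its inode and uses the offset to know where to resume reading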

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

1045338 0 64768 769
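The sincedb line above has four space-separated fields in Logstash 2.x: the inode, the major and minor device numbers, and the byte offset read so far. The sketch below inspects those fields. It assumes that four-field layout (newer Logstash releases append extra fields, which it ignores). `sincedb_show` and `sincedb_unread` are hypothetical helper names.

```shell
# Hypothetical helpers for a Logstash 2.x file-input sincedb file.
# Each line: <inode> <major device> <minor device> <byte offset read so far>

# Print every sincedb entry with its fields labelled.
# Usage: sincedb_show SINCEDB_FILE
sincedb_show() {
    awk 'NF >= 4 { printf "inode=%s major=%s minor=%s offset=%s\n", $1, $2, $3, $4 }' "$1"
}

# Print how many bytes of LOG_FILE have not been read yet, by matching the
# log file's inode against the sincedb entries (whole size if no entry).
# Usage: sincedb_unread SINCEDB_FILE LOG_FILE
sincedb_unread() {
    inode=$(ls -i "$2" | awk '{ print $1 }')
    size=$(wc -c < "$2")
    offset=$(awk -v ino="$inode" '$1 == ino { print $4; exit }' "$1")
    echo $(( size - ${offset:-0} ))
}
```

For example, `sincedb_unread ~/.sincedb_452905a167cf4509fd08acb964fdb20c /var/log/messages` prints 0 once Logstash has caught up with the file.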

[root@server1 ~]# ls -i /var/log/messages

1045338 /var/log/messages

In shell 2:

[root@server1 ~]# logger love

[root@server1 ~]# logger love

[root@server1 ~]# logger love

In shell 1:

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "Aug 25 13:00:41 server1 root: love",

"@version" => "1",

"@timestamp" => "2018-08-25T05:01:13.240Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 13:00:42 server1 root: love",

"@version" => "1",

"@timestamp" => "2018-08-25T05:01:15.083Z",

"path" => "/var/log/messages",

"host" => "server1"

}

{

"message" => "Aug 25 13:00:43 server1 root: love",

"@version" => "1",

"@timestamp" => "2018-08-25T05:01:15.083Z",

"path" => "/var/log/messages",

"host" => "server1"

}

In shell 2:

[root@server1 ~]# l.

. .bash_logout .cshrc .ssh

.. .bash_profile .oracle_jre_usage .tcshrc

.bash_history .bashrc .sincedb_452905a167cf4509fd08acb964fdb20c .viminfo

## Once the file changes, the fourth number (the read offset) changes with it: 769 became 874

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

1045338 0 64768 874

[root@server1 conf.d]# pwd

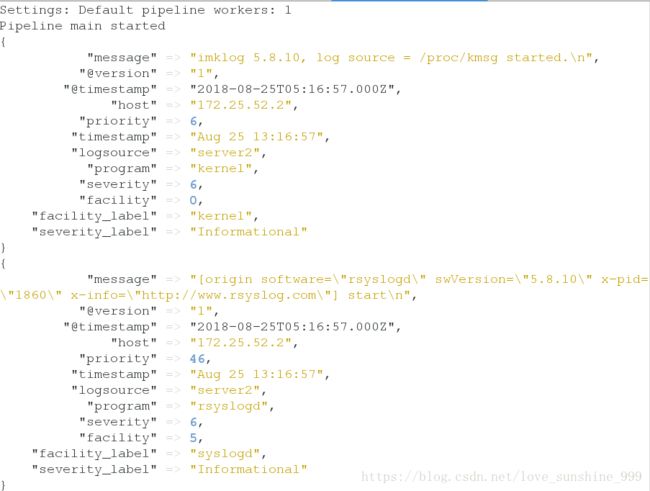

/etc/logstash/conf.d

[root@server1 conf.d]# vim message.conf

input {

syslog {

port => 514

}

}

output {

# elasticsearch {

# hosts => ["172.25.52.1"]

# index => "logstash-%{+YYYY.MM.dd}"

# }

stdout {

codec => rubydebug

}

}

## Note: leave this running; do not exit

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

## In another shell:

[root@server1 ~]# netstat -antulp | grep :514

tcp 0 0 :::514 :::* LISTEN 2674/java

udp 0 0 :::514 :::* 2674/java

[root@server2 elasticsearch]# vim /etc/rsyslog.conf

############################

# forward all messages to the Logstash syslog input on server1 (@@ = TCP, a single @ = UDP)

*.* @@172.25.52.1:514

[root@server2 elasticsearch]# /etc/init.d/rsyslog restart

Shutting down system logger: [ OK ]

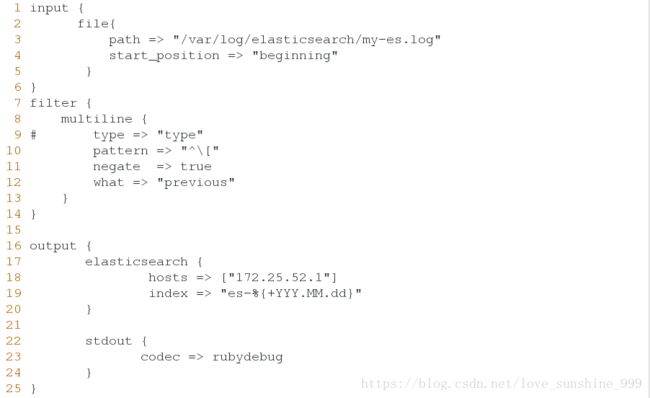

Starting system logger: [ OK ]

Merging multi-line log entries
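The `multiline` filter configured below uses `pattern => "^\["`, `negate => true` and `what => "previous"`. Every line that does not start with `[` is attached to the event before it, so a Java stack trace in my-es.log stays with the log line that produced it. The awk emulation below illustrates that rule only. It is not how the plugin is implemented, and `merge_multiline` is a hypothetical name.

```shell
# Rough emulation of:
#   multiline { pattern => "^\[" negate => true what => "previous" }
# A line starting with "[" starts a new event; any other line is appended
# to the event before it. Joined lines are separated by a literal "\n"
# here so that each merged event prints on one line.
# Usage: merge_multiline < /var/log/elasticsearch/my-es.log
merge_multiline() {
    awk '
        /^\[/ { if (have) print buf; buf = $0; have = 1; next }
              { buf = have ? buf "\\n" $0 : $0; have = 1 }
        END   { if (have) print buf }
    '
}
```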

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# vim message.conf

######################

input {

file{

path => "/var/log/elasticsearch/my-es.log"

start_position => "beginning" # read from the start of the file (only applies to files with no sincedb entry yet)

}

}

filter { # filter stage

multiline {

# type => "type"

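pattern => "^\[" # a line starting with "[" begins a new event

negate => true # lines that do NOT match the pattern...

what => "previous" # ...are appended to the previous event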

}

}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "es-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

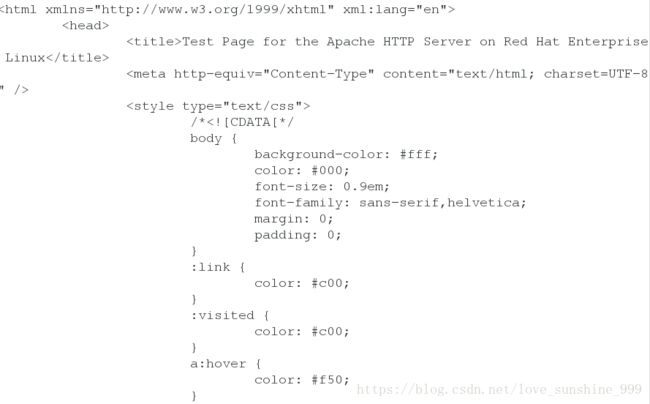

Pipeline main started

Collecting httpd logs

[root@server1 ~]# yum install -y httpd

[root@server1 ~]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.52.1 for ServerName

[ OK ]

[root@server1 ~]# curl 172.25.52.1

[root@server1 ~]# cd /var/www/html

[root@server1 html]# vim index.html

###################

www.westos.org

[root@server1 html]# cd /var/log

[root@server1 log]# cd httpd/

[root@server1 httpd]# ll

total 8

-rw-r--r-- 1 root root 180 Aug 25 14:26 access_log

-rw-r--r-- 1 root root 453 Aug 25 14:26 error_log

[root@server1 httpd]# pwd

/var/log/httpd

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

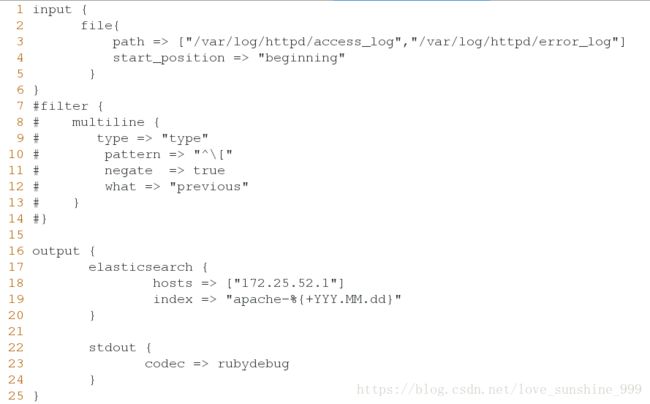

[root@server1 conf.d]# vim message.conf

######################

input {

file{

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

#filter {

# multiline {

# type => "type"

# pattern => "^\["

# negate => true

# what => "previous"

# }

#}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

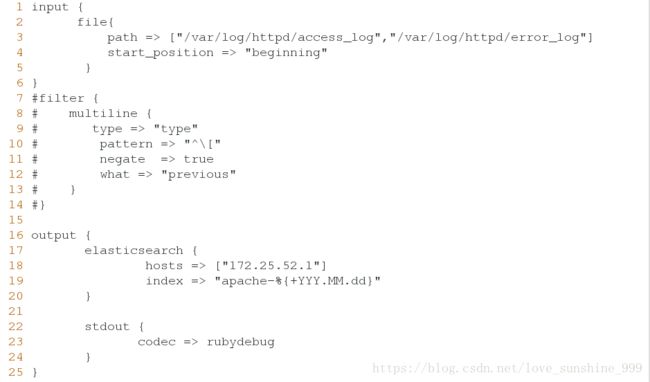

Pipeline main started

## Change the output format

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# vim test.conf

#####################

input {

file{

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

#filter {

# multiline {

# type => "type"

# pattern => "^\["

# negate => true

# what => "previous"

# }

#}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

Settings: Default pipeline workers: 1

Pipeline main started

55.3.244.1 GET /index.html 15824 0.043

[root@server1 conf.d]# cat /var/log/httpd/access_log

172.25.52.1 - - [25/Aug/2018:14:26:42 +0800] "GET / HTTP/1.1" 403 3985 "-" "curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.14.3.0 zlib/1.2.3 libidn/1.18 libssh2/1.4.2"

172.25.52.250 - - [25/Aug/2018:14:29:17 +0800] "GET / HTTP/1.1" 200 15 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0"

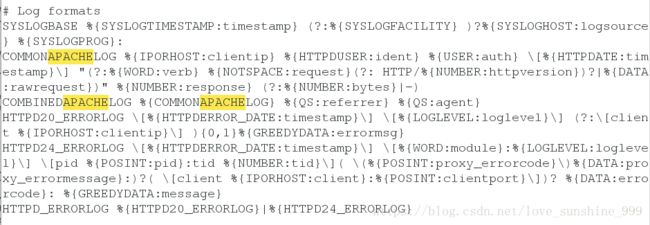

172.25.52.250 - - [25/Aug/2018:14:29:17 +0800] "GET /favicon.ico HTTP/1.1" 404 287 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0"

[root@server1 patterns]# pwd

/opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns

[root@server1 patterns]# ls

aws exim haproxy linux-syslog mongodb rails

bacula firewalls java mcollective nagios redis

bro grok-patterns junos mcollective-patterns postgresql ruby

## View the predefined log format patterns

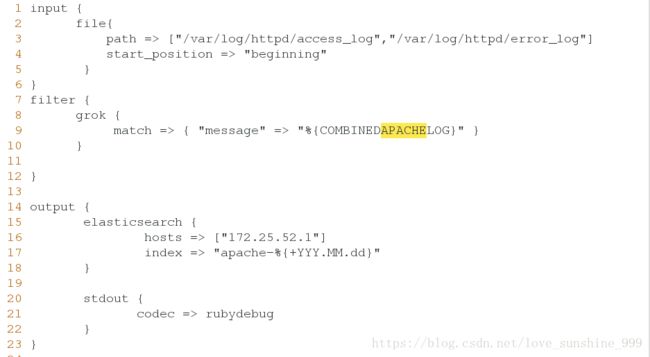

[root@server1 patterns]# vim grok-patterns

Splitting log lines into fields:
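`COMBINEDAPACHELOG` is defined in the grok-patterns file opened above. It splits an access_log line into named fields such as `clientip`, `timestamp`, `verb`, `request`, `response` and `bytes`. The sed sketch below gives a rough idea of that split. It is a simplified approximation, not the real grok pattern: it skips ident/auth, the HTTP version, referrer and agent. `parse_combined` is a hypothetical helper that prints the six fields separated by `|`.

```shell
# Simplified approximation of %{COMBINEDAPACHELOG}: print
# clientip|timestamp|verb|request|response|bytes for each matching line;
# lines that do not fit the format produce no output.
# Usage: parse_combined < /var/log/httpd/access_log
parse_combined() {
    sed -nE 's/^([^ ]+) [^ ]+ [^ ]+ \[([^]]+)\] "([A-Z]+) ([^ ]+)[^"]*" ([0-9]{3}) ([0-9]+|-)( .*)?$/\1|\2|\3|\4|\5|\6/p'
}
```

A line that does not fit the format prints nothing here. grok behaves differently: it keeps such events and adds the `_grokparsefailure` tag. That tag is what the error_log lines read by the same input will receive.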

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# vim message.conf

######################

input {

file{

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" } # parse with the predefined Apache combined log pattern

}

}

output {

elasticsearch {

hosts => ["172.25.52.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 patterns]# cd

[root@server1 ~]# l.

. .bashrc .ssh

.. .cshrc .tcshrc

.bash_history .oracle_jre_usage .viminfo

.bash_logout .sincedb_452905a167cf4509fd08acb964fdb20c

.bash_profile .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

[root@server1 ~]# cat .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

1045354 0 64768 484

1045278 0 64768 558

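## Delete this sincedb file (one entry per httpd log) so that Logstash re-reads both logs from the beginning with the new grok filter

[root@server1 ~]# rm -f .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

[root@server1 elk]# scp kibana-4.5.1-1.x86_64.rpm server3: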

root@server3's password:

kibana-4.5.1-1.x86_64.rpm 100% 32MB 32.3MB/s 00:01

## Install Kibana

[root@server3 ~]# ls

anaconda-ks.cfg install.log jdk-8u121-linux-x64.rpm

elasticsearch-2.3.3.rpm install.log.syslog kibana-4.5.1-1.x86_64.rpm

[root@server3 ~]# rpm -ivh kibana-4.5.1-1.x86_64.rpm

Preparing... ########################################### [100%]

1:kibana ########################################### [100%]

[root@server3 ~]# cd /opt

[root@server3 opt]# ls

kibana

[root@server3 opt]# cd kibana/

[root@server3 kibana]# ls

bin installedPlugins node optimize README.txt webpackShims

config LICENSE.txt node_modules package.json src

[root@server3 kibana]# cd config/

[root@server3 config]# ls

kibana.yml

[root@server3 config]# pwd

/opt/kibana/config

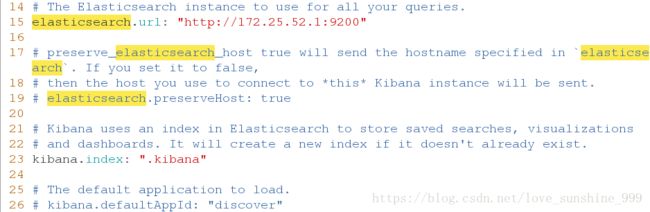

[root@server3 config]# vim kibana.yml

######################

elasticsearch.url: "http://172.25.52.1:9200"

kibana.index: ".kibana"

[root@server3 config]# /etc/init.d/kibana start

kibana started

[root@server3 config]# netstat -antpl

## Kibana listens on port 5601 by default

## Install Redis on server2

[root@server1 elk]# scp redis-3.0.6.tar.gz server2:

root@server2's password:

redis-3.0.6.tar.gz 100% 1340KB 1.3MB/s 00:00

[root@server2 ~]# ls

anaconda-ks.cfg install.log jdk-8u121-linux-x64.rpm

elasticsearch-2.3.3.rpm install.log.syslog redis-3.0.6.tar.gz

[root@server2 ~]# tar zxf redis-3.0.6.tar.gz

[root@server2 ~]# cd redis-3.0.6

[root@server2 redis-3.0.6]# ls

00-RELEASENOTES COPYING Makefile redis.conf runtest-sentinel tests

BUGS deps MANIFESTO runtest sentinel.conf utils

CONTRIBUTING INSTALL README runtest-cluster src

[root@server2 redis-3.0.6]# yum install -y gcc

[root@server2 redis-3.0.6]# make && make install

[root@server2 redis-3.0.6]# ls

00-RELEASENOTES COPYING Makefile redis.conf runtest-sentinel tests

BUGS deps MANIFESTO runtest sentinel.conf utils

CONTRIBUTING INSTALL README runtest-cluster src

[root@server2 redis-3.0.6]# cd utils/

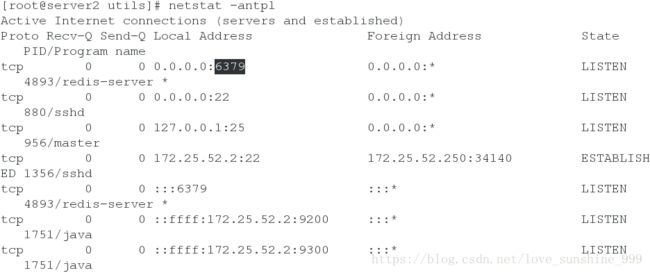

[root@server2 utils]# ./install_server.sh

...............

Installation successful!

[root@server2 utils]# netstat -antp

## Stop httpd so that nginx can use port 80

[root@server1 elk]# /etc/init.d/httpd stop

Stopping httpd: [ OK ]

[root@server1 elk]# ls

bigdesk-master.zip jemalloc-devel-3.6.0-1.el6.x86_64.rpm

elasticsearch-2.3.3.rpm kibana-4.5.1-1.x86_64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

elk日志分析平台.pdf nginx-1.8.0-1.el6.ngx.x86_64.rpm

jemalloc-3.6.0-1.el6.x86_64.rpm redis-3.0.6.tar.gz

[root@server1 elk]# rpm -ivh nginx-1.8.0-1.el6.ngx.x86_64.rpm

warning: nginx-1.8.0-1.el6.ngx.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID 7bd9bf62: NOKEY

Preparing... ########################################### [100%]

1:nginx ########################################### [100%]

----------------------------------------------------------------------

Thanks for using nginx!

Please find the official documentation for nginx here:

* http://nginx.org/en/docs/

Commercial subscriptions for nginx are available on:

* http://nginx.com/products/

----------------------------------------------------------------------

[root@server1 elk]# cd /etc/nginx/

[root@server1 nginx]# ls

conf.d koi-utf mime.types scgi_params win-utf

fastcgi_params koi-win nginx.conf uwsgi_params

[root@server1 nginx]# ll /var/log/nginx/access.log

-rw-r----- 1 nginx adm 0 Aug 25 16:38 /var/log/nginx/access.log

[root@server1 nginx]# ll -d /var/log/nginx/

drwxr-xr-x 2 root root 4096 Aug 25 16:38 /var/log/nginx/

[root@server1 nginx]# chmod 644 /var/log/nginx/access.log

[root@server1 nginx]# cd /etc/logstash/conf.d

[root@server1 conf.d]# cp message.conf nginx.conf

[root@server1 conf.d]# vim nginx.conf

######################

input {

file{

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG} %{QS:x_forwarded_for}" }

}

}

output {

redis {

host => ["172.25.52.2"]

port => 6379

data_type => "list"

key => "logstash:redis"

}

stdout {

codec => rubydebug

}

}

## Note: leave this running; do not exit

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/nginx.conf

Settings: Default pipeline workers: 1

Pipeline main started

## Load test with ApacheBench from the physical host

[root@foundation52 Desktop]# ab -c 1 -n 10 http://172.25.52.1/index.html

This is ApacheBench, Version 2.3 <$Revision: 1430300 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 172.25.52.1 (be patient).....done

Server Software: nginx/1.8.0

Server Hostname: 172.25.52.1

Server Port: 80

Document Path: /index.html

Document Length: 612 bytes

Concurrency Level: 1

Time taken for tests: 0.065 seconds

Complete requests: 10

Failed requests: 0

Write errors: 0

Total transferred: 8440 bytes

HTML transferred: 6120 bytes

Requests per second: 153.91 [#/sec] (mean)

Time per request: 6.497 [ms] (mean)

Time per request: 6.497 [ms] (mean, across all concurrent requests)

Transfer rate: 126.86 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 0

Processing: 0 6 19.9 0 63

Waiting: 0 2 6.7 0 21

Total: 0 6 19.9 0 63

Percentage of the requests served within a certain time (ms)

50% 0

66% 0

75% 0

80% 0

90% 63

95% 63

98% 63

99% 63

100% 63 (longest request)

## Install Logstash on server2 so it can move events from Redis into Elasticsearch

[root@server1 ~]# cd elk/

[root@server1 elk]# scp logstash-2.3.3-1.noarch.rpm server2:

root@server2's password:

logstash-2.3.3-1.noarch.rpm 100% 76MB 6.3MB/s 00:12

[root@server1 elk]# cd /etc/logstash/

[root@server1 logstash]# ls

conf.d

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# scp es.conf server2:/etc/logstash/conf.d

root@server2's password:

es.conf 100% 401 0.4KB/s 00:00

[root@server2 utils]# cd

[root@server2 ~]# ls

anaconda-ks.cfg install.log.syslog redis-3.0.6

elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm redis-3.0.6.tar.gz

install.log logstash-2.3.3-1.noarch.rpm

[root@server2 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

Preparing... ########################################### [100%]

1:logstash ########################################### [100%]

[root@server2 ~]# cd /etc/logstash/conf.d/

[root@server2 conf.d]# ls

[root@server2 conf.d]# pwd

/etc/logstash/conf.d

[root@server2 conf.d]# ls

es.conf

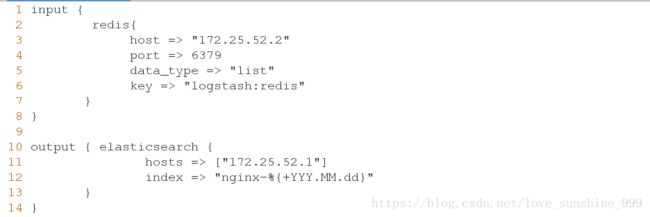

[root@server2 conf.d]# vim es.conf

######################

input {

redis{

host => "172.25.52.2"

port => 6379

data_type => "list"

key => "logstash:redis"

}

}

output { elasticsearch {

hosts => ["172.25.52.1"]

index => "nginx-%{+YYYY.MM.dd}"

}

}

## Note: both pipelines must be running: es.conf on server2 and nginx.conf on server1

[root@server2 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/nginx.conf

Settings: Default pipeline workers: 1

Pipeline main started

## Load test

[root@foundation52 Desktop]# ab -c 1 -n 10 http://172.25.52.1/index.html

[root@server3 ~]# curl 172.25.52.1
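In this layout, nginx.conf on server1 pushes parsed events onto the Redis list `logstash:redis` on server2. es.conf on server2 then takes them off that list and indexes them, so Redis acts as a FIFO buffer between the two Logstash instances. While the load test runs, the length of that list shows whether the indexer keeps up. A value near 0 means events are consumed as fast as they arrive. A steadily growing value suggests the server2 pipeline is stopped or slow. The sketch below is a hypothetical wrapper around `redis-cli LLEN`, with defaults taken from the configs above.

```shell
# Print how many events are waiting in the Redis list between the shipper
# on server1 (redis output) and the indexer on server2 (redis input).
# redis-cli is installed by `make install` in the Redis build above.
# Usage: redis_backlog [HOST] [KEY]
redis_backlog() {
    host=${1:-172.25.52.2}
    key=${2:-logstash:redis}
    if ! command -v redis-cli >/dev/null 2>&1; then
        echo "redis-cli not found in PATH" >&2
        return 127
    fi
    redis-cli -h "$host" -p 6379 LLEN "$key"
}
```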