The Development of Autoencoder Networks: From Autoencoder to Beta-VAE

https://lilianweng.github.io/lil-log/2018/08/12/from-autoencoder-to-beta-vae.html

Autoencoders are a family of neural network models that aim to learn compressed latent variables of high-dimensional data. Starting from the basic autoencoder model, this post reviews several variations, including denoising, sparse, and contractive autoencoders, and then the Variational Autoencoder (VAE) and its modification, beta-VAE.

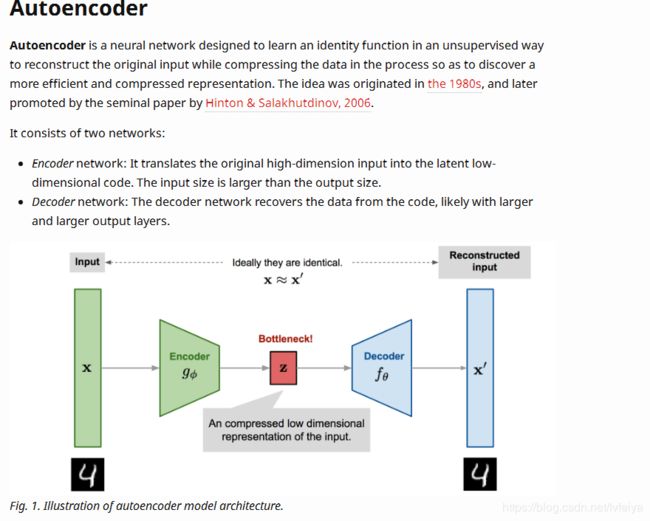

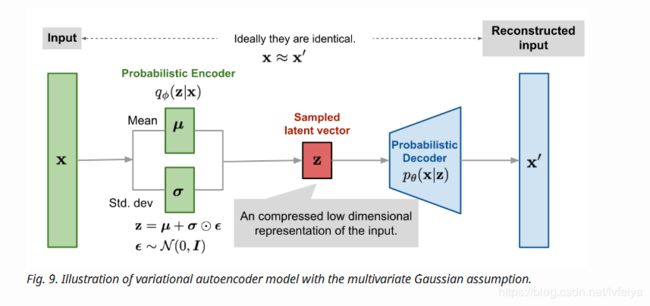

The autoencoder was invented to reconstruct high-dimensional data using a neural network model with a narrow bottleneck layer in the middle (oops, this is probably not true for the Variational Autoencoder, and we will investigate it in detail in later sections). A nice byproduct is dimension reduction: the bottleneck layer captures a compressed latent encoding. Such a low-dimensional representation can be used as an embedding vector in various applications (e.g. search), help with data compression, or reveal the underlying generative factors of the data.
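To make the bottleneck idea concrete, here is a minimal sketch, assuming a purely linear encoder and decoder trained with plain gradient descent in NumPy. The function name `train_linear_autoencoder`, the synthetic data, and the hyperparameters are illustrative assumptions and do not come from the original post; a practical autoencoder would typically use nonlinear layers and a deep learning framework.

```python
import numpy as np


def train_linear_autoencoder(x, latent_dim, steps=2000, lr=0.005, seed=0):
    """Fit x ~ (x @ w_enc) @ w_dec by gradient descent on squared error.

    Hypothetical helper for illustration: no biases, no nonlinearity.
    """
    rng = np.random.default_rng(seed)
    n, d = x.shape
    # Small random initial weights for the encoder and the decoder.
    w_enc = rng.normal(scale=0.1, size=(d, latent_dim))
    w_dec = rng.normal(scale=0.1, size=(latent_dim, d))
    losses = []
    for _ in range(steps):
        z = x @ w_enc          # bottleneck code, shape (n, latent_dim)
        x_hat = z @ w_dec      # reconstruction, shape (n, d)
        err = x_hat - x
        # Mean (over samples) of the squared reconstruction error.
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))
        grad_out = 2.0 * err / n
        grad_dec = z.T @ grad_out
        grad_enc = x.T @ (grad_out @ w_dec.T)
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec, losses


# Synthetic data (an assumption for the demo): 8-dimensional points
# generated from only 2 underlying factors plus a little noise.
rng = np.random.default_rng(42)
factors = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
x = factors @ mixing + 0.05 * rng.normal(size=(200, 8))

w_enc, w_dec, losses = train_linear_autoencoder(x, latent_dim=2)
codes = x @ w_enc              # compressed latent encoding
print(codes.shape)             # (200, 2)
print(losses[0], losses[-1])   # reconstruction error before and after training
```

The 2-column `codes` matrix plays the role of the low-dimensional embedding described above: each 8-dimensional input is summarized by two numbers from which the decoder tries to rebuild it.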

References

[1] Geoffrey E. Hinton, and Ruslan R. Salakhutdinov. “Reducing the dimensionality of data with neural networks.” Science 313.5786 (2006): 504-507.

[2] Pascal Vincent, et al. “Extracting and composing robust features with denoising autoencoders.” ICML, 2008.

[3] Pascal Vincent, et al. “Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion.”. Journal of machine learning research 11.Dec (2010): 3371-3408.

[4] Geoffrey E. Hinton, Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever, and Ruslan R. Salakhutdinov. “Improving neural networks by preventing co-adaptation of feature detectors.” arXiv preprint arXiv:1207.0580 (2012).

[5] Sparse Autoencoder by Andrew Ng.

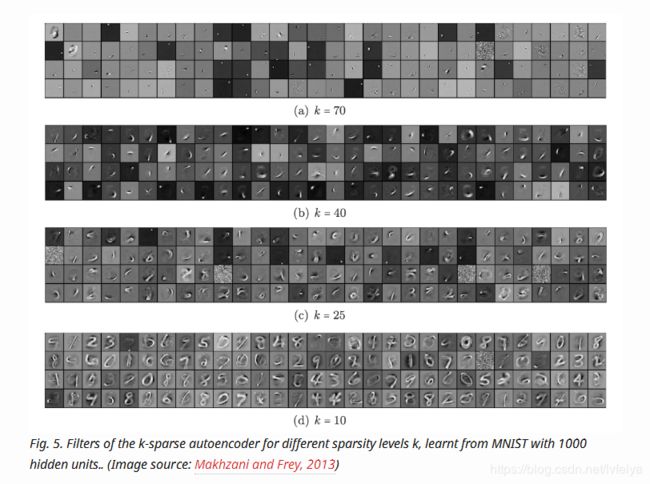

[6] Alireza Makhzani, Brendan Frey (2013). “k-sparse autoencoder”. ICLR 2014.

[7] Salah Rifai, et al. “Contractive auto-encoders: Explicit invariance during feature extraction.” ICML, 2011.

[8] Diederik P. Kingma, and Max Welling. “Auto-encoding variational bayes.” ICLR 2014.

[9] Tutorial - What is a variational autoencoder? on jaan.io

[10] Youtube tutorial: Variational Autoencoders by Arxiv Insights

[11] “A Beginner’s Guide to Variational Methods: Mean-Field Approximation” by Eric Jang.

[12] Carl Doersch. “Tutorial on variational autoencoders.” arXiv:1606.05908, 2016.

[13] Irina Higgins, et al. ”ββ-VAE: Learning basic visual concepts with a constrained variational framework.” ICLR 2017.

[14] Christopher P. Burgess, et al. “Understanding disentangling in beta-VAE.” NIPS 2017.

[15] Aaron van den Oord, et al. “Neural Discrete Representation Learning” NIPS 2017.

[16] Ali Razavi, et al. “Generating Diverse High-Fidelity Images with VQ-VAE-2”. arXiv preprint arXiv:1906.00446 (2019).

[17] Xi Chen, et al. “PixelSNAIL: An Improved Autoregressive Generative Model.” arXiv preprint arXiv:1712.09763 (2017).

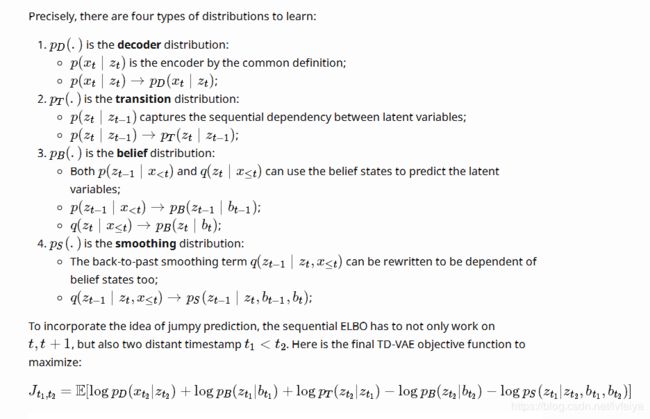

[18] Karol Gregor, et al. “Temporal Difference Variational Auto-Encoder.” ICLR 2019.

@article{weng2018VAE,
  title   = "From Autoencoder to Beta-VAE",
  author  = "Weng, Lilian",
  journal = "lilianweng.github.io/lil-log",
  year    = "2018",
  url     = "http://lilianweng.github.io/lil-log/2018/08/12/from-autoencoder-to-beta-vae.html"
}