MageEdu N36 Week 25 Assignment

I. Build a Docker image that provides working sshd and nginx services

1. Building an image from a container

- Docker version used in this experiment

~]# docker version

Client: Docker Engine - Community

Version: 19.03.1

API version: 1.40

Go version: go1.12.5

Git commit: 74b1e89

Built: Thu Jul 25 21:21:07 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.1

API version: 1.40 (minimum version 1.12)

Go version: go1.12.5

Git commit: 74b1e89

Built: Thu Jul 25 21:19:36 2019

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.6

GitCommit: 894b81a4b802e4eb2a91d1ce216b8817763c29fb

runc:

Version: 1.0.0-rc8

GitCommit: 425e105d5a03fabd737a126ad93d62a9eeede87f

docker-init:

Version: 0.18.0

GitCommit: fec3683

- Run the CentOS base image

docker run -it --name=centos-ssh centos /bin/bash

- Configure the yum repository inside the container and install openssh-server

yum install -y openssh-server

- Generate the container's host key files

Without these key files, sshd fails at startup with a "Could not load host key" error.

ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key

ssh-keygen -t ecdsa -f /etc/ssh/ssh_host_ecdsa_key

ssh-keygen -t ed25519 -f /etc/ssh/ssh_host_ed25519_key

- Change the container's root password

passwd

- Start sshd as a test

[root@0e512b4e0caf ~]# /sbin/sshd -D

WARNING: 'UsePAM no' is not supported in Red Hat Enterprise Linux and may cause several problems.

Could not load host key: /etc/ssh/ssh_host_ecdsa_key

Could not load host key: /etc/ssh/ssh_host_ed25519_key

- Create an image that includes the ssh service

docker commit 5560baa0ce06 ssh-centos

docker image ls

- Run the newly created image

docker run -p 10022:22 -d ssh-centos /usr/sbin/sshd -D

- Connect to the container from the host over ssh

ssh 192.168.30.108 -p 10022

2. Building an image with a Dockerfile

- Prepare a working directory

mkdir -pv /root/dockerfile/ssh-centos/

cd /root/dockerfile/ssh-centos

- Prepare the container's host key

Generate it on the host, then add it to the image when building from the Dockerfile.

ssh-keygen -t rsa -f ssh_host_rsa_key

- Create the Dockerfile

The MAINTAINER instruction is deprecated; a LABEL is used instead.

vim Dockerfile

------------------------

# this image is based on centos image

FROM centos

LABEL version="1.0" \

maintainer="test" \

email="[email protected]"

RUN yum -y install openssh-server

RUN sed -i 's/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config

COPY ssh_host_rsa_key /etc/ssh/ssh_host_rsa_key

COPY ssh_host_rsa_key.pub /etc/ssh/ssh_host_rsa_key.pub

RUN echo test |passwd --stdin root

EXPOSE 22

CMD ["/usr/sbin/sshd","-D"]

------------------------

- Build the image

ssh-centos]# docker build -t ssh:v1 .

Sending build context to Docker daemon 5.632kB

Step 1/8 : FROM centos

---> 67fa590cfc1c

Step 2/8 : LABEL version="1.0" maintainer="test" email="[email protected]"

---> Using cache

---> 9b9511e64010

Step 3/8 : RUN yum -y install openssh-server

---> Using cache

---> 84ed0cd44d2d

Step 4/8 : COPY ssh_host_rsa_key /etc/ssh/ssh_host_rsa_key

---> Using cache

---> 7500ae81bb40

Step 5/8 : COPY ssh_host_rsa_key.pub /etc/ssh/ssh_host_rsa_key.pub

---> Using cache

---> 86e10b971a4a

Step 6/8 : RUN echo test |passwd --stdin root

---> Running in 78d41574dbcc

Changing password for user root.

passwd: all authentication tokens updated successfully.

Removing intermediate container 78d41574dbcc

---> 9551ab37840d

Step 7/8 : EXPOSE 22

---> Running in da1bb8aec380

Removing intermediate container da1bb8aec380

---> 4f47d3a9d677

Step 8/8 : CMD ["/usr/sbin/ssh -D"]

---> Running in 82a268ee83b7

Removing intermediate container 82a268ee83b7

---> 0004c92b6bb3

Successfully built 0004c92b6bb3

Successfully tagged ssh:v1

- List the generated images

ssh-centos]# docker image ls -a

REPOSITORY TAG IMAGE ID CREATED SIZE

<none> <none> 4f47d3a9d677 8 seconds ago 273MB

<none> <none> 9551ab37840d 8 seconds ago 273MB

ssh v1 0004c92b6bb3 8 seconds ago 273MB

<none> <none> 86e10b971a4a About a minute ago 273MB

<none> <none> 7500ae81bb40 About a minute ago 273MB

<none> <none> 84ed0cd44d2d About a minute ago 273MB

<none> <none> 9b9511e64010 2 minutes ago 202MB

ssh-centos latest 01375639f7d6 4 hours ago 273MB

busybox latest 19485c79a9bb 3 weeks ago 1.22MB

archlinux/base latest 754519a037bc 4 weeks ago 459MB

centos latest 67fa590cfc1c 5 weeks ago 202MB

nginx latest 5a3221f0137b 6 weeks ago 126MB

Inspect the labels that were set

ssh-centos]# docker image inspect ssh:v1

"Labels": {

"email": "[email protected]",

"maintainer": "test",

"org.label-schema.build-date": "20190801",

"org.label-schema.license": "GPLv2",

"org.label-schema.name": "CentOS Base Image",

"org.label-schema.schema-version": "1.0",

"org.label-schema.vendor": "CentOS",

"version": "1.0"

}

- Run the image

docker container run -d -p 20022:22 ssh:v1

- Test

ssh-centos]# ssh 127.0.0.1 -p 20022

The authenticity of host '[127.0.0.1]:20022 ([127.0.0.1]:20022)' can't be established.

RSA key fingerprint is SHA256:k2JXlATiFVtwQpyjnWIPDHTRg3l0xMHjDuFxRK8ahfM.

RSA key fingerprint is MD5:03:dd:13:b9:2e:5f:95:23:ad:8a:b5:40:73:f4:7b:ef.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[127.0.0.1]:20022' (RSA) to the list of known hosts.

root@127.0.0.1's password:

3. Building an image that provides the nginx service

- Prepare the directory for the nginx image build files

mkdir -pv /root/dockerfile/nginx/

cd /root/dockerfile/nginx/

- Write the Dockerfile for the image

vim Dockerfile

-------------------------------

FROM ssh:v1

LABEL version="v0.2"\

email="[email protected]"

RUN yum -y install epel-release

RUN yum -y install nginx

COPY nginx_and_ssh.sh /usr/local/sbin/nginx_and_ssh.sh

RUN chmod +x /usr/local/sbin/nginx_and_ssh.sh

CMD ["/usr/local/sbin/nginx_and_ssh.sh"]

EXPOSE 22 80 443

-------------------------------

The corresponding script file

#!/bin/bash

/usr/sbin/sshd &

/usr/sbin/nginx -g "daemon off;"

- Build the image

docker build -t nginx:v2 .

- Run the image

docker run -d -P nginx:v2

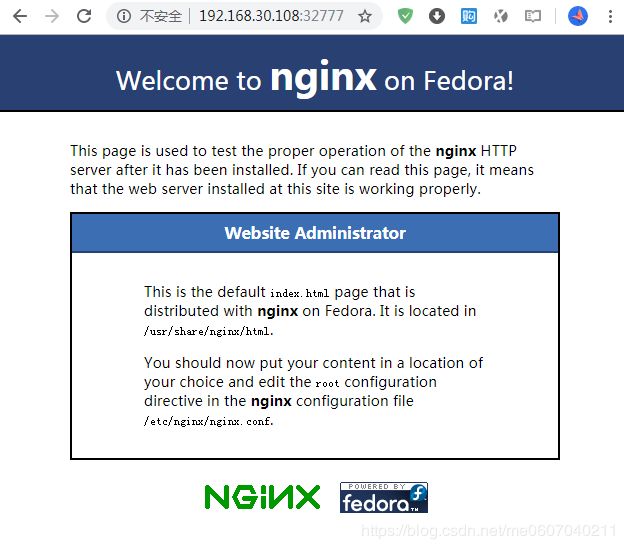

- Test

Check the mapped ports and access the services.

docker container ls

nginx]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

23d0de4d0037 nginx:v2 "/usr/local/sbin/ngi…" 10 minutes ago Up 10 minutes 0.0.0.0:32778->22/tcp, 0.0.0.0:32777->80/tcp, 0.0.0.0:32776->443/tcp kind_elion

fcfecf58ff7c ssh:v1 "/usr/sbin/sshd -D" 49 minutes ago Up 27 minutes 0.0.0.0:20022->22/tcp gallant_keller

~]# ssh 127.0.0.1 -p 32778

root@127.0.0.1's password:

Last login: Tue Oct 1 01:16:47 2019 from 172.17.0.1

[root@23d0de4d0037 ~]#
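Because `-P` assigns random host ports, the port to pass to `ssh -p` has to be read off the PORTS column each time. The following is a minimal sketch of extracting it with standard text tools; the function name `host_port` is hypothetical, and the sample string is copied from the listing above. Running `docker port <container> 22` is a more direct way to get the same information.

```shell
#!/bin/sh
# Sketch: find the host port mapped to a given container port by parsing
# the PORTS column of `docker container ls`. host_port is a hypothetical helper.

host_port() {
    # $1 = PORTS column text, $2 = container port (TCP)
    # Split the comma-separated mappings into lines, then keep only the
    # host port of the mapping whose container side is exactly $2/tcp.
    printf '%s\n' "$1" | tr ',' '\n' |
        sed -n "s/^ *[^ ]*:\([0-9]*\)->$2\/tcp\$/\1/p"
}

# PORTS value copied from the nginx:v2 container in the listing above.
PORTS='0.0.0.0:32778->22/tcp, 0.0.0.0:32777->80/tcp, 0.0.0.0:32776->443/tcp'

echo "ssh port:  $(host_port "$PORTS" 22)"
echo "http port: $(host_port "$PORTS" 80)"
```

The result can be fed straight into the test commands, for example `ssh 127.0.0.1 -p "$(host_port "$PORTS" 22)"`.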

II. Setting up a Kubernetes cluster

1. Preparing each node

- Time synchronization

systemctl set-ntp true

ntpdate time1.aliyun.com

- Hostname resolution

vim /etc/hosts

------------------------

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.30.102 master1.ilinux.io master1

192.168.30.103 master2.ilinux.io master2

192.168.30.100 node1.ilinux.io node1

192.168.30.104 node2.ilinux.io node2

192.168.30.108 node3.ilinux.io node3

------------------------
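Since the same entries have to be present on every node, it can help to generate them from a short list instead of typing them by hand. This is only a sketch: the function name `hosts_lines` is hypothetical, and the domain and addresses are taken from the file above.

```shell
#!/bin/sh
# Sketch: print /etc/hosts lines ("ip fqdn shortname") from "ip shortname" pairs.
# DOMAIN and the node list mirror the entries shown above.
DOMAIN="ilinux.io"

hosts_lines() {
    # Reads "ip shortname" pairs from stdin, one pair per line.
    while read -r ip name; do
        [ -n "$ip" ] || continue    # skip blank lines
        printf '%s %s.%s %s\n' "$ip" "$name" "$DOMAIN" "$name"
    done
}

# Only prints the lines; nothing is written to /etc/hosts here.
hosts_lines <<'EOF'
192.168.30.102 master1
192.168.30.103 master2
192.168.30.100 node1
192.168.30.104 node2
192.168.30.108 node3
EOF
```

After reviewing the output, it can be appended on each node by redirecting it with `>> /etc/hosts`.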

- Make sure iptables and firewalld are not in use, and check the SELinux mode

iptables -vnL

systemctl status firewalld.service

getenforce

2. Installing packages

- Configure the docker-ce yum repository

Download the repository file directly from Aliyun (official mirror site).

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

- Configure the Kubernetes yum repository

vim /etc/yum.repos.d/kubernetes.repo

----------------------------------------------------------------------------------

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

enabled=1

----------------------------------------------------------------------------------

- Install

One dependency error has to be resolved first; downloading and installing the matching rpm package fixes it.

Error: Package: containerd.io-1.2.6-3.3.el7.x86_64 (docker-ce-stable)

Requires: container-selinux >= 2:2.74

Error: Package: 3:docker-ce-19.03.2-3.el7.x86_64 (docker-ce-stable)

Requires: container-selinux >= 2:2.74

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest

Then run the installation again. Docker must be one of the versions validated for Kubernetes:

The list of validated docker versions remains unchanged.

The current list is 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09. (#72823, #72831)

yum install ./container-selinux-2.74-1.el7.noarch.rpm

yum install docker-ce-18.09.9-3.el7 kubelet kubeadm kubectl
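The validated-version list quoted above can be turned into a quick check before going further. The sketch below only encodes the versions named in that release note; the function name `is_validated_docker` is hypothetical.

```shell
#!/bin/sh
# Sketch: check a Docker version string against the validated list quoted
# above (1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09).

is_validated_docker() {
    # $1 = version string such as 18.09.9
    case "$1" in
        1.13.1|1.13.1.*) return 0 ;;
        17.03|17.03.*|17.06|17.06.*|17.09|17.09.*) return 0 ;;
        18.06|18.06.*|18.09|18.09.*) return 0 ;;
        *) return 1 ;;
    esac
}

# 18.09.9 is the version installed above; 19.03.1 is the one from part I.
for v in 18.09.9 19.03.1; do
    if is_validated_docker "$v"; then
        echo "$v: validated"
    else
        echo "$v: not in the validated list"
    fi
done
```

On a host with Docker installed, the version string could be taken from `docker version --format '{{.Server.Version}}'`.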

3. Initializing the master node

- Initialization fails because the images cannot be pulled

This happens because gcr.io is not reachable from mainland China.

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.16.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

- Change the docker cgroup driver to systemd

~]# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

- List the images required by the current k8s version

kubeadm config images list

--------------------------------------

k8s.gcr.io/kube-apiserver:v1.16.0

k8s.gcr.io/kube-controller-manager:v1.16.0

k8s.gcr.io/kube-scheduler:v1.16.0

k8s.gcr.io/kube-proxy:v1.16.0

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.15-0

k8s.gcr.io/coredns:1.6.2

--------------------------------------

- Download the images from gcr.io through the GCR Proxy Cache

Usage help: http://mirror.azure.cn/help/gcr-proxy-cache.html

docker pull gcr.azk8s.cn/google_containers/kube-apiserver:v1.16.0

docker pull gcr.azk8s.cn/google_containers/kube-controller-manager:v1.16.0

docker pull gcr.azk8s.cn/google_containers/kube-scheduler:v1.16.0

docker pull gcr.azk8s.cn/google_containers/kube-proxy:v1.16.0

docker pull gcr.azk8s.cn/google_containers/pause:3.1

docker pull gcr.azk8s.cn/google_containers/etcd:3.3.15-0

docker pull gcr.azk8s.cn/google_containers/coredns:1.6.2

- View the downloaded images

~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.azk8s.cn/google_containers/kube-apiserver v1.16.0 b305571ca60a 13 days ago 217MB

gcr.azk8s.cn/google_containers/kube-controller-manager v1.16.0 06a629a7e51c 13 days ago 163MB

gcr.azk8s.cn/google_containers/kube-proxy v1.16.0 c21b0c7400f9 13 days ago 86.1MB

gcr.azk8s.cn/google_containers/kube-scheduler v1.16.0 301ddc62b80b 13 days ago 87.3MB

gcr.azk8s.cn/google_containers/etcd 3.3.15-0 b2756210eeab 4 weeks ago 247MB

gcr.azk8s.cn/google_containers/coredns 1.6.2 bf261d157914 6 weeks ago 44.1MB

gcr.azk8s.cn/google_containers/pause 3.1 da86e6ba6ca1 21 months ago 742kB

- Retag the images

docker tag gcr.azk8s.cn/google_containers/kube-apiserver:v1.16.0 k8s.gcr.io/kube-apiserver:v1.16.0

docker tag gcr.azk8s.cn/google_containers/kube-controller-manager:v1.16.0 k8s.gcr.io/kube-controller-manager:v1.16.0

docker tag gcr.azk8s.cn/google_containers/kube-scheduler:v1.16.0 k8s.gcr.io/kube-scheduler:v1.16.0

docker tag gcr.azk8s.cn/google_containers/kube-proxy:v1.16.0 k8s.gcr.io/kube-proxy:v1.16.0

docker tag gcr.azk8s.cn/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag gcr.azk8s.cn/google_containers/etcd:3.3.15-0 k8s.gcr.io/etcd:3.3.15-0

docker tag gcr.azk8s.cn/google_containers/coredns:1.6.2 k8s.gcr.io/coredns:1.6.2
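The pull and tag commands above follow one pattern: swap the `k8s.gcr.io` prefix for the mirror prefix, pull, then tag back. A minimal sketch that derives both commands from the image list is shown below. It only prints the commands (a dry run); the function name `retag_commands` is hypothetical.

```shell
#!/bin/sh
# Sketch: derive the pull/tag command pairs from the image names that
# kubeadm expects. Nothing is executed; the commands are only printed.
MIRROR="gcr.azk8s.cn/google_containers"   # mirror used above
TARGET="k8s.gcr.io"                       # registry name kubeadm looks for

retag_commands() {
    # Reads image names such as k8s.gcr.io/pause:3.1 from stdin.
    while read -r image; do
        [ -n "$image" ] || continue
        name=${image#"$TARGET"/}          # strip the registry prefix
        printf 'docker pull %s/%s\n' "$MIRROR" "$name"
        printf 'docker tag %s/%s %s/%s\n' "$MIRROR" "$name" "$TARGET" "$name"
    done
}

# Dry run with two of the images listed above.
retag_commands <<'EOF'
k8s.gcr.io/kube-apiserver:v1.16.0
k8s.gcr.io/pause:3.1
EOF
```

On the master node, the full list could be piped in with `kubeadm config images list | retag_commands`, and the reviewed output passed on to `sh`.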

- Images after retagging

~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.azk8s.cn/google_containers/kube-apiserver v1.16.0 b305571ca60a 13 days ago 217MB

k8s.gcr.io/kube-apiserver v1.16.0 b305571ca60a 13 days ago 217MB

k8s.gcr.io/kube-controller-manager v1.16.0 06a629a7e51c 13 days ago 163MB

gcr.azk8s.cn/google_containers/kube-controller-manager v1.16.0 06a629a7e51c 13 days ago 163MB

gcr.azk8s.cn/google_containers/kube-proxy v1.16.0 c21b0c7400f9 13 days ago 86.1MB

k8s.gcr.io/kube-proxy v1.16.0 c21b0c7400f9 13 days ago 86.1MB

gcr.azk8s.cn/google_containers/kube-scheduler v1.16.0 301ddc62b80b 13 days ago 87.3MB

k8s.gcr.io/kube-scheduler v1.16.0 301ddc62b80b 13 days ago 87.3MB

k8s.gcr.io/etcd 3.3.15-0 b2756210eeab 4 weeks ago 247MB

gcr.azk8s.cn/google_containers/etcd 3.3.15-0 b2756210eeab 4 weeks ago 247MB

gcr.azk8s.cn/google_containers/coredns 1.6.2 bf261d157914 6 weeks ago 44.1MB

k8s.gcr.io/coredns 1.6.2 bf261d157914 6 weeks ago 44.1MB

gcr.azk8s.cn/google_containers/pause 3.1 da86e6ba6ca1 21 months ago 742kB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 21 months ago 742kB

- Run the initialization again

This time it does not hang while pulling images, but another error appears.

~]# kubeadm init --kubernetes-version=v1.16.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.16.0

[preflight] Running pre-flight checks

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master1.ilinux.io kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.49.150]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master1.ilinux.io localhost] and IPs [192.168.49.150 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master1.ilinux.io localhost] and IPs [192.168.49.150 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

- Work around swap being enabled

Error log

failed to run Kubelet: running with swap on is not supported, please disable swap!

Fix

vim /etc/sysconfig/kubelet

--------------------------------------

KUBELET_EXTRA_ARGS=--fail-swap-on=false

--------------------------------------

- Reset, then run the initialization again

~]# kubeadm reset

~]# kubeadm init --kubernetes-version=v1.16.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

- Record how other nodes join the cluster

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.49.150:6443 --token 638bqc.eb2yfqhu88afi87s \

--discovery-token-ca-cert-hash sha256:e2098b9b9ab02c1dc1ae715945824f8bb24fc78e1579bea72289524209222787

- Set up kubectl

Configure kubectl access to the cluster for a regular user.

mkdir ~/.kube

cp /etc/kubernetes/admin.conf ~/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

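- Add the flannel network add-on

For Kubernetes v1.7+, the following command is all that is needed. Note: the image download may fail, in which case the only option is to retry another day.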

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

- Check the status of the running pods

~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5644d7b6d9-4dj8j 1/1 Running 0 4d18h

coredns-5644d7b6d9-7g7x8 1/1 Running 0 4d18h

etcd-master1.ilinux.io 1/1 Running 1 4d18h

kube-apiserver-master1.ilinux.io 1/1 Running 1 4d18h

kube-controller-manager-master1.ilinux.io 1/1 Running 1 4d18h

kube-flannel-ds-amd64-zrjmq 1/1 Running 0 4d17h

kube-proxy-cjfxx 1/1 Running 1 4d18h

kube-scheduler-master1.ilinux.io 1/1 Running 1 4d18h

~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.ilinux.io Ready master 4d18h v1.16.0

4. Adding other nodes to the cluster

- Install the required packages

yum install docker-ce-18.09.9-3.el7 kubelet kubeadm -y

- Ignore the error raised when swap is enabled

vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

- Enable docker and kubelet at boot

systemctl enable docker kubelet

systemctl start docker

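- Join the cluster

Because the flannel image could not be downloaded when the cluster was initialized, this command was only run a few days later, and it hung. This is because the bootstrap token generated at that time had expired.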

~]# kubeadm join 192.168.49.150:6443 --token 638bqc.eb2yfqhu88afi87s --discovery-token-ca-cert-hash sha256:e2098b9b9ab02c1dc1ae715945824f8bb24fc78e1579bea72289524209222787

[preflight] Running pre-flight checks

List the cluster's bootstrap tokens

~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

638bqc.eb2yfqhu88afi87s <invalid> 2019-10-03T14:01:44+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

Generate a new token

~]# kubeadm token create

prtofz.n4al29ifp16p7vkq

Get the SHA-256 hash of the CA certificate's public key

~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

e2098b9b9ab02c1dc1ae715945824f8bb24fc78e1579bea72289524209222787
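With a fresh token and the CA hash in hand, the join command is just the three values put together. The sketch below assembles it as a string so it can be checked before running it on a node; the function name `join_command` is hypothetical. As far as I know, `kubeadm token create --print-join-command` prints an equivalent line directly on the master.

```shell
#!/bin/sh
# Sketch: assemble the kubeadm join command from its three parts.
# The command is only printed, not executed.

join_command() {
    # $1 = API server endpoint (host:port)
    # $2 = bootstrap token
    # $3 = hex SHA-256 hash of the CA public key (without the sha256: prefix)
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$1" "$2" "$3"
}

# Values taken from the output above.
join_command 192.168.49.150:6443 prtofz.n4al29ifp16p7vkq \
    e2098b9b9ab02c1dc1ae715945824f8bb24fc78e1579bea72289524209222787
```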

Try to join again, this time with verbose logging

~]# kubeadm join 192.168.49.150:6443 --token prtofz.n4al29ifp16p7vkq --discovery-token-ca-cert-hash sha256:e2098b9b9ab02c1dc1ae715945824f8bb24fc78e1579bea72289524209222787 --v=6

[preflight] Running pre-flight checks

...

I1002 01:50:19.216923 22665 round_trippers.go:443] GET https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info in 4 milliseconds

I1002 01:50:19.217295 22665 token.go:146] [discovery] Failed to request cluster info, will try again: [Get https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info: x509: certificate has expired or is not yet valid]

I1002 01:50:24.237093 22665 round_trippers.go:443] GET https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info in 3 milliseconds

I1002 01:50:24.237152 22665 token.go:146] [discovery] Failed to request cluster info, will try again: [Get https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info: x509: certificate has expired or is not yet valid]

I1002 01:50:29.241948 22665 round_trippers.go:443] GET https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info in 4 milliseconds

I1002 01:50:29.242001 22665 token.go:146] [discovery] Failed to request cluster info, will try again: [Get https://192.168.49.150:6443/api/v1/namespaces/kube-public/configmaps/cluster-info: x509: certificate has expired or is not yet valid]

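It still failed. Only after looking at the timestamps did it become clear that the clocks were not synchronized: the machines had been powered off for several days and their time was wrong, which explains the certificate error. After synchronizing the time, the join succeeded. In the meantime the cluster had also been reset, which did not help; the real cause turned out to be the clock.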
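The x509 message in the log is what a wrong clock looks like from the joining node: certificate validity is checked against local time, so a node whose clock is off sees a valid certificate as expired or not yet valid. A minimal sketch of a pre-join sanity check follows. The function name `clock_skew_ok`, the 5-second tolerance, and the sample timestamps are all assumptions for illustration.

```shell
#!/bin/sh
# Sketch: compare the local clock against a reference clock before joining.

clock_skew_ok() {
    # $1 = local time, $2 = reference time (both epoch seconds)
    # $3 = maximum allowed difference in seconds
    diff=$(( $1 - $2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( -diff ))     # absolute value
    fi
    [ "$diff" -le "$3" ]
}

# Hypothetical values: a node clock that is three days behind the master.
node_time=1569952219
master_time=$(( node_time + 3 * 86400 ))

if clock_skew_ok "$node_time" "$master_time" 5; then
    echo "clocks agree, safe to join"
else
    echo "clock skew too large, synchronize time first"
fi
```

In practice the local value would come from `date +%s`, and the reference value from the master, for example by running the same command there over ssh.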

~]# kubeadm join 192.168.49.150:6443 --token pmomhv.x07bruxg871hmjuh --discovery-token-ca-cert-hash sha256:60570ef6ca4e8ba12f9e208cb1137ae61964e99d25d21930c05edb77353f3766

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.16" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Verify that the nodes are ready

~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.ilinux.io Ready master 24m v1.16.0

node1.ilinux.io Ready <none> 11m v1.16.0

node2.ilinux.io NotReady <none> 11m v1.16.0

node3.ilinux.io Ready <none> 11m v1.16.0

III. Managing applications with the Kubernetes cluster

- Resources in a k8s cluster are created and deleted by means of YAML files

An example follows.

vim test.yaml

-----------------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-hostpath
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html/
  volumes:
  - name: html
    hostPath:
      path: /data/pod/volume1
      type: DirectoryOrCreate

-----------------------------------------------------------------------------------------

- Create the resource

kubectl create -f test.yaml

- View status information

]# kubectl get pods

]# kubectl describe pods pod-name

]# kubectl get pod -o wide

-----------------------------------------------------------------------------------------

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-httpget-pod 1/1 Running 0 49m 10.244.1.2 node1.ilinux.io <none> <none>

pod-demo 1/2 CrashLoopBackOff 8 16m 10.244.2.3 node2.ilinux.io <none> <none>

pod-vol-hostpath 1/1 Running 0 6m5s 10.244.3.2 node3.ilinux.io <none> <none>

-----------------------------------------------------------------------------------------

Create a test page on node 3, then access it

]# curl 10.244.3.2

<h1>This is a test page.</h1>

- Delete the resource

kubectl delete -f test.yaml

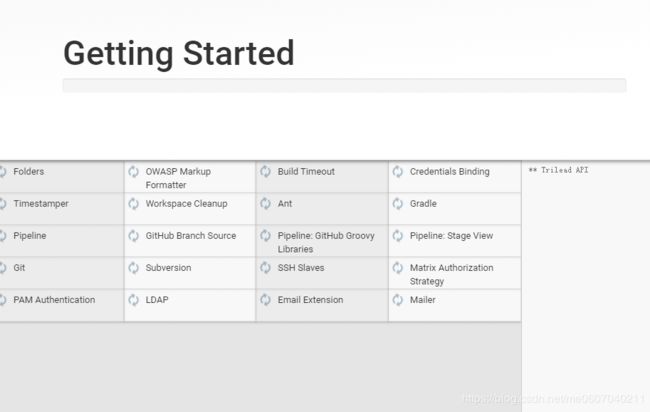

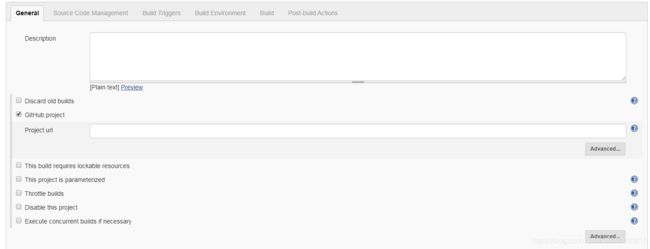

IV. A continuous integration example for a production environment based on Jenkins + GitLab + Maven + shell

- Set up the Java environment

yum install java-1.8.0-openjdk-devel

yum install tomcat

yum install tomcat-admin-webapps tomcat-webapps tomcat-docs-webapp

systemctl start tomcat

- Download and install Jenkins (https://pkg.jenkins.io/redhat-stable/)

yum install ./jenkins-2.190.1-1.1.noarch.rpm

Copy the jenkins.war package into Tomcat's /usr/share/tomcat/webapps directory

~]# rpm -ql jenkins

/etc/init.d/jenkins

/etc/logrotate.d/jenkins

/etc/sysconfig/jenkins

/usr/lib/jenkins

/usr/lib/jenkins/jenkins.war

/usr/sbin/rcjenkins

/var/cache/jenkins

/var/lib/jenkins

/var/log/jenkins

Tomcat unpacks the archive automatically

webapps]# ll

total 76420

drwxr-xr-x 14 root root 4096 Oct 15 14:20 docs

drwxr-xr-x 8 tomcat tomcat 127 Oct 15 14:20 examples

drwxr-xr-x 5 root tomcat 87 Oct 15 14:20 host-manager

drwxr-xr-x 11 tomcat tomcat 4096 Oct 15 14:23 jenkins

-rw-r--r-- 1 root root 78245883 Oct 15 14:23 jenkins.war

drwxr-xr-x 5 root tomcat 103 Oct 15 14:20 manager

drwxr-xr-x 3 tomcat tomcat 306 Oct 15 14:20 ROOT

drwxr-xr-x 5 tomcat tomcat 86 Oct 15 14:20 sample

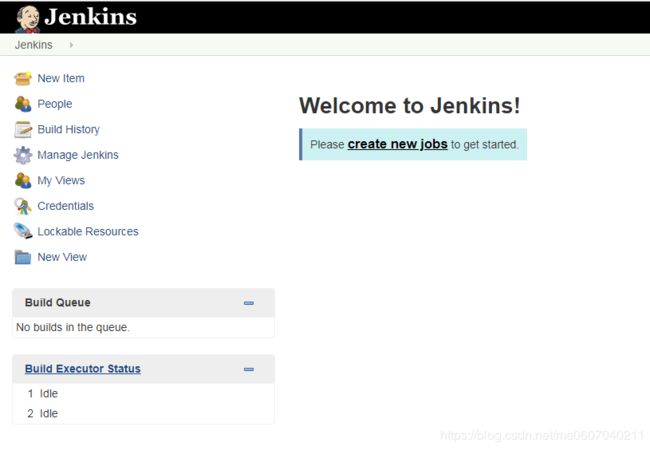

- Log in to Jenkins

firefox http://192.168.30.103:8080/jenkins

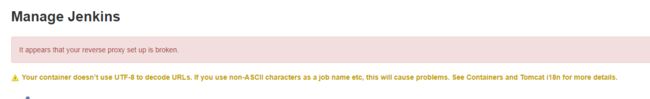

vim /etc/tomcat/server.xml

-------------------------------------

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" URIEncoding="UTF-8" />

-------------------------------------

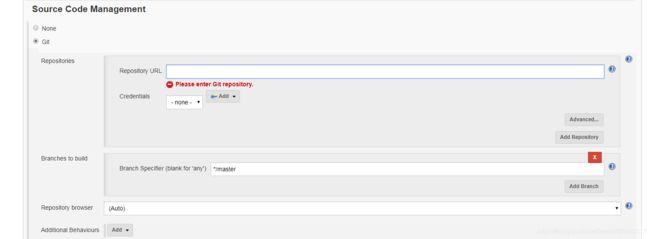

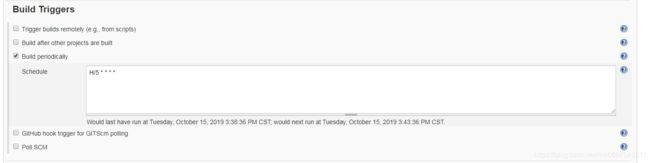

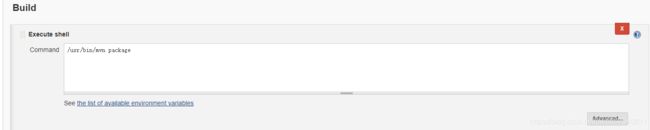

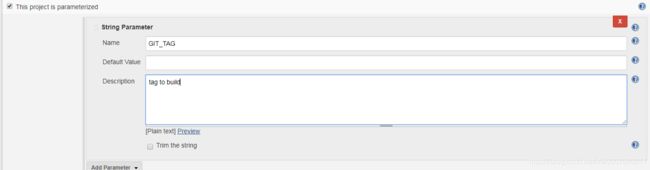

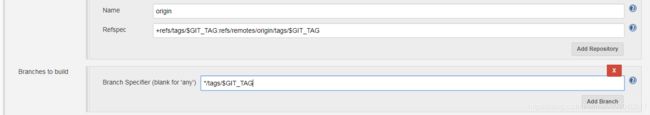

+refs/tags/$GIT_TAG:refs/remotes/origin/tags/$GIT_TAG

With this in place, a specific version can be built from its tag, and a rollback to a chosen version is possible as well.
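The refspec shown above maps a single tag on the remote to a ref under `refs/remotes/origin/tags/`. A small sketch of how it expands for a concrete tag is given below. The function name `tag_refspec` and the tag `v1.0` are hypothetical, and `GIT_TAG` is assumed to be a build parameter defined on the Jenkins job.

```shell
#!/bin/sh
# Sketch: expand the tag refspec for a given tag name.

tag_refspec() {
    # $1 = tag name; the leading "+" forces the update even if the
    # local ref already exists and points elsewhere.
    printf '+refs/tags/%s:refs/remotes/origin/tags/%s\n' "$1" "$1"
}

# Hypothetical tag; in a Jenkins job this would be "$GIT_TAG".
tag_refspec v1.0
```

Outside Jenkins, the same refspec can be tried by hand with `git fetch origin "$(tag_refspec v1.0)"` in a clone of the repository.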
