Deep Learning Model Compression and Acceleration: An Overview

1. Introduction

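Because of their computational complexity and parameter redundancy, deep learning models are difficult to deploy in some scenarios and on some devices. Model compression, optimization and acceleration, and heterogeneous computing can help overcome these bottlenecks.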

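- Model compression algorithms reduce parameter redundancy, and with it storage footprint, communication bandwidth, and computational complexity, which eases the deployment of deep learning applications. They fall into the following categories (pruning and quantization are covered in more detail later):

  - Linear or non-linear quantization: 1/2-bit, int8, fp16, etc.;

  - Structured or unstructured pruning: Deep Compression, Channel Pruning, Network Slimming, etc.;

  - Others: low-rank decomposition of weight matrices, knowledge distillation, and compact network design (SqueezeNet, MobileNet, ShuffleNet), etc.;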
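Two of the "other" methods above save parameters by restructuring a layer rather than by pruning or quantizing it. The arithmetic can be sketched as follows, assuming a dense layer factorized as W ≈ U·V with rank r, and a MobileNet-style depthwise separable convolution in place of a standard one. The function names and layer sizes are hypothetical, and biases are ignored:

```python
# Parameter counts (bias ignored) for low-rank factorization and depthwise separable conv.
# Layer sizes in the demo are hypothetical, chosen only for illustration.


def dense_params(m: int, n: int) -> int:
    """Weights of a fully connected layer with an m x n weight matrix W."""
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    """Weights after replacing W (m x n) with U (m x r) @ V (r x n)."""
    return r * (m + n)


def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """k x k depthwise conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    print(dense_params(4096, 4096), low_rank_params(4096, 4096, 256))  # 16777216 2097152
    print(standard_conv_params(3, 256, 256), depthwise_separable_params(3, 256, 256))  # 589824 67840
```

With rank 256 the 4096 x 4096 layer shrinks 8x, and the 3 x 3, 256-channel convolution shrinks about 8.7x. Whether accuracy survives depends on the model and the chosen rank, and usually requires fine-tuning.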

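- Optimization and acceleration techniques improve the computational efficiency of a network, including:

  - Fast op-level algorithms: FFT-based Conv2d (7x7, 9x9), Winograd Conv2d (3x3, 5x5), etc.;

  - Fast layer-level algorithms: Sparse-block net (SBNet) [1], etc.;

  - Optimization tools and libraries: TensorRT (NVIDIA), Tensor Comprehensions (Facebook), Distiller (Intel), etc.;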
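To make the Winograd idea concrete, here is a minimal 1D sketch of the minimal-filtering algorithm F(2,3): two outputs of a 3-tap filter computed from a 4-element input tile with 4 multiplications instead of 6. Nesting the same transform in both dimensions gives F(2x2, 3x3), which uses 16 multiplications per 2x2 output tile instead of 36. The function names are hypothetical, and real libraries work on 2D tiles with batched transforms:

```python
def direct_conv1d(d, g):
    """Reference 'valid' correlation as used in CNN layers: y[i] = sum_k d[i+k] * g[k]."""
    n_out = len(d) - len(g) + 1
    return [sum(d[i + k] * g[k] for k in range(len(g))) for i in range(n_out)]


def winograd_f23(d, g):
    """F(2,3): 2 outputs of a 3-tap filter from a 4-element tile using 4 multiplications."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter transform: depends only on g, so it can be precomputed once per filter.
    u0, u1, u2, u3 = g0, (g0 + g1 + g2) / 2, (g0 - g1 + g2) / 2, g2
    # Input transform followed by the 4 element-wise multiplications.
    m1 = (d0 - d2) * u0
    m2 = (d1 + d2) * u1
    m3 = (d2 - d1) * u2
    m4 = (d1 - d3) * u3
    # Output transform: only additions and subtractions.
    return [m1 + m2 + m3, m2 - m3 - m4]


if __name__ == "__main__":
    tile, filt = [1, 2, 3, 4], [0.5, 1, 2]
    print(direct_conv1d(tile, filt), winograd_f23(tile, filt))  # [8.5, 12] [8.5, 12.0]
```

The savings come from trading multiplications for cheap additions; the transforms also amplify rounding error for larger tiles, which is one reason Winograd is typically used for small kernels such as 3x3.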

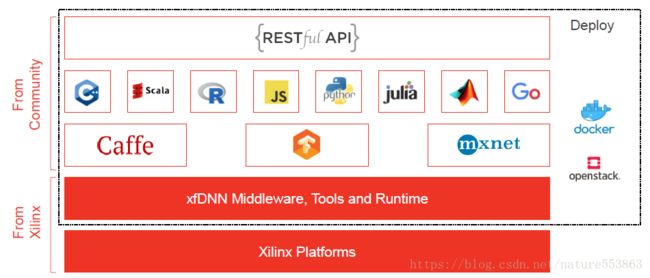

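- Heterogeneous computing relies on coprocessor engines (typically PCIe accelerator cards, ASIC accelerator chips, or accelerator IP cores), such as GPUs, FPGAs, or DSA (Domain Specific Architecture) ASICs, to deploy deep learning models in data centers or at the edge. Custom heterogeneous hardware usually delivers higher performance and energy efficiency; for a list of popular AI chips and accelerators, see [2]. The speedup from better hardware is immediate: for example, moving from a 1080ti to a 2080ti speeds up the complex PyramidBox face detector by about 36%. For data-center deployment, general-purpose solutions are usually preferred because they come with a mature software ecosystem, such as NVIDIA CUDA or Xilinx xDNN.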

2. TensorRT Basics

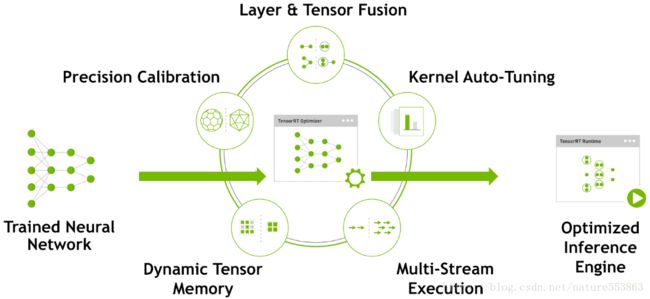

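TensorRT is NVIDIA's tool for optimizing and accelerating deep learning inference. Its working principle is illustrated in the figure; see [3] [4] for details.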

TensorRT can optimize and rebuild models trained with different deep learning frameworks:

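- Models trained with Caffe or TensorFlow can be optimized and rebuilt by TensorRT directly, provided that all of their operations are supported by TensorRT;

- Models trained with MXNet, PyTorch, or other frameworks can be optimized indirectly, provided that all of their operations are supported: the network structure is rebuilt with the TensorRT API and then optimized;

- Models from other frameworks can be converted to the ONNX intermediate format and, if all of their operations are supported, optimized through the ONNX-TensorRT parser [27];

- If the trained model contains operations that TensorRT does not support:

  - TensorFlow models can be converted with tf.contrib.tensorrt, which keeps unsupported operations as TensorFlow nodes; MXNet supports a similar graph conversion;

  - Unsupported operations can be implemented as custom layers through the Plugin API and added to the TensorRT graph, as in the custom extensions of FasterTransformer [26];

  - The network can be split into two parts: one containing only TensorRT-supported operations, which is converted into a TensorRT graph, and another implemented in a different framework such as MXNet or PyTorch;

- TensorRT int8 quantization requires a calibration dataset, typically with at least 1,000 samples that reflect the real application scenario, and a GPU with compute capability (sm) >= 6.1;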
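The role of the calibration dataset can be sketched with the simplest scheme, max calibration: record the largest |activation| seen over the calibration set, and map [-amax, amax] linearly onto [-127, 127]. This is a swapped-in, simplified technique for illustration only; TensorRT's default entropy calibrator instead picks a clipping threshold by minimizing the KL divergence between the original and quantized distributions [21]. All function names and data here are hypothetical:

```python
from typing import Iterable, List


def calibrate_amax(batches: Iterable[List[float]]) -> float:
    """Max calibration: running maximum of |x| over all calibration batches (per tensor)."""
    amax = 0.0
    for batch in batches:
        amax = max(amax, max(abs(x) for x in batch))
    return amax


def int8_scale(amax: float) -> float:
    """Symmetric scale so that [-amax, amax] maps onto [-127, 127]."""
    return amax / 127.0


def quantize_int8(xs: List[float], scale: float) -> List[int]:
    """q = clamp(round(x / scale), -127, 127); values beyond amax saturate.
    Note: Python's round() rounds halves to even."""
    return [max(-127, min(127, round(x / scale))) for x in xs]


def dequantize(qs: List[int], scale: float) -> List[float]:
    """Map int8 codes back to real values."""
    return [q * scale for q in qs]


if __name__ == "__main__":
    # Hypothetical activations collected from two calibration batches.
    calib = [[0.1, -0.5, 2.0], [1.5, -2.54, 0.3]]
    amax = calibrate_amax(calib)  # 2.54
    scale = int8_scale(amax)
    q = quantize_int8([1.0, -3.0], scale)  # [50, -127]: -3.0 is outside the range and saturates
    print(amax, q, dequantize(q, scale))
```

Max calibration is sensitive to outliers: a single large activation inflates the scale and wastes resolution for typical values, which is why a representative calibration set and smarter threshold selection matter.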

On a 1080ti with TensorRT 4.0.1, the acceleration results for ResNet101-v2 are as follows:

| Network | Precision | Framework (GPU: 1080ti, Pascal) | Avg. Time (Batch=8, ms) | Top-1 Val. Acc. (ImageNet-1k) |
| --- | --- | --- | --- | --- |
| ResNet101 | fp32 | TensorFlow | 36.7 | 0.7612 |
| ResNet101 | fp32 | MXNet | 25.8 | 0.7612 |
| ResNet101 | fp32 | TRT 4.0.1 | 19.3 | 0.7612 |
| ResNet101 | int8 | TRT 4.0.1 | 9 | 0.7574 |

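On the 1080ti and 2080ti with TensorRT 5.1.5, the results for ResNet101-v1d are as follows. Because the 2080ti has Tensor Cores, its float16 speedup is much more pronounced; on the 1080ti, float16 is actually slower than float32: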

| Network | GPU | Precision | Batch=8 (ms) | Batch=4 (ms) | Batch=2 (ms) | Batch=1 (ms) |
| --- | --- | --- | --- | --- | --- | --- |
| ResNet101-v1d | 1080ti | float32 | 19.4 | 12.4 | 8.4 | 7.4 |
| ResNet101-v1d | 1080ti | float16 | 28.2 | 16.9 | 10.9 | 8.1 |
| ResNet101-v1d | 1080ti | int8 | 8.1 | 6.7 | 4.6 | 4.0 |
| ResNet101-v1d | 2080ti | float32 | 16.6 | 10.8 | 8.0 | 7.2 |
| ResNet101-v1d | 2080ti | float16 | 14.6 | 9.6 | 5.5 | 4.3 |
| ResNet101-v1d | 2080ti | int8 | 7.2 | 3.8 | 3.0 | 2.6 |
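The Tensor Core remark can be checked directly against the table. A small sketch that turns the latencies into speedups relative to float32 on the same GPU, plus per-image throughput; the latency values are copied from the table, while the function names are hypothetical:

```python
# Latencies in ms, copied from the ResNet101-v1d / TensorRT 5.1.5 table.
BATCHES = (8, 4, 2, 1)
LATENCY_MS = {
    ("1080ti", "float32"): (19.4, 12.4, 8.4, 7.4),
    ("1080ti", "float16"): (28.2, 16.9, 10.9, 8.1),
    ("1080ti", "int8"): (8.1, 6.7, 4.6, 4.0),
    ("2080ti", "float32"): (16.6, 10.8, 8.0, 7.2),
    ("2080ti", "float16"): (14.6, 9.6, 5.5, 4.3),
    ("2080ti", "int8"): (7.2, 3.8, 3.0, 2.6),
}


def speedup_vs_fp32(gpu: str, precision: str) -> dict:
    """fp32 latency / latency, per batch size (> 1 means faster than float32)."""
    base = LATENCY_MS[(gpu, "float32")]
    other = LATENCY_MS[(gpu, precision)]
    return {b: f / o for b, f, o in zip(BATCHES, base, other)}


def throughput(gpu: str, precision: str) -> dict:
    """Images per second = batch size / latency in seconds."""
    return {b: b / (t / 1000.0) for b, t in zip(BATCHES, LATENCY_MS[(gpu, precision)])}


if __name__ == "__main__":
    for gpu in ("1080ti", "2080ti"):
        for prec in ("float16", "int8"):
            ratios = speedup_vs_fp32(gpu, prec)
            print(gpu, prec, {b: f"{r:.2f}x" for b, r in ratios.items()})
```

On the 1080ti every float16 ratio is below 1 (from about 0.69x at batch 8 to 0.91x at batch 1), so float16 is slower than float32 on that Pascal card, whereas on the 2080ti float16 ranges from about 1.14x at batch 8 to about 1.67x at batch 1. Latency grows with batch size, but throughput grows faster.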

3. Network Pruning

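Because deep learning models tend to be sparse or over-parameterized (prone to overfitting), they can be pruned into compact networks. Pruning methods are either unstructured or structured: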

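- Unstructured pruning: connection-level, fine-grained pruning. It usually preserves accuracy better, but depends on support from specific libraries or hardware platforms; examples include Deep Compression [5] and Sparse-Winograd [6];

- Structured pruning: filter-level or layer-level, coarse-grained pruning. Accuracy is relatively lower, but the pruned models are more practical: they need no special libraries or hardware and run directly on mature deep learning frameworks. Examples include:

  - Local, layer-by-layer methods that minimize the reconstruction error of the output feature maps: Channel Pruning [7], ThiNet [8], and Discrimination-aware Channel Pruning [9];

  - Network Slimming [10], a global method that imposes L1 regularization on the gamma (scaling) coefficients of BN layers during training;

  - Neuron Pruning [11], a global method that ranks filters by importance using a Taylor-expansion criterion;

  - Soft Filter Pruning [12], a global method that keeps updating the parameters of pruned filters during training;

  - GAL [24], based on the GAN idea, which can prune heterogeneous structures including channels, branches, and blocks;

  - A pruning strategy that identifies redundant convolution filters via the geometric median [28];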
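The difference between the two granularities can be sketched in a few lines. The first function is plain magnitude pruning of individual weights, in the spirit of the pruning step of Deep Compression; the second follows the Network Slimming selection rule, a single global threshold on |gamma| across all BN layers after training with the extra loss term lam * sum(|gamma|). This is a simplified sketch: layer names, the pruning ratios, and the "keep at least one channel" safeguard are hypothetical choices, and ties at the threshold are kept:

```python
from typing import Dict, List


def magnitude_mask(weights: List[float], sparsity: float) -> List[int]:
    """Unstructured pruning: mask out the `sparsity` fraction of smallest-|w| weights (1 = keep)."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0 if i in pruned else 1 for i in range(len(weights))]


def slimming_l1_penalty(gammas: Dict[str, List[float]], lam: float) -> float:
    """Extra training-loss term lam * sum(|gamma|) that pushes BN scaling factors toward 0."""
    return lam * sum(abs(g) for gs in gammas.values() for g in gs)


def slimming_channels(gammas: Dict[str, List[float]], ratio: float) -> Dict[str, List[int]]:
    """Structured pruning: one global |gamma| threshold across all BN layers.
    Returns the indices of the channels kept in each layer."""
    all_g = sorted(abs(g) for gs in gammas.values() for g in gs)
    n_prune = int(len(all_g) * ratio)
    threshold = all_g[n_prune] if n_prune < len(all_g) else float("inf")
    kept = {}
    for name, gs in gammas.items():
        idx = [i for i, g in enumerate(gs) if abs(g) >= threshold]
        if not idx:  # hypothetical safeguard: never remove a layer entirely
            idx = [max(range(len(gs)), key=lambda i: abs(gs[i]))]
        kept[name] = idx
    return kept


if __name__ == "__main__":
    print(magnitude_mask([0.1, -0.5, 0.05, 2.0], 0.5))  # [0, 1, 0, 1]
    bn = {"bn1": [0.9, 0.01, 0.5], "bn2": [0.02, 0.7, 0.03]}
    print(slimming_channels(bn, 0.5))  # {'bn1': [0, 2], 'bn2': [1]}
```

The unstructured mask leaves the tensor shape unchanged (the zeros only pay off with sparse kernels), whereas the channel list can be used to build physically smaller layers.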

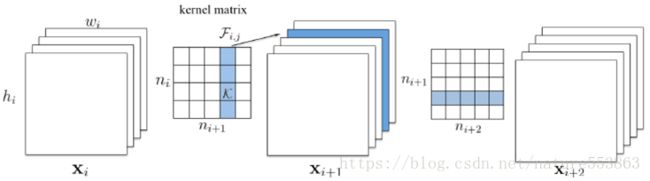

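Taking Channel Pruning as an example, the figure illustrates the regular structure produced by structured pruning, which remains compatible with existing, mature deep learning frameworks.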
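Why structured pruning stays framework-compatible can be seen from what it does to the tensors. A minimal sketch, assuming two consecutive convolutions stored as nested lists in [out][in][kh][kw] layout: removing an output channel of the first convolution also removes the matching input slice of the second, and both results are ordinary dense tensors. The function names and toy shapes are hypothetical, and a BN layer between the two convolutions (not modeled) would be sliced with the same indices:

```python
from typing import List


def prune_conv_pair(w1: list, b1: List[float], w2: list, keep: List[int]):
    """Remove conv1 output channels not in `keep` and the matching conv2 input channels.

    Layout: w1[out1][in1][kh][kw], b1[out1], w2[out2][out1][kh][kw].
    """
    new_w1 = [w1[c] for c in keep]  # drop whole filters of conv1
    new_b1 = [b1[c] for c in keep]
    new_w2 = [[filt[c] for c in keep] for filt in w2]  # drop matching input slices of conv2
    return new_w1, new_b1, new_w2


def count(t) -> int:
    """Number of scalars in a nested-list tensor."""
    return sum(count(x) for x in t) if isinstance(t, list) else 1


if __name__ == "__main__":
    # Toy layers with 1 x 1 kernels: conv1 has 4 output channels, conv2 has 3.
    w1 = [[[[float(o)]]] for o in range(4)]                         # shape [4][1][1][1]
    b1 = [0.0, 0.1, 0.2, 0.3]
    w2 = [[[[10.0 * o + i]] for i in range(4)] for o in range(3)]  # shape [3][4][1][1]
    nw1, nb1, nw2 = prune_conv_pair(w1, b1, w2, keep=[0, 2])
    print(count(w1) + count(w2), "->", count(nw1) + count(nw2))  # 16 -> 8
```

The `keep` list could come from any of the criteria listed above (reconstruction error, BN gamma, Taylor score); the slicing step itself is the same.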

4. Model Quantization

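Model quantization maps weights or activations onto a small set of discrete, reduced-precision values, and usually depends on support from specific libraries or hardware platforms: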

- Binary networks: XNOR-Net [13], ABC-Net with Multiple Binary Bases [14], Bin-net with High-Order Residual Quantization [15], Bi-Real Net [16];

- Ternary networks: Ternary Weight Networks [17], Trained Ternary Quantization [18];

- W1-A8 or W2-A8 quantization (1- or 2-bit weights, 8-bit activations): Learning Symmetric Quantization [19];

- INT8 quantization: TensorFlow Lite [20], TensorRT [21], Quantization Interval Learning [25];

- Others (non-linear): Intel INQ [22], log-net, CNNPack [23], etc.;
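The binary and ternary schemes above approximate a weight tensor by a scaling factor times a low-bit code. A minimal sketch of two well-known rules: XNOR-Net's W ≈ alpha * sign(W) with alpha = mean(|W|), and Ternary Weight Networks' threshold delta = 0.7 * mean(|W|) with alpha equal to the mean |W| of the weights above the threshold. The sketch treats the whole list as one filter (XNOR-Net computes alpha per output filter), maps sign(0) to +1 by assumption, and uses hypothetical function names:

```python
from typing import List, Tuple


def binarize(w: List[float]) -> Tuple[float, List[int]]:
    """XNOR-Net style: W ~= alpha * sign(W), alpha = mean(|W|); sign(0) taken as +1."""
    alpha = sum(abs(x) for x in w) / len(w)
    return alpha, [1 if x >= 0 else -1 for x in w]


def ternarize(w: List[float]) -> Tuple[float, List[int]]:
    """TWN style: delta = 0.7 * mean(|W|); codes in {-1, 0, +1};
    alpha = mean |W_i| over the weights with |W_i| > delta."""
    delta = 0.7 * sum(abs(x) for x in w) / len(w)
    codes = [1 if x > delta else (-1 if x < -delta else 0) for x in w]
    big = [abs(x) for x in w if abs(x) > delta]
    alpha = sum(big) / len(big) if big else 0.0
    return alpha, codes


def reconstruct(alpha: float, codes: List[int]) -> List[float]:
    """Full-precision approximation used in the forward pass."""
    return [alpha * c for c in codes]


if __name__ == "__main__":
    w = [0.8, -0.4, 0.05, -1.2]
    print(binarize(w))   # alpha ~0.6125, codes [1, -1, 1, -1]
    print(ternarize(w))  # alpha ~1.0, codes [1, 0, 0, -1]
```

Because the codes are 1 or 2 bits and alpha is one float per filter, storage drops by roughly 32x or 16x versus fp32; the speedup, however, needs kernels that exploit the codes (e.g. XNOR and popcount for binary networks).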

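If inference accuracy drops significantly after compression, it can be recovered by fine-tuning, combined with suitable training tricks such as Label Smoothing, Mix-up, Knowledge Distillation, and Focal Loss. Compression and acceleration strategies can also be combined to reach even higher compression and speedup ratios. For example, combining Network Slimming with TensorRT int8 optimization on a 1080ti (Pascal), ResNet101-v1d compressed by 1.4x (size 170MB -> 121MB, FLOPs 16.14G -> 11.01G) runs in only 7.4ms at batch size 8 after TensorRT int8 quantization.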
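Of the recovery tricks listed, knowledge distillation is the most specific to compression: the compressed (student) model is fine-tuned to match the softened outputs of the original (teacher) model. A minimal sketch of the widely used Hinton-style loss, alpha * T^2 * KL(p_teacher || p_student) + (1 - alpha) * cross-entropy with the hard label, where both distributions are softened with temperature T. The defaults T=4 and alpha=0.9 are hypothetical choices, not values from this article:

```python
import math
from typing import List


def softmax(logits: List[float], T: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def kd_loss(student_logits: List[float], teacher_logits: List[float], label: int,
            T: float = 4.0, alpha: float = 0.9) -> float:
    """alpha * T^2 * KL(p_T || p_S) on softened outputs + (1 - alpha) * CE on the hard label.
    T and alpha defaults are hypothetical; the T^2 factor keeps gradient scales comparable."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    ce = -math.log(softmax(student_logits)[label])
    return alpha * T * T * kl + (1 - alpha) * ce


if __name__ == "__main__":
    teacher = [5.0, 1.0, 0.1]
    print(kd_loss([0.0, 0.0, 0.0], teacher, label=0))  # uniform student: large KL term
    print(kd_loss(teacher, teacher, label=0))           # KL term vanishes
```

When the student already matches the teacher, only the hard-label term remains; in practice the loss is averaged over a batch and minimized with respect to the student's parameters only.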

For a discussion of knowledge distillation in particular, see:

https://blog.csdn.net/nature553863/article/details/80568658

References

[1] https://arxiv.org/abs/1801.02108, Github: https://github.com/uber/sbnet

[2] https://basicmi.github.io/Deep-Learning-Processor-List/

[3] https://devblogs.nvidia.com/tensorrt-3-faster-tensorflow-inference/

[4] https://devblogs.nvidia.com/int8-inference-autonomous-vehicles-tensorrt/

[5] https://arxiv.org/abs/1510.00149

[6] https://arxiv.org/abs/1802.06367, https://ai.intel.com/winograd-2/, Github: https://github.com/xingyul/Sparse-Winograd-CNN

[7] https://arxiv.org/abs/1707.06168, Github: https://github.com/yihui-he/channel-pruning

[8] https://arxiv.org/abs/1707.06342

[9] https://arxiv.org/abs/1810.11809, Github: https://github.com/Tencent/PocketFlow

[10] https://arxiv.org/abs/1708.06519, Github: https://github.com/foolwood/pytorch-slimming

[11] https://arxiv.org/abs/1611.06440, Github: https://github.com/jacobgil/pytorch-pruning

[12] http://xuanyidong.com/publication/ijcai-2018-sfp/

[13] https://arxiv.org/abs/1603.05279, Github: https://github.com/ayush29feb/Sketch-A-XNORNet, https://github.com/jiecaoyu/XNOR-Net-PyTorch

[14] https://arxiv.org/abs/1711.11294, Github: https://github.com/layog/Accurate-Binary-Convolution-Network

[15] https://arxiv.org/abs/1708.08687

[16] https://arxiv.org/abs/1808.00278, Github: https://github.com/liuzechun/Bi-Real-net

[17] https://arxiv.org/abs/1605.04711

[18] https://arxiv.org/abs/1612.01064, Github: https://github.com/czhu95/ternarynet

[19] http://phwl.org/papers/syq_cvpr18.pdf, Github: https://github.com/julianfaraone/SYQ

[20] https://arxiv.org/abs/1712.05877

[21] http://on-demand.gputechconf.com/gtc/2017/presentation/s7310-8-bit-inference-with-tensorrt.pdf

[22] https://arxiv.org/abs/1702.03044

[23] https://papers.nips.cc/paper/6390-cnnpack-packing-convolutional-neural-networks-in-the-frequency-domain

[24] https://blog.csdn.net/nature553863/article/details/97631176

[25] https://blog.csdn.net/nature553863/article/details/96857133

[26] https://github.com/NVIDIA/DeepLearningExamples/tree/master/FasterTransformer

[27] https://github.com/onnx/onnx-tensorrt

[28] https://blog.csdn.net/nature553863/article/details/97760040